Unlocking enterprise agility in the API economy

Across industries, enterprises are increasingly adopting an on-demand approach to compute, storage, and applications. They are favoring digital services that are faster to deploy, easier to scale, and better integrated with partner ecosystems. Yet, one critical pillar has lagged: the network. While software-defined networking has made inroads, many organizations still operate rigid, pre-provisioned networks. As applications become increasingly distributed and dynamic—including hybrid cloud and edge deployments—a programmable, on-demand network infrastructure can enhance and enable this new era.

From CapEx to OpEx: The new connectivity mindset

Another, more practical concern is also driving this shift: the need for IT models that align cost with usage. Uncertainty about inflation, consumer spending, business investment, and global supply chains is among the economic factors weighing on company decision-making. As a result, chief information officers (CIOs) are scrutinizing capital-expenditure-heavy infrastructure more closely and increasingly adopting operating-expense-based subscription models.

Instead of long-term circuit contracts and static provisioning, companies are looking for cloud-ready, on-demand network services that can scale, adapt, and integrate across hybrid environments. This trend is fueling demand for API-first network infrastructure: connectivity that behaves like software, dynamically orchestrated and integrated into enterprise IT ecosystems. Interest has grown so rapidly that the global network API market is projected to surge from $1.53 billion in 2024 to over $72 billion in 2034.

In fact, McKinsey estimates the network API market could unlock between $100 billion and $300 billion in connectivity- and edge-computing-related revenue for telecom operators over the next five to seven years, with an additional $10 billion to $30 billion generated directly from APIs themselves.

“When the cloud came in, first there was a trickle of adoptions. And then there was a deluge,” says Rajarshi Purkayastha, VP of solutions at Tata Communications. “We’re seeing the same trend with programmable networks. What was once a niche industry is now becoming mainstream as CIOs prioritize agility and time-to-value.”

Programmable networks as a catalyst for innovation

Programmable subscription-based networks are not just about efficiency; they are about enabling faster innovation, better user experiences, and global scalability. Organizations prefer API-first systems to avoid vendor lock-in, enable multi-vendor integration, and foster innovation. API-first approaches allow seamless integration across different hardware and software stacks, reducing operational complexity and costs.

With APIs, enterprises can provision bandwidth, configure services, and connect to clouds and edge locations in real time, all through automation layers embedded in their DevOps and application platforms. This makes the network an active enabler of digital transformation rather than a lagging dependency.
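As a rough illustration of what provisioning through an automation layer can look like, the sketch below models a pipeline step that declares the connection it wants and lets an API reconcile it. Everything here is an assumption made for the example: `LinkRequest`, `FakeNetworkApi`, the field names, and the site names are hypothetical, and the in-memory class only stands in for whatever interface a given provider actually exposes.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class LinkRequest:
    """Desired state for one connection (all field names are hypothetical)."""
    name: str
    source: str
    destination: str
    bandwidth_mbps: int


@dataclass
class FakeNetworkApi:
    """In-memory stand-in for a provider's network API, for illustration only."""
    links: dict = field(default_factory=dict)

    def apply(self, request: LinkRequest) -> str:
        """Make the named link match the request and report what happened."""
        current = self.links.get(request.name)
        if current == request:
            return "unchanged"  # re-running the pipeline step is harmless
        self.links[request.name] = request
        return "created" if current is None else "updated"


api = FakeNetworkApi()
request = LinkRequest("erp-to-cloud", "frankfurt-dc", "cloud-eu-west", 500)

first = api.apply(request)   # link does not exist yet
second = api.apply(request)  # same desired state, nothing to do
third = api.apply(LinkRequest("erp-to-cloud", "frankfurt-dc", "cloud-eu-west", 1000))

print(first, second, third)
```

Declaring the desired state, rather than issuing one-off commands, is one common way to make such a step safe to repeat inside a CI/CD pipeline.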

For example, Netflix, one of the earliest adopters of microservices, handles billions of API requests daily through over 500 microservices and gateways, supporting global scalability and rapid innovation. Over a two-year transition period, it redesigned its IT structure around a microservice architecture.

Elsewhere, Coca-Cola integrated its global systems using APIs, enabling faster, lower-cost delivery and improved cross-functional collaboration. And Uber moved to microservices with API gateways, allowing independent scaling and rapid deployment across markets.

In each case, the network had to evolve from being static and hardware-bound to dynamic, programmable, and consumption-based. “API-first infrastructure fits naturally into how today’s IT teams work,” says Purkayastha. “It aligns with continuous integration and continuous delivery/deployment (CI/CD) pipelines and service orchestration tools. That reduces friction and accelerates how fast enterprises can launch new services.”

Powering on-demand connectivity

Tata Communications deployed Network Fabric, its programmable platform that uses APIs to allow enterprise systems to request and adjust network resources dynamically, to help a global software-as-a-service (SaaS) company modernize how it manages network capacity in response to real-time business needs. As the company scaled its digital services worldwide, it needed a more agile, cost-efficient way to align network performance with unpredictable traffic surges and fast-changing user demands. With Tata’s platform, the company’s operations teams were able to automatically scale bandwidth in key regions for peak performance during high-impact events like global software releases, and then scale down just as quickly once demand normalized, avoiding unnecessary costs.
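The scale-up and scale-down behavior described above can be pictured as a simple threshold policy. The sketch below is not how Network Fabric works internally, which the article does not describe; the function name, the utilization thresholds, the doubling and halving steps, and the floor and ceiling values are all assumptions chosen for illustration.

```python
def target_bandwidth(current_mbps: int, utilization: float,
                     floor_mbps: int = 100, ceiling_mbps: int = 10_000,
                     scale_up_at: float = 0.8, scale_down_at: float = 0.3) -> int:
    """Return the bandwidth to request next, given current link utilization.

    utilization is the fraction of current capacity in use (0.0 to 1.0).
    All thresholds and limits are hypothetical defaults.
    """
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0.0 and 1.0")

    if utilization >= scale_up_at:
        proposed = current_mbps * 2   # surge: double capacity
    elif utilization <= scale_down_at:
        proposed = current_mbps // 2  # quiet period: halve capacity to save cost
    else:
        proposed = current_mbps       # within the comfortable band: no change

    # Never drop below the contracted floor or exceed the agreed ceiling.
    return max(floor_mbps, min(ceiling_mbps, proposed))


# A release-day surge followed by a return to normal demand.
during_release = target_bandwidth(1000, 0.9)
after_release = target_bandwidth(during_release, 0.2)
print(during_release, after_release)
```

In practice, an automation workflow would pass the result of a policy like this to the provider's API; the floor and ceiling keep an automated policy from over- or under-provisioning.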

In another scenario, when the SaaS provider needed to run large-scale data operations between its US and Asia hubs, the network was programmatically reconfigured in under an hour, a process that previously required weeks of planning and provisioning. “What we delivered wasn’t just bandwidth, it was the ability for their teams to take control,” says Purkayastha. “By integrating our Network Fabric APIs into their automation workflows, we gave them a network that responds at the pace of their business.”

Barriers to transformation — and how to overcome them

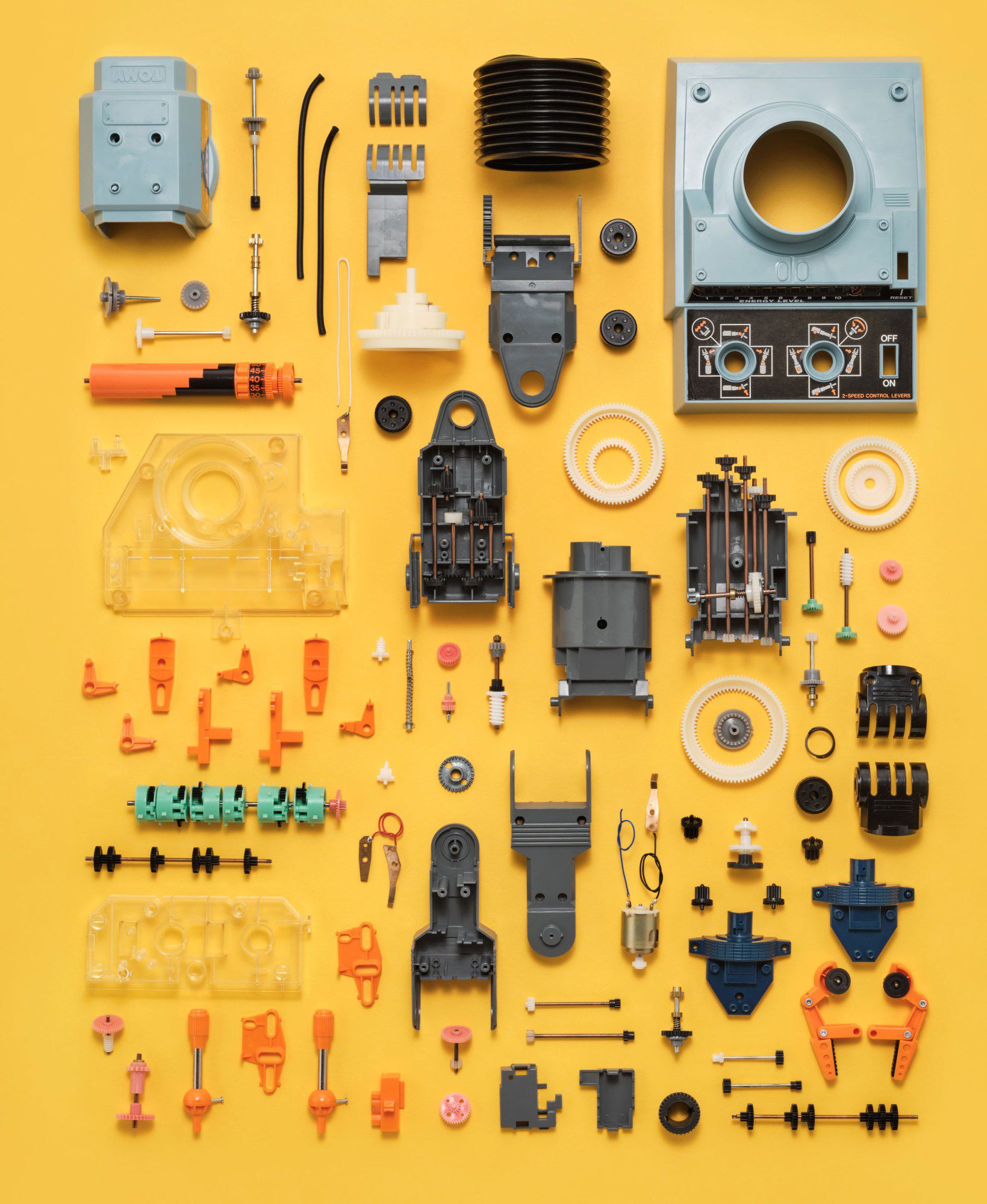

Transforming network infrastructure is no small task. Many enterprises still rely on legacy multiprotocol label switching (MPLS) and hardware-defined wide-area network (WAN) architectures. These environments are rigid, manually managed, and often incompatible with modern APIs or automation frameworks. The barriers can be both technical and internal: legacy devices may not support programmable interfaces, and organizations are often siloed, meaning networks are managed separately from application and DevOps workflows.

Furthermore, CIOs face pressure for quick returns and may not remain at the company long enough to oversee the process and its results, making it harder to push for long-term network modernization strategies. “Often, it’s easier to address the low-hanging fruit rather than go after the transformation because decision-makers may not be around to see the transformation come to life,” says Purkayastha.

But quick fixes or workarounds may not yield the desired results; transformation is needed instead. “Enterprises have historically built their networks for stability, not agility,” says Purkayastha. “But now, that same rigidity becomes a bottleneck when applications, users, and workloads are distributed across the cloud, edge, and remote locations.”

Despite the challenges, there is a clear path forward, starting with overlay orchestration, well-defined API contracts, and security-first design. Instead of completely removing and replacing an existing system, many enterprises are layering APIs over existing infrastructure, enabling controlled migrations and real-time service automation.
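One way to picture layering an API over existing infrastructure is a thin adapter that validates a request and translates it into the text commands an older device already understands. The sketch below is purely illustrative: `LegacyRouter`, `BandwidthApi`, and the command strings are invented for the example and do not correspond to any real device syntax or vendor product.

```python
class LegacyRouter:
    """Stand-in for a device managed only through text commands (hypothetical)."""

    def __init__(self) -> None:
        self.command_log: list[str] = []

    def run(self, command: str) -> None:
        # A real device would execute the command; here it is only recorded.
        self.command_log.append(command)


class BandwidthApi:
    """Thin API layer: validates the request, then drives the legacy device."""

    def __init__(self, router: LegacyRouter, max_mbps: int) -> None:
        self.router = router
        self.max_mbps = max_mbps

    def set_bandwidth(self, interface: str, mbps: int) -> dict:
        # Enforce the API contract before anything reaches the device.
        if not 0 < mbps <= self.max_mbps:
            raise ValueError(f"mbps must be between 1 and {self.max_mbps}")
        # Made-up command syntax, for illustration only.
        self.router.run(f"interface {interface}")
        self.router.run(f"bandwidth-limit {mbps}")
        return {"interface": interface, "bandwidth_mbps": mbps, "status": "applied"}


router = LegacyRouter()
api = BandwidthApi(router, max_mbps=1000)
result = api.set_bandwidth("wan0", 500)
print(result["status"], router.command_log)
```

Because callers depend only on the contract of `set_bandwidth`, the device behind it could later be swapped for a natively programmable one without changing the automation that calls it, which is the appeal of a controlled migration.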

“We don’t just help customers adopt APIs, we guide them through the operational shift it requires,” says Purkayastha. “We have blueprints for what to automate first, how to manage hybrid environments, and how to design for resilience.”

Some organizations will initially resist the change. Fears of extra workloads or misalignment with teams’ existing goals and objectives are common, as is the deeply human distrust of change. These can be overcome, however. “There are playbooks on what we’ve done earlier—learnings from transformation—which we share with clients,” says Purkayastha. “We also plan for the unknowns. We usually reserve 10% of time and resources just to manage unforeseen risks, and the result is an empowered organization to scale innovation and reduce operational complexity.”

This content was produced by Insights, the custom content arm of MIT Technology Review. It was not written by MIT Technology Review’s editorial staff. It was researched, designed, and written entirely by human writers, editors, analysts, and illustrators. This includes the writing of surveys and collection of data for surveys. AI tools that may have been used were limited to secondary production processes that passed thorough human review.