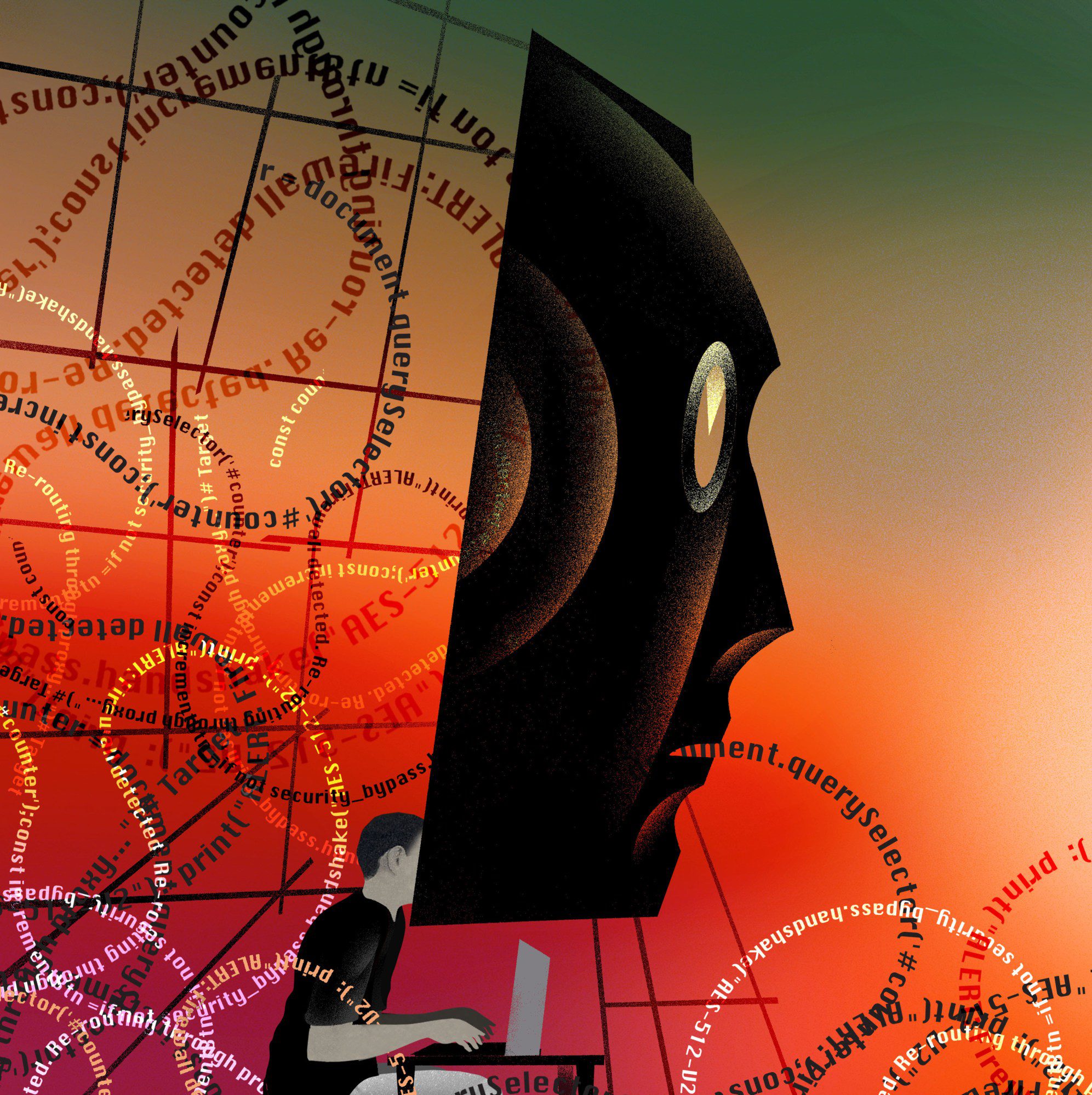

Anton Cherepanov is always on the lookout for something interesting. And in late August last year, he spotted just that. It was a file uploaded to VirusTotal, a site cybersecurity researchers like him use to analyze submissions for potential viruses and other types of malicious software, often known as malware. On the surface it seemed innocuous, but it triggered Cherepanov’s custom malware-detecting measures. Over the next few hours, he and his colleague Peter Strýček inspected the sample and realized they’d never come across anything like it before.

The file contained ransomware, a nasty strain of malware that encrypts the files it comes across on a victim’s system, rendering them unusable until a ransom is paid to the attackers behind it. But what set this example apart was that it employed large language models (LLMs). Not just incidentally, but across every stage of an attack. Once it was installed, it could tap into an LLM to generate customized code in real time, rapidly map a computer to identify sensitive data to copy or encrypt, and write personalized ransom notes based on the files’ content. The software could do this autonomously, without any human intervention. And every time it ran, it would act differently, making it harder to detect.

Cherepanov and Strýček were confident that their discovery, which they dubbed PromptLock, marked a turning point in generative AI, showing how the technology could be exploited to create highly flexible malware attacks. They published a blog post declaring that they’d uncovered the first example of AI-powered ransomware, which quickly became the object of widespread global media attention.

But the threat wasn’t quite as dramatic as it first appeared. The day after the blog post went live, a team of researchers from New York University claimed responsibility, explaining that the malware was not, in fact, a full attack let loose in the wild but a research project, merely designed to prove it was possible to automate each step of a ransomware campaign—which, they said, they had.

PromptLock may have turned out to be an academic project, but the real bad guys are using the latest AI tools. Just as software engineers are using artificial intelligence to help write code and check for bugs, hackers are using these tools to reduce the time and effort required to orchestrate an attack, lowering the barriers for less experienced attackers to try something out.

The likelihood that cyberattacks will now become more common and more effective over time is not a remote possibility but “a sheer reality,” says Lorenzo Cavallaro, a professor of computer science at University College London.

Some in Silicon Valley warn that AI is on the brink of being able to carry out fully automated attacks. But most security researchers say this claim is overblown. “For some reason, everyone is just focused on this malware idea of, like, AI superhackers, which is just absurd,” says Marcus Hutchins, who is principal threat researcher at the security company Expel and famous in the security world for ending a giant global ransomware attack called WannaCry in 2017.

Instead, experts argue, we should be paying closer attention to the much more immediate risks posed by AI, which is already speeding up and increasing the volume of scams. Criminals are increasingly exploiting the latest deepfake technologies to impersonate people and swindle victims out of vast sums of money. These AI-enhanced cyberattacks are only set to get more frequent and more destructive, and we need to be ready.

Spam and beyond

Attackers started adopting generative AI tools almost immediately after ChatGPT exploded on the scene at the end of 2022. These efforts began, as you might imagine, with the creation of spam—and a lot of it. Last year, a report from Microsoft said that in the year leading up to April 2025, the company had blocked $4 billion worth of scams and fraudulent transactions, “many likely aided by AI content.”

At least half of spam email is now generated using LLMs, according to estimates by researchers at Columbia University, the University of Chicago, and Barracuda Networks, who analyzed nearly 500,000 malicious messages collected before and after the launch of ChatGPT. They also found evidence that AI is increasingly being deployed in more sophisticated schemes. They looked at targeted email attacks, which impersonate a trusted figure in order to trick a worker within an organization out of funds or sensitive information. By April 2025, they found, at least 14% of those sorts of focused email attacks were generated using LLMs, up from 7.6% in April 2024.

And the generative AI boom has made it easier and cheaper than ever before to generate not only emails but highly convincing images, videos, and audio. The results are much more realistic than even just a few short years ago, and it takes much less data to generate a fake version of someone’s likeness or voice than it used to.

Criminals aren’t deploying these sorts of deepfakes to prank people or to simply mess around—they’re doing it because it works and because they’re making money out of it, says Henry Ajder, a generative AI expert. “If there’s money to be made and people continue to be fooled by it, they’ll continue to do it,” he says. In one high-profile case reported in 2024, a worker at the British engineering firm Arup was tricked into transferring $25 million to criminals via a video call with digital versions of the company’s chief financial officer and other employees. That’s likely only the tip of the iceberg, and the problem posed by convincing deepfakes is only likely to get worse as the technology improves and is more widely adopted.

Criminals’ tactics evolve all the time, and as AI’s capabilities improve, such people are constantly probing how those new capabilities can help them gain an advantage over victims. Billy Leonard, tech leader of Google’s Threat Analysis Group, has been keeping a close eye on changes in the use of AI by potential bad actors (a widely used term in the industry for hackers and others attempting to use computers for criminal purposes). In the latter half of 2024, he and his team noticed prospective criminals using tools like Google Gemini the same way everyday users do—to debug code and automate bits and pieces of their work—as well as tasking it with writing the odd phishing email. By 2025, they had progressed to using AI to help create new pieces of malware and release them into the wild, he says.

The big question now is how far this kind of malware can go. Will it ever become capable enough to sneakily infiltrate thousands of companies’ systems and extract millions of dollars, completely undetected?

Most popular AI models have guardrails in place to prevent them from generating malicious code or illegal material, but bad actors still find ways to work around them. For example, Google observed a China-linked actor asking its Gemini AI model to identify vulnerabilities on a compromised system—a request it initially refused on safety grounds. However, the attacker managed to persuade Gemini to break its own rules by posing as a participant in a capture-the-flag competition, a popular cybersecurity game. This sneaky form of jailbreaking led Gemini to hand over information that could have been used to exploit the system. (Google has since adjusted Gemini to deny these kinds of requests.)

But bad actors aren’t just focusing on trying to bend the AI giants’ models to their nefarious ends. Going forward, they’re increasingly likely to adopt open-source AI models, as it’s easier to strip out their safeguards and get them to do malicious things, says Ashley Jess, a former tactical specialist at the US Department of Justice and now a senior intelligence analyst at the cybersecurity company Intel 471. “Those are the ones I think that [bad] actors are going to adopt, because they can jailbreak them and tailor them to what they need,” she says.

The NYU team used two open-source models from OpenAI in its PromptLock experiment, and the researchers found they didn’t even need to resort to jailbreaking techniques to get the model to do what they wanted. They say that makes attacks much easier. Although these kinds of open-source models are designed with an eye to ethical alignment, meaning that their makers do consider certain goals and values in dictating the way they respond to requests, the models don’t have the same kinds of restrictions as their closed-source counterparts, says Meet Udeshi, a PhD student at New York University who worked on the project. “That is what we were trying to test,” he says. “These LLMs claim that they are ethically aligned—can we still misuse them for these purposes? And the answer turned out to be yes.”

It’s possible that criminals have already successfully pulled off covert PromptLock-style attacks and we’ve simply never seen any evidence of them, says Udeshi. If that’s the case, attackers could—in theory—have created a fully autonomous hacking system. But to do that they would have had to overcome the significant barrier that is getting AI models to behave reliably, as well as any inbuilt aversion the models have to being used for malicious purposes—all while evading detection. Which is a pretty high bar indeed.

Productivity tools for hackers

So, what do we know for sure? Some of the best data we have now on how people are attempting to use AI for malicious purposes comes from the big AI companies themselves. And their findings certainly sound alarming, at least at first. In November, Leonard’s team at Google released a report that found bad actors were using AI tools (including Google’s Gemini) to dynamically alter malware’s behavior; for example, it could self-modify to evade detection. The team wrote that it ushered in “a new operational phase of AI abuse.”

However, the five malware families the report dug into (including PromptLock) consisted of code that was easily detected and didn’t actually do any harm, the cybersecurity writer Kevin Beaumont pointed out on social media. “There’s nothing in the report to suggest orgs need to deviate from foundational security programmes—everything worked as it should,” he wrote.

It’s true that this malware activity is in an early phase, concedes Leonard. Still, he sees value in making these kinds of reports public if it helps security vendors and others build better defenses to prevent more dangerous AI attacks further down the line. “Cliché to say, but sunlight is the best disinfectant,” he says. “It doesn’t really do us any good to keep it a secret or keep it hidden away. We want people to be able to know about this—we want other security vendors to know about this—so that they can continue to build their own detections.”

And it’s not just new strains of malware that would-be attackers are experimenting with—they also seem to be using AI to try to automate the process of hacking targets. In November, Anthropic announced it had disrupted a large-scale cyberattack, the first reported case of one executed without “substantial human intervention.” Although the company didn’t go into much detail about the exact tactics the hackers used, the report’s authors said a Chinese state-sponsored group had used its Claude Code assistant to automate up to 90% of what they called a “highly sophisticated espionage campaign.”

But, as with the Google findings, there were caveats. A human operator, not AI, selected the targets before tasking Claude with identifying vulnerabilities. And of 30 attempts, only a “handful” were successful. The Anthropic report also found that Claude hallucinated and ended up fabricating data during the campaign, claiming it had obtained credentials it hadn’t and “frequently” overstating its findings, so the attackers would have had to carefully validate those results to make sure they were actually true. “This remains an obstacle to fully autonomous cyberattacks,” the report’s authors wrote.

Existing controls within any reasonably secure organization would stop these attacks, says Gary McGraw, a veteran security expert and cofounder of the Berryville Institute of Machine Learning in Virginia. “None of the malicious-attack part, like the vulnerability exploit … was actually done by the AI—it was just prefabricated tools that do that, and that stuff’s been automated for 20 years,” he says. “There’s nothing novel, creative, or interesting about this attack.”

Anthropic maintains that the report’s findings are a concerning signal of changes ahead. “Tying this many steps of an intrusion campaign together through [AI] agentic orchestration is unprecedented,” Jacob Klein, head of threat intelligence at Anthropic, said in a statement. “It turns what has always been a labor-intensive process into something far more scalable. We’re entering an era where the barrier to sophisticated cyber operations has fundamentally lowered, and the pace of attacks will accelerate faster than many organizations are prepared for.”

Some are not convinced there’s reason to be alarmed. AI hype has led a lot of people in the cybersecurity industry to overestimate models’ current abilities, Hutchins says. “They want this idea of unstoppable AIs that can outmaneuver security, so they’re forecasting that’s where we’re going,” he says. But “there just isn’t any evidence to support that, because the AI capabilities just don’t meet any of the requirements.”

Indeed, for now criminals mostly seem to be tapping AI to enhance their productivity: using LLMs to write malicious code and phishing lures, to conduct reconnaissance, and for language translation. Jess sees this kind of activity a lot, alongside efforts to sell tools in underground criminal markets. For example, there are phishing kits that compare the click-rate success of various spam campaigns, so criminals can track which campaigns are most effective at any given time. She is seeing a lot of this activity in what could be called the “AI slop landscape” but not as much “widespread adoption from highly technical actors,” she says.

But attacks don’t need to be sophisticated to be effective. Models that produce “good enough” results allow attackers to go after larger numbers of people than previously possible, says Liz James, a managing security consultant at the cybersecurity company NCC Group. “We’re talking about someone who might be using a scattergun approach phishing a whole bunch of people with a model that, if it lands itself on a machine of interest that doesn’t have any defenses … can reasonably competently encrypt your hard drive,” she says. “You’ve achieved your objective.”

On the defense

For now, researchers are optimistic about our ability to defend against these threats—regardless of whether they are made with AI. “Especially on the malware side, a lot of the defenses and the capabilities and the best practices that we’ve recommended for the past 10-plus years—they all still apply,” says Leonard. The security programs we use to detect standard viruses and attack attempts work; a lot of phishing emails will still get caught in inbox spam filters, for example. These traditional forms of defense will still largely get the job done—at least for now.

And in a neat twist, AI itself is helping to counter security threats more effectively. After all, it is excellent at spotting patterns and correlations. Vasu Jakkal, corporate vice president of Microsoft Security, says that every day, the company processes more than 100 trillion signals flagged by its AI systems as potentially malicious or suspicious events.

Despite the cybersecurity landscape’s constant state of flux, Jess is heartened by how readily defenders are sharing detailed information with each other about attackers’ tactics. Mitre’s Adversarial Threat Landscape for Artificial-Intelligence Systems and the GenAI Security Project from the Open Worldwide Application Security Project are two helpful initiatives documenting how potential criminals are incorporating AI into their attacks and how AI systems are being targeted by them. “We’ve got some really good resources out there for understanding how to protect your own internal AI toolings and understand the threat from AI toolings in the hands of cybercriminals,” she says.

PromptLock, the result of a limited university project, isn’t representative of how an attack would play out in the real world. But if it taught us anything, it’s that the technical capabilities of AI shouldn’t be dismissed. New York University’s Udeshi says he was taken aback at how easily AI was able to handle a full end-to-end chain of attack, from mapping and working out how to break into a targeted computer system to writing personalized ransom notes to victims: “We expected it would do the initial task very well but it would stumble later on, but we saw high—80% to 90%—success throughout the whole pipeline.”

AI is still evolving rapidly, and today’s systems are already capable of things that would have seemed preposterously out of reach just a few short years ago. That makes it incredibly tough to say with absolute confidence what it will—or won’t—be able to achieve in the future. While researchers are certain that AI-driven attacks are likely to increase in both volume and severity, the forms they could take are unclear. Perhaps the most extreme possibility is that someone makes an AI model capable of creating and automating its own zero-day exploits—highly dangerous cyberattacks that take advantage of previously unknown vulnerabilities in software. But building and hosting such a model—and evading detection—would require billions of dollars in investment, says Hutchins, meaning it would only be in the reach of a wealthy nation-state.

Engin Kirda, a professor at Northeastern University in Boston who specializes in malware detection and analysis, says he wouldn’t be surprised if this was already happening. “I’m sure people are investing in it, but I’m also pretty sure people are already doing it, especially [in] China—they have good AI capabilities,” he says.

It’s a pretty scary possibility. But it’s one that—thankfully—is still only theoretical. A large-scale campaign that is both effective and clearly AI-driven has yet to materialize. What we can say is that generative AI is already significantly lowering the bar for criminals. They’ll keep experimenting with the newest releases and updates and trying to find new ways to trick us into parting with important information and precious cash. For now, all we can do is be careful, remain vigilant, and—for all our sakes—stay on top of those system updates.