Why handing over total control to AI agents would be a huge mistake

AI agents have set the tech industry abuzz. Unlike chatbots, these groundbreaking new systems operate outside of a chat window, navigating multiple applications to execute complex tasks, like scheduling meetings or shopping online, in response to simple user commands. As agents are developed to become more capable, a crucial question emerges: How much control are we willing to surrender, and at what cost?

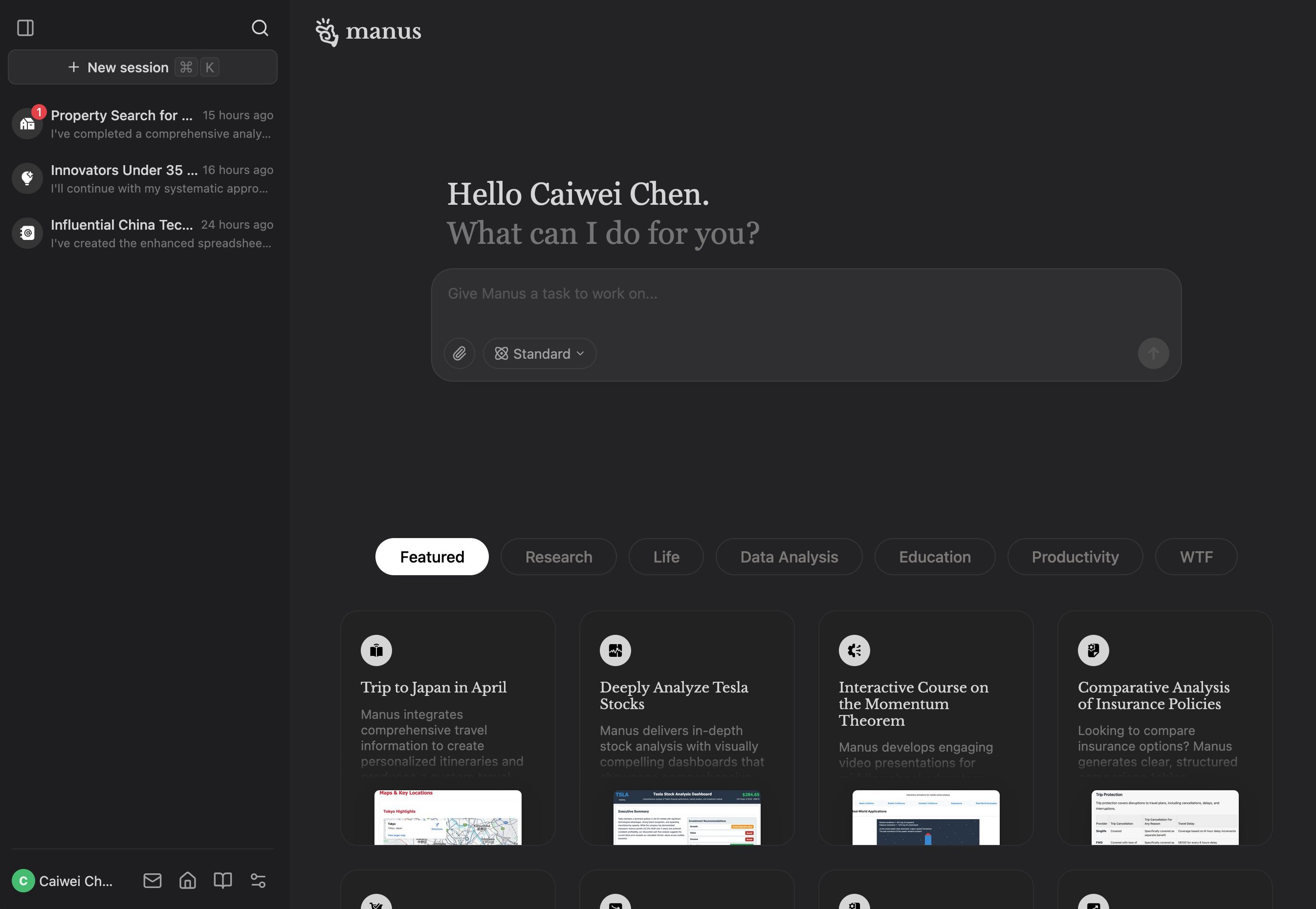

New frameworks and functionalities for AI agents are announced almost weekly, and companies promote the technology as a way to make our lives easier by completing tasks we can’t do or don’t want to do. Prominent examples include “computer use,” a function that enables Anthropic’s Claude system to act directly on your computer screen, and the “general AI agent” Manus, which can use online tools for a variety of tasks, like scouting out customers or planning trips.

These developments mark a major advance in artificial intelligence: systems designed to operate in the digital world without direct human oversight.

The promise is compelling. Who doesn’t want assistance with cumbersome work or tasks there’s no time for? Agent assistance could soon take many different forms, such as reminding you to ask a colleague about their kid’s basketball tournament or finding images for your next presentation. Within a few weeks, they’ll probably be able to make presentations for you.

There’s also clear potential for deeply meaningful differences in people’s lives. For people with hand mobility issues or low vision, agents could complete tasks online in response to simple language commands. Agents could also coordinate simultaneous assistance across large groups of people in critical situations, such as by routing traffic to help drivers flee an area en masse as quickly as possible when disaster strikes.

But this vision for AI agents brings significant risks that might be overlooked in the rush toward greater autonomy. Our research team at Hugging Face has spent years implementing and investigating these systems, and our recent findings suggest that agent development could be on the cusp of a very serious misstep.

Giving up control, bit by bit

The core issue lies at the heart of what’s most exciting about AI agents: The more autonomous an AI system is, the more human control we cede. AI agents are developed to be flexible, capable of completing a diverse array of tasks that don’t have to be directly programmed.

For many systems, this flexibility is made possible because they’re built on large language models, which are unpredictable and prone to significant (and sometimes comical) errors. When an LLM generates text in a chat interface, any errors stay confined to that conversation. But when a system can act independently and with access to multiple applications, it may perform actions we didn’t intend, such as manipulating files, impersonating users, or making unauthorized transactions. The very feature being sold—reduced human oversight—is the primary vulnerability.

To understand the overall risk-benefit landscape, it’s useful to characterize AI agent systems on a spectrum of autonomy. The lowest level consists of simple processors that have no impact on program flow, like chatbots that greet you on a company website. The highest level, fully autonomous agents, can write and execute new code without human constraints or oversight: they can take action (moving files around, changing records, sending email, etc.) without your asking for anything. Intermediate levels include routers, which decide which human-provided steps to take; tool callers, which run human-written functions using agent-suggested tools; and multistep agents, which determine which functions to run, when, and how. Each level represents an incremental removal of human control.
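The spectrum can be written down as an ordered type. The sketch below is only an illustration of the levels described above; the names `AutonomyLevel` and `decisions_ceded` are hypothetical and do not come from any existing framework.

```python
from enum import IntEnum


class AutonomyLevel(IntEnum):
    """Hypothetical labels for the levels described in the text, lowest first."""
    SIMPLE_PROCESSOR = 0  # model output has no impact on program flow
    ROUTER = 1            # model decides which human-provided steps to take
    TOOL_CALLER = 2       # human-written functions run with agent-suggested tools
    MULTISTEP_AGENT = 3   # model determines which functions run, when, and how
    FULLY_AUTONOMOUS = 4  # model writes and executes new code without oversight


def decisions_ceded(level: AutonomyLevel) -> list[str]:
    """List every decision handed to the model at or below the given level."""
    ceded_at = {
        AutonomyLevel.ROUTER: "which human-provided step runs",
        AutonomyLevel.TOOL_CALLER: "which tool a human-written function uses",
        AutonomyLevel.MULTISTEP_AGENT: "which functions run, when, and how",
        AutonomyLevel.FULLY_AUTONOMOUS: "what new code is written and executed",
    }
    # Each step up the spectrum adds one more decision to the list.
    return [what for lvl, what in ceded_at.items() if lvl <= level]
```

Calling `decisions_ceded(AutonomyLevel.MULTISTEP_AGENT)` returns three items, one for each step up from the simple processor, which is the "bit by bit" loss of control in concrete form.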

AI agents can be extraordinarily helpful for what we do every day. But that helpfulness brings clear privacy, safety, and security concerns. Agents that help bring you up to speed on someone would require that individual’s personal information and extensive surveillance of your previous interactions, which could result in serious privacy breaches. Agents that create directions from building plans could be used by malicious actors to gain access to unauthorized areas.

And when systems can control multiple information sources simultaneously, potential for harm explodes. For example, an agent with access to both private communications and public platforms could share personal information on social media. That information might not be true, but it would fly under the radar of traditional fact-checking mechanisms and could be amplified with further sharing to create serious reputational damage. We imagine that “It wasn’t me—it was my agent!!” will soon be a common refrain to excuse bad outcomes.

Keep the human in the loop

Historical precedent demonstrates why maintaining human oversight is critical. In 1980, computer systems falsely indicated that over 2,000 Soviet missiles were heading toward North America. This error triggered emergency procedures that brought us perilously close to catastrophe. What averted disaster was human cross-verification between different warning systems. Had decision-making been fully delegated to autonomous systems prioritizing speed over certainty, the outcome might have been catastrophic.

Some will counter that the benefits are worth the risks, but we’d argue that realizing those benefits doesn’t require surrendering complete human control. Instead, the development of AI agents must occur alongside the development of guaranteed human oversight in a way that limits the scope of what AI agents can do.
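One way to picture oversight that limits an agent’s scope is an approval gate placed between what an agent proposes and what actually runs. This is a minimal sketch under stated assumptions, not a description of any real product: the action names, the two policy sets, and `run_with_oversight` are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class ProposedAction:
    name: str    # hypothetical action identifier, e.g. "send_email"
    detail: str  # human-readable description shown to the reviewer


# Hypothetical policy: the only actions the agent may ever take,
# and the subset that a person must confirm first.
ALLOWED_ACTIONS = {"read_calendar", "draft_email", "send_email"}
NEEDS_APPROVAL = {"send_email"}


def run_with_oversight(
    action: ProposedAction,
    execute: Callable[[ProposedAction], None],
    ask_human: Callable[[ProposedAction], bool],
) -> str:
    """Run an action only if it is in scope and, where required, approved."""
    if action.name not in ALLOWED_ACTIONS:
        return "blocked"   # out of scope, regardless of what anyone approves
    if action.name in NEEDS_APPROVAL and not ask_human(action):
        return "declined"  # the human reviewer said no
    execute(action)
    return "executed"
```

The design choice is that the allowlist is checked before the human is asked, so the scope limit holds even if a reviewer approves by habit.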

Open-source agent systems are one way to address risks, since these systems allow for greater human oversight of what systems can and cannot do. At Hugging Face we’re developing smolagents, a framework that provides sandboxed secure environments and allows developers to build agents with transparency at their core so that any independent group can verify whether there is appropriate human control.

This approach stands in stark contrast to the prevailing trend toward increasingly complex, opaque AI systems that obscure their decision-making processes behind layers of proprietary technology, making it impossible to guarantee safety.

As we navigate the development of increasingly sophisticated AI agents, we must recognize that the most important feature of any technology isn’t increasing efficiency but fostering human well-being.

This means creating systems that remain tools rather than decision-makers, assistants rather than replacements. Human judgment, with all its imperfections, remains the essential component in ensuring that these systems serve rather than subvert our interests.

Margaret Mitchell, Avijit Ghosh, Sasha Luccioni, and Giada Pistilli all work for Hugging Face, a global startup in responsible open-source AI.

Dr. Margaret Mitchell is a machine learning researcher and Chief Ethics Scientist at Hugging Face, connecting human values to technology development.

Dr. Sasha Luccioni is Climate Lead at Hugging Face, where she spearheads research, consulting and capacity-building to elevate the sustainability of AI systems.

Dr. Avijit Ghosh is an Applied Policy Researcher at Hugging Face working at the intersection of responsible AI and policy. His research and engagement with policymakers have helped shape AI regulation and industry practices.

Dr. Giada Pistilli is a philosophy researcher working as Principal Ethicist at Hugging Face.