How three filmmakers created Sora’s latest stunning videos

In the last month, a handful of filmmakers have taken Sora for a test drive. The results, which OpenAI published this week, are amazing. The short films are a big jump up even from the cherry-picked demo videos that OpenAI used to tease its new generative model just six weeks ago. Here’s how three of the filmmakers did it.

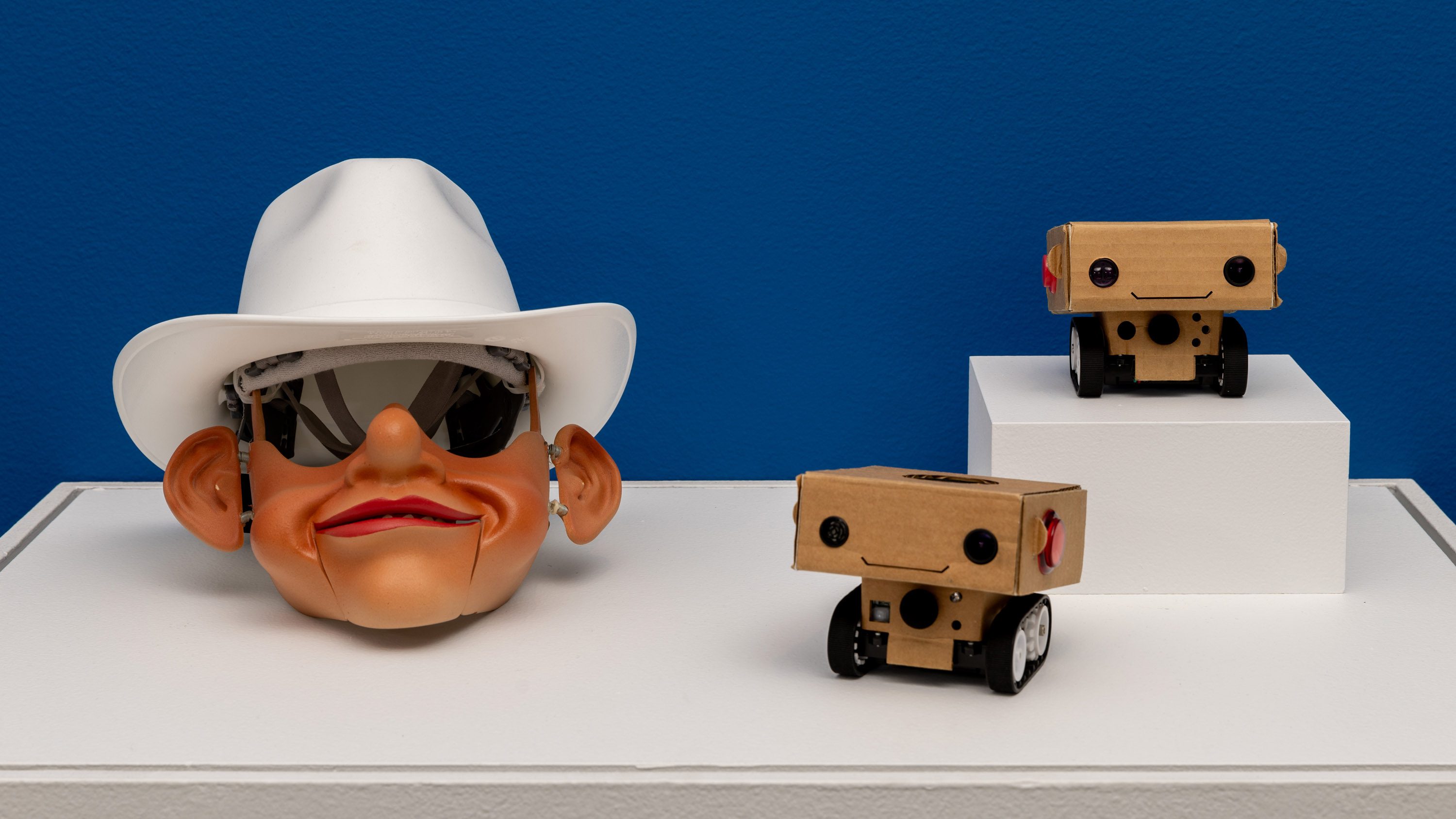

“Air Head” by Shy Kids

Shy Kids is a pop band and filmmaking collective based in Toronto that describes its style as “punk-rock Pixar.” The group has experimented with generative video tech before. Last year it made a music video for one of its songs using an open-source tool called Stable Warpfusion. It’s cool, but low-res and glitchy. The film it made with Sora, called “Air Head,” could pass for real footage—if it didn’t feature a man with a balloon for a face.

One problem with most generative video tools is that it’s hard to maintain consistency across frames. When OpenAI asked Shy Kids to try out Sora, the band wanted to see how far they could push it. “We thought a fun, interesting experiment would be—could we make a consistent character?” says Shy Kids member Walter Woodman. “We think it was mostly successful.”

Generative models can also struggle with anatomical details like hands and faces. But in the video there is a scene showing a train car full of passengers, and the faces are near perfect. “It’s mind-blowing what it can do,” says Woodman. “Those faces on the train were all Sora.”

Has generative video’s problem with faces and hands been solved? Not quite. We still get glimpses of warped body parts. And text is still a problem (in another video, by the creative agency Native Foreign, we see a bike repair shop with the sign “Biycle Repaich”). But every shot in “Air Head” began as raw output from Sora. After editing together many different clips produced with the tool, Shy Kids did a bunch of post-processing to make the film look even better. They used visual effects tools to fix certain shots of the main character’s balloon face, for example.

Woodman also thinks that the music (which they wrote and performed) and the voice-over (which they also wrote and performed) help to lift the quality of the film even more. Mixing these human touches in with Sora’s output is what makes the film feel alive, says Woodman. “The technology is nothing without you,” he says. “It is a powerful tool, but you are the person driving it.”

[Update: Shy Kids have posted a behind-the-scenes video for “Air Head” on X. Come for the pro tips, stay for the Sora bloopers: “How do you maintain a character and look consistent even though Sora is a slot machine as to what you get back?” asks Woodman.]

“Abstract” by Paul Trillo

Paul Trillo, an artist and filmmaker, wanted to stretch what Sora could do with the look of a film. His video is a mash-up of retro-style footage with shots of a figure who morphs into a glitter ball and a breakdancing trash man. He says that everything you see is raw output from Sora: “No color correction or post FX.” Even the jump-cut edits in the first part of the film were produced using the generative model.

Trillo felt that the demos that OpenAI put out last month came across too much like clips from video games. “I wanted to see what other aesthetics were possible,” he says. The result is a video that looks like something shot with vintage 16-millimeter film. “It took a fair amount of experimenting, but I stumbled upon a series of prompts that helps make the video feel more organic or filmic,” he says.

“Beyond Our Reality” by Don Allen Stevenson III

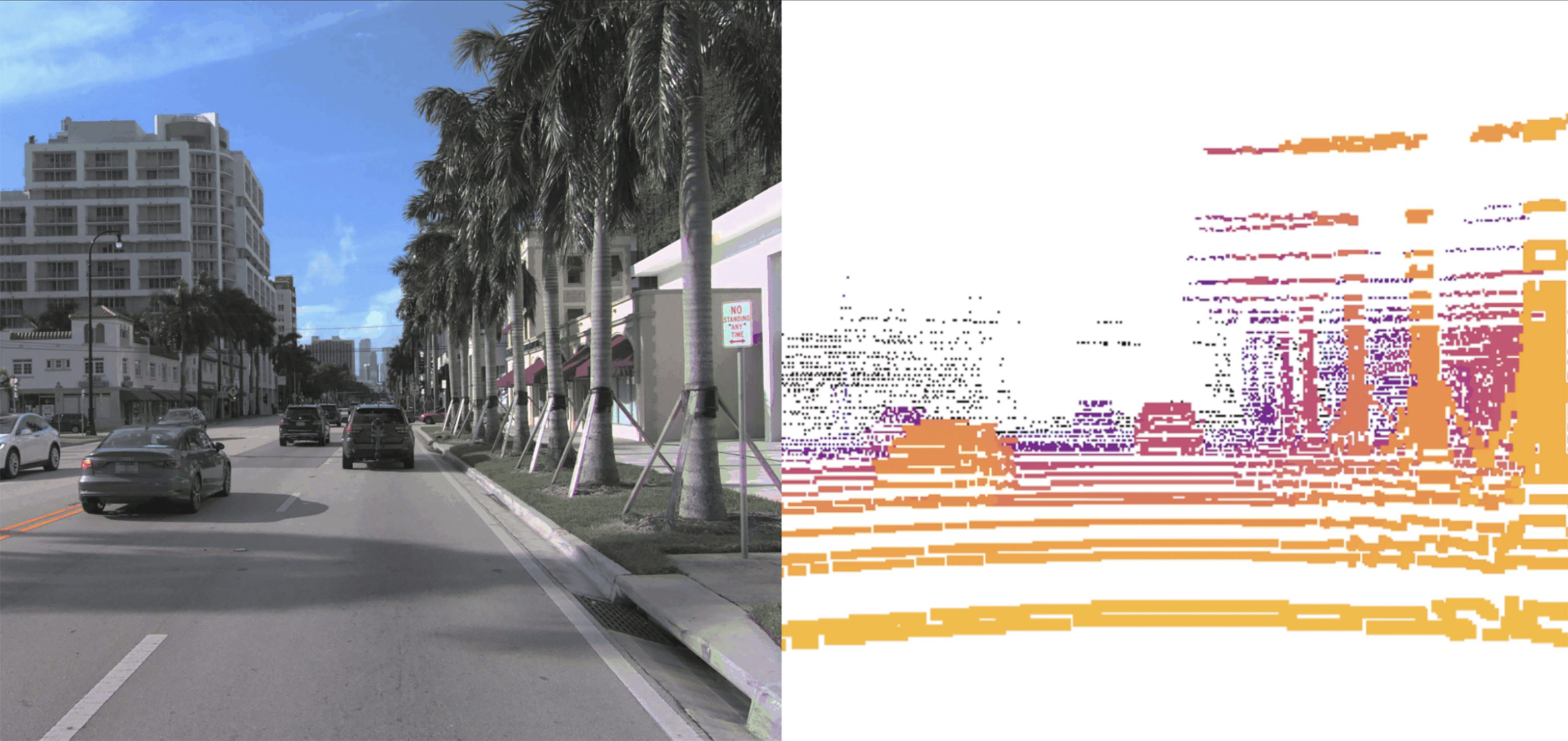

Don Allen Stevenson III is a filmmaker and visual effects artist. He was one of the artists invited by OpenAI to try out DALL-E 2, its text-to-image model, a couple of years ago. Stevenson’s film is a NatGeo-style nature documentary that introduces us to a menagerie of imaginary animals, from the girafflamingo to the eel cat.

In many ways working with text-to-video is like working with text-to-image, says Stevenson. “You enter a text prompt and then you tweak your prompt a bunch of times,” he says. But there’s an added hurdle. When you’re trying out different prompts, Sora produces low-res video. When you hit on something you like, you can then increase the resolution. But going from low to high res involves another round of generation, and what you liked in the low-res version can be lost.

Sometimes the camera angle is different or the objects in the shot have moved, says Stevenson. Hallucination is still a feature of Sora, as it is in any generative model. With still images this might produce weird visual defects; with video those defects can appear across time as well, with weird jumps between frames.

Stevenson also had to figure out how to speak Sora’s language. It takes prompts very literally, he says. In one experiment he tried to create a shot that zoomed in on a helicopter. Sora produced a clip in which it mixed together a helicopter with a camera’s zoom lens. But Stevenson says that with a lot of creative prompting, Sora is easier to control than previous models.

Even so, he thinks that surprises are part of what makes the technology fun to use: “I like having less control. I like the chaos of it,” he says. There are many other video-making tools that give you control over editing and visual effects. For Stevenson, the point of a generative model like Sora is to come up with strange, unexpected material to work with in the first place.

The clips of the animals were all generated with Sora. Stevenson tried many different prompts until the tool produced something he liked. “I directed it, but it’s more like a nudge,” he says. He then went back and forth, trying out variations.

Stevenson pictured his fox crow having four legs, for example. But Sora gave it two, which worked even better. (It’s not perfect: sharp-eyed viewers will see that at one point in the video the fox crow switches from two legs to four, then back again.) Sora also produced several versions that he thought were too creepy to use.

When he had a collection of animals he really liked, he edited them together. Then he added captions and a voice-over on top. Stevenson could have created his made-up menagerie with existing tools. But it would have taken hours, even days, he says. With Sora the process was far quicker.

“I was trying to think of something that would look cool and experimented with a lot of different characters,” he says. “I have so many clips of random creatures.” Things really clicked when he saw what Sora did with the girafflamingo. “I started thinking: What’s the narrative around this creature? What does it eat, where does it live?” he says. He plans to put out a series of extended films following each of the fantasy animals in more detail.

Stevenson also hopes his fantastical animals will make a bigger point. “There’s going to be a lot of new types of content flooding feeds,” he says. “How are we going to teach people what’s real? In my opinion, one way is to tell stories that are clearly fantasy.”

Stevenson points out that his film could be the first time a lot of people see a video created by a generative model. He wants that first impression to make one thing very clear: This is not real.