Designing digital resilience in the agentic AI era

Digital resilience (the ability to prevent, withstand, and recover from digital disruptions) has long been a strategic priority for enterprises. With the rise of agentic AI, the need for robust resilience is more urgent than ever.

Agentic AI represents a new generation of autonomous systems capable of proactive planning, reasoning, and executing tasks with minimal human intervention. As these systems shift from experimental pilots to core elements of business operations, they offer new opportunities but also introduce new challenges when it comes to ensuring digital resilience. That’s because the autonomy, speed, and scale at which agentic AI operates can amplify the impact of even minor data inconsistencies, fragmentation, or security gaps.

While global investment in AI is projected to reach $1.5 trillion in 2025, fewer than half of business leaders are confident in their organization’s ability to maintain service continuity, security, and cost control during unexpected events. This lack of confidence, coupled with the profound complexity introduced by agentic AI’s autonomous decision-making and interaction with critical infrastructure, requires a reimagining of digital resilience.

Organizations are turning to the concept of a data fabric—an integrated architecture that connects and governs information across all business layers. By breaking down silos and enabling real-time access to enterprise-wide data, a data fabric can empower both human teams and agentic AI systems to sense risks, prevent problems before they occur, recover quickly when they do, and sustain operations.

Machine data: A cornerstone of agentic AI and digital resilience

Earlier AI models relied heavily on human-generated data such as text, audio, and video, but agentic AI demands deep insight into an organization’s machine data: the logs, metrics, and other telemetry generated by devices, servers, systems, and applications.

For agentic AI to drive digital resilience, it must have seamless, real-time access to this flow of data. Without comprehensive integration of machine data, organizations risk limiting AI capabilities, missing critical anomalies, or introducing errors. As Kamal Hathi, senior vice president and general manager of Splunk, a Cisco company, emphasizes, agentic AI systems rely on machine data to understand context, simulate outcomes, and adapt continuously. That reliance makes oversight of machine data a cornerstone of digital resilience.

“We often describe machine data as the heartbeat of the modern enterprise,” says Hathi. “Agentic AI systems are powered by this vital pulse, requiring real-time access to information. It’s essential that these intelligent agents operate directly on the intricate flow of machine data and that AI itself is trained using the very same data stream.”

Few organizations are currently achieving the level of machine data integration required to fully enable agentic systems. This not only narrows the scope of possible use cases for agentic AI, but, worse, it can also result in data anomalies and errors in outputs or actions. Natural language processing (NLP) models designed prior to the development of generative pre-trained transformers (GPTs) were plagued by linguistic ambiguities, biases, and inconsistencies. Similar misfires could occur with agentic AI if organizations rush ahead without providing models with a foundational fluency in machine data.

For many companies, keeping up with the dizzying pace at which AI is progressing has been a major challenge. “In some ways, the speed of this innovation is starting to hurt us, because it creates risks we’re not ready for,” says Hathi. “The trouble is that with agentic AI’s evolution, relying on traditional LLMs trained on human text, audio, video, or print data doesn’t work when you need your system to be secure, resilient, and always available.”

Designing a data fabric for resilience

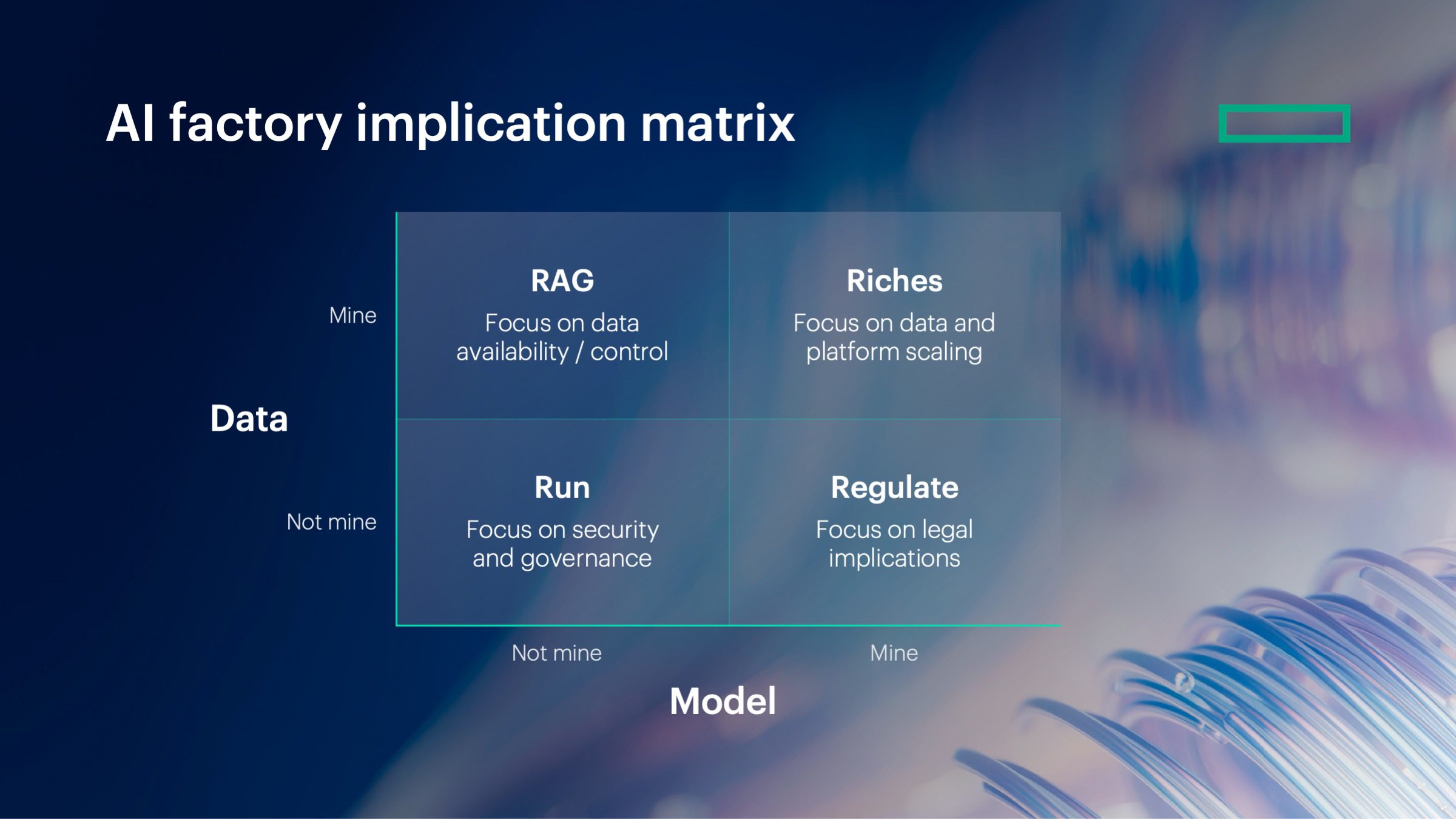

To address these shortcomings and build digital resilience, technology leaders should pivot to what Hathi describes as a data fabric design, better suited to the demands of agentic AI. This involves weaving together fragmented assets from across security, IT, business operations, and the network to create an integrated architecture that connects disparate data sources, breaks down silos, and enables real-time analysis and risk management.

“Once you have a single view, you can do all these things that are autonomous and agentic,” says Hathi. “You have far fewer blind spots. Decision-making goes much faster. And the unknown is no longer a source of fear because you have a holistic system that’s able to absorb these shocks and disruption without losing continuity,” he adds.

To create this unified system, data teams must first break down departmental silos in how data is shared, says Hathi. Next, they must implement a federated data architecture, a decentralized system in which autonomous data sources work together as a single unit without being physically merged, to create a logically unified data source while maintaining governance and security. Finally, teams must upgrade their data platforms so that this unified view is actionable for agentic AI.
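To make the idea of federation more concrete, here is a minimal Python sketch. It assumes two hypothetical in-memory sources (`security` and `it_ops`) that each keep their own records and enforce their own access roles. The `DataSource` class and the `federated_query` function are illustrative names, not any product's API. A real federated layer would push queries down to databases, log stores, or APIs rather than filter Python lists.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class Record:
    """A result row tagged with the source it came from (hypothetical type)."""
    source: str
    fields: dict


@dataclass
class DataSource:
    """One autonomous source, e.g. a security or IT store, that keeps its own data.

    `rows` stands in for the source's own storage, and `allowed_roles` for
    its own governance policy; both are illustrative assumptions.
    """
    name: str
    rows: List[dict]
    allowed_roles: set

    def query(self, predicate: Callable[[dict], bool], role: str) -> List[Record]:
        # Governance stays local: each source checks access before answering.
        if role not in self.allowed_roles:
            return []
        return [Record(self.name, row) for row in self.rows if predicate(row)]


def federated_query(
    sources: Iterable[DataSource], predicate: Callable[[dict], bool], role: str
) -> List[Record]:
    """Send one query to every source and combine the answers.

    Data is read where it lives; nothing is copied into a central store
    ahead of time.
    """
    results: List[Record] = []
    for source in sources:
        results.extend(source.query(predicate, role))
    return results


security = DataSource("security", [{"host": "web-01", "event": "failed_login"}], {"analyst"})
it_ops = DataSource(
    "it_ops",
    [{"host": "web-01", "event": "cpu_spike"}, {"host": "db-01", "event": "disk_full"}],
    {"analyst", "sre"},
)

# One question, answered across both sources without merging them.
for record in federated_query([security, it_ops], lambda r: r["host"] == "web-01", role="analyst"):
    print(record.source, record.fields["event"])
# security failed_login
# it_ops cpu_spike
```

Running the same query with `role="sre"` returns only the IT operations record, because the security source applies its own policy. The combined answer is assembled at query time, which reflects the "work together without physically merging" property the paragraph describes.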

During this transition, teams may run into technical limitations if they rely on traditional platforms built around structured data: mostly quantitative information, such as customer records or financial transactions, that can be organized in a predefined format (often tables) and is easy to query. Companies also need a platform that can manage streams of unstructured data such as system logs, security events, and application traces, which lack a uniform format and are often qualitative rather than quantitative. Analyzing, organizing, and extracting insights from these kinds of data requires more advanced methods enabled by AI.
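As a rough illustration of the gap between tabular records and raw logs, the sketch below turns one log line into named fields using a regular expression. The log format and the `parse_log_line` helper are hypothetical. This is a simple rule-based baseline, not the AI-enabled methods the article refers to. It only works for lines that match a known pattern.

```python
import re

# Hypothetical log format, assumed for illustration only:
#   <timestamp> <LEVEL> <service> <free-text message>
LOG_PATTERN = re.compile(
    r"^(?P<timestamp>\S+)\s+(?P<level>[A-Z]+)\s+(?P<service>\S+)\s+(?P<message>.*)$"
)


def parse_log_line(line: str) -> dict:
    """Turn one raw log line into named fields, or mark it as unparsed."""
    text = line.strip()
    match = LOG_PATTERN.match(text)
    if match is None:
        # Lines that don't fit the pattern (stack traces, odd formats) are kept, not dropped.
        return {"raw": text, "parsed": False}
    record = match.groupdict()
    record["parsed"] = True
    return record


lines = [
    "2025-01-15T10:32:07Z ERROR payment-svc Timeout calling ledger after 3000ms",
    "    at com.example.Ledger.call(Ledger.java:42)",
]
for line in lines:
    print(parse_log_line(line))
```

The first line becomes a queryable record. The stack-trace line falls through as raw text. A fixed pattern like this breaks whenever a format changes, and that brittleness is why irregular machine data calls for more adaptive, AI-enabled techniques.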

Harnessing AI as a collaborator

AI itself can be a powerful tool for building the data fabric that enables AI systems. AI-powered tools can, for example, quickly identify relationships among disparate datasets, both structured and unstructured, and automatically merge them into a single source of truth. They can also detect and correct errors and use NLP to tag and categorize data so that it is easier to find and use.

Agentic AI systems can also be used to augment human capabilities in detecting and deciphering anomalies in an enterprise’s unstructured data streams. These are often beyond human capacity to spot or interpret at speed, leading to missed threats or delays. But agentic AI systems, designed to perceive, reason, and act autonomously, can plug the gap, delivering higher levels of digital resilience to an enterprise.
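Below is a minimal sketch of the kind of signal such a system might watch for. It assumes a stream of numeric metrics, such as request latencies. The sketch uses a rolling z-score, which is a simple statistical baseline and not an agentic AI system. The function name and the `window` and `threshold` defaults are illustrative assumptions, not recommended values.

```python
from collections import deque
from statistics import mean, pstdev


def detect_anomalies(values, window=5, threshold=3.0):
    """Return indices of values that deviate sharply from the trailing window.

    A point is flagged when it lies more than `threshold` population standard
    deviations from the mean of the previous `window` values. The first
    `window` points are only used to build the baseline.
    """
    history = deque(maxlen=window)  # trailing values; the oldest drops out automatically
    anomalies = []
    for i, value in enumerate(values):
        if len(history) == window:
            mu = mean(history)
            sigma = pstdev(history)
            deviation = abs(value - mu)
            # A perfectly flat baseline (sigma == 0) flags any change at all.
            if (sigma == 0 and deviation > 0) or (sigma > 0 and deviation / sigma > threshold):
                anomalies.append(i)
        # Flagged points still enter the window here, which widens the baseline
        # for the next few comparisons; a stricter design might exclude them.
        history.append(value)
    return anomalies
```

For example, `detect_anomalies([100, 102, 98, 101, 99, 100, 350, 101, 99])` flags only index 6, the 350 ms spike. A system built on signals like this would still need human review of the actions it takes, in line with the oversight discussed below.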

“Digital resilience is about more than withstanding disruptions,” says Hathi. “It’s about evolving and growing over time. AI agents can work with massive amounts of data and continuously learn from humans who provide safety and oversight. This is a true self-optimizing system.”

Humans in the loop

Despite its potential, agentic AI should be positioned as assistive intelligence. Without proper oversight, AI agents could introduce application failures or security risks.

Defining clear guardrails and keeping humans in the loop are “key to trustworthy and practical use of AI,” Hathi says. “AI can enhance human decision-making, but ultimately, humans are in the driver’s seat.”

This content was produced by Insights, the custom content arm of MIT Technology Review. It was not written by MIT Technology Review’s editorial staff. It was researched, designed, and written by human writers, editors, analysts, and illustrators. This includes the writing of surveys and collection of data for surveys. AI tools that may have been used were limited to secondary production processes that passed thorough human review.