Google released the first phase of its next-generation AI model, Gemini, today. Gemini reflects years of efforts from inside Google, overseen and driven by its CEO, Sundar Pichai.

(You can read all about Gemini in our report from Melissa Heikkilä and Will Douglas Heaven.)

Pichai, who previously oversaw Chrome and Android, is famously product obsessed. In his first founder’s letter as CEO in 2016, he predicted that “[w]e will move from mobile first to an AI first world.” In the years since, Pichai has infused AI deeply into all of Google’s products, from Android devices all the way up to the cloud.

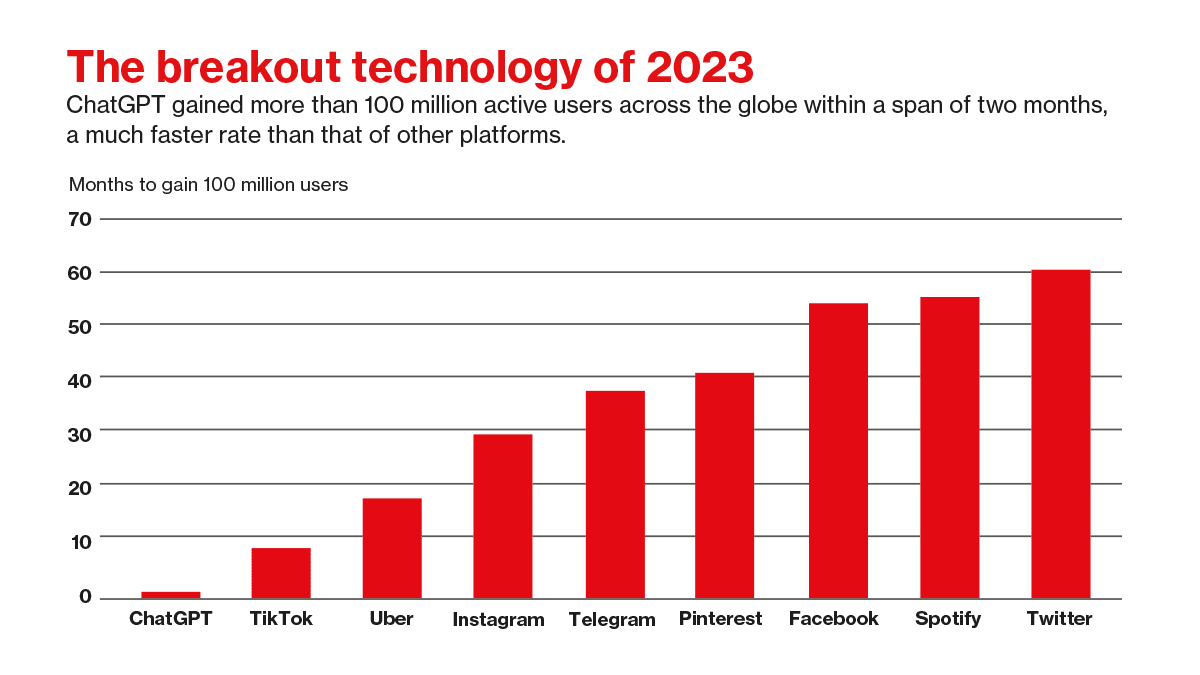

Despite that, the last year has largely been defined by the AI releases from another company, OpenAI. The rollout of DALL-E and GPT-3.5 last year, followed by GPT-4 this year, dominated the sector and kicked off an arms race between startups and tech giants alike.

Gemini is now the latest effort in that race. Development of this state-of-the-art system was led by Google DeepMind, the newly integrated organization headed by Demis Hassabis that brings together the company’s AI teams under one umbrella. You can experience Gemini in Bard today, and it will be integrated across the company’s line of products throughout 2024.

We sat down with Sundar Pichai at Google’s offices in Mountain View, California, on the eve of Gemini’s launch to discuss what it will mean for Google, its products, AI, and society writ large.

The following transcript represents Pichai in his own words. The conversation has been edited for clarity and readability.

MIT Technology Review: Why is Gemini exciting? Can you tell me what’s the big picture that you see as it relates to AI, its power, its usefulness, the direction as it goes into all of your products?

Sundar Pichai: A specific part of what makes it exciting is it’s a natively multimodal model from the ground up. Just like humans, it’s not just learning on text alone. It’s text, audio, code. So the model is innately more capable because of that, and I think will help us tease out newer capabilities and contribute to the progress of the field. That’s exciting.

It’s also exciting because Gemini Ultra is state of the art in 30 of the 32 leading benchmarks, and particularly in the multimodal benchmarks. That MMMU benchmark—it shows the progress there. I personally find it exciting that in MMLU [massive multi-task language understanding], which has been one of the leading benchmarks, it crossed the 90% threshold, which is a big milestone. The state of the art two years ago was 30, or 40%. So just think about how much the field is progressing. Approximately 89% is a human expert across these 57 subjects. It’s the first model to cross that threshold.

I’m excited, also, because it’s finally coming in our products. It’s going to be available to developers. It’s a platform. AI is a profound platform shift, bigger than web or mobile. And so it represents a big step for us from that moment as well.

Let’s start with those benchmarks. It seemed to be ahead of GPT-4 in almost all of them, or most all of them, but not by a lot. Whereas GPT-4 seemed like a very large leap forward. Are we starting to plateau with what we’re going to see some of these large-language-model technologies be able to do, or do you think we will continue to have these big growth curves?

First of all, looking ahead, we do see a lot of headroom. Some of the benchmarks are already high. You have to realize, when you’re trying to go to something from 85%, you’re now at that edge of the curve. So it may not seem like much, but it’s making progress. We are going to need newer benchmarks, too. It’s part of the reason we also looked at the MMMU multimodal benchmark. [For] some of these new benchmarks, the state of the art is still much lower. There’s a lot of progress ahead. The scaling laws are still going to work. As we make the models bigger, there’s going to be more progress. When I take it in the totality of it, I genuinely feel like we are at the very beginning.

I’m interested in what you see as the key breakthroughs of Gemini, and how they will be applied.

It’s so difficult for people to imagine the leaps that will happen. We are providing APIs, and people will imagine it in pretty deep ways.

I think multimodality will be big. As we teach these models to reason more, there will be bigger and bigger breakthroughs. Deeper breakthroughs are to come yet.

One way to think about this question is Gemini Pro. It does very well on benchmarks. But when we put it in Bard, I could feel it as a user. We’ve been testing it, and the favorability ratings go up across all categories pretty significantly. It’s why we’re calling it one of our biggest upgrades yet. And when we do side-by-side blind evaluations, it really shows the outperformance. So you make these better models improve on benchmarks. It makes progress. And we’ll continue training and pick it up from there.

But I can’t wait to put it in our products. These models are so capable. Actually designing the product experiences to take advantage of all that the models have will be exciting for the next few months.

I imagine there was an enormous amount of pressure to get Gemini out the door. I’m curious what you learned by seeing what had happened with GPT-4’s release. What did you learn? What approaches changed in that time frame?

One thing, at least to me: it feels very far from a zero-sum game, right? Think about how profound the shift to AI is, and how early we are. There’s a world of opportunity ahead.

But to your specific question, it’s a rich field in which we are all progressing. There is a scientific component to it, there’s an academic component to it; being published a lot, seeing how models like GPT-4 work in the real world. We have learned from that. Safety is an important area. So in part with Gemini, there are safety techniques we have learned and improved on based on how models are working out in the real world. It shows the importance of various things like fine-tuning. One of the things we showed with Med-PaLM 2 was to take a model like PaLM, to really fine-tune it to a specific domain, show it could outperform state-of-the-art models. And so that was a way by which we learned the power of fine-tuning.

A lot of that is applied as we are working our way through Gemini. Part of the reason we are taking some more time with Ultra [the more advanced version of Gemini that will be available next year] is to make sure we are testing it rigorously for safety. But we’re also fine-tuning it to really tease out the capabilities.

When you see some of these releases come out and people begin tinkering with them in the real world, they’ll have hallucinations, or they can reveal some of the private data that their models are trained on. And I wonder how much of that is inherent in the technology, given the data that it’s trained on, if that’s inevitable. If it is inevitable, what types of things do you try and do to limit that?

You’re right. These are all active fields of research. In fact, we just published a paper which shows how these models can reveal training data by a series of prompts. Hallucination is not a solved problem. I think we are all making progress on it, and there’s more work to be done. There are some fundamental limitations we need to work through. One example is if you take Gemini Ultra, we are actively red-teaming these models with external third parties using it who are specialists in these things.

In areas like multimodality, we want to be bold and we want to be responsible. We will be more careful with multimodal rollouts, because the chances of wrong use cases are higher.

But you are right in the sense that it is still a technology which is work in progress, which is why they won’t make sense for everything. Which is why in search, we are being more careful about how we use it, and when and what, where we use it, and then when we trigger it. They have these amazing capabilities, and they have clear shortcomings. This is the hard work ahead for all of us.

Do you think ultimately this is going to be a solved problem—hallucinations, or with revealing other training data?

With the current technology of auto-regressive LLMs, hallucinations are not a solved problem. But future AI systems may not look like what we have today. This is one version of technology. It’s like when people thought there is no way you can fit a computer in your pocket. There were people who were really opinionated, 20 years ago. Similarly, people look at these systems and say you can’t design better ones. I don’t subscribe to that view. There are already many research explorations underway to think about how else to approach these problems.

You’ve talked about how profound a shift this is. In some of these last shifts, like the shift to mobile, it didn’t necessarily increase productivity, which has been flat for a long time. I think there’s an argument that it may have even worsened income inequality. What type of work is Google doing to try to make sure that this shift is more widely beneficial to society?

It’s a very important question. I think about it on a few levels. One thing at Google we’ve always been focused on is: How do we get technology access as broadly available as possible? So I would argue even in the case of mobile, the work we do with Android—hundreds of millions of people wouldn’t have otherwise had computing access. We work hard to push toward an affordable smartphone, to maybe sub-$50.

So making AI helpful for everyone is the framework I think about. You try to promote access to as many people as possible. I think that’s one part of it.

We are thinking deeply about applying it to use cases which can benefit people. For example, the reason we did flood forecasting early on is because we realized, AI can detect patterns and do it well. We’re using it to translate 1,000 languages. We’re literally trying to bring content now in languages where otherwise you wouldn’t have had access.

This doesn’t solve all the problems you’re talking about. But being deliberate about when and where, what kind of problems you’re going to focus on—we’ve always been focused on that. Take areas like AlphaFold. We have provided an open database for viruses everywhere in the world. But … who uses it first? Where does it get sold? AI is not going to magically make things better on some of the more difficult issues like inequality; it could exacerbate it.

But what is important is you make sure that technology is available for everyone. You’re developing it early and giving people access and engaging in conversation so that society can think about it and adapt to it.

We’ve definitely, in this technology, participated earlier on than other technologies. You know, the recent UK AI Safety Forum or work in the US with Congress and the administration. We are trying to do more public-private partnerships, pulling in nonprofit and academic institutions earlier.

Impacts on areas like jobs need to be studied deeply, but I do think there are surprises. There’ll be surprising positive externalities, there’ll be negative externalities too. Solving the negative externalities is larger than any one company. It’s the role of all the stakeholders in society. So I don’t have easy answers there.

I can give you plenty of examples of the benefits mobile brings. I think that will be true of this too. We already showed it with areas like diabetic retinopathy. There are just not enough doctors in many parts of the world to detect it.

Just like I felt giving people access to Google Search everywhere in the world made a positive difference, I think that’s the way to think about expanding access to AI.

There are things that are clearly going to make people more productive. Programming is a great example of this. And yet, that democratization of this technology is the very thing that is threatening jobs. And even if you don’t have all the answers for society—and it’s not incumbent on one company to solve society’s problems—one company can put out a product that can dramatically change the world and have this profound impact.

We never offered facial-recognition APIs. But people built APIs and the technology moves forward. So it is also not in any one company’s hands. Technology will move forward.

I think the answer is more complex than that. Societies can also get left behind. If you don’t adopt these technologies, it could impact your economic competitiveness. You could lose more jobs.

I think the right answer is to responsibly deploy technology and make progress and think about areas where it can cause disproportionate harm and do work to mitigate it. There will be newer types of jobs. If you look at the last 50, 60 years, there are studies from economists from MIT which show most of the new jobs that have been created are in new areas which have come since then.

There will be newer jobs that are created. There will be jobs which are made better, where some of the repetitive work is freed up in a way that you can express yourself more creatively. You could be a doctor, you could be a radiologist, you could be a programmer. The amount of time you’re spending on routine tasks versus higher-order thinking—all that could change, making the job more meaningful. Then there are jobs which could be displaced. So, as a society, how do you retrain, reskill people, and create opportunities?

The last year has really brought out this philosophical split in the way people think we should approach AI. You could talk about it as being safety first or business use cases first, or accelerationists versus doomers. You’re in a position where you have to bridge all of that philosophy and bring it together. I wonder what you personally think about trying to bridge those interests at Google, which is going to be a leader in this field, into this new world.

I’m a technology optimist. I have always felt, based on my personal life, a belief in people and humanity. And so overall, I think humanity will harness technology to its benefit. So I’ve always been an optimist. You’re right: a powerful technology like AI—there is a duality to it.

Which means there will be times we will boldly move forward because I think we can push the state of the art. For example, if AI can help us solve problems like cancer or climate change, you want to do everything in your power to move forward fast. But you definitely need society to develop frameworks to adapt, be it to deepfakes or to job displacement, etc. This is going to be a frontier—no different from climate change. This will be one of the biggest things we all grapple with for the next decade ahead.

Another big, unsettled thing is the legal landscape around AI. There are questions about fair use, questions about being able to protect the outputs. And it seems like it’s going to be a really big deal for intellectual property. What do you tell people who are using your products, to give them a sense of security, that what they’re doing isn’t going to get them sued?

These are not all topics that will have easy answers. When we build products, like Search and YouTube and stuff in the pre-AI world, we’ve always been trying to get the value exchange right. It’s no different for AI. We are definitely focused on making sure we can train on data that is allowed to be trained on, consistent with the law, giving people a chance to opt out of the training. And then there’s a layer about that—about what is fair use. It’s important to create value for the creators of the original content. These are important areas. The internet was an example of it. Or when e-commerce started: How do you draw the line between e-commerce and regular commerce?

I think new legal frameworks will be developed over time as this area evolves. But meanwhile, we will work hard to be on the right side of the law and make sure we also have deep relationships with many providers of content today. There are some areas where it’s contentious, but we are working our way through those things, and I am committed to working to figure it out. We have to create that win-win ecosystem for all of this to work over time.

Something that people are very worried about with the web now is the future of search. When you have a type of technology that just answers questions for you, based on information from around the web, there’s a fear people may no longer need to visit those sites. This also seems like it could have implications for Google. I also wonder if you’re thinking about it in terms of your own business.

One of the unique value propositions we’ve had in Search is we are helping users find and learn new things, find answers, but always with a view of sharing with them the richness and the diversity that exists on the web. That will be true, even as we go through our journey with Search Generative Experience. It’s an important principle by which we are developing our product. I don’t think people always come to Search saying, “Just answer it for me.” There may be a question or two for which you may want that, but even then you come back, you learn more, or even in that journey, go deeper. We constantly want to make sure we are getting it right. And I don’t think that’s going to change. It’s important that we get the balance right there.

Similarly, if you deliver value deeply, there is commercial value in what you’re delivering. We had questions like this from desktop to mobile. It’s not new to us. I feel comfortable based on everything we are seeing and how users respond to high-quality ads. YouTube is a good example where we have developed subscription models. That’s also worked well.

How do you think people’s experience is going to change next year, as these products really begin to hit the marketplace and people begin to interact with them?

I think a year out from now, anybody starting on something in Google Docs will expect something different. And if you give it to them, and later put them back in the version of Google Docs we had, let’s say, in 2022, they will find it so out of date. It’s like, for my kids, if they don’t have spell-check, they fundamentally will think it’s broken. And you and I may remember what it was to use these products before spell-check. But more than any other company, we’ve incorporated so much AI in Search, people take it for granted. That’s one thing I’ve learned over time. They take it for granted.

In terms of what new stuff people can do, as we develop the multimodal capabilities, people will be able to do more complex tasks in a way that they weren’t able to do before. And there’ll be real use cases which are way more powerful.

Correction: This story was updated to fix transcription errors. Notably, MMMU was incorrectly transcribed as MMLU, and search generative experience originally appeared as search related experience.