SEO For Paws Live Stream Conference To Support Pet Shelters In Ukraine via @sejournal, @theshelleywalsh

One of the industry’s best-known influencers is organizing SEO for Paws, a live-streamed fundraiser on February 29, 2024. The speaker list includes many of the top professionals in SEO.

Anton Shulke is best known as the head of influencer marketing at Duda and an expert at organizing live-stream events.

When the war broke out in Ukraine, Anton was living in Kyiv. He kept running live events without interruption while escaping the conflict with his family and cat.

Even though Anton managed to leave the city, he has tirelessly continued his support for his favorite charity, which aids the many pets that were left behind in Kyiv after the war broke out.

SEO for Paws will mark two years since the war broke out in Ukraine. Anton has organized the event to provide even more support to the charity.

All proceeds will go to animal shelters in Ukraine that care for cats and dogs.

An Inspirational Journey With His Family And Dynia The Cat

When war broke out, Shulke was living in Kyiv with his wife, children, and cat, Dynia.

As events rapidly unfolded, Anton kept his friends informed through his daily #coffeeshot posts on social media.

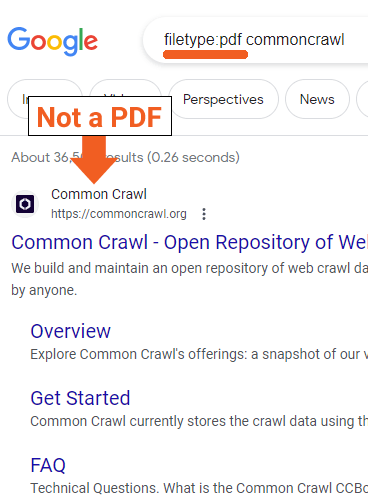

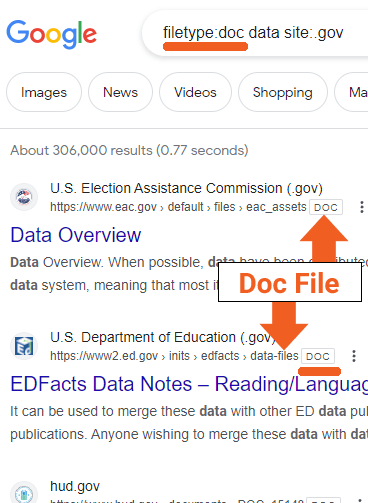

Image from Anton Shulke, February 2024

Image from Anton Shulke, February 2024

As the situation in Kyiv grew more serious, his frequent updates became a lifeline for his friends and network in SEO, who waited anxiously each day for news of his safety.

After the family got out of Ukraine and traveled across Europe, it was actually Dynia who chose their final destination: Spain, where Anton sought asylum with his family.

Though facing personal hardships, Shulke has remained dedicated to Ukraine’s small, donation-dependent pet shelters and vulnerable animals.

Shulke shares:

“Before the war, I tried to help those small cats and dogs shelters, but just a bit.

We are talking about super small shelters, 30-100 animals, they are in private flats. Sometimes in tiny flats, like one-bedroom or even studio flats. And the owner lives there; often, it is family.”

These tiny shelters operate entirely on donations as they do not receive government funding or support from large charities.

The war has made their situation even more dire, with increased animal abandonment and limited resources.

SEO For Paws – Cat Lovers, Dog Lovers, And SEO

The upcoming “SEO for Paws” live stream aims to continue these fundraising efforts. The event runs for roughly four and a half hours, from 10:55 a.m. to 3:30 p.m. ET, and will offer actionable SEO and digital marketing advice from experts while raising money for the animal shelters.

Headline speakers who have donated their time to support his cause include Navah Hopkins, Dixon Jones, Ashley Segura, Barry Schwartz, Glenn Gabe, Arnout Hellemans, and Grant Simmons, among others.

Attendance is free, but participants are encouraged to donate.

Event Highlights

- Date and Time: February 29, 2024, from 10:55 a.m. to 3:30 p.m. ET (3:55 p.m. to 8:30 p.m. GMT).

- Access: Free registration with the option to join live, participate in Q&A sessions, and network with peers. A recording will be made available on YouTube.

- Speakers: The live stream will feature 17 SEO and digital marketing experts, as well as some “special furry guests,” who will share actionable insights into SEO strategies, PPC tips, and content creation hacks.

- Networking Opportunities: Attendees will have the chance to interact with experts and colleagues through a live chat during the event.

The event page states:

“Join us for an extraordinary live stream dedicated to supporting Ukrainian cat and dog shelters while diving deep into the world of SEO and digital marketing. Whether you’re a cat person, a dog lover, or simply passionate about SEO, there’s something here for everyone!”

How To Make A Difference

The “SEO for Paws” live stream is an opportunity to make a meaningful difference while listening to excellent speakers.

All money raised is donated to help cats and dogs in Ukraine.

You can register for the event here.

And you can help support the charity by buying coffee.

Search Engine Journal is proud to be sponsoring the event.


Featured Image: savitskaya iryna/Shutterstock