“Should we use ChatGPT, Gemini, or Claude to create trending content?”

That’s a good question, and one I’m frequently asked when I teach live classes about engaging audiences through content.

You might mistakenly assume that instructors are supposed to answer questions like that. But my colleagues and I do something different.

We give our students relevant assignments that provide them with the opportunity to discover the answers to good questions for themselves.

This test-and-learn approach is something that you can also apply within your own company, agency, or team. Want to know which generative AI model to use to create trending content?

Well, conduct a test and compare the output.

Write Concise Descriptions Of The Most Emotionally Engaging Video Ads Of Euros 2024

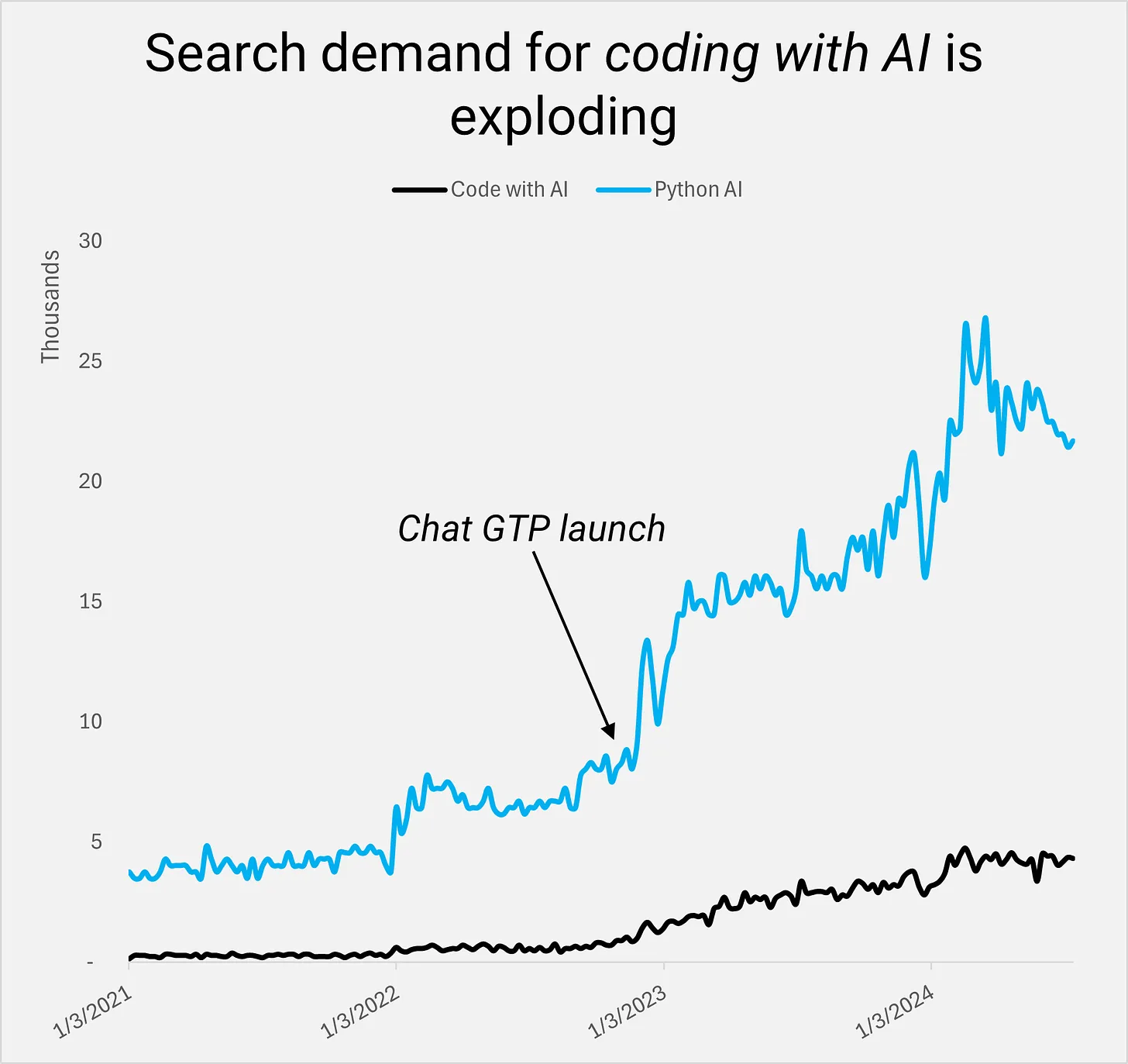

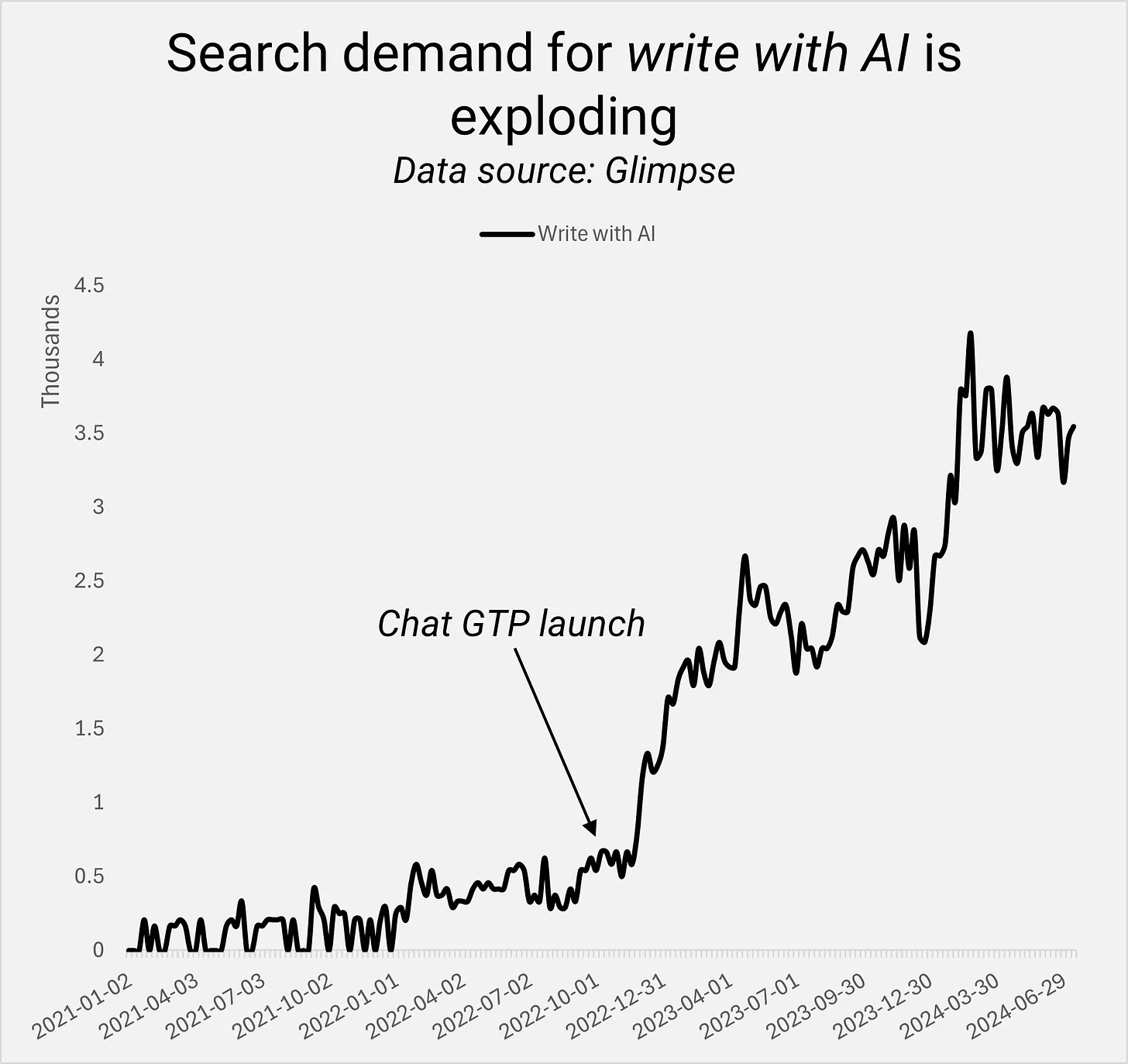

You already know how to use Google Trends to identify search terms or topics that are trending now. In fact, many journalists use this free tool to get story ideas.

Over the long Fourth of July weekend, I used Google Trends and saw that the topic “Euros 2024” ranked No. 1 in Trending Searches with more than 1 million queries.

Next, I decided to avoid using OpenAI’s ChatGPT 3.5 for my tests. Why? Because it’s the most popular generative AI model, which means that your competitors are probably using it, too.

That means the content this tool creates is less likely “to provide substantial value when compared to other pages in search results,” as Google’s long-standing guidance puts it.

ChatGPT Output

So, I explored the custom versions of ChatGPT and selected Write For Me by puzzle.today.

Why? Because it ranked No. 1 in the Writing category. The tool also claimed it would help me “write tailored, engaging content with a focus on quality, relevance and precise word count.”

I want to create better content, not just content that is different from what everyone else is creating. So, I asked Write For Me to “write concise descriptions of the most emotionally engaging video ads of Euros 2024.”

Here’s what this generative AI tool produced:

1. Unity Through Football

“A diverse group of children from different European countries come together on a pristine football pitch. As they play, the camera captures their joy and camaraderie, transcending language and cultural barriers. The ad concludes with a montage of professional players joining the children, emphasizing football's power to unite nations and foster friendship.”

2. Legacy of Legends

“The ad begins with black-and-white footage of iconic moments from past Euros, highlighting legendary players. It transitions to vibrant, modern clips of current stars recreating these moments, symbolizing the passing of the torch. A heartfelt narration speaks to the continuity of dreams and the legacy carried by each generation.”

3. Homecoming

“A montage of fans traveling from various parts of Europe to the host country, capturing their excitement and anticipation. Emotional reunions with fellow fans, old friends, and family members create a sense of belonging and community. The ad closes with a panoramic shot of a stadium filled with cheering supporters, underscoring the tournament's role in bringing people together.”

4. The Dream Begins

“The ad follows a young aspiring footballer practicing tirelessly, inspired by their favorite Euros moments. Interspersed with clips of professional matches, it shows the child's journey from local games to a stadium filled with fans. The message is clear: dreams start small but can lead to greatness, encouraging viewers to pursue their passions.”

5. Heartbeat of the Game

“This ad features a rhythmic sequence of football-related sounds: the thud of a ball, cheers from the crowd, and the whistle of the referee. These sounds build into a symphony, interspersed with clips of players’ intense focus and emotional celebrations. The heartbeat sound underscores the passion and excitement of the Euros, resonating with fans’ own emotions.”

6. From Streets to Stadiums

“Children playing football in urban alleys, rural fields, and makeshift pitches across Europe are showcased. Their raw passion and joy are mirrored by professional players in grand stadiums, blurring the lines between dreams and reality. The ad concludes with a powerful message: football belongs to everyone, from the streets to the grandest arenas.”

Now, it’s perfectly clear why video ads with these descriptions would be emotionally engaging, but it is frustratingly unclear which brands are running these ads during Euros 2024.

So, I’d have to do some double-checking to make sure that ChatGPT isn’t hallucinating.

Gemini Output

Next, I gave Google’s Gemini (formerly Bard) the same prompt. Here’s what this generative AI model produced:

1. Adidas: "Hey Jude" (Most Engaging)

“This ad features rising England star Jude Bellingham alongside football legends like David Beckham and Frank Lampard. It taps into positive emotions by showcasing the passing of the torch and the enduring spirit of the game.”

2. Irn-Bru: "Get to Germany"

“This ad, likely using humor, aims to create positive feelings and excitement around the Euros being held in Germany.”

3. Budweiser: "Bring it Home"

“This ad uses a patriotic theme, likely aiming to inspire viewers and evoke a sense of national pride for their team's chance to win the Euros.”

It’s perfectly clear which brands are running the most emotionally engaging video ads of Euros 2024, but it’s still unclear which methodology Gemini is using to make this selection.

So, I’d still have to do more due diligence to discover that its source is probably recent articles and posts about new research from DAIVID.

Claude Output

I asked Anthropic’s Claude 3.5 Sonnet to “write concise descriptions of the most emotionally engaging video ads of Euros 2024.” Here’s what this generative AI tool replied:

“I apologize, but I don't have access to specific information about video ads from Euro 2024, as my knowledge cutoff is in April 2024, and I can't browse the internet for current information.”

“I can, however, offer some general thoughts on what makes emotionally engaging soccer/football ads:”

“Underdog stories of players or teams overcoming adversity.”

“Nostalgic montages showcasing historic Euro moments.”

“Depictions of passionate fans and their connection to the sport.”

“Inspirational messages about unity and international cooperation.”

“Cinematic recreations of iconic goals or plays.”

“If you'd like me to expand on any of these concepts or discuss emotionally engaging sports ads in general, I'd be happy to do so. For accurate information about Euro 2024 ads, I'd recommend checking official UEFA sources or recent sports media coverage.”

Well, Claude’s honesty is admirable. But its knowledge cutoff means that I shouldn’t use this generative AI model for content about fairly recent trends.

Write Short Captions For The Funniest Photos From The 2024 Nathan’s Hot Dog Eating Contest

For my second test, I used Google’s autocomplete feature to confirm that the phrase [Nathan’s hot dog eating contest] was a trending search term.

Then, I asked each of the three generative AI tools to “write short captions for the funniest photos from the 2024 Nathan’s Hot Dog Eating Contest.”

ChatGPT Output

Here’s what Write For Me generated:

“Captions for Funniest Photos from the 2024 Nathan’s Hot Dog Eating Contest:”

"When you realize you're only halfway through your 50 hot dogs."

"Eyes on the prize: the ketchup bottle!"

"Training for this by swallowing my pride daily."

"When the bun hits you harder than the hot dog."

"Mustard not? I mustered up the courage!"

"New strategy: inhale, don’t chew."

"Hot dogs: 10, Dignity: 0."

"When you think it’s the last dog but it’s not."

"The face you make when you beat your personal best... by 1 dog."

"Calories don’t count if you don’t chew, right?"

Okay, I admit that many of these captions are funny. But it’s difficult to tell if they belong to actual photos or if this custom version of ChatGPT hallucinated the entire list.

And don’t get me started on getting permission to use any photos that exist. Of course, I could always use DALL-E 3 to turn the text above into images.

Gemini Output

Next, I gave Gemini the same assignment. Here’s what it generated:

1. Mustard Mishap: "Someone's about to be redecorated in yellow!" (Photo of a competitor with mustard splattered all over their face).

2. Relish the Moment: "This is what pure competitive joy looks like." (Photo of a competitor mid-devour with a look of intense concentration and relish dripping down their chin).

3. Bun Bungee: "Is that a hot dog or a bungee jumper? Defying the laws of physics, one frankfurter at a time." (Photo of a competitor with a hot dog halfway down their esophagus).

4. Fountain of Fury: "Looks like someone forgot their manners! (But they're probably winning.)" (Photo of a competitor with a spray of water and ketchup erupting from their mouth).

5. Doggy Downtime: "Even champions gotta rest sometimes. (Just don't let Joey Chestnut see this.)" (Photo of a competitor slumped back in their chair with a belly full of hot dogs).

Um, okay. These are also funny captions.

But it’s hard to know if they go with actual photos that were posted on July 4, 2024, without using Google Images to conduct a series of relevant searches. And even if those photos do exist, that still doesn’t give me permission to use them.

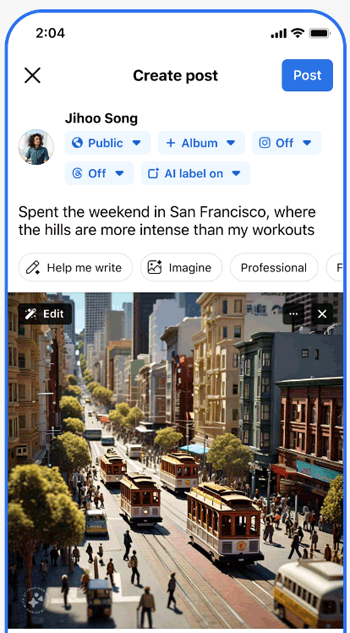

[Note: These responses were generated a few days before Google announced its support for labeling AI-generated images.]

Claude Output

Then, I asked Claude 3.5 Sonnet to “write short captions for the funniest photos from the 2024 Nathan’s Hot Dog Eating Contest.” Here’s what it replied:

“I apologize, but I don't have access to specific photos from the 2024 Nathan's Hot Dog Eating Contest, as my knowledge cutoff is in April 2024. Additionally, I can't generate, produce, edit, manipulate or create images.”

“However, I can suggest some hypothetical humorous captions that might be appropriate for funny moments at a hot dog eating contest:”

"When the hot dogs fight back"

"Regretting that 50th hot dog"

"Mid-contest nap: Expert level achieved"

"Ketchup everywhere... except on the hot dogs"

"When you realize it's only been 2 minutes"

"The face of a champion... or indigestion"

"Spectators' faces: Equal parts horror and awe"

"When the bun-to-dog ratio is all wrong"

"Competitive eating: Where table manners go to die"

"That moment you question all your life choices"

“Remember, these are fictional captions for imaginary photos. For accurate information about the 2024 contest, you'd need to check official sources or news reports from after the event.”

Once again, Claude gets high marks for being honest about its limitations.

But unless I want to use some of these hypothetical captions as prompts in Adobe Firefly, I need to use one of the other options.

Write A 400-Word Blog Post About The Top Omni Channel Retailing Trends Of 2024

For my third test, I used SparkToro to research the audience of 5,000 to 6,000 people who search for the keyword [customer retention] each month.

I used SparkToro’s trending keywords feature to analyze the search terms that have risen in popularity among searchers for “customer retention” over the last quarter.

Then, I selected the trending keyword, “omni channel retailing trends,” for this test.

ChatGPT Output

When I asked Write For Me to “write a 400-word blog post about the top omni channel retailing trends of 2024,” here’s what this generative AI tool produced:

Top Omnichannel Retailing Trends of 2024

“As we move into 2024, the landscape of retail continues to evolve rapidly, driven by technological advancements and changing consumer expectations. Omnichannel retailing, which integrates various shopping channels into a seamless customer experience, remains at the forefront of this evolution. Here are the top omnichannel retailing trends to watch out for in 2024.”

1. Enhanced Personalization Through AI and Data Analytics

“In 2024, personalization goes beyond just using the customer’s name in emails. Retailers are leveraging advanced AI and data analytics to offer hyper-personalized shopping experiences. By analyzing consumer behavior, preferences, and purchase history, retailers can tailor recommendations, promotions, and content to individual customers. This level of personalization enhances customer satisfaction and increases conversion rates.”

2. Seamless Integration of Physical and Digital Channels

“The line between online and offline shopping continues to blur. In 2024, more retailers are perfecting the integration of physical and digital channels. Innovations like in-store augmented reality (AR) mirrors, smart fitting rooms, and mobile app functionalities that enhance in-store experiences are becoming standard. Additionally, services like click-and-collect and in-store returns for online purchases are expected to become more streamlined and widespread.”

3. Expansion of Mobile Commerce (M-Commerce)

“Mobile commerce continues to grow as consumers increasingly use their smartphones for shopping. Retailers are optimizing their mobile websites and apps to provide faster, more intuitive, and secure shopping experiences. Features like one-click purchasing, mobile wallets, and personalized push notifications are becoming essential components of mobile strategies.”

4. Social Commerce Revolution

“Social media platforms are transforming into powerful retail channels. In 2024, social commerce, where shopping experiences are embedded within social media, is set to explode. Platforms like Instagram, Facebook, and TikTok are enhancing their shopping functionalities, allowing users to discover, browse, and purchase products without leaving the app. Influencer marketing and user-generated content continue to drive this trend, making social media a critical part of omnichannel strategies.”

5. Sustainable and Ethical Retailing

“Consumers are increasingly demanding sustainability and transparency from the brands they support. In response, retailers are incorporating sustainable practices and ethical sourcing into their omnichannel strategies. This includes offering eco-friendly products, reducing packaging waste, and providing transparency about product origins and manufacturing processes. Brands that can effectively communicate their sustainability efforts through all channels will gain a competitive edge.”

6. Voice Commerce and Conversational AI

“Voice-activated shopping and conversational AI are becoming more prevalent. With the rise of smart speakers and virtual assistants, consumers are using voice commands to search for products, place orders, and track deliveries. Retailers are integrating voice commerce capabilities into their omnichannel strategies to enhance convenience and improve customer engagement.”

Conclusion

“In 2024, the retail industry is embracing these omnichannel trends to create more cohesive and engaging shopping experiences. By focusing on personalization, integrating physical and digital channels, leveraging mobile and social commerce, prioritizing sustainability, and adopting voice commerce, retailers can stay ahead in a competitive market. These trends highlight the importance of flexibility and innovation in meeting the evolving needs of modern consumers.”

Um, okay. It’s 498 words long, nearly 100 words more than I asked for. And the content seems designed for publication at the beginning of the year.

The blog post is also missing citations from reliable sources, credible quotes, and relevant statistics, the three elements that Roger Montti’s article, “Researchers Discover How To SEO For AI Search,” flagged back on Dec. 18, 2023.

So, it’s not bad for a first draft. But I’d hope that writers who use this custom version of ChatGPT would learn that they need to craft something a little bit better.

Gemini Output

Next, I asked Gemini to tackle the same assignment. Here’s what this generative AI tool produced:

The Future of Shopping: Top Omnichannel Retail Trends in 2024

“The retail landscape is ever-evolving, and 2024 is no exception. Consumers today expect a seamless shopping experience, regardless of whether they're browsing online, visiting a physical store, or interacting with a brand on social media. This is where omnichannel retail thrives. By creating a unified experience across all touchpoints, retailers can build stronger customer loyalty and drive sales.”

“Let's delve into the top omnichannel retail trends shaping the industry in 2024:”

1. The Rise of the Data-Driven Experience:

“With the demise of third-party cookies, retailers are focusing on collecting and utilizing first-party customer data. This data allows for real-time personalization across all channels. Imagine receiving targeted email promotions based on your recent in-store purchases, or seeing personalized product recommendations on a brand's app. Advanced analytics will not only personalize marketing efforts but also optimize search functionalities and product merchandising on e-commerce platforms.”

2. AI Takes Center Stage:

“Artificial intelligence (AI) is rapidly transforming the retail experience. AI-powered chatbots can answer customer queries 24/7, recommend products based on browsing history, and even assist with in-store tasks. Generative AI can create personalized marketing content at scale, while AI-driven sales automation streamlines processes for both retailers and customers.”

3. The Blur Between Physical and Digital:

“The lines between online and offline shopping are continuing to blur. We're seeing a rise in ‘phygital’ experiences, such as augmented reality (AR) apps that allow customers to virtually try on clothes or visualize furniture in their homes. In-store kiosks can facilitate online purchases and allow for easy returns or exchanges. Additionally, ‘showrooming,’ where customers browse in-store and then purchase online, necessitates seamless product availability and pricing across channels.”

4. Social Commerce Takes Off:

“Social media platforms are no longer just for browsing; they're becoming powerful shopping destinations. ‘Live shopping’ experiences with influencers showcasing products are gaining traction, while social media platforms are integrating smoother buying functionalities within their apps. User-generated content and social proof will continue to influence purchasing decisions, making social media a crucial touchpoint in the omnichannel journey.”

5. Personalization Beyond Products:

“The future of omnichannel retail goes beyond just product recommendations. Retailers are focusing on creating personalized experiences that cater to individual customer preferences. This could involve offering flexible delivery options, loyalty programs with tiered benefits, or the ability to curate in-store shopping experiences through appointments or personalized product selections.”

Conclusion:

“In today's competitive retail environment, omnichannel isn't just a trend; it's a necessity. By embracing these trends and prioritizing a data-driven, personalized approach, retailers can not only survive but thrive in the ever-evolving landscape of commerce.”

Well, the headline is better, and at 433 words, the post is closer to what I’d asked for. But this blog post is also missing citations from reliable sources, credible quotes, and relevant statistics.

So, I’d hope writers would learn the same lesson from using Gemini that they learned from using a custom version of ChatGPT: It’s not bad, but a human with experience and expertise can do better.

Claude Output

Finally, I asked Claude 3.5 Sonnet to “write a 400-word blog post about the top omni channel retailing trends of 2024.” Here’s what this generative AI tool produced:

Top Omnichannel Retailing Trends Shaping 2024

“As we navigate through 2024, the retail landscape continues to evolve rapidly. Omnichannel strategies have become more crucial than ever, with consumers expecting seamless experiences across all touchpoints. Let's explore the key trends defining omnichannel retailing this year.”

1. AI-Powered Personalization: “Artificial intelligence is revolutionizing how retailers understand and cater to individual customer preferences. In 2024, we're seeing more sophisticated AI algorithms that analyze customer data across channels to deliver hyper-personalized recommendations, offers, and experiences.”

2. Augmented Reality Integration: “AR is bridging the gap between online and offline shopping. Retailers are leveraging AR technology to allow customers to virtually try on clothes, visualize furniture in their homes, or explore products in detail before making a purchase decision.”

3. Social Commerce Acceleration: “Social media platforms are increasingly becoming direct sales channels. Retailers are optimizing their presence on platforms like Instagram, TikTok, and Pinterest, enabling in-app purchases and creating shoppable content that seamlessly integrates with users' social experiences.”

4. Sustainable and Ethical Omnichannel Practices: “Consumers are more environmentally conscious than ever. Retailers are responding by implementing sustainable practices across their omnichannel operations, from eco-friendly packaging to transparent supply chains and ethical sourcing information available across all channels.”

5. Voice Commerce Integration: “With the growing adoption of smart speakers and voice assistants, retailers are optimizing their digital presence for voice search and enabling voice-activated purchases, adding another dimension to the omnichannel experience.”

6. Phygital Experiences: “The blend of physical and digital experiences, or ‘phygital,’ is gaining traction. Retailers are creating innovative in-store digital touchpoints and bringing elements of the physical store experience to online platforms.”

7. Unified Customer Data Platforms: “Retailers are investing in advanced customer data platforms that consolidate information from all channels, providing a single view of the customer and enabling more cohesive marketing and service strategies.”

8. Flexible Fulfillment Options: “The line between online and offline continues to blur with the expansion of services like buy online, pick up in-store (BOPIS), curbside pickup, and same-day delivery. Retailers are optimizing their logistics to offer customers maximum flexibility.”

9. Subscription-Based Models: “More retailers are incorporating subscription services into their omnichannel strategies, providing regular product deliveries or exclusive access to content and experiences across multiple platforms.”

10. Advanced Analytics for Real-Time Insights: “Retailers are leveraging advanced analytics tools to gain real-time insights into customer behavior across channels, allowing for quick adjustments to inventory, pricing, and marketing strategies.”

“As these trends show, the future of retail lies in creating cohesive, customer-centric experiences that seamlessly blend online and offline touchpoints. Retailers who successfully adapt to these omnichannel trends will be well-positioned to thrive in the dynamic retail environment of 2024 and beyond.”

Claude’s content is 443 words long, which is longer than Gemini’s but shorter than the custom version of ChatGPT’s. And Claude generated 10 trends, while ChatGPT generated six and Gemini generated five.

But just like the other generative AI tools, Claude’s blog post is missing citations from reliable sources, credible quotes, and relevant statistics.

So, Claude is also good at creating a rough draft. But I’d hope that writers who use ChatGPT, Gemini, and Claude would learn that they shouldn’t become the tool of their tools.
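If you repeat this test-and-learn exercise with your own prompts, a small script can make the length comparison explicit. The Python sketch below is a hypothetical helper, not something used to produce the results above. It plugs in the word counts reported for the 400-word prompt and assumes a simple whitespace split for counting words, which may not match how those counts were originally measured.

```python
# Hypothetical helper for comparing each draft's length with a requested word count.
TARGET_WORDS = 400

# Word counts reported in this test for the "400-word blog post" prompt.
REPORTED_COUNTS = {
    "ChatGPT (Write For Me)": 498,
    "Gemini": 433,
    "Claude 3.5 Sonnet": 443,
}


def count_words(text: str) -> int:
    """Approximate word count: splits on any run of whitespace (an assumption)."""
    return len(text.split())


def percent_over_target(count: int, target: int = TARGET_WORDS) -> float:
    """Percentage by which a draft exceeds (+) or falls short of (-) the target."""
    return (count - target) / target * 100


# Print the drafts from closest to farthest from the requested length.
for tool, words in sorted(REPORTED_COUNTS.items(), key=lambda item: abs(item[1] - TARGET_WORDS)):
    print(f"{tool}: {words} words ({percent_over_target(words):+.1f}% vs. {TARGET_WORDS})")
```

With these numbers, the drafts sort as Gemini (33 words over), Claude (43 over), and ChatGPT (98 over). Pasting a draft into `count_words` gives a rough check before publishing, but editors and word processors may count headings, numbers, or hyphenated terms differently.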

AI Is Not Going To Take Your Job

A year ago, Jensen Huang, the founder, president, and CEO of Nvidia, said,

“AI is not going to take your job. The person who uses AI is going to take your job.”

Well, a year later, we’ve learned by comparing the content output generated by ChatGPT, Gemini, and Claude that it’s still smarter to use generative AI tools to brainstorm or create a good first draft.

Then, use your experience, expertise, authoritativeness, and trustworthiness (E-E-A-T) to add emotionally engaging videos, eye-catching photos, citations from reliable sources, credible quotes, and relevant statistics to your content.

Why? Because this is the best way to provide substantial value when compared to other pages in search results.


Featured Image: jomel alos/Shutterstock