Jono Alderson, former head of SEO at Yoast and now at Meta, spoke on the Majestic Podcast about the state of SEO, offering insights on the decline of traditional content strategies and the rise of AI-driven search. He shared what SEOs should be doing now to succeed in 2025.

Decline Of Generic SEO Content

Generic keyword-focused SEO content, like every other SEO tactic, has long been on a slow decline, arguably beginning with statistical analysis, continuing through machine learning and now reaching the age of AI-powered search. Citations from Google are now more precise and multimodal.

Alderson makes the following points:

- Writing content for the sake of ranking is becoming obsolete because AI-driven search results provide those answers.

- Many industries and topics, such as dentistry and recipe sites, are oversaturated with nearly identical content that doesn’t add value.

According to Alderson:

“…every single dentist site I looked at had a tedious blog that was quite clearly outsourced to a local agency that had an article about Top 8 tips for cosmetic dentistry, etc.

Maybe you zoom out how many dentists are there in every city in the world, across how many countries, right? Every single one of those websites has the same mediocre article that somebody has done some keyword research. Spotted a gap they think they can write one that’s slightly better than their competitors. And yet in aggregate, we’ve created 10 million pages that none of which show the purpose, all of which are fundamentally the same, none of which are very good, none of which add new value to the corpus of the Internet.

All of that stops working because Google can just answer those kinds of queries in situ.”

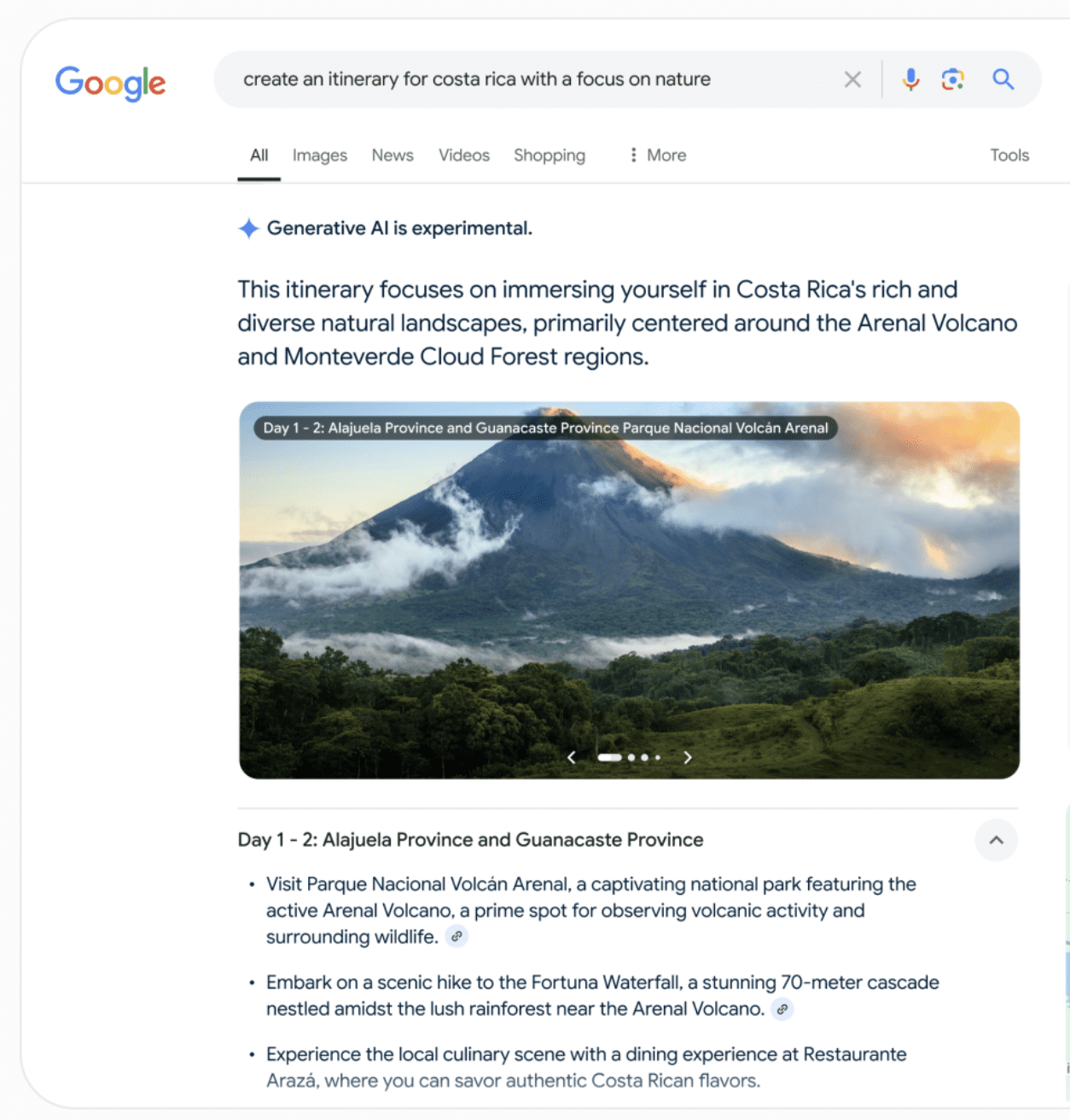

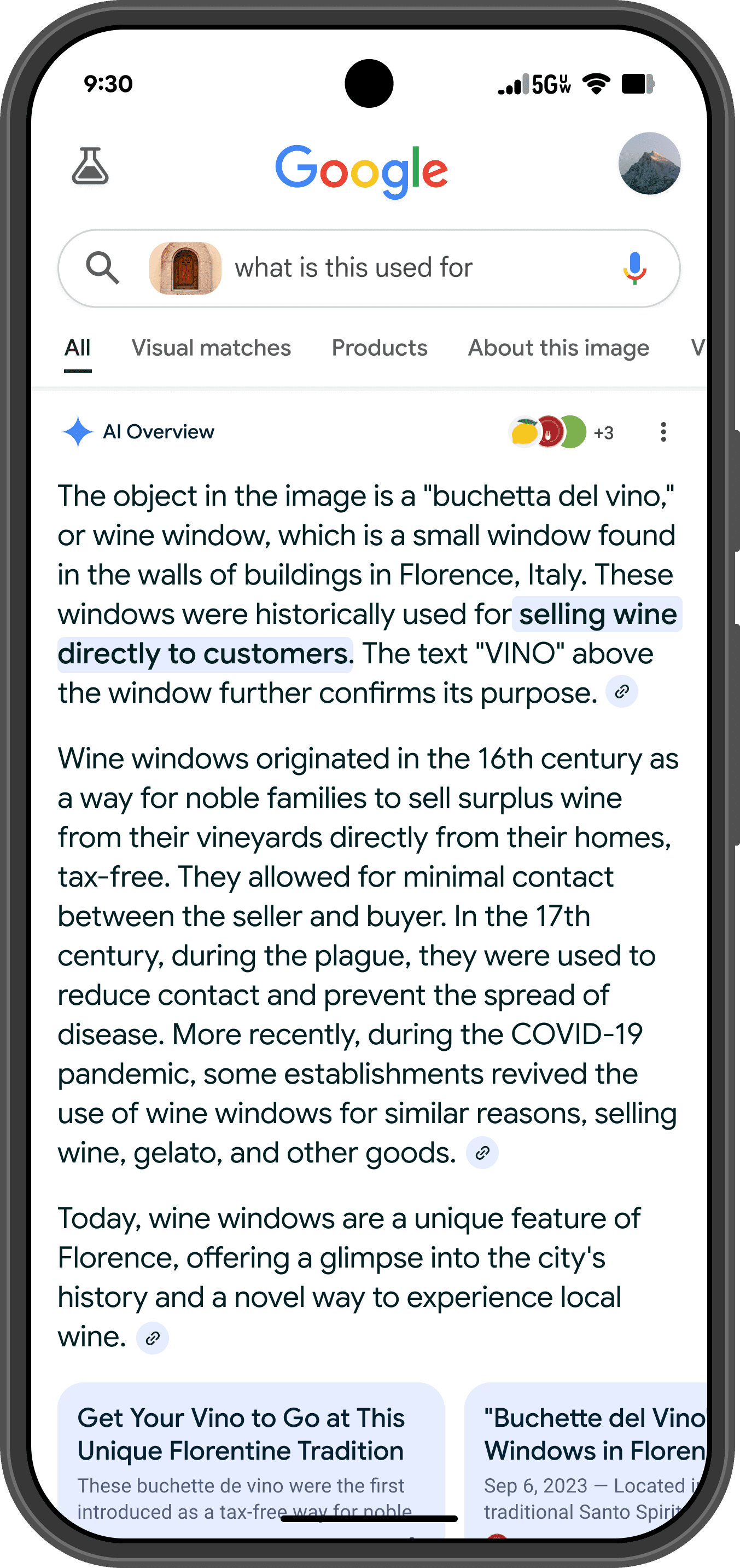

Google Is Deprioritizing Redundant Content

Another good point he makes is that the days when redundant pages had a chance are coming to an end. For example, Danny Sullivan explained at Search Central Live New York that many of the links shown in some AI Overviews aren’t related to the keyword phrase but to the topic, providing access to the next kind of information a user would be interested in after they’ve ingested the answer to their question. So, rather than showing five or eight links to pages that essentially say the same thing, Google is now showing links to a variety of topics. This is an important shift that publishers and SEOs need to wrap their minds around, which you can read more about here: Google Search Central Live NYC: Insights On SEO For AI Overviews.

Alderson explained:

“I think we need to stop assuming that producing content is a kind of fundamental or even necessary part of modern SEO. I think we all need to take a look at what our content marketing strategies and playbooks look like and really ask the questions of what is the role of content and articles in a world of ChatGPT and AI results and where Google can synthesize answers without needing our inputs.

…And in fact, one of the things that Google is definitely looking for, and one of the things which will be safe to a degree from this AI revolution, is if you can publish, if you can move quickly, if you can produce stuff at a better depth than Google can just synthesize, if you can identify, discover, create new information and new value.

There is always space for that kind of content, but there’s definitely no value if what you’re doing is saying, ‘every month we will produce four articles focusing on a given keyword’ when all 10,000 of our competitors employ somebody who looks like us to produce the same article.”

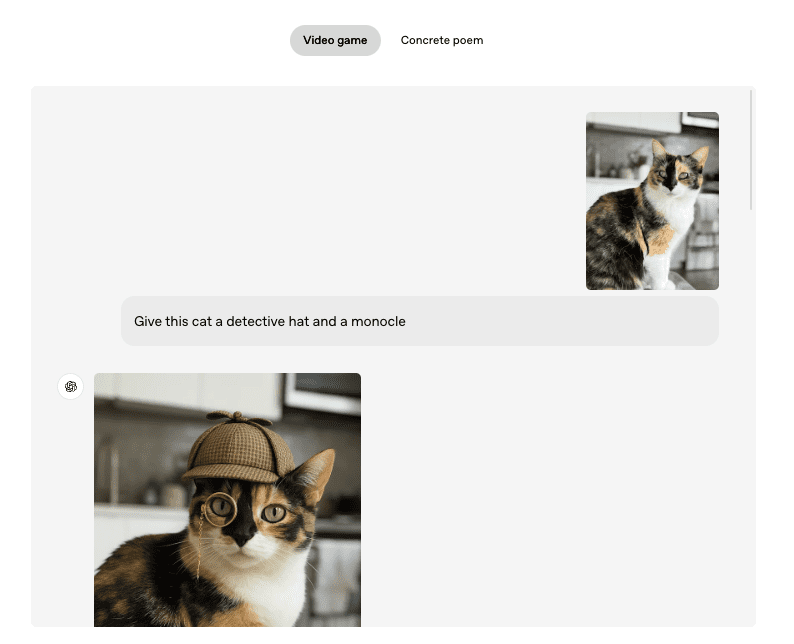

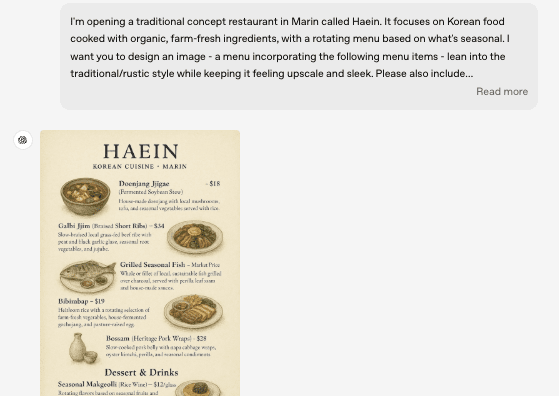

How To Use AI For Content

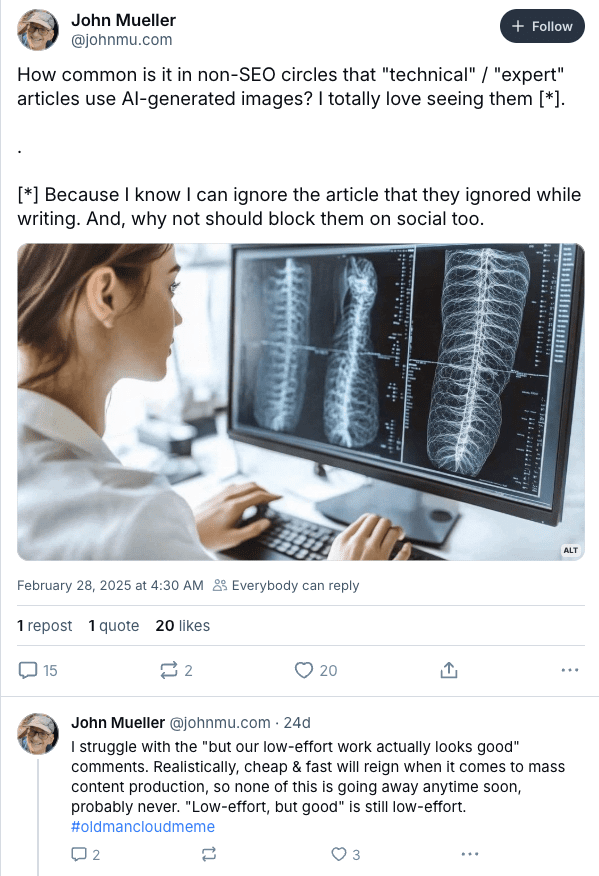

Alderson discouraged the use of AI for producing content, saying that it tends to produce a “word soup” in which original ideas get lost in the noise. He’s right: we all know what AI-generated content looks like when we see it. But I think what many people don’t notice is the extra garbage-y words and phrases AI uses, which have lost their impact from overuse. Impactful writing is what supports engagement, and original ideas are what make content stand apart. These are the two things AI is absolutely rubbish at.

Alderson notes that Google may have anticipated the onslaught of AI-generated content by emphasizing EEAT (Experience, Expertise, Authoritativeness and Trustworthiness), and argues that AI can still be helpful when used as an assistant rather than as a content producer.

He observed:

“And a lot of the changes we’re seeing in Google might well be anticipating that future. All of the EEAT stuff, all of the product review stuff, is designed to combat a world where there’s an infinite amount of recursive nonsense.

So definitely avoid the temptation to be using the tools just to produce. Use them as assistance and muses to bounce ideas around with and then do the heavy thinking yourself.”

The Shift from Content Production to Content Publishing

Jono encouraged content publishers to focus on original research and expert insights, and to show things that have gone unnoticed. He suggested that successful publishers are the ones who get out in the world and experience what they’re writing about through original research. He also encouraged focusing on authoritative voices rather than settling for generic content.

He explained:

“I think there’s definitely room to publish good content and publish. 2015-ish everyone started saying become a publisher and the whole industry misinterpreted that to mean write lots of articles. When actually you look at successful publishers, what they do is original research, by experts, they break news, they visit the places, they interact with things. A lot of what Google’s looking for in those kind of EEAT criteria, it describes the act of publishing. Yet very little of SEO actually publishes. They just produce. And I think if you …close that gap there is definitely value.

And in fact, one of the things that Google is definitely looking for, and one of the things which will be safe to a degree from this AI revolution, is if you can publish, if you can move quickly, if you can produce stuff at a better depth than Google can just synthesize.”

What does that mean in terms of a content strategy? One of the things that bothers me is the lack of originality in content. Things like concluding paragraphs with headings like “Why We Care” drive me crazy because to me it indicates a rote approach to content.

I was researching how to flavor shrimp for sautéing, and every recipe site says to sprinkle seasonings on the shrimp prior to a quick sauté at medium-high heat, which burns the seasonings. Out of the thousands of recipe sites out there, not one can figure out that you can sauté the shrimp, add some garlic, then add the seasoning just after turning off the flame? And if you ask AI how to do it, the AI will tell you to burn your seasonings because that’s what everyone else says.

What that all means is that publishers and SEOs should focus on hands-on original research and unique insights instead of regurgitating what everyone else is saying. If you follow directions and the result comes out poorly, maybe the directions are wrong, and that’s an opportunity to do something original.

SEO’s Role in Brand-Building & Audience Engagement

When asked what the role of content is in a world where AI is producing summaries, Alderson suggested that publishers and SEOs need to get ahead of the point where consumers are asking questions and reach them before they ask those questions.

He answered:

“Yeah, it’s really tricky because the kind of content that we’re producing there is going to change. It’s not going to be the “8 Tips For X” in the hope the 2% of that audience convert. It’s not going to work anymore.

You’re going to need to go much higher up the funnel and much earlier into the research cycle. And the role of content will need to change to not try and convert people who are at the point of purchase or ready to make a decision, but to influence what happens next for the people who are at the very start of those journeys.

So what you can do is, for example, I know this is radical, but audience research, find out what kind of questions people in your sector had six months before they purchased or the kind of frustrations and challenges- what do they wish they’d known when they’d started to engage upon those processes?”

Turning that into a strategy, SEOs and publishers may want to shift away from focusing solely on transactional keywords and toward developing content that builds brand trust early. As Jono recommends, conduct audience research to identify what potential customers are thinking about months before they are ready to buy, and then create content that builds long-term familiarity.

The Changing Nature of SEO Metrics & Attribution

Alderson goes on to critique the overreliance on conversion-based metrics like last-click attribution. He suggests that the focus on proving success by showing that a user didn’t return to the search results page is outdated because SEO should be influencing earlier stages of the customer journey.

“You look at the kind of there’s increasing belief that attribution as a whole is a bit of a pseudoscience and that as the technology gets harder to track all the pieces together, it becomes increasingly impossible to produce an overarching picture of what are the influences of all these pieces.

You’ve got to go back to conventional marketing …You’ve got to look at actually, does this influence what people think and feel about our brand and our logo and our recall rather than going, ‘how many clicks did we get out of, how many impressions and how many sales?’ Because if you’re competing there, you’re probably too late.

You need to be influencing people much higher the funnel. So, yeah… All, everything we’ve ever learned in the nineteen fifties and sixties about marketing, that is how we measure what good SEO looks like. Yeah, it looks like maybe we need to step back from some of the more conventional measures.”

Turning that into a strategy means rethinking traditional success metrics and looking at customer sentiment toward the brand rather than just search rankings.

Radical Ideas For A Turning Point In History

Jono Alderson prefaced his recommendation for doing audience research with the phrase, “I know this is radical…” and what he proposes is indeed radical but not in the sense that he’s proposing something extreme. His suggestions are radical in the sense that he’s pointing out that what used to be common sense in SEO (like keyword research, volume-driven content production, last-click attribution) is increasingly losing relevance to how people seek out information today. The takeaway is that adapting means rethinking SEO to the point that it goes back to its roots in marketing.

Watch Jono Alderson speak on the Majestic SEO podcast:

Stop assuming that ‘producing content’ is a necessary component of modern SEO – Jono Alderson

Featured Image/Screenshot of Majestic Podcast