LinkedIn Shares 7 Insights For Powerful Online Engagement via @sejournal, @martinibuster

LinkedIn shared insights with Search Engine Journal on how to plan and roll out new features effectively, drawing on its experience launching new AI features. These insights are useful whether you're planning a content strategy or adding new features to your business.

I spoke with Prashanthi Padmanabhan, Head of Engineering for LinkedIn Premium. LinkedIn recently rolled out a major change for its Premium subscribers. The change includes a feature that analyzes comments, articles, videos, and posts and suggests how the information is useful to the member, along with a new job seeker experience.

What happened behind the scenes, and the takeaways from it, are useful to anyone who publishes or sells online.

Prashanthi Padmanabhan, Head of Engineering for LinkedIn Premium

Image/LinkedIn

Creating A Foundation For Success

I asked Prashanthi about her takeaways from planning and creating these features. Her answer consisted of three points:

- Anchor your strategy to your mission

- Think through how your plans add value to your audience or customers

- Get member feedback from day one

Here is what she shared:

“There are three main takeaways for me from this experience so far. The first is to anchor your strategy to your mission. A robust product strategy and roadmap should always be anchored in the company’s overarching mission. By aligning every decision on our roadmap with this purpose, we ensure our efforts directly contribute to member success.

The next is about thinking through how to leverage technical innovations. As part of the engineering team, we embrace cutting-edge technologies like Generative AI. These innovations allow us to craft elegant and practical solutions that cater to our members’ needs. Our commitment lies in delivering features that truly add value to our members’ experiences.

Last, but not least, is to incorporate member feedback early and often. We strongly believe that our members’ feedback and sentiments are invaluable. From the moment our product faces our customers, it’s Day 1. We build and roll out features through iterative development, relying on a blend of internal reviews and in-product feedback to gauge quality.

For instance, our initial foray into AI-powered writing suggestions for LinkedIn profiles and messages provided valuable insights from our members’ point of view. By listening to our members and adapting based on their actions, we will continue to refine features to meet—and ideally exceed—their expectations.”

Map Your Plans To Users’ Needs, Not Trends

A business always has many ideas for what it could do for its users. But what's the right way to assess whether something is worth doing?

Prashanthi answered that she and her team start by understanding members' needs, and that this is an ongoing, iterative process. This is a great insight for anyone who works online and wants to go beyond what competitors are doing.

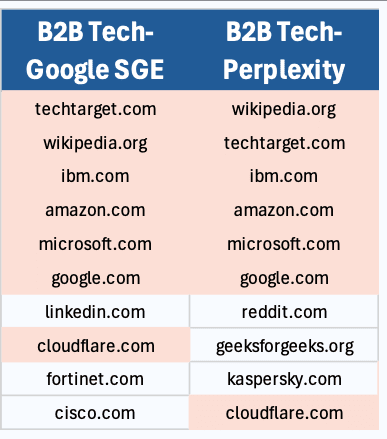

Another insight worth paying attention to is that LinkedIn didn't look at what others were doing. Instead, it focused on what its users might find useful. Many SEO and online content projects begin with competitor research. In my opinion, that leads to unoriginal content, which is the opposite of the unique experiences Google wants to show in the search engine results pages (SERPs).

She answered:

“The process of identifying the right features to add begins with a deep understanding of our members’ and customers’ needs. We do this by validating our hypotheses through research and feedback. However, it’s not a one-time task; it’s an ongoing, iterative process. At LinkedIn, we rely on a combination of data, success metrics, and member feedback to gauge how well we’re meeting those needs. As we evolve our products, alignment to our mission, data insights, and feedback help guide our overall development journey.

For example, when we recognized that Generative AI could revolutionize technology, we didn’t simply follow trends. Instead, we asked ourselves: Could this technology truly benefit our members? If so, how could we integrate it into our Premium platform? For instance, we explored using it to simplify tasks like helping to write when starting a blank page or extracting key insights from LinkedIn feed posts.

It’s important to note that LinkedIn Premium is intentionally designed to enhance member productivity and experience based on their individual goals. So the features we add to Premium should map to their specific needs – for job seekers that could be helping them stand out to find the right job, getting the right insights for creators to help them build their audiences, and giving businesses a platform to build and grow their brand.”

The Importance Of The Why, What, & When

Every business faces two questions: what should we do next, and how should we do it? Prashanthi shared what to focus on to improve the chances of a successful outcome.

Prashanthi shared:

“Our product engineering principles at LinkedIn are rooted in three fundamental elements: starting with the “why,” aligning on the “what,” and optimizing for the “when.” We found these principles are a solid guide for navigating through the complex process of creating impactful products that resonate with our members.

The why is determined by delving into the site’s purpose and identifying the target audience—those who will benefit most from the site’s offerings. This clarity on the “why” sets the foundation for subsequent decisions.

With the “why” firmly in mind, now align on the “what.” This step involves defining the set of features and capabilities the site needs. We ask ourselves, what functionalities are essential to address the identified needs and then go from there. Carefully curating this feature set can help get a better feel for how they align with members’ requirements.

The final step is optimizing for the “when.” Engineering teams often grapple with the delicate balance between craftsmanship and time-to-market. Rather than waiting indefinitely for perfection, embrace early testing, such as releasing a minimum viable product (MVP) to gather feedback promptly. Metrics such as site visitor volume, engagement duration, and return frequency guide the assessment of the site’s value. It’s a dynamic dance between precision and speed, all aimed at delivering an exceptional experience.”

What Is A Good User Experience?

The concept of user experience is subjective, and we all have our own idea of what it means. As head of engineering, how does Prashanthi translate the concept of a good user experience into an actual experience online?

Her answer emphasized keeping things as simple and intuitive as possible, and keeping them consistent.

She shared:

“For me, a good user experience means a product is simple, intuitive, and trustworthy. As an engineering team, translating the concept of a good user experience into reality requires meticulous attention to detail throughout the process. At LinkedIn this starts at the very beginning when we are transforming product and design specifications into a technical design. It’s essential to focus on simplicity and the consistency of the user experience across the entire product, so it’s intuitive to use with less cognitive load.

I’m also a big fan of clear and concise messaging (copy) for our customers as they help to build trust; in fact, when users run into issues, the clarity and usefulness of error messages and support resources make a huge difference.

I’ve found that customers are forgiving when your product works well and fast most of the time, and during times when there are issues, clear guidance on how they can best navigate that situation is critical. When it comes to reliability and performance, it’s simple – the product should work reliably every single time. A high-performance product gives users instant gratification as people care a lot about productivity and saving time, so they should be able to trust that the product will always work, and work fast.”

Importance Of Commitment To Improvement

In early tests, a large majority of Premium subscribers with access to the new AI-powered job experience said they found it useful. I asked Prashanthi what the takeaway is for online businesses that want to become more helpful in their own way, whether that's an ecommerce site, a recipe blog, or a product review or comparison site.

Her answer suggests that creating content or features that resonate with users is key to making a website more helpful, which is critical for any online business today.

She offered the following insights:

“We’re extremely excited that early tests show that 90% of subscribers with access to our popular AI-powered job experience find it useful! This positive feedback underscores our commitment to creating features that genuinely resonate with our members. Rather than focusing on technology for technology’s sake, prioritizing how this tech can genuinely benefit our members seems to be resonating.

As professionals we know that job hunting can be an isolating and overwhelming experience, so we’ve introduced AI-assistant features designed to support and guide members throughout their job search journey, leveraging the knowledge from our Economic Graph. Our goal is to provide a virtual handhold, enabling job seekers to efficiently and confidently identify roles that align with their skills and aspirations. The overwhelmingly positive response reinforces that we’re moving in the right direction.

Our product development journey is guided by a combination of essential factors:

- Product intuition

- Technical innovation

- Data insights

- Customer feedback

These elements apply universally to any product we create. It’s essential to recognize that achieving success doesn’t happen overnight. Instead, it requires a culture of rapid experimentation and continuous learning. We understand that perfection isn’t attainable on the first try, but our commitment to improvement drives us forward.”

How To Decide What’s Helpful For Users?

Being unique and helpful is important for ranking in today's search engines. But how does one go about reimagining the user's experience? It can be difficult for someone inside a business to understand what users need.

I asked what advice she would give an online business, such as an ecommerce or product review site, that is considering what it can do better to serve its users.

She suggested the following steps:

“When we create new products, it’s essential to consider what other people need. So, right at the start, finding ways to bring more of the outside into development is critical. In the initial phases of developing our product strategy and roadmap for Premium, our user experience research and marketing teams conducted a combination of quantitative (numbers) and qualitative (stories) research to develop a deeper understanding of specific needs and related sentiments. This kind of research helps refine the personas we are building products for and clearly articulates the specific jobs and goals people are trying to accomplish with our products. For any business, this process can really humanize the product development process by helping to build a clear picture of the people that the product is designed for. It’s like getting to know them as real individuals.

But don’t just stop there. Once a basic version of the product (MVP) is ready, test it with a small group and pay attention to how well it works and what users say. At LinkedIn, we involve our engineers in this process so they can learn about members’ needs and hear feedback firsthand. As an engineering leader, I really enjoy sitting in these research sessions! It makes the problems the team and I are solving feel more real. It’s better than just reading a list of product requirements.”

Cultivate Empathy For Online Success

I often read social media posts in which someone describes how they did their keyword research, hired experts to write content, and took many steps to demonstrate expertise, experience, authoritativeness, and trustworthiness, yet says nothing about empathizing with site visitors. Prashanthi suggested that this empathy is key to creating quality user experiences.

While reading some of LinkedIn's descriptions of its work, I saw a reference to a “user-focused lens.” I was curious what that means to LinkedIn and what its end goal is.

She answered:

“Looking through a user-focused lens is about really connecting with our members and understanding their needs and experiences, with the goal being that what we create is functional as well as a joy to use.

As product builders, our most important job is to build products that solve our members’ needs and create value for them at every touch point. For me, the only way to internalize what this means is to put ourselves in our members’ shoes and empathize with their needs. And this is where all product development functions, especially engineering, staying close to the member experience, sentiments, feedback, etc. will go a long way in developing a member-centric product development culture.

For example, when discussing features like AI-powered writing assistants, some members have reflected on how they consider themselves novice writers and how useful they find our thought-starters and suggested message drafts. When I hear these sentiments, it gives me confidence that the products we are building are helping make their lives easier, taking them a step closer to their goals and, in turn, making our jobs and purpose more meaningful.”

User Focused Online Experiences

Prashanthi's answers show the value of a user-centric approach to everything we do online. Anchoring your content strategy to your mission, cultivating empathy, and listening to your site visitors are all important.

The information she shared can be adapted to any online marketing scenario, whether the business is built on sales, content, recipes, or reviews.