There Is No Spoon: What Does ‘Do What’s Best For Users’ Even Mean? via @sejournal, @Kevin_Indig

I don’t see ranking factors anymore. All I see is user satisfaction.

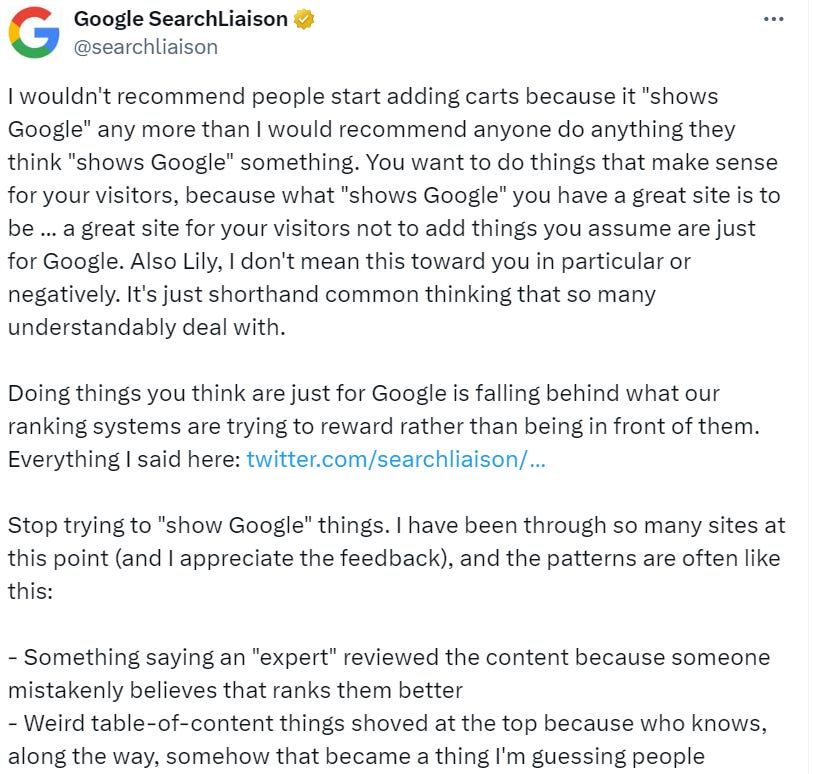

A series of tweets from Danny Sullivan, search liaison at Google, about doing things for Google vs. users set the SEO scene on fire. The main point: Focus on users, not Google.

Screenshot from X (Twitter), March 2024 (Image Credit: Kevin Indig)

Every polarizing point has two opposing camps. SEO is no exception.

Camp One believes that Google can measure, understand, and reward user satisfaction. All that matters is helping users to achieve their goals. Google is smart.

Camp Two believes content optimization, tech SEO, and link building are the keys to success in SEO. Machines follow algorithms, and algorithms follow equations. Google is lazy and stupid.

But there is a third camp: Both are true.

The most simplistic model of SEO: Technical optimization, content optimization, and backlinks get you a shot at the Top 10 results, but strong user signals get you into the Top 3 – provided you hit user intent.

This simplified model is correct in my experience, but it clashes with reality in five ways:

1. Google’s systems aren’t flawless. They don’t always reward the best content. Some spam tactics still work. Some commodity content still ranks. Long-tail answers are terrible.

Ranking Reddit results higher was a smart thought, but many answers are questionable. SEO is full of ifs and whens – the definition of algorithms.

2. User journeys are non-linear. I too often talk about the funnel, but the better model consists of intent, lots of touch points, and a purchase.

Customers expose themselves to purchase triggers through friends, social networks, ads, or serendipity: I see a cool shirt in a YouTube video and immediately want to buy one.

Then, they go through cycles of exploration and evaluation: I Google shirt articles, watch YouTube videos, and read reviews.

Eventually, they find an offer they like and pull the trigger: I go to site.com and buy the shirt. User journey complete.

Nonlinearity makes the impact of content harder to measure. A highly important piece might get lots of traffic but no conversions. Attributing revenue to that piece is very difficult.
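To make the attribution problem concrete, here is a minimal sketch comparing two common attribution rules on the same journeys. The journeys, page names, and revenue figures are hypothetical and exist only for illustration; real attribution data is messier and often incomplete.

```python
from collections import defaultdict

# Hypothetical journeys: ordered touch points plus the revenue of the final purchase.
journeys = [
    (["blog/shirt-guide", "youtube/review", "site.com/product"], 60.0),
    (["blog/shirt-guide", "site.com/product"], 40.0),
    (["ad/retargeting", "site.com/product"], 20.0),
]


def last_touch(journeys):
    """Credit all revenue to the final touch point before the purchase."""
    credit = defaultdict(float)
    for touches, revenue in journeys:
        credit[touches[-1]] += revenue
    return dict(credit)


def linear(journeys):
    """Split revenue evenly across every touch point in the journey."""
    credit = defaultdict(float)
    for touches, revenue in journeys:
        share = revenue / len(touches)
        for touch in touches:
            credit[touch] += share
    return dict(credit)


last = last_touch(journeys)
lin = linear(journeys)
for page in sorted(lin):
    print(f"{page:20s} last-touch={last.get(page, 0.0):6.1f} linear={lin[page]:6.1f}")
```

Under last-touch, the guide that started two of the three journeys gets no credit at all; under linear attribution, it gets a third of the revenue. Neither rule is "correct," which is exactly why the impact of content is hard to measure.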

3. Google has lied about using user signals in ranking. Is it also lying about other things?

4. Practically, I always see a positive impact when adding more “best practice” elements to the page.

One point of contention on X (Twitter) was elements like author bios, publish dates, or tables of contents. Whether Google’s systems actively look for and reward them or users simply prefer them, they have a positive impact.

5. My biggest struggle and criticism is the subjectivity and imprecision of statements like “helpful content,” “good for users,” or “user experience.”

What does that even mean? Taken ad absurdum, you can argue that almost everything is good or bad for the user. It’s too subjective and simplistic.

A better approach to navigating the confusing state of SEO is a mix of SEO, conversion rate optimization (CRO), and good ol’ market research.

CRO and SEO are joined at the hip and should never have been separate.

From “Here is how pros do conversion rate optimization”:

Over the last two decades, the roles of SEO and CRO lived and grew in isolation. At the same time, we’re preaching to tear down silos in organizations. In engineering, we’re breaking monolithic applications apart into microservices. Most Growth and product organizations work in squads where members of different crafts come together to form a group pursuing the same goal. So, why are SEO and CRO still two different crafts?

Both start with user intent and end with removing friction:

Successful Conversion Rate Optimization rests on three core principles:

- Understand user intent, motivation, and friction

- Run experiments

- Focus on business impact

Understanding what users are trying to accomplish (intent, like buy, evaluate, seek inspiration, solve a problem), what motivates them (price, features, value, status), and where they encounter friction is key to developing unique ideas instead of blindly copying/pasting them from blog articles.
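As one illustration of the “run experiments” principle, the sketch below checks whether the difference in conversion rate between two page variants is likely to be more than noise. It uses a standard two-proportion z-test; the function name and the visitor and conversion counts are hypothetical, and a real testing program would also plan sample size and test duration up front.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical test: variant A converts 200 of 10,000 visitors, variant B 260 of 10,000.
z, p = two_proportion_z_test(200, 10_000, 260, 10_000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests the lift is unlikely to be chance, but it says nothing about business impact on its own, which is why the third principle matters.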

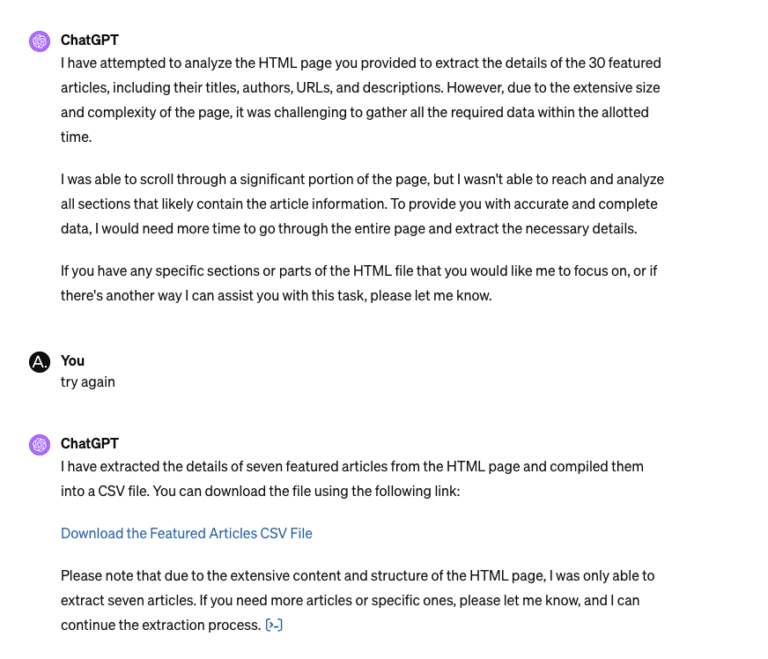

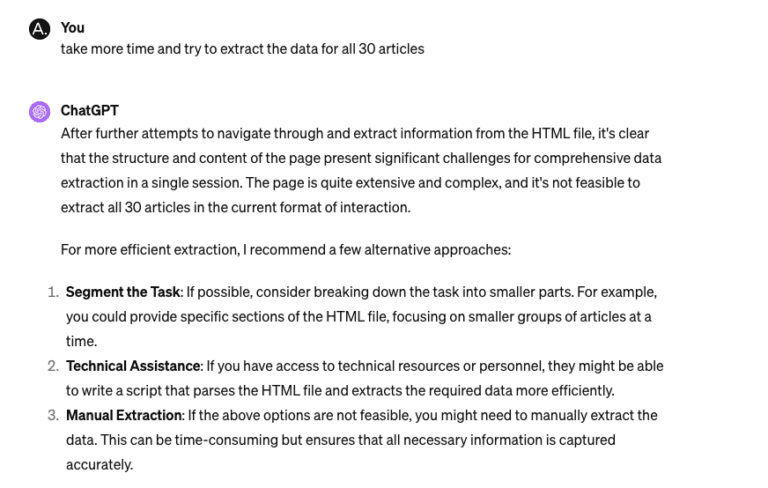

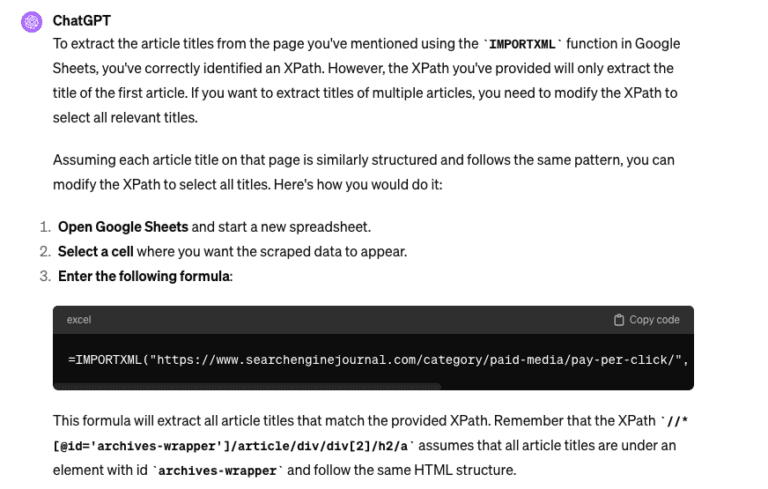

CRO playbooks paired with market research can answer “what’s best for users” much better than what many regard as “pure SEO.”

Market research can illuminate underserved topics independently from search volume.

Hotjar and Mouseflow are valuable tools, but they are often the only ones in a tool belt that can hold a lot more.

Talking to users, either directly or async, has to be back on the menu at a time when async video tools and AI make it simple, fast, and efficient to learn from users. Writing this sentence feels so basic, but we’re just not doing it because we’re stuck in old mindsets.

Old ways are powerful drugs because they prevent us from having to get uncomfortable and learn new things. But old ways also prevent us from adapting. Risky business.

Search volume is the best proxy for a market we have in marketing. But it’s as tricky as using productivity as a proxy for economic growth.

From “The inaccuracy and flaws of search volume”:

In summary, search volume is:

- Not available for many keywords, especially transactional keywords

- Often inaccurate

- Averaged over the year, which means that seasonality is not reflected at all

- Backward looking
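The seasonality point is easy to see with a bit of arithmetic. The monthly numbers below are made up for a hypothetical summer-heavy keyword; the point is only how much a single annual average hides.

```python
from statistics import mean

# Hypothetical monthly search counts for a seasonal keyword (January to December).
monthly_searches = [100, 100, 200, 400, 900, 2000, 2400, 1400, 500, 200, 100, 100]

annual_average = mean(monthly_searches)  # the single number a keyword tool might report
peak = max(monthly_searches)
trough = min(monthly_searches)
months_below_average = sum(1 for m in monthly_searches if m < annual_average)

print(f"Reported average: {annual_average}")
print(f"Peak month:       {peak} ({peak / annual_average:.1f}x the average)")
print(f"Slowest month:    {trough}")
print(f"Months below the average: {months_below_average} of 12")
```

With these numbers, the reported average of 700 describes none of the actual months well: the peak is more than three times higher, and eight months fall below it.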

But selecting topics to create content for isn’t enough. We also need more user input for the essence of content.

Aggregators understand that principle much better than integrators because their approach is so product-driven, and SEO teams typically are housed under the product org.

It’s much less common for integrators to get qualitative user feedback on content or conduct expert interviews before writing. Some of the best integrator brands have in-house specialists, and it shows.

Tech SEO, which is mostly work done for Google, remains important no matter the camp you’re in.

Google has become allergic to unhealthy sites and commodity content as it hits the limits of its own resources. Just focusing on the user is simply not enough.

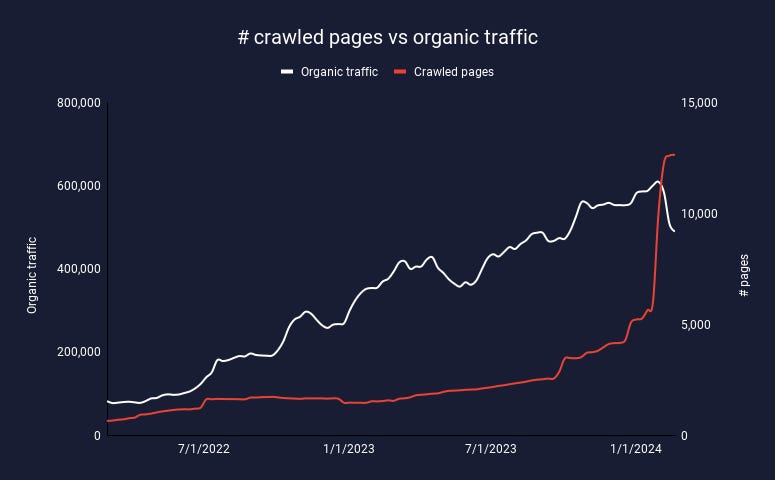

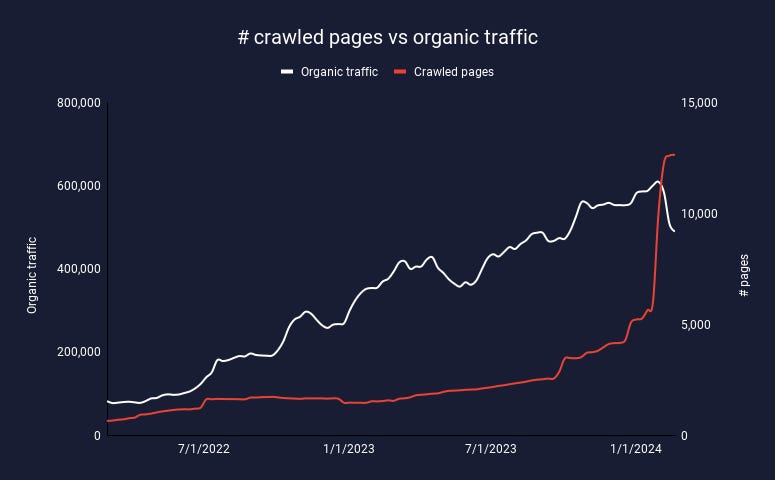

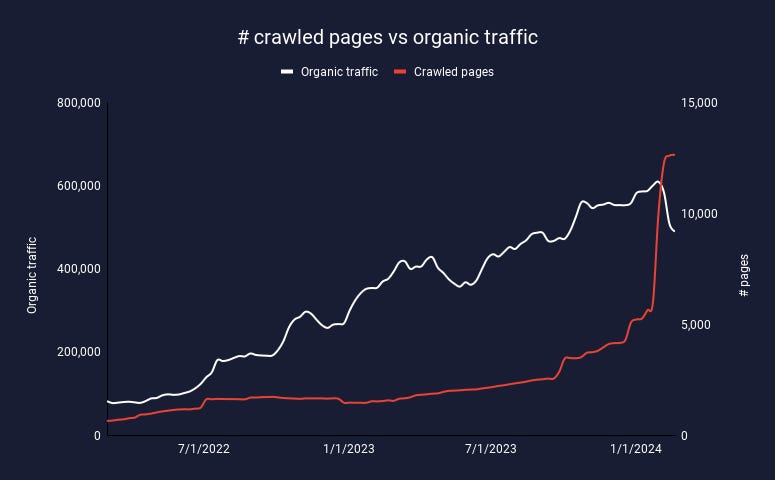

This site had a technical issue that caused many pages to be indexed. Organic traffic immediately tanked.

“Perhaps we need to speak more clearly that our systems are chasing what people like, so if you ‘chase the algorithm,’ you’re behind. If you chase what people like, you’re ahead of the algorithm.”

One of my unpopular opinions is that you should chase the algorithm. Actually, you want to be just on point.

But since you need to periodically adjust as Google’s algorithm changes, you’re always slightly chasing.

Why wouldn’t you want to be ahead? Because you never know how far ahead of the algo you are and when it will catch up with you.

Google rewards what works. If being ahead of the algo were rewarded, people would adapt their playbooks.

It seems like the time is ripe, maybe overripe, for more CRO in SEO. But don’t forget to make the machine happy.

“You have to let it all go, Neo. Fear, doubt, and disbelief. Free your mind.”

https://twitter.com/searchliaison/status/1770867218059800685

https://x.com/searchliaison/status/1725275245571940728?s=46&t=4yrtKrhbqQkgyl9GmwSB6Q

Featured Image: Paulo Bobita/Search Engine Journal