Google Ecommerce SERP Features 2025 Vs. 2024 via @sejournal, @Kevin_Indig

In 2024, Google turned the SERP into a storefront.

In 2025, it turned it into a marketplace with an AI-based mind of its own.

Over the past 12 months, Google has layered AI into nearly every inch of the shopping search experience by merging organic results with product listings, rolling out AI Overviews that replace traditional product grids, and introducing a full-screen “AI Mode.”

Meanwhile, ChatGPT is inching closer to becoming a personalized shopping assistant, but for now, the most dramatic shifts for SEOs are still happening inside Google.

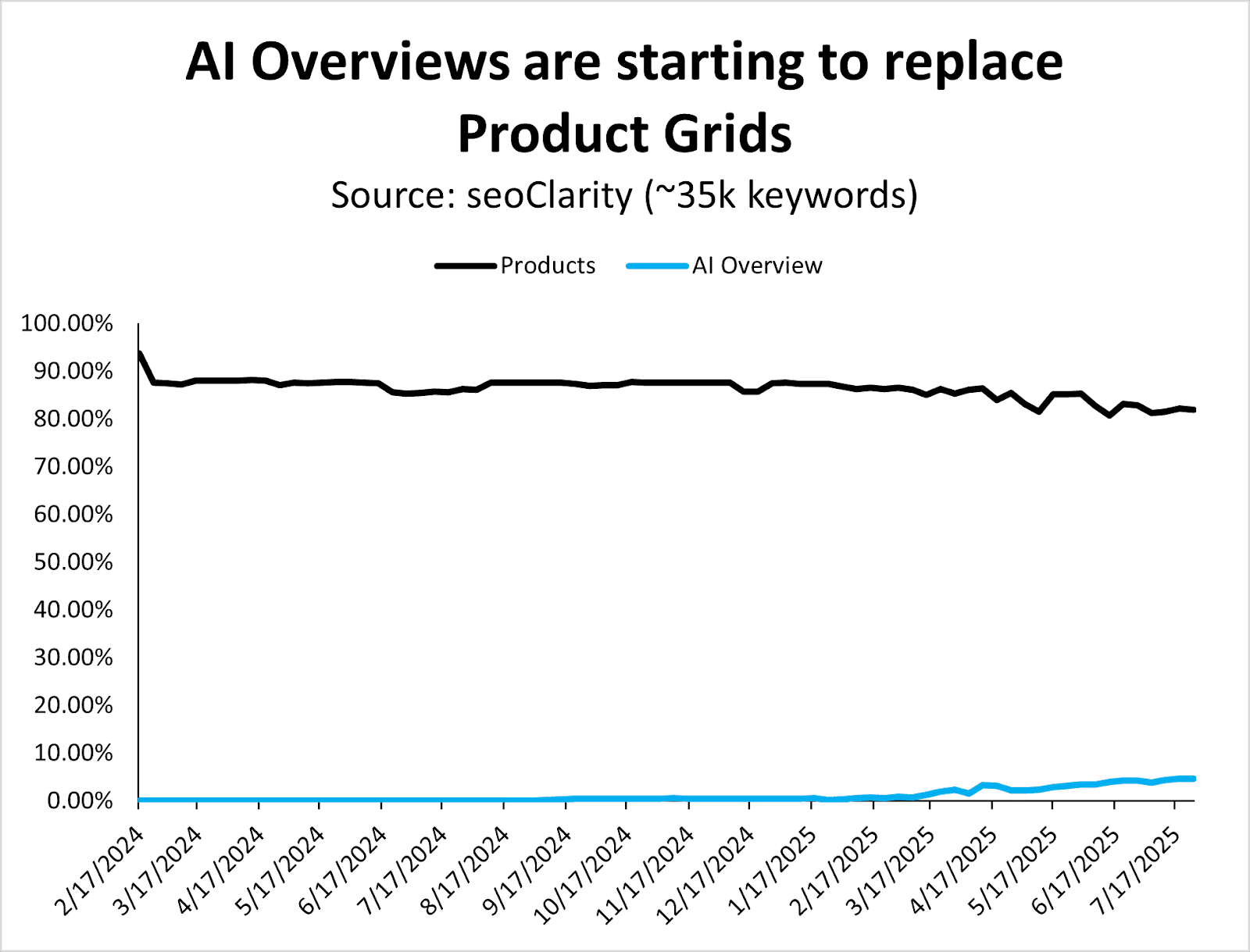

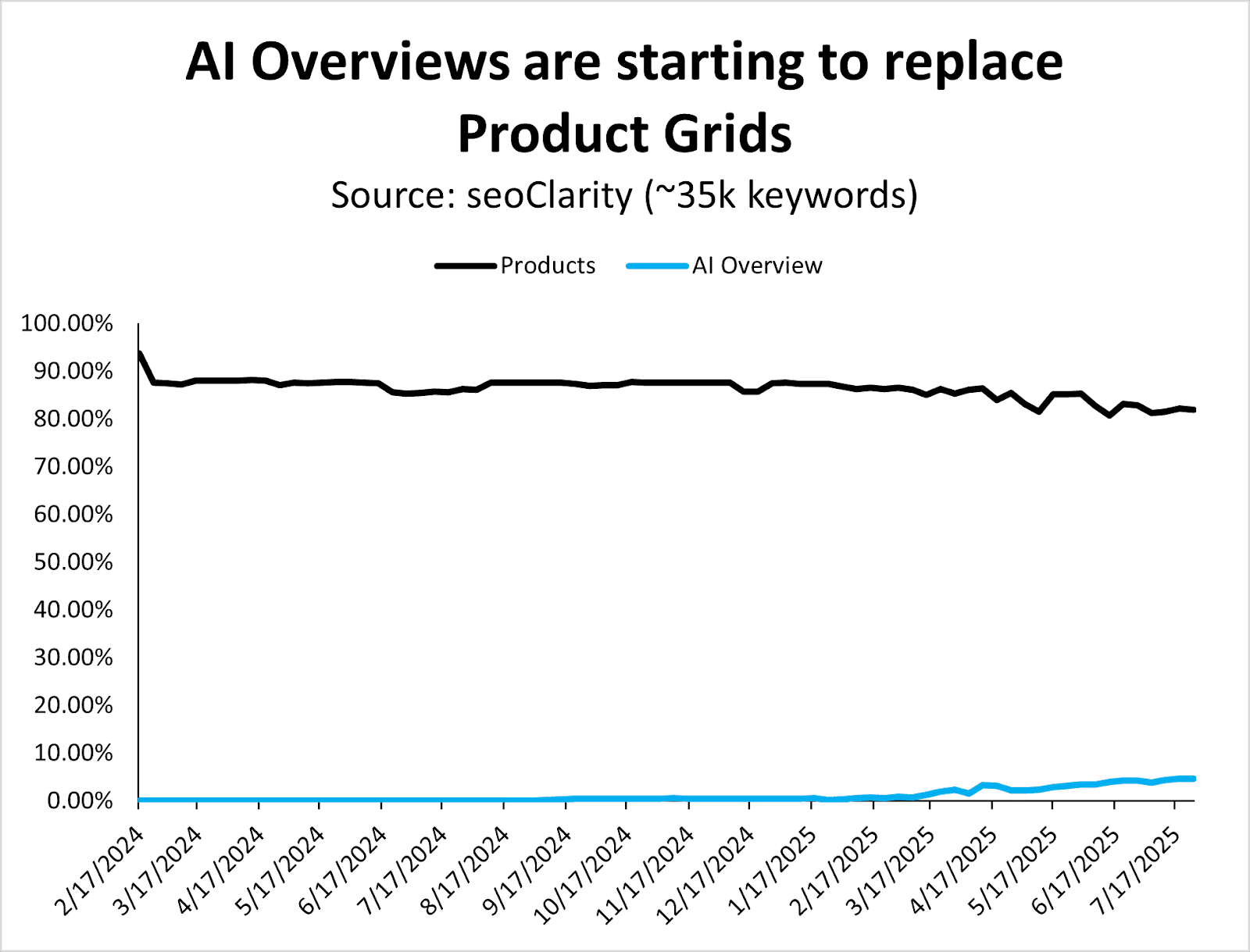

To understand the impact, I revisited a set of 35,000+ U.S. shopping queries I first analyzed in July 2024.

In today’s Memo, I’m breaking down the state of Google Shopping SERPs in 2025. A year later, the landscape looks … different:

- AI Overviews have started to displace classic ecommerce SERP features.

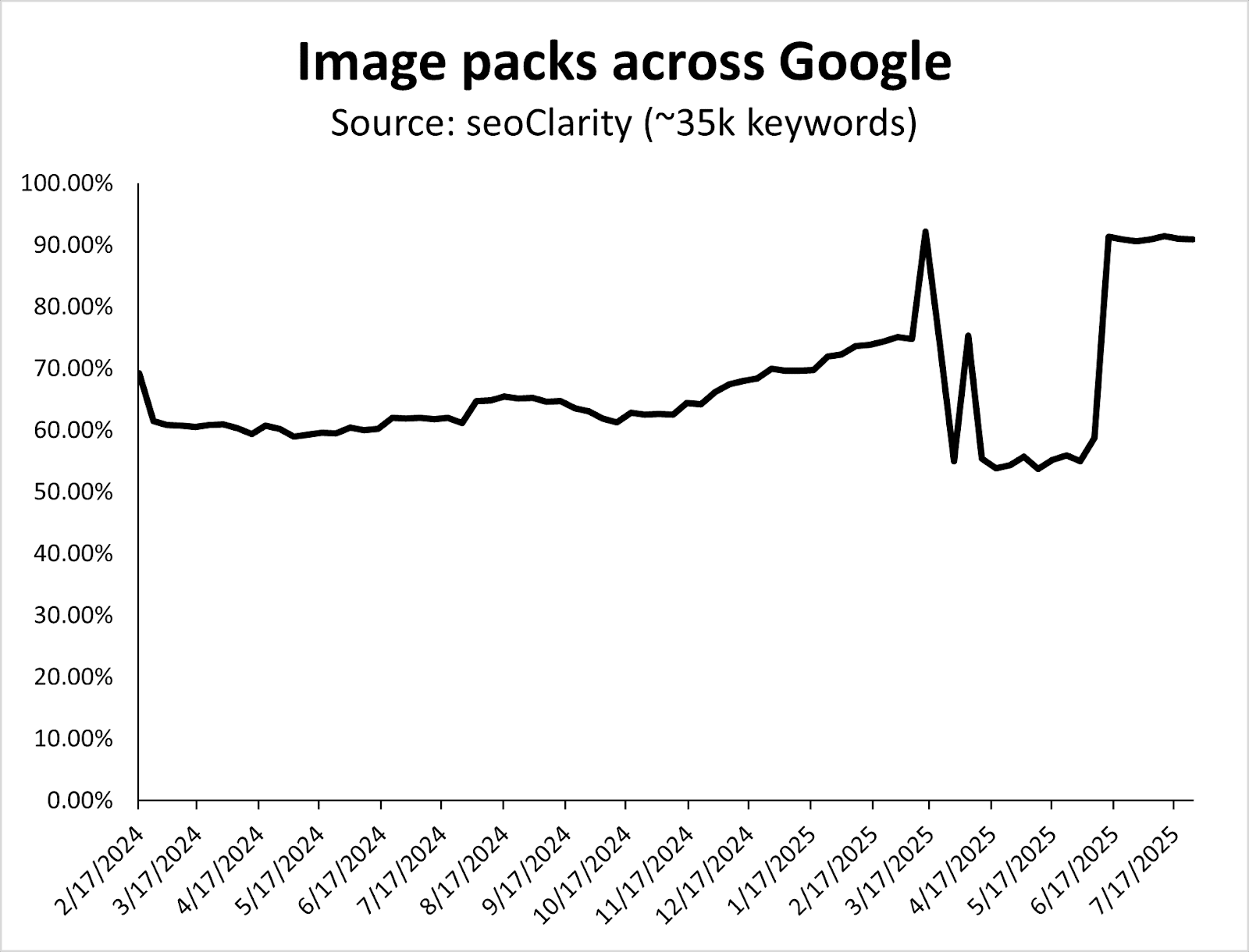

- Image packs dominate the page.

- Discussion forums are on the decline.

Plus, an exclusive comparison of 2024 vs. 2025 ecommerce SERP features and a full, detailed checklist of optimizations for the SERP features that matter most today (available for premium subscribers; I show you exactly how I do this).

This memo breaks down exactly what’s changed in Google’s shopping SERPs over the past year. Let’s goooooo.


In the last 12 months, Google hasn’t just transformed itself into a publisher that serves up content to answer queries right in the SERP (via AI Overviews and AI Mode). It’s also built out an extensive marketplace for shopping queries.

Google now provides a whole slew of SERP features and AI features for ecommerce queries that are at least as impactful as AIOs and AI Mode.

Meanwhile, ChatGPT & Co. are starting to include product recommendations with links, reviews, and buy buttons directly in the chat. (But this analysis focuses on Google results only.)

To better understand the key trends for Google shopping queries, in July 2024, I analyzed 35,305 keywords across product categories like fashion, beds, plants, and automotive in the U.S. over the last five months using seoClarity.

We’re revisiting that data today, examining those same keywords and categories for July 2025.

The results:

- AI Overviews have started to replace product grids.

- Ecommerce SERPs are increasingly visual.

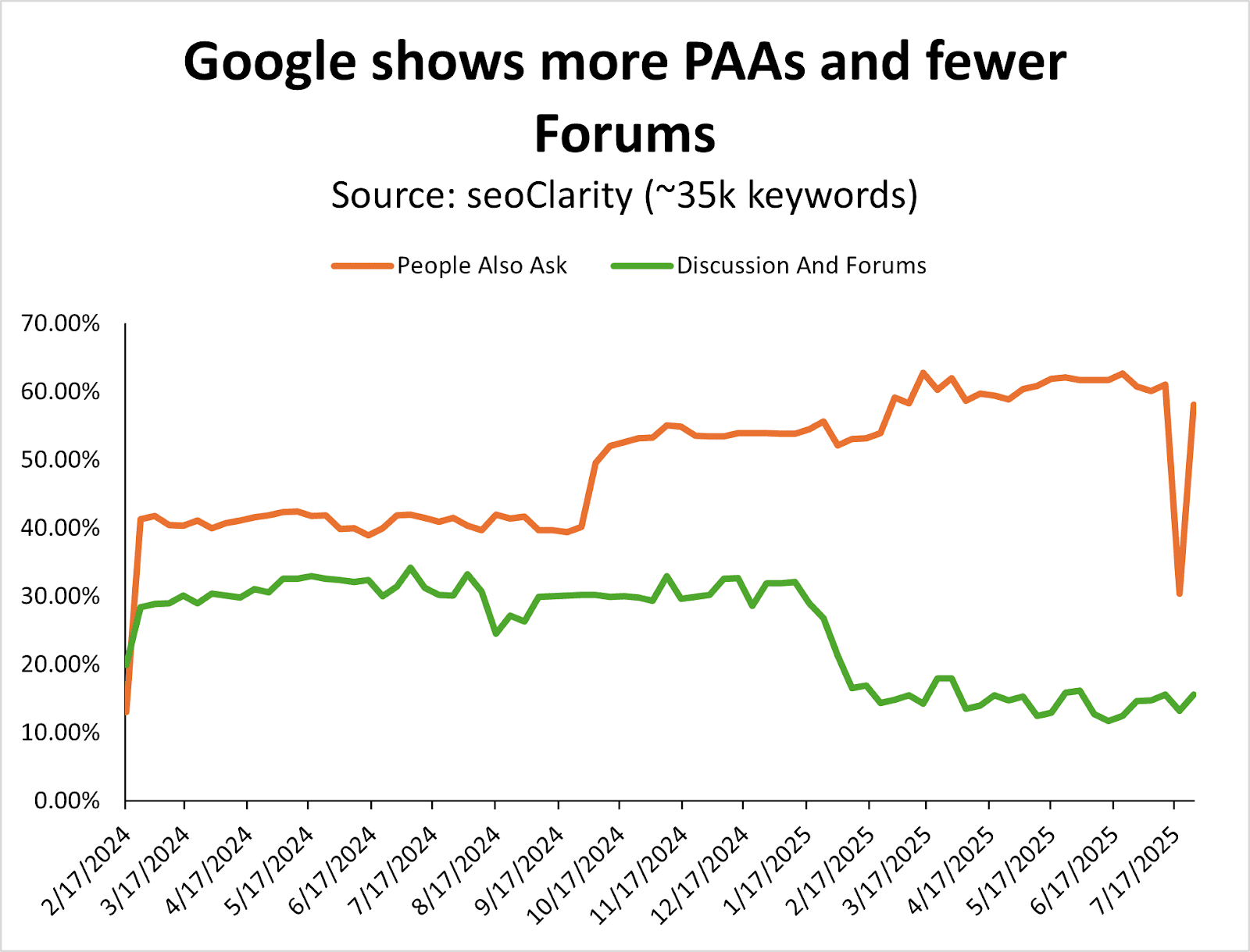

- There are more question-related SERP features (like People Also Ask), less UGC.

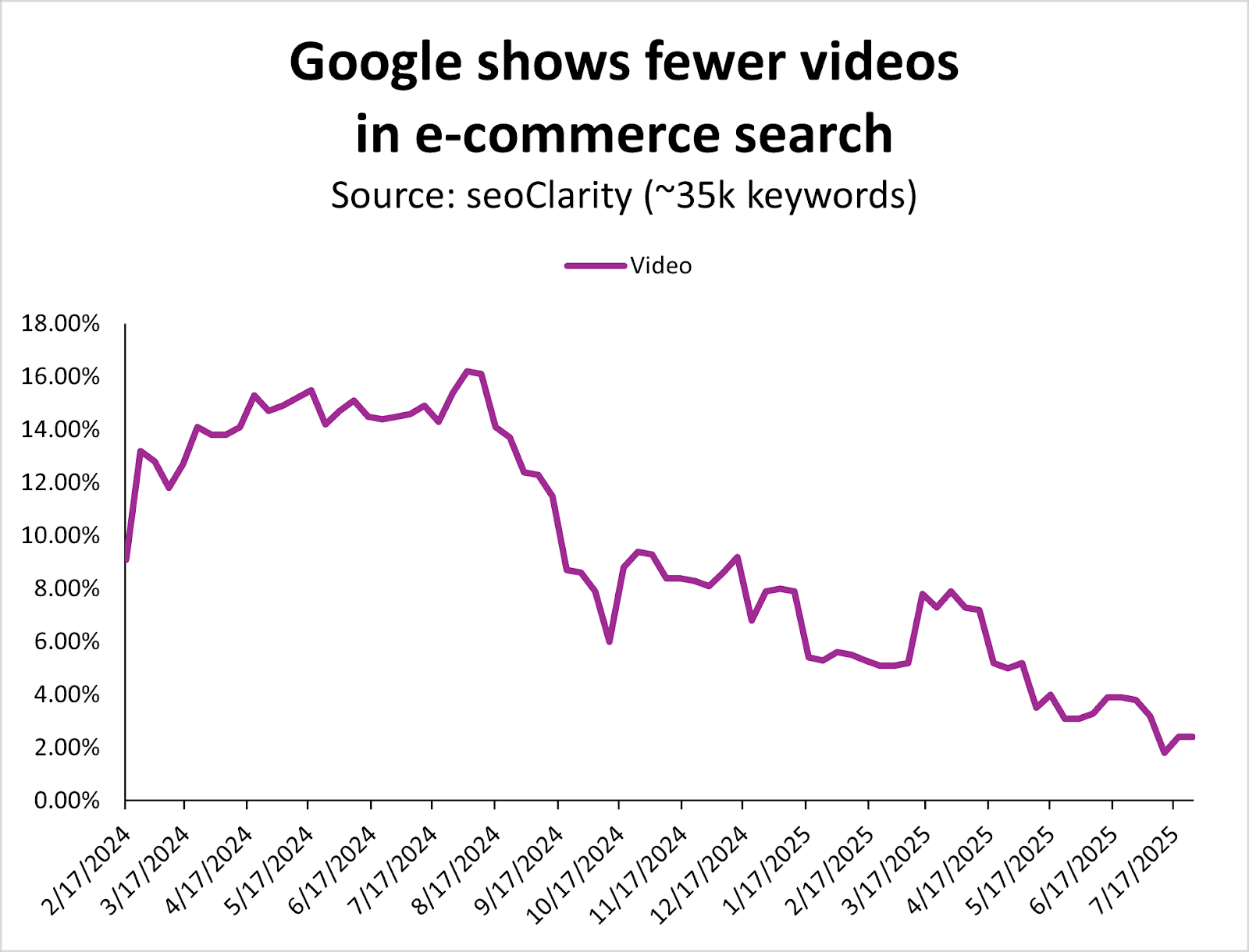

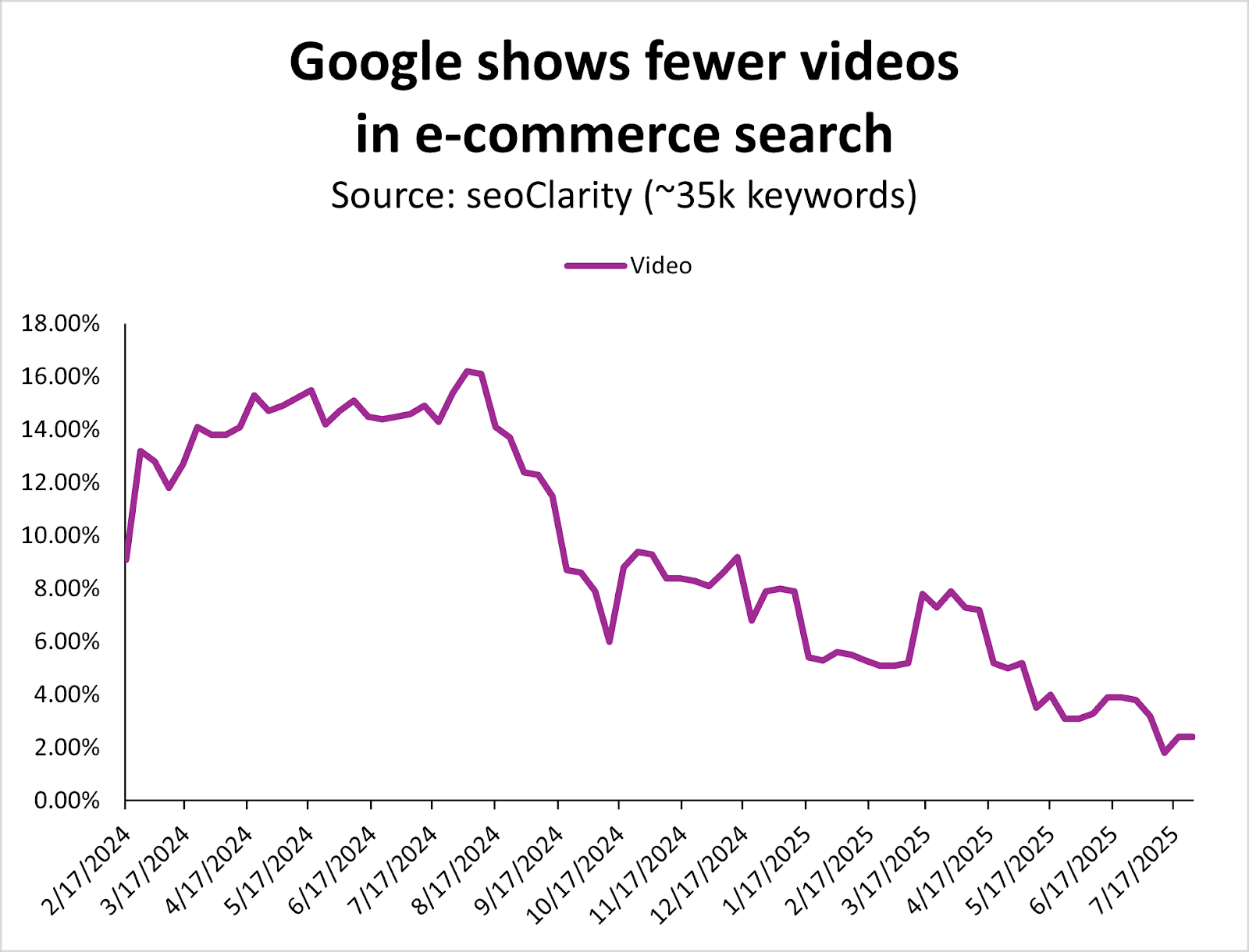

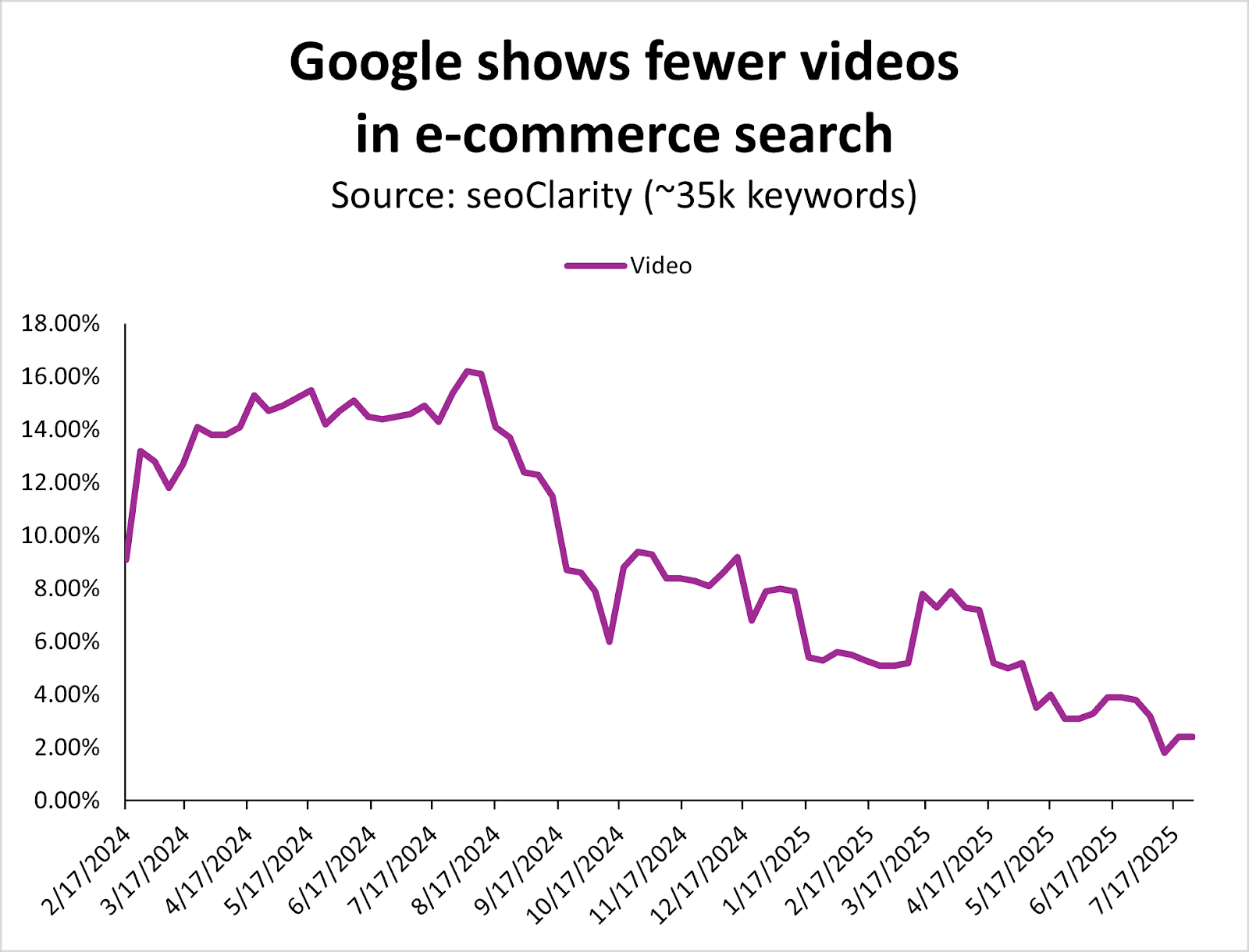

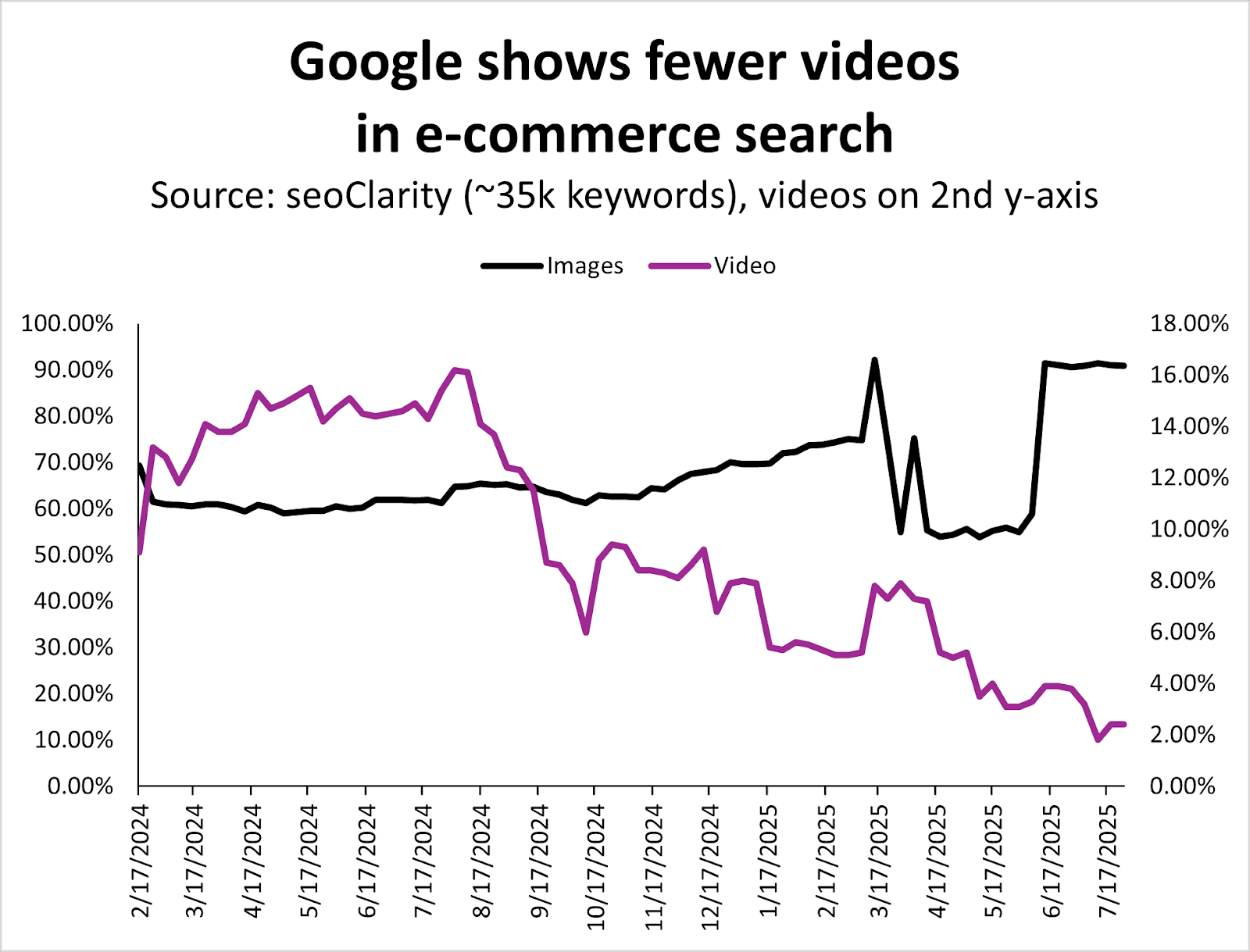

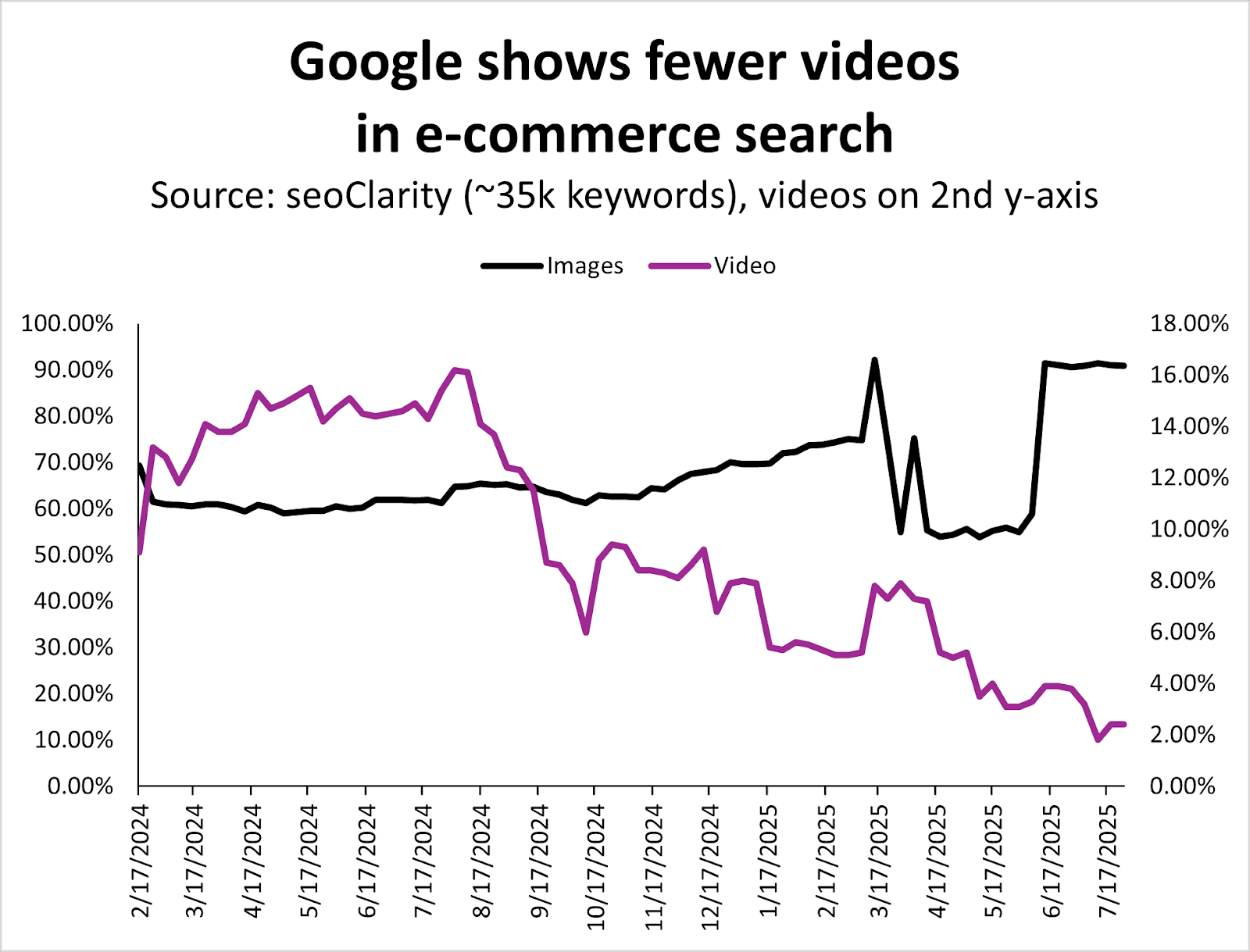

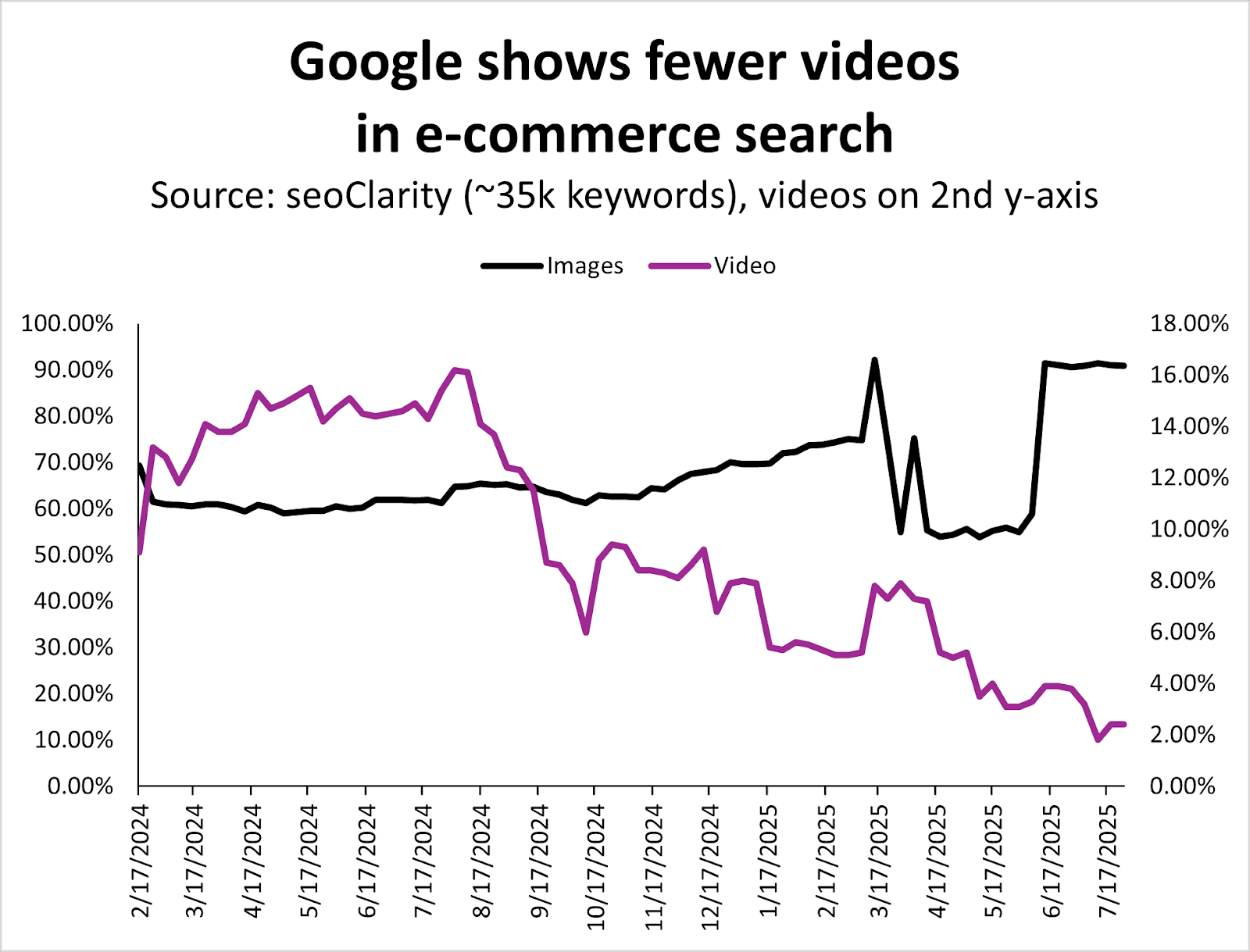

- Fewer videos are appearing across the SERPs for product-related searches.

About the data:

- This data specifically covers Google search results and features. It doesn’t include ChatGPT, Perplexity, etc. However, we’ll touch on this briefly below.

- Over 35,000 search queries were analyzed, and the same group was examined in both July 2024 and July 2025.

- The search queries analyzed include product-related queries across a broad spectrum, from brand terms (like Walmart) to individual products (iPads) and categories (e-bikes).

- If you’re curious about the exact list of Google shopping SERP features included in this analysis, they’re listed further down in this memo.
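If you want to run a similar year-over-year comparison on your own keyword set, here is a minimal sketch of the idea. It is not the seoClarity workflow behind this analysis. The data shape (a dict mapping each keyword to the SERP features seen for it), the feature labels, and the function names `feature_share` and `yoy_change` are all assumptions for illustration, and the sample data is made up.

```python
from collections import Counter


def feature_share(serps):
    """Return {feature: share of keywords whose SERP shows that feature}."""
    counts = Counter()
    for features in serps.values():
        counts.update(set(features))  # count each feature once per keyword
    total = len(serps)
    return {feature: n / total for feature, n in counts.items()}


def yoy_change(serps_before, serps_after):
    """Percentage-point change per feature, using only keywords in both snapshots."""
    shared = serps_before.keys() & serps_after.keys()
    before = feature_share({k: serps_before[k] for k in shared})
    after = feature_share({k: serps_after[k] for k in shared})
    return {
        feature: round((after.get(feature, 0.0) - before.get(feature, 0.0)) * 100, 1)
        for feature in sorted(before.keys() | after.keys())
    }


# Made-up toy snapshots (hypothetical keywords and feature labels).
july_2024 = {
    "kayak": ["products", "video", "discussions_and_forums"],
    "e-bike": ["products", "images"],
    "ipad": ["products", "video"],
    "camera tripod": ["products", "people_also_ask"],
}
july_2025 = {
    "kayak": ["images", "ai_overview", "people_also_ask"],
    "e-bike": ["products", "images"],
    "ipad": ["products", "images"],
    "camera tripod": ["images", "people_also_ask"],
}

changes = yoy_change(july_2024, july_2025)
for feature, delta in changes.items():
    print(f"{feature}: {delta:+.1f} pts")
```

Restricting both snapshots to the shared keywords keeps the comparison like-for-like, which is the point of examining the same group of queries in both years.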

Before we dig into the findings…

In Google’s shift from search engine to ecommerce marketplace (and from search engine to publisher), Google has merged as much as possible into the SERP.

Web results and the shopping tab for shopping searches were combined as a response to Amazon’s long-standing dominance.

The shopping tab still exists, sure.

But for product-related searches, the main search page and the Google shopping experience look incredibly similar, with the Shopping tab streamlined to a product-grid experience only.

In June 2024, I reported in Critical SERP Features of Google’s shopping marketplace:

- Google has fully transitioned into a shopping marketplace by adding product filters to search result pages and implementing a direct checkout option.

- These new features create an ecommerce search experience within Google Search and may significantly impact the organic traffic merchants and retailers rely on.

- Google has quietly introduced a direct checkout feature that allows merchants to link free listings directly to their checkout pages.

- Google’s move to a shopping marketplace was likely driven by the need to compete with Amazon’s successful advertising business.

- Google faces the challenge of balancing its role as a search engine with the need to generate revenue through its shopping marketplace, especially considering its dependence on partners for logistics.

And now?

Google has layered AI and personalized SERP features into the shopping experience as well.

Below are the Google SERP features I’ll be examining in this year-over-year (YoY) analysis, each with a quick synopsis in case you’re not familiar.

- Images: A horizontal carousel of image results related to the query pulled from product pages or image-rich content; usually appears at the top or mid-page and links to Google Images or directly to source pages.

- Products: Displays a visual grid or carousel of products with titles, images, prices, reviews, and merchants. This includes free product listings (organic) and Product Listing Ads (PLAs) (paid).

- People Also Ask (PAA): Related questions users frequently ask. Clicking a question reveals a source link. (These often inform Google’s understanding of search intent and user curiosity.)

- Things To Know: An AI-driven feature that breaks a topic into subtopics and frequently misunderstood concepts. Found mostly on broad, educational, or commercial-intent queries, this is Google’s way of guiding users deeper into a topic and understanding deeper search intent.

- Discussion and Forums: Highlights relevant threads from platforms like Reddit, Quora, and niche forums. Answers are often community-generated and authentic. Replaced some traditional “People Also Ask” real estate for shopping or reviews queries.

- Knowledge Graph: Displays structured facts about a person, brand, product, or topic, sourced from trusted databases. Appears in a right-hand sidebar or embedded box.

- Buying Guide: A feature that explains what to consider when shopping for a product, e.g., “What to look for in a DSLR camera.” Usually placed mid-page for commerce-intent queries. It mimics a human assistant or product expert’s advice. Contains snippets and links to sources.

- Local Listing: Shows local business listings with map, ratings, hours, and quick call/location links. Prominent in searches with local intent like “shoe store near me” or “coffee shops in Detroit.”

- AI Overview: Generative AI summary at the top of the SERP that answers the query using information synthesized from multiple sources. For shopping queries, it often includes product summaries.

- Video: A carousel or block of video content, mostly from YouTube, but also from other video-hosting platforms. May include timestamps, captions, or “key moments” for long videos.

- Answer Box (a.k.a. Featured Snippet): A direct answer to a query extracted from a single web page, shown at the top of the SERP in a stylized box. Often used for factual or how-to queries. Includes the source link.

- Free Product Listings: Organic product results submitted via Google Merchant Center feeds. These listings show in the Shopping tab and occasionally in the main SERP product grid (distinct from paid Shopping ads).

- From sources across the web: A content block showing opinions or quotes on a product or topic from a variety of sites. Often used in AI Overviews or product reviews to surface aggregated user sentiment or editorial input.

- FAQ: An expandable schema-driven block showing common questions and answers sourced from a specific page. Typically appears under a site’s organic result when FAQ schema is properly implemented.

- PPC: Sponsored links shown at the top or bottom of the SERP, marked “Sponsored” or “Ad.” These can show up as text, product images/grids, etc.

In addition to the standard SERP features tracked in this analysis via the above list, here’s a look at the current Google shopping marketplace SERP features and/or elements (like toggle filters) that we’re dealing with at the halfway point of 2025.

- AI Mode (Full-Screen): Interactive, immersive full-page AI shopping experience with filters and buy links.

- Shopping filters inline: Dynamic filters (brand, color, price) within AI Mode and Shopping grids.

- Virtual try-on: This feature was recently released. It’s a generative AI module showing clothes on diverse body types (expanding by category).

- Price tracking/alerts: Users can track price drops and get alerts via Gmail or Chrome. Honestly, a pretty great tool.

- Popular stores/top stores: Scrollable carousel of prominent retailers for the product category.

- Product sites (EU market): Organic feature that shows prominent ecommerce domains (due to regulatory changes in the EU).

- Trending products/popular products: Highlights products rising in popularity based on recent search activity.

- Merchant star ratings: Display review scores and counts in summaries or tiles.

- Free shipping/returns labels: Highlighted callouts in product tiles.

- “Verified by Google” merchant badges: Google-trusted seller icon in some listings.

- Quick comparison panels: Side-by-side spec or feature comparisons (this is an early-stage rollout, similar to Amazon’s product comparison panel or module).

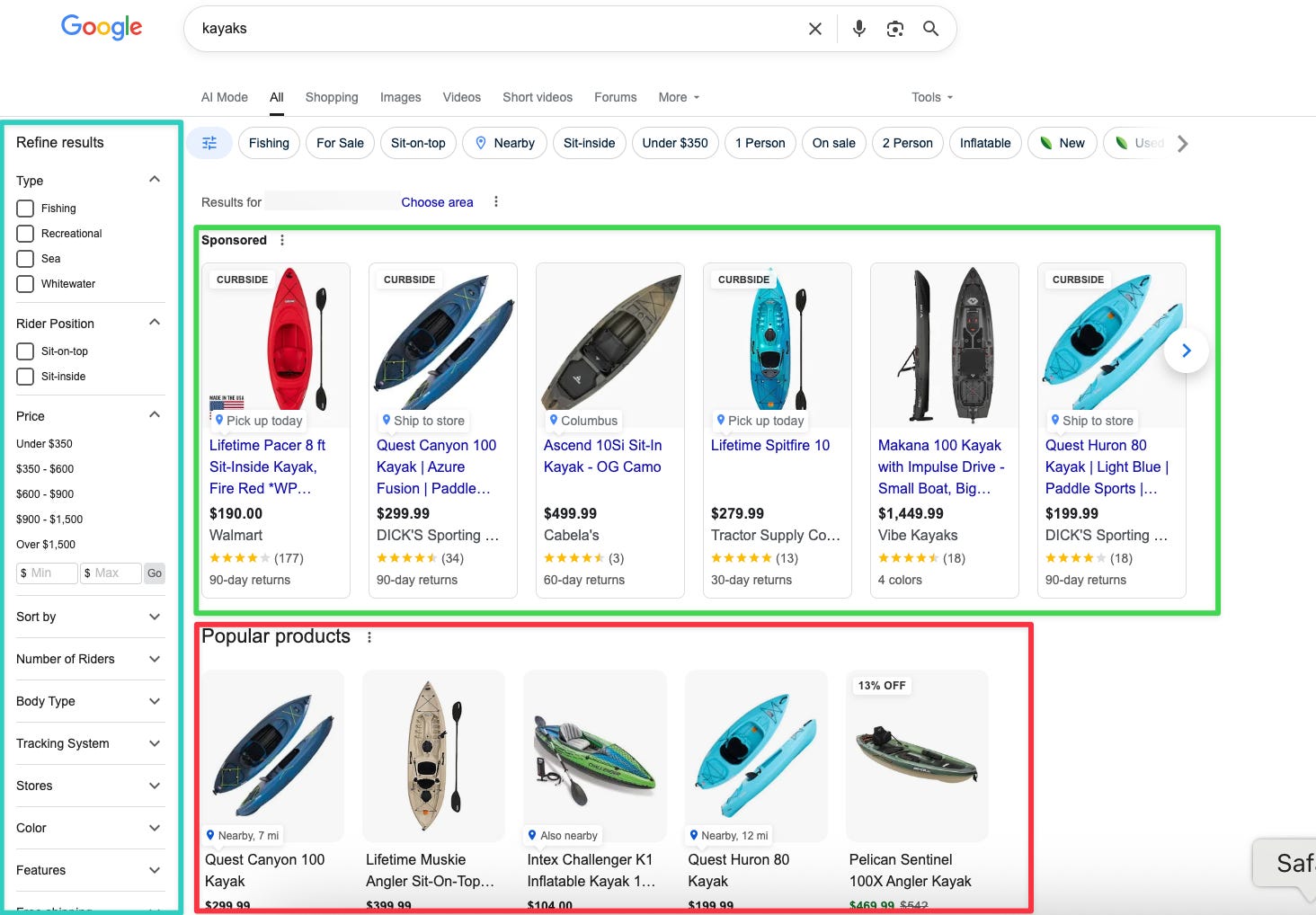

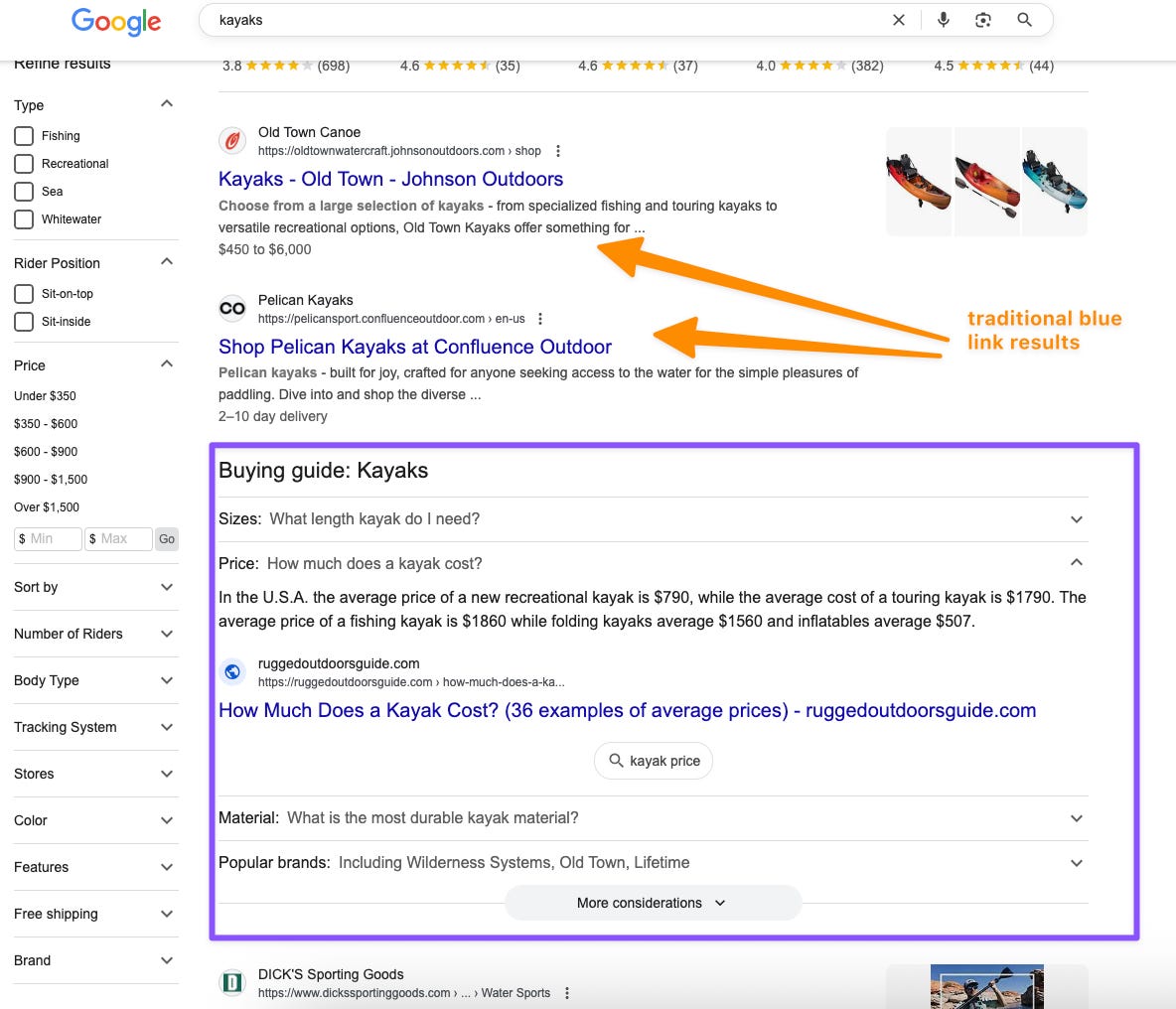

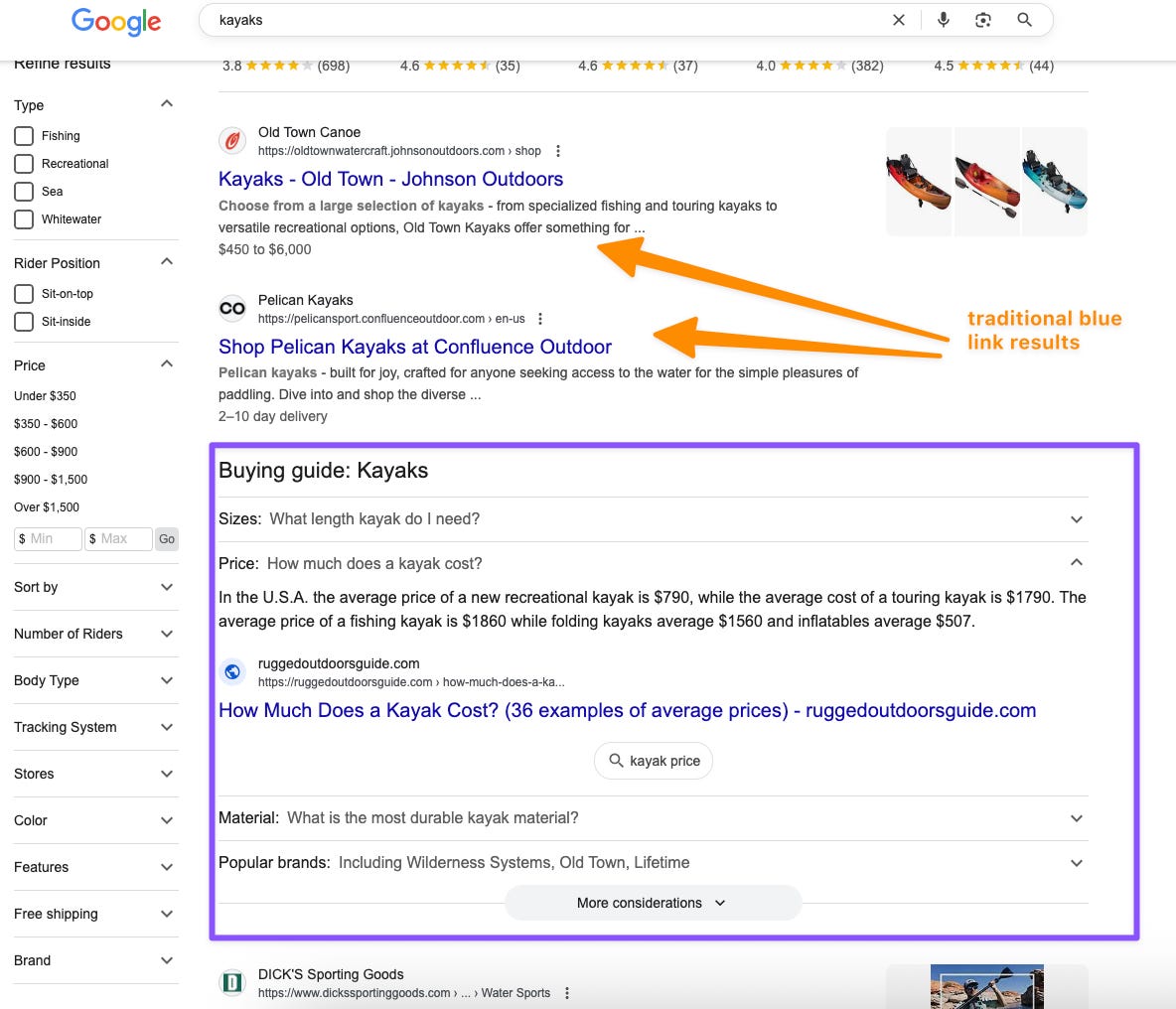

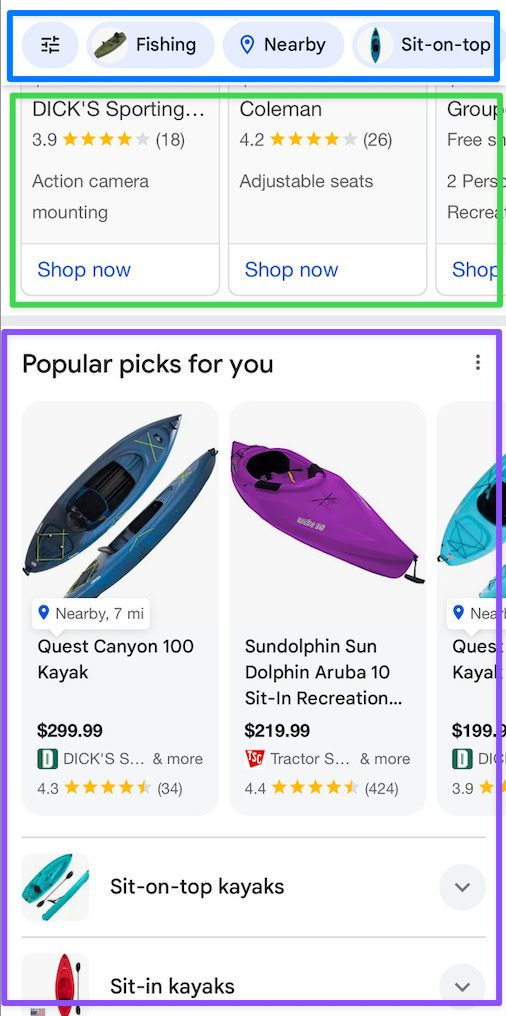

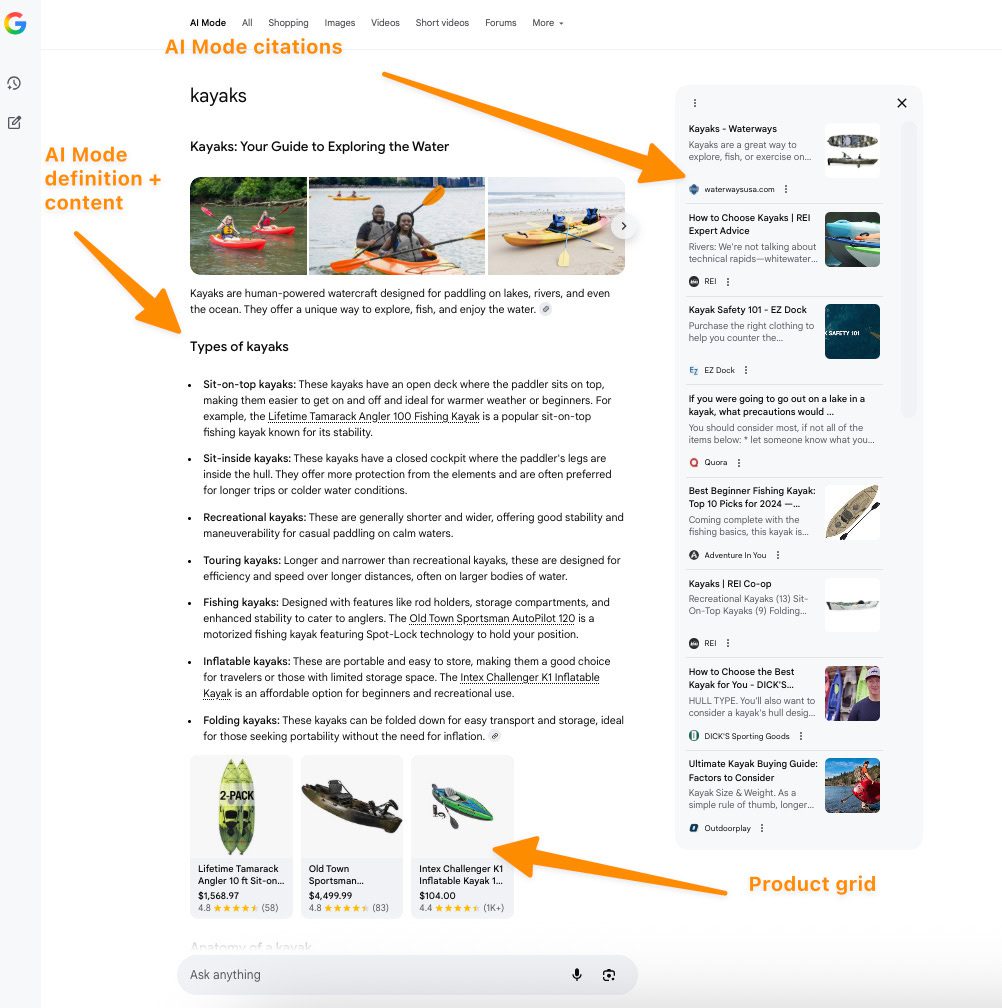

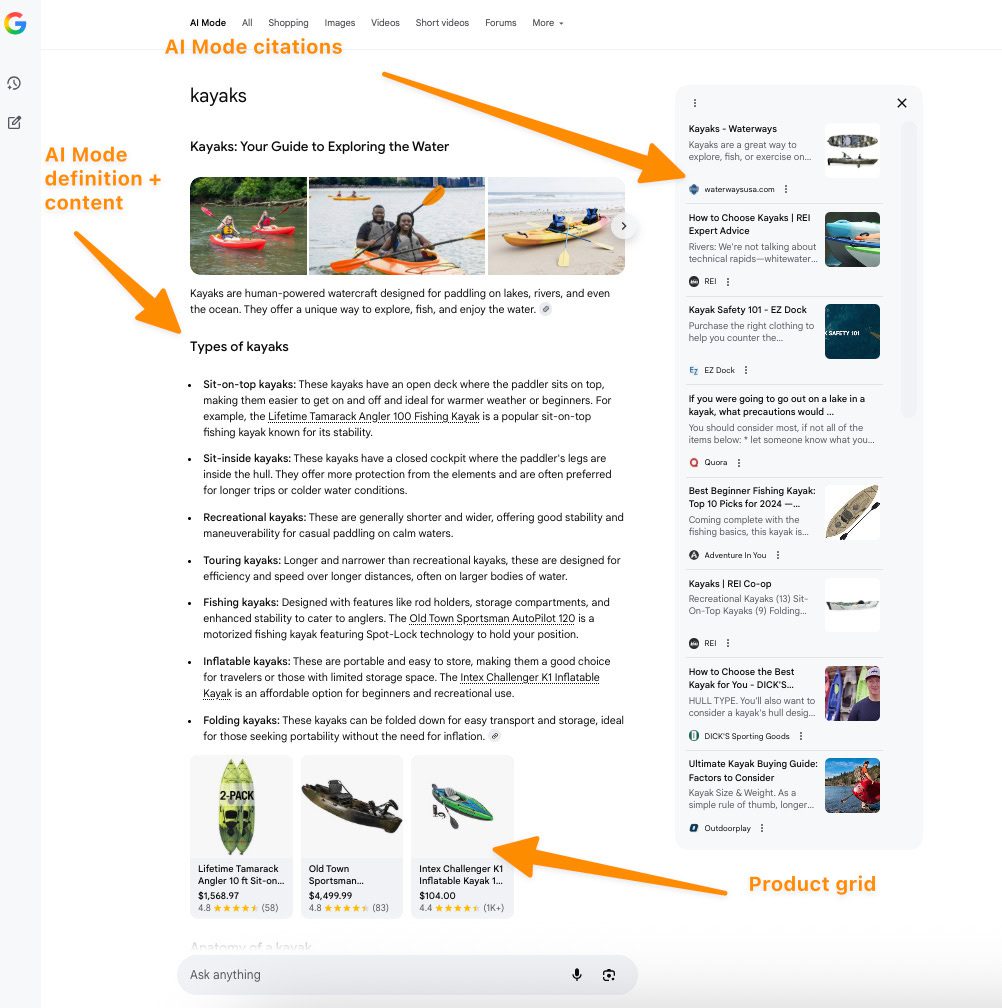

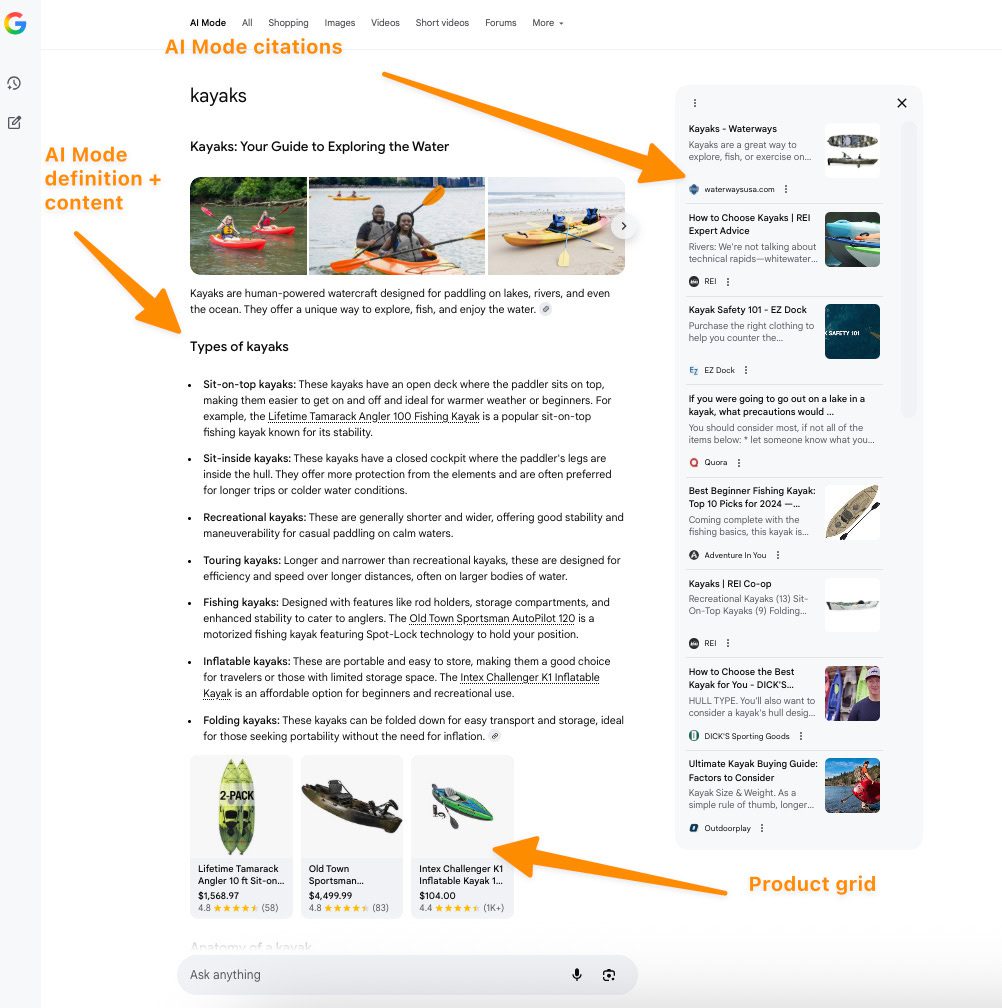

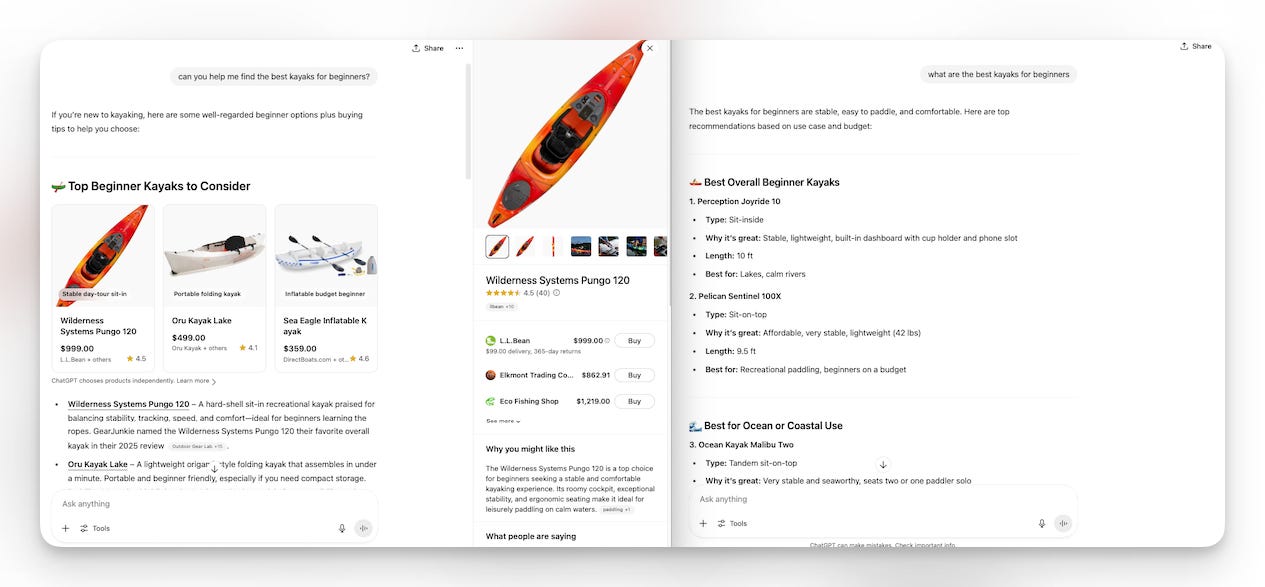

To illustrate with an example, let’s say you are looking for kayaks (summertime!).

On desktop (logged-in), Google will now show you product filters on the left sidebar and “Popular products” carousels in the middle on top of classic organic results, but under ads, of course.

Image Credit: Kevin Indig

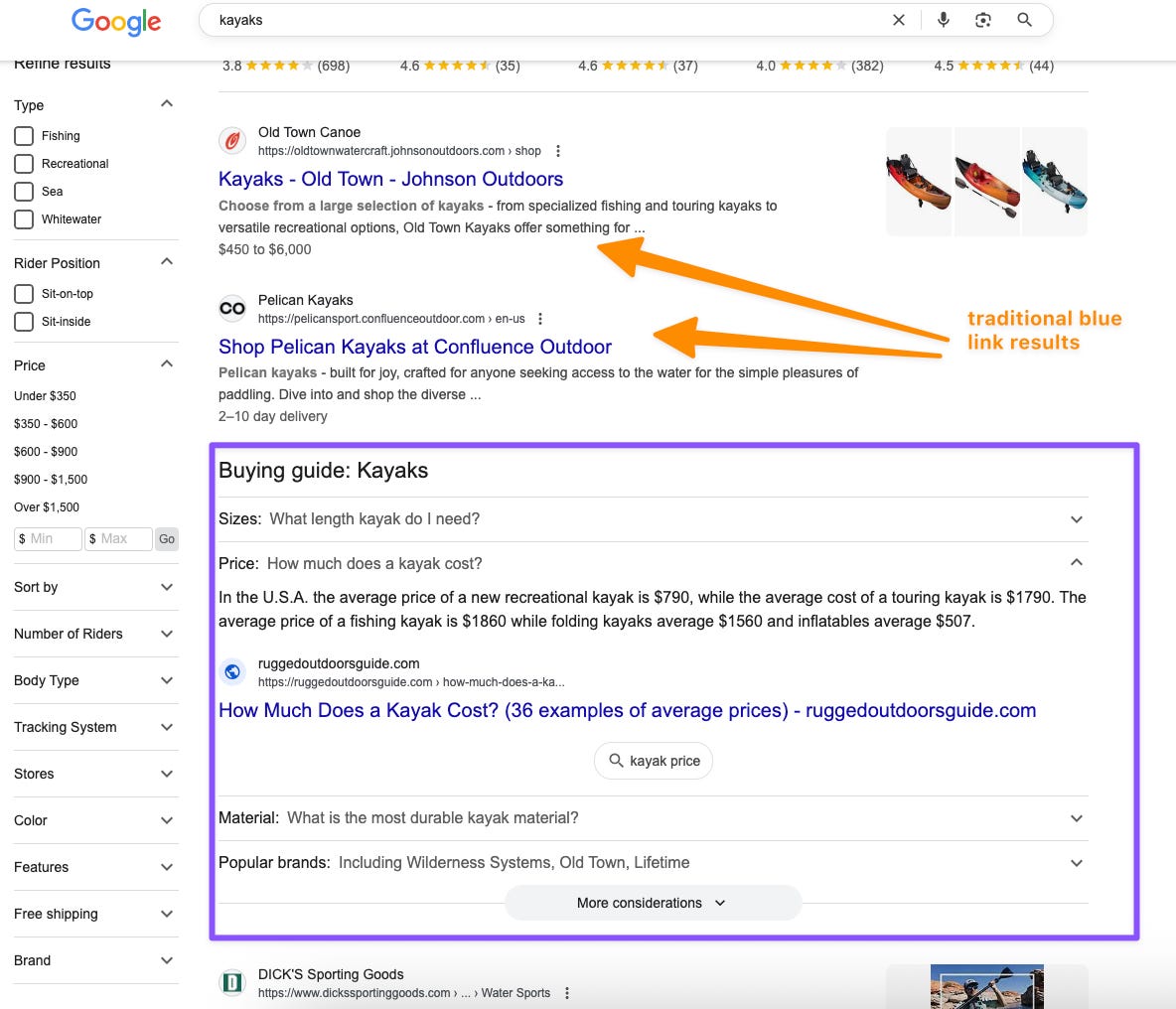

Image Credit: Kevin Indig

Directly under the shopping product grids, you have traditional organic results along with an on-SERP Buying Guide, similar to People Also Ask questions (which is also included further down the page).

Both the Buying Guide and People Also Ask features deliver answers with links to original content.

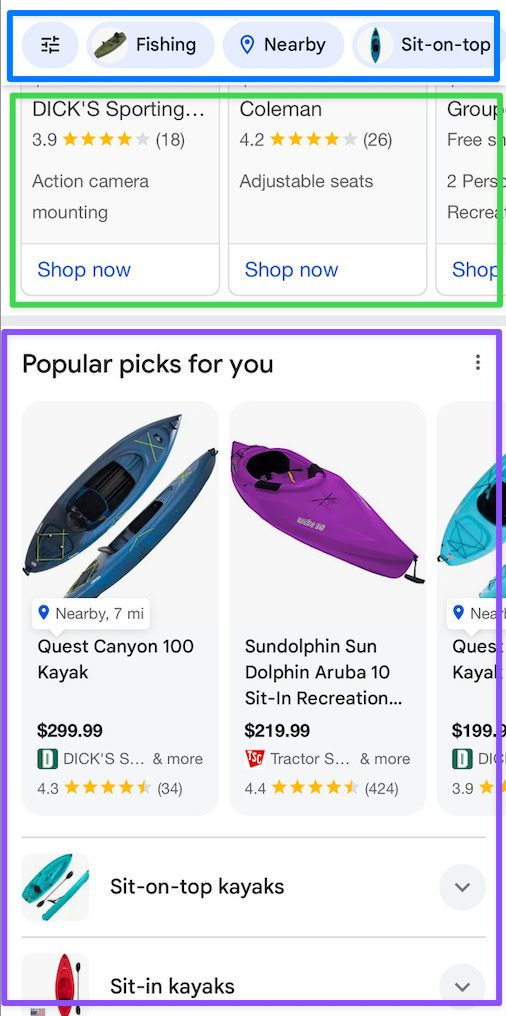

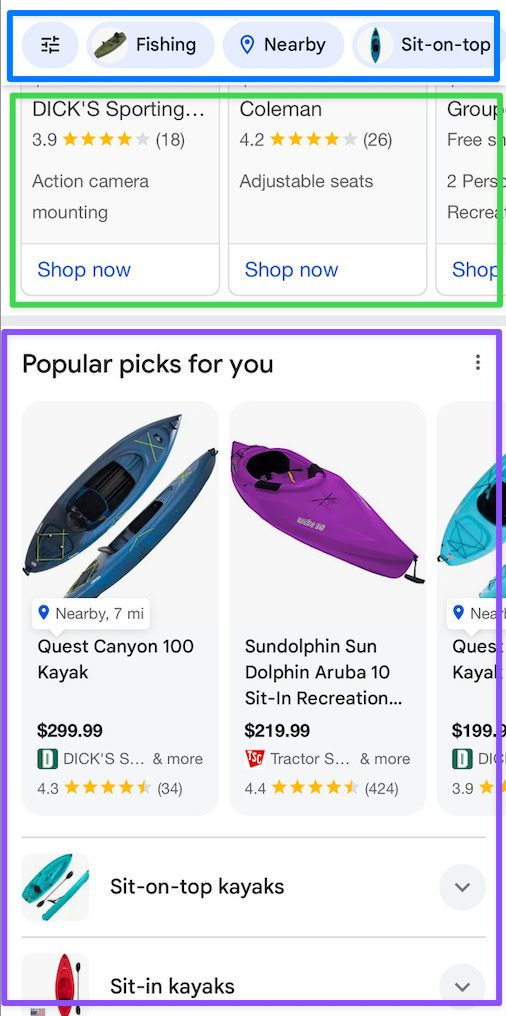

On mobile, you get product filters at the top, ads above organic results, and product carousels in the form of Popular products or “Products for you.”

This experience doesn’t look very different from Amazon … which is the whole point.

Google’s shopping experience lets users explore products on a variety of marketplaces, like Amazon, Walmart, eBay, Etsy, & Co.

From an SEO perspective, the prominent position of the product grid (listings) and filters likely has a significant impact on CTR, organic visibility, and ultimately, revenue.

But let’s take a look at the same search via AI Mode.

Below is the desktop experience via Chrome.

I’ve zoomed out here so you get the whole view, but it takes the user two to three scrolls to get to the product grid when in a standard view.

Here on mobile, getting to product recommendations takes several scrolls. In one instance, I received a result that included a list of places near me in my city where I could get a kayak.

Keeping the current Google shopping SERP experience in mind, here’s what the data shows.

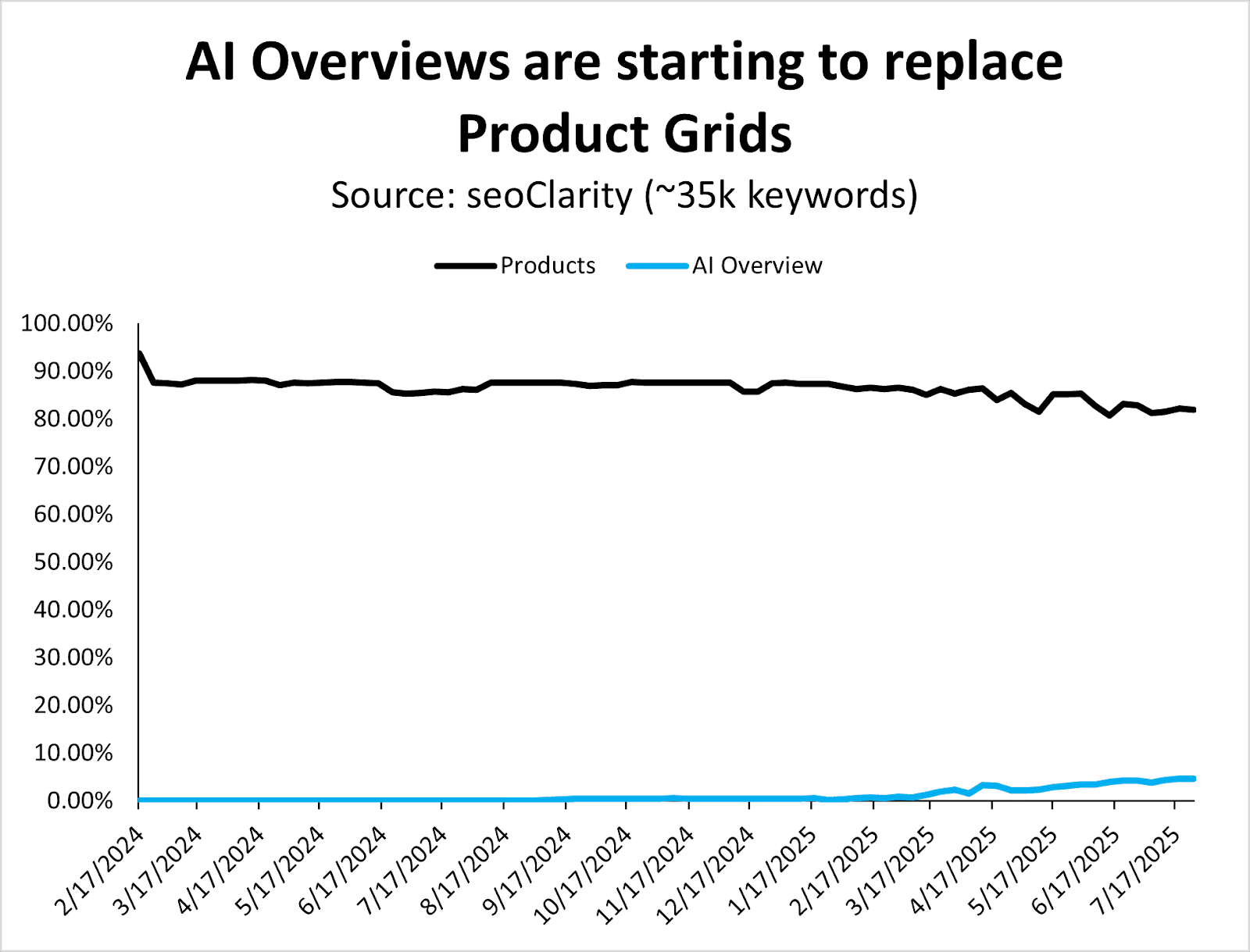

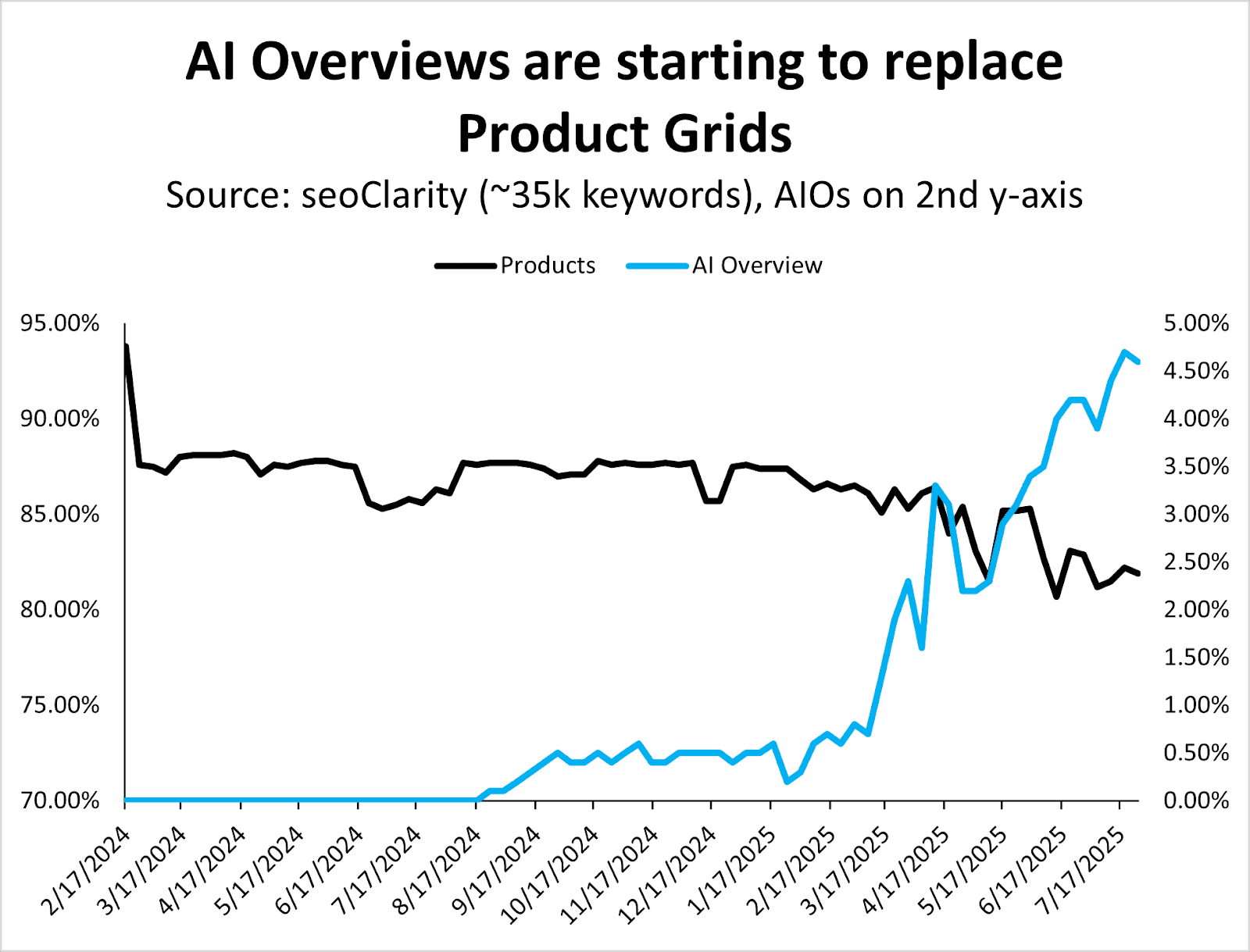

The most noteworthy shift found in the data, as you can probably guess, involves AI Overviews.

Since March 2025, when Google began rolling out AI Overviews more aggressively, they’ve also started replacing (organic) product grids.

The graph above might look like it represents minimal changes when you examine it in a timeline view, but you can see the trend even better when moving AIOs to a second y-axis (below).

I expect AI Overviews to still show the product grids searchers have become accustomed to, although they might take a different form.

When searching for [which camera tripod should I buy?], for example, we find an AI Overview at the top with specific product recommendations.

Of course, AI Mode takes that a step further with richer product recommendations and buying guides.

(Shoutout to The New York Times and the other five sources for this AI Mode answer … which now don’t see an ad impression or affiliate click.)

As a result of this shift, which I predict will only increase over time, tracking your brand mentions and product links in AI Overviews becomes critical. Skip this at your own risk.
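As a rough illustration of what that tracking could look like, here is a minimal sketch that checks one captured AI Overview for brand mentions and for cited links pointing to your own domain. It assumes you already have the overview text and its cited URLs from whatever rank tracker or manual capture you use. The function name `audit_ai_overview`, the input format, the brand "Acme," and the example domains are all hypothetical.

```python
import re
from urllib.parse import urlparse


def audit_ai_overview(overview_text, cited_urls, brand_terms, own_domain):
    """Report brand mentions and own-domain citations for one AI Overview capture."""
    # Whole-word, case-insensitive match for each brand term.
    mentions = [
        term
        for term in brand_terms
        if re.search(rf"\b{re.escape(term)}\b", overview_text, flags=re.IGNORECASE)
    ]
    # Keep cited URLs whose host is the domain itself or one of its subdomains.
    own_links = []
    for url in cited_urls:
        host = (urlparse(url).hostname or "").lower()
        if host == own_domain or host.endswith("." + own_domain):
            own_links.append(url)
    return {"mentioned": bool(mentions), "mentions": mentions, "own_links": own_links}


# Made-up capture for the query [which camera tripod should I buy?].
report = audit_ai_overview(
    overview_text="For beginners, the Acme TrailLite is a sturdy budget tripod.",
    cited_urls=[
        "https://www.acme.example/tripods/traillite",
        "https://reviews.example.org/best-tripods",
    ],
    brand_terms=["Acme"],
    own_domain="acme.example",
)
print(report)
```

Running a check like this per keyword and per capture date gives you two separate signals to trend over time: whether the brand is named at all, and whether the overview actually links to you.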

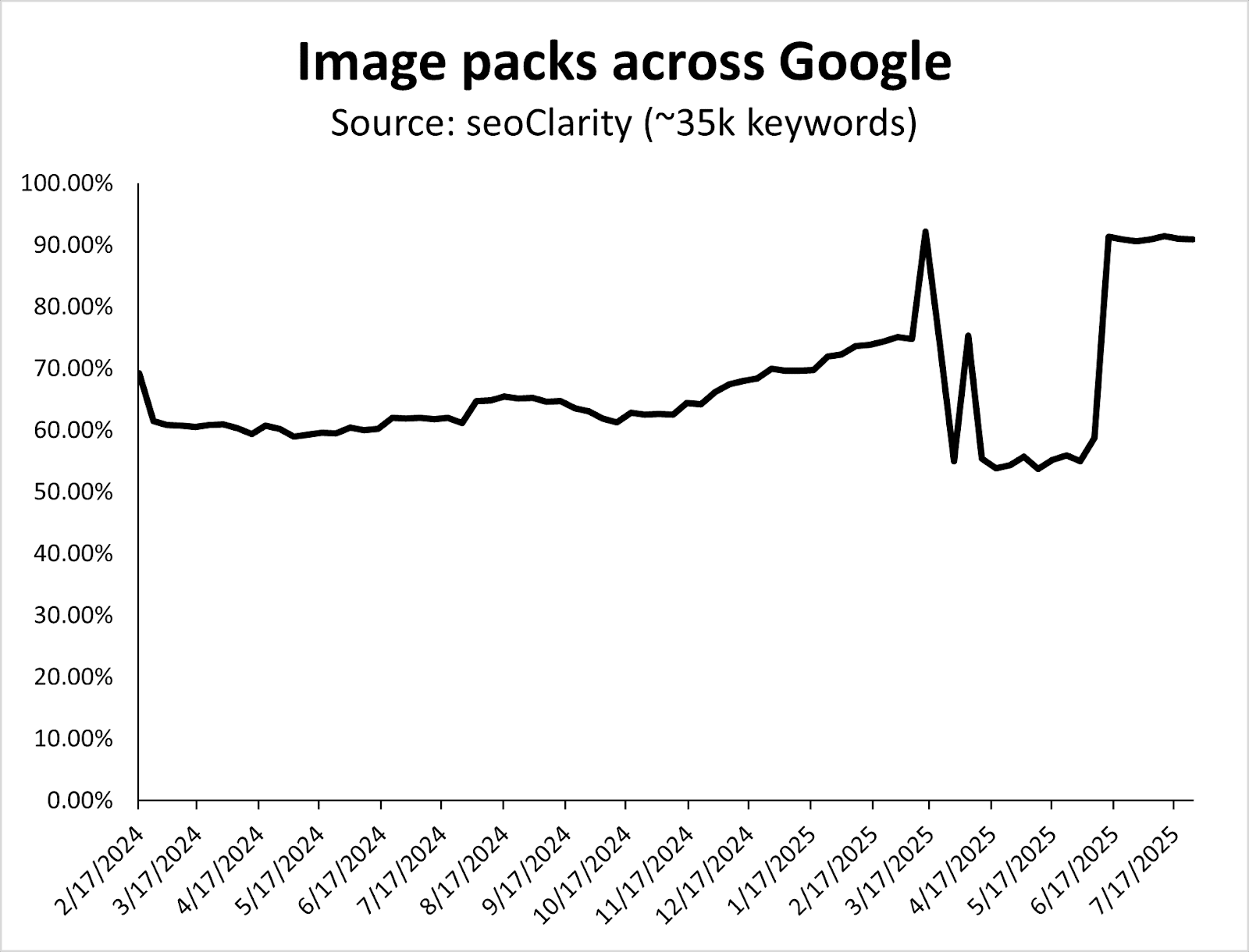

Here, you’ll see the increase in image packs over time, with a big shift in March 2025.

Image packs for ecommerce-related queries grew from ~60% in 2024 to a new baseline of over 90% of keywords in 2025.

Also, notice how Google systematically tests SERP layouts between core updates (e.g., the dip in the graph above happens between the March and June 2025 Core Updates).

Having strong product images, which are properly optimized, continues to be crucial for ecommerce search.

Since January 2025, Google has shown more People Also Ask (PAA) features at the cost of Discussions & Forums.

Even though Reddit is the second most visible site on the web, I’m surprised to see more PAA – two years after Google removed FAQ rich snippets from the SERPs.

This is something you want to consider tracking for queries that are directly related to your products, if you’re not doing so already. (You can do this in classic SEO tools like Semrush or Ahrefs, for example.)

Since August 2024, Google has systematically reduced the number of videos in the ecommerce search results.

It seems that images have taken a lot of the real estate that videos used to own.

As a result, videos are less important in ecommerce search, while images are increasingly important.

If you’ve been creating and optimizing videos and haven’t seen the SEO results you wanted for your products/site, this could be your signal to invest in other types of content.

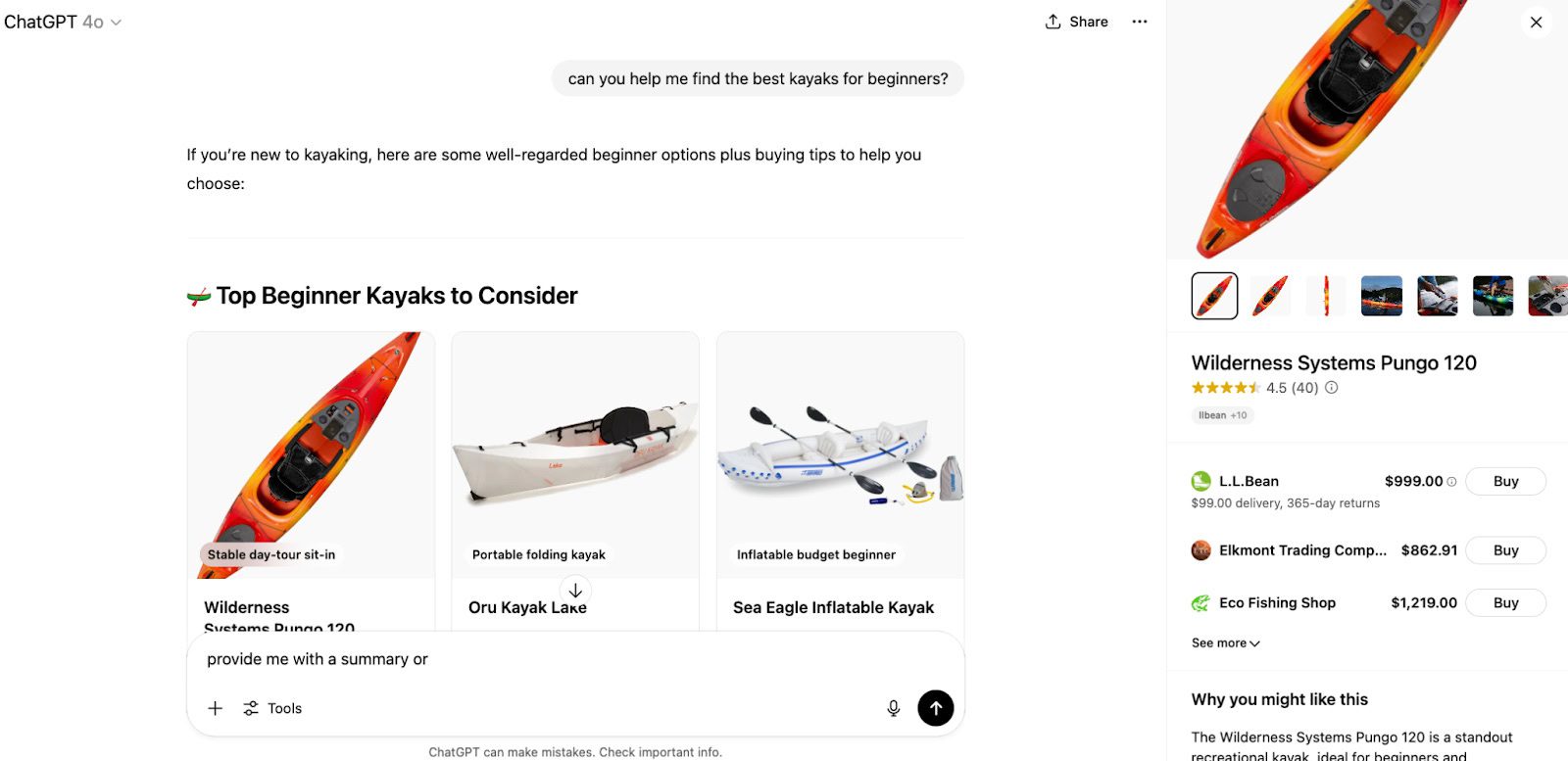

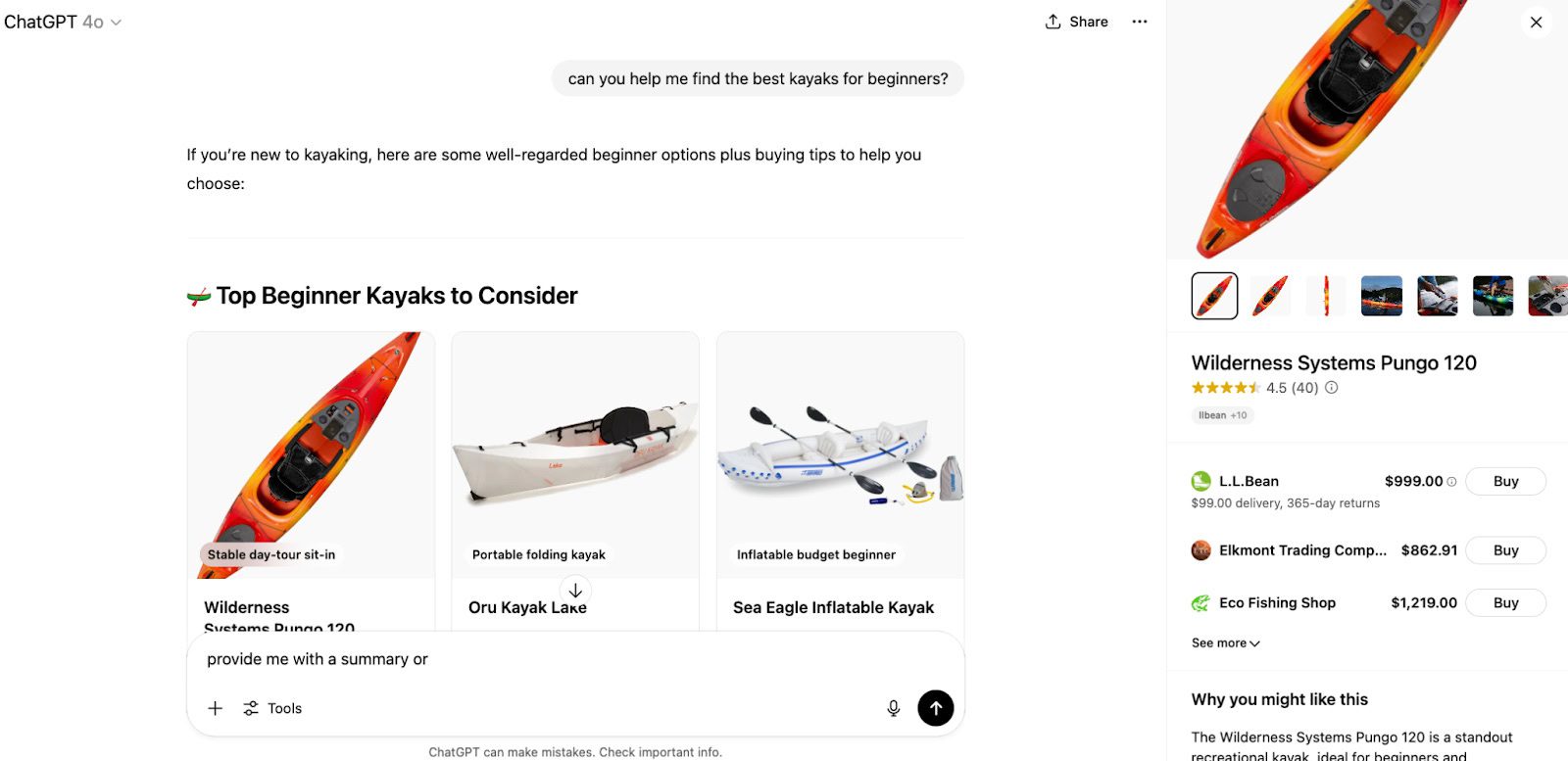

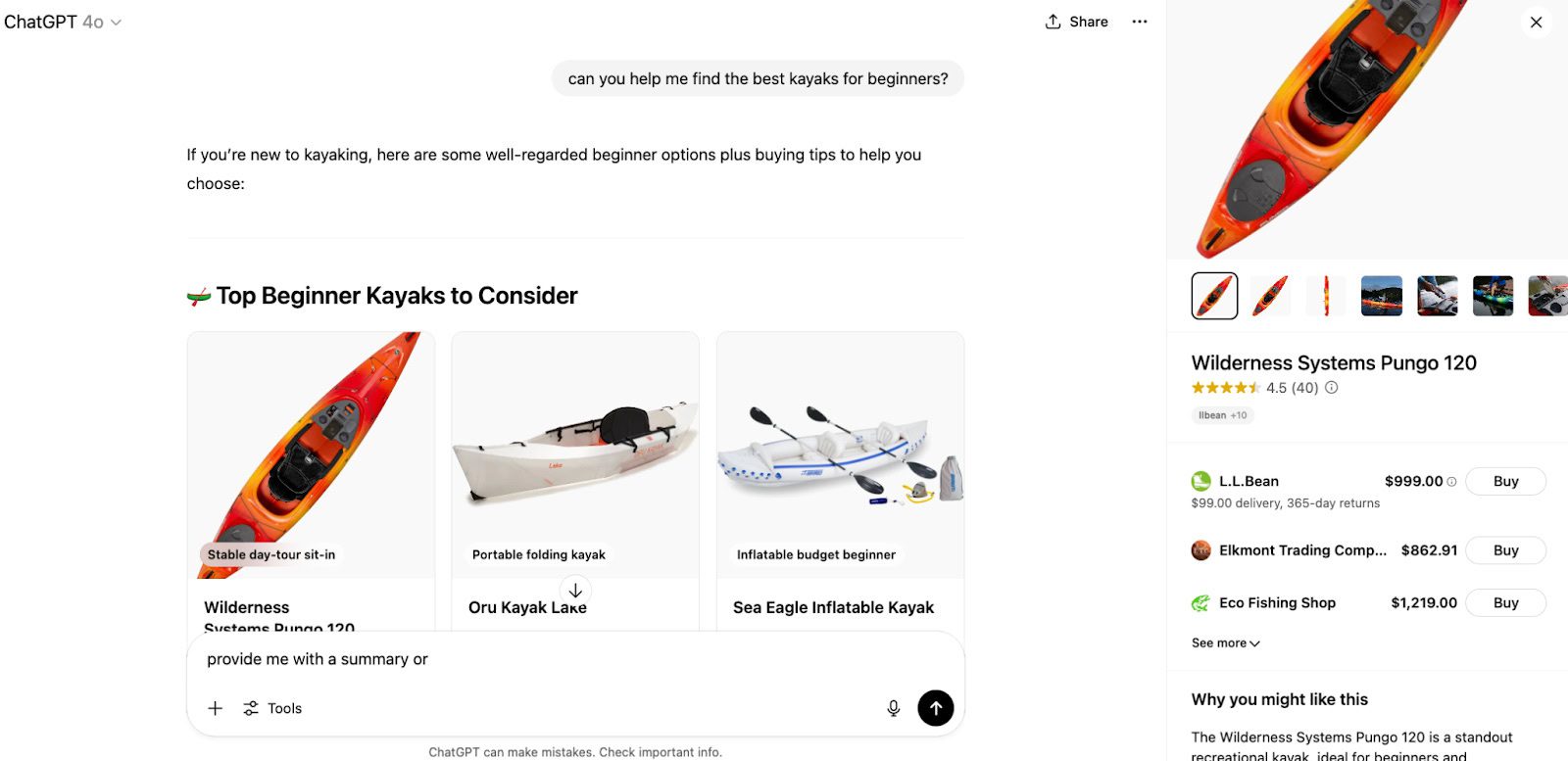

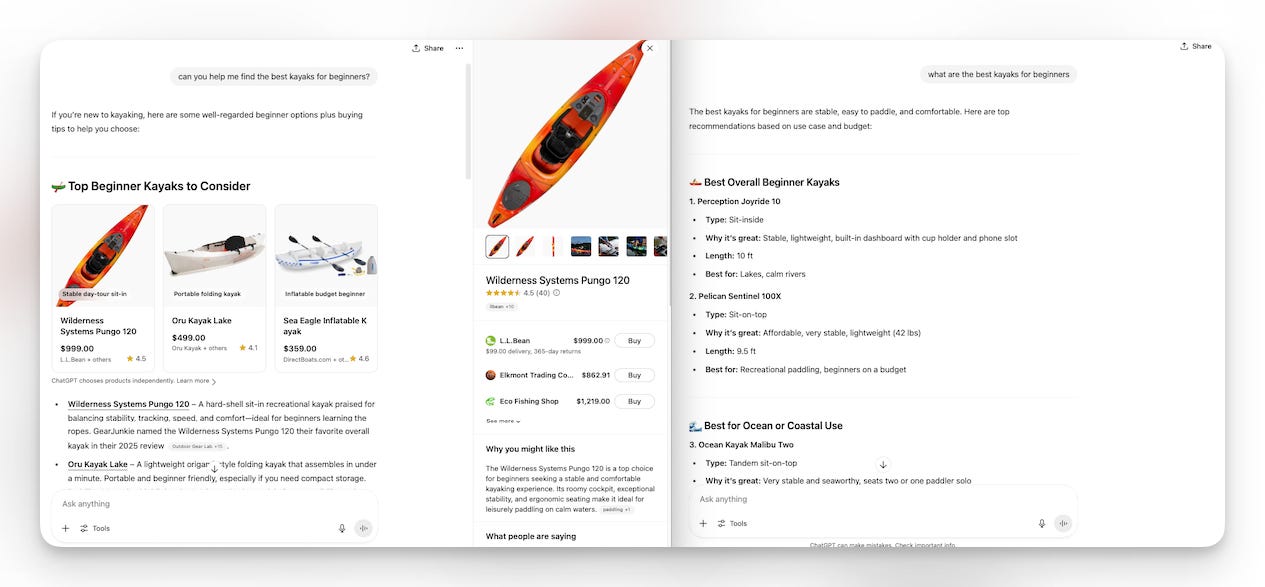

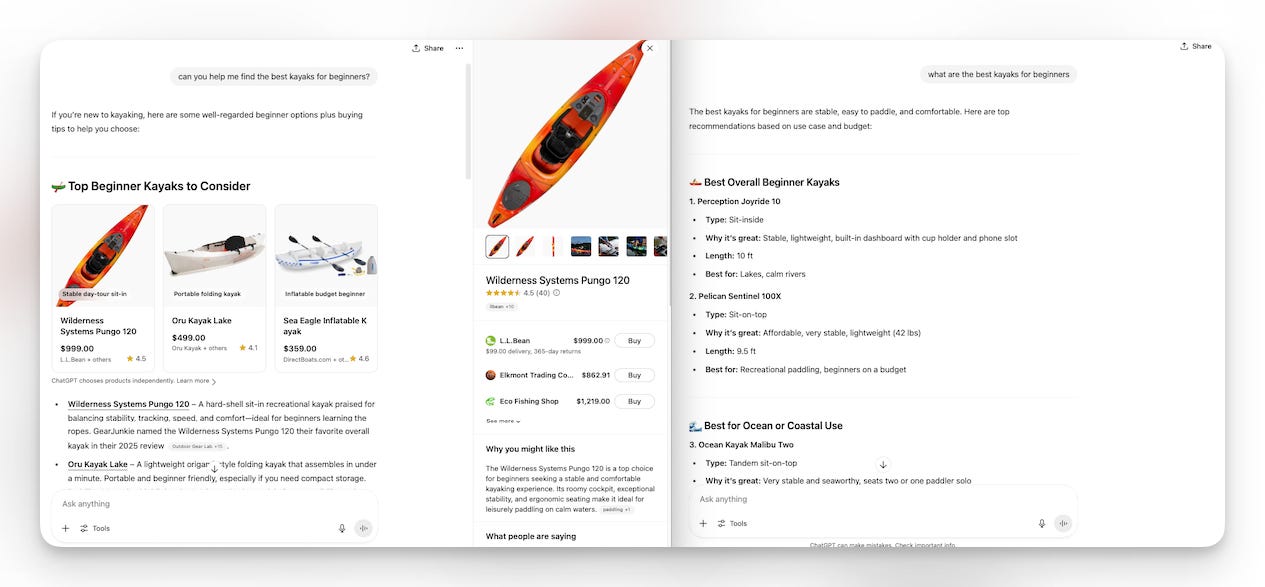

While this analysis covers Google SERP data specifically, it’d be a miss to not discuss the new shopping features in ChatGPT.

However, we don’t yet have months and months of data on LLM-based conversational product recommendations to give us good, clear information, so I anticipate there will be more analysis ahead once more time passes.

ChatGPT’s shopping experience is starting to look a lot like Google’s – but with a twist: Instead of viewing lists of blue links or multiple product grids, it curates a conversational shortlist with minimal product listings included.

No affiliate links and no paid ads (yet).

OpenAI integrates real-time product data from tools like Klarna and Shopify, allowing ChatGPT to surface up-to-date prices, availability, reviews, and product details in a shoppable card-style format.

ChatGPT also offers a “Why you might like this” and “What people are saying” generative summary when a specific product is clicked.

OpenAI offers the following guidance about how these products are selected [source]:

A product appears in the visual carousel when ChatGPT perceives it’s relevant to the user’s intent. ChatGPT assesses intent based on the user’s query and other available context, such as memories or custom instructions….

When determining which products to surface, ChatGPT considers:

• Structured metadata from third-party providers (e.g., price, product description) and other third-party content (e.g., reviews).

• Model responses generated by ChatGPT before it considers any new search results. Learn more.

• OpenAI safety standards.

Depending on the user’s needs, some of these factors will be more relevant than others. For example, if the user specifies a budget of $30, ChatGPT will focus more on price, whereas if price isn’t important, it may focus on other aspects instead.

OpenAI also explains how merchants are selected for products [source]:

When a user clicks on a product, we may show a list of merchants offering it. This list is generated based on merchant and product metadata we receive from third-party providers. Currently, the order in which we display merchants is predominantly determined by these providers….

To that end, we’re exploring ways for merchants to provide us their product feeds directly, which will help ensure more accurate and current listings. If you’re interested in participating, complete the interest form here, and we’ll notify you once submissions open.

That being said, it takes some trial and error to trigger product recommendations directly in the chat.

For instance, the prompt [can you help me find the best kayaks for beginners] results in an output that includes product recommendations, while the query [what are the best kayaks for beginners] results in a list without shopping results, features, or links.

Prompts with action-oriented language like “can you help me” and “will you find” may have a higher likelihood of offering shopping results directly in the chat, while queries like “what is the best,” “what are the best,” and “compare the features of” may return a list of recommendations without shopping features.

Featured Image: Paulo Bobita/Search Engine Journal