Research Confirms Google AIO Keyword Trends via @sejournal, @martinibuster

New research by enterprise search marketing company BrightEdge reveals dramatic changes to the sites surfaced through Google’s AI Overviews search feature. And although Google maintains its search market share, the data shows that AI search engine Perplexity is gaining ground at a remarkable pace.

Rapid & Dramatic Changes In AIO Triggers

The words that trigger AI Overviews are changing at an incredibly rapid pace. Some keyword trends observed in June may have already changed in July.

AI Overviews were triggered 50% more times for keywords with the word “best” in them. But Google may have reversed that behavior because those phrases, when applied to products, don’t appear to be triggering AIOs in July.

Other AIO triggers for June 2024:

- “What Is” keywords increased by 20%

- “How to” queries increased by 15%

- Queries with the phrase “symptoms of” increased by about 12%

- Queries with the word “treatment” increased by 10%

A spokesperson from BrightEdge responded to my questions about ecommerce search queries:

“AI’s prevalence in ecommerce is indeed increasing, with a nearly 20% rise in ecommerce keywords showing AI overviews since the beginning of July, and a dramatic 62.6% increase compared to the last week of June. Alongside this growth, we’re seeing a significant 66.67% uptick in product searches that contain both pros and cons from the AI overview. This dual trend indicates not only more prevalent use of AI in ecommerce search results but also more comprehensive and useful information being provided to consumers through features like the pros/cons modules.”

Google Search And AI Trends

BrightEdge used its proprietary BrightEdge Generative Parser™ (BGP) tool to identify key trends in search that may influence digital marketing for the rest of 2024. BGP is a tool that collects massive amounts of search trend data and turns it into actionable insights.

Their research estimates that each percentage point of search market share represents $1.2 billion, which means that gains as small as single digits are still incredibly valuable.

Jim Yu, founder and executive chairman of BrightEdge, noted:

“There is no doubt that Google’s dominance remains strong, and what it does in AI matters to every business and marketer across the planet.

At the same time, new players are laying new foundations as we enter an AI-led multi-search universe. AI is in a constant state of progress, so the most important thing marketers can do now is leverage the precision of insights to monitor, prepare for changes, and adapt accordingly.”

Google continues to be the most dominant source of search traffic, driving approximately 92% of organic search referrals. A remarkable data point from the research is that AI competitors in all forms have not yet made a significant impact as a source of traffic, which deflates speculation that AI competitors are already cutting into Google’s search traffic.

Massive Decrease In Reddit & Quora Referrals

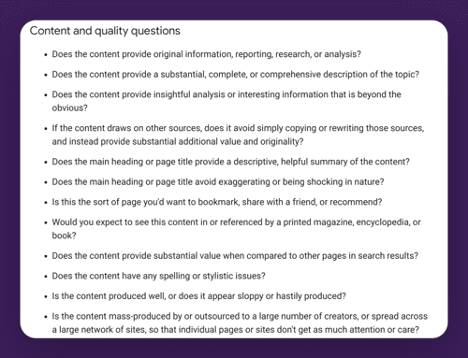

Of interest to search marketers is that Google has followed through on reducing the amount of user generated content (UGC) surfaced through its AI Overviews search feature. UGC is responsible for many of the outrageously bad responses that generated negative press. BrightEdge’s research shows that referrals to Reddit and Quora from AI Overviews declined to “near zero” in the month of June.

Citations to Quora from AI Overviews are reported to have decreased by 99.69%. Reddit fared marginally better in June, with an 85.71% decrease.

BrightEdge’s report noted:

“Google is prioritizing established, expert content over user discussions and forums.”

Bing, Perplexity And Chatbot Impact

Market share for Bing continues to increase but only by fractions of a percentage point, growing from 4.2% to 4.5%. But as they say, it’s better to be moving forward than standing still.
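To put that fraction of a point in perspective, here is a rough sketch that applies BrightEdge's estimate of $1.2 billion per percentage point of search market share to Bing's move from 4.2% to 4.5%. The function name `share_shift_value` is made up for illustration, and the calculation assumes the per-point estimate applies evenly to any engine's share, which the research does not explicitly state.

```python
# Assumption: BrightEdge's estimate that one percentage point of
# search market share represents roughly $1.2 billion.
VALUE_PER_POINT_USD = 1.2e9


def share_shift_value(old_share_pct: float, new_share_pct: float) -> float:
    """Estimate the dollar value of a market share change (in percentage points)."""
    return (new_share_pct - old_share_pct) * VALUE_PER_POINT_USD


# Bing's reported move from 4.2% to 4.5% is a 0.3 point gain.
bing_gain = share_shift_value(4.2, 4.5)
print(f"Estimated value of Bing's gain: ${bing_gain / 1e6:,.0f} million")
```

Under those assumptions, a 0.3 point gain works out to roughly $360 million, which is why even small share movements get attention.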

Perplexity, on the other hand, is growing at a monthly rate of 31%. Percentages, however, can be misleading because 31% of a relatively small number is still a relatively small number. Most publishers aren’t talking about all the traffic they’re getting from Perplexity, so it still has a way to go. Nevertheless, a monthly growth rate of 31% is movement in the right direction.
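A quick sketch shows why a 31% monthly rate is worth watching even from a small base. It assumes, purely for illustration, that the rate holds constant month after month, which the research does not claim. The starting value of 1.0 is an arbitrary baseline, not a real traffic figure.

```python
import math


def compound(start: float, monthly_rate: float, months: int) -> float:
    """Value after compounding `monthly_rate` for `months` months."""
    return start * (1 + monthly_rate) ** months


MONTHLY_RATE = 0.31  # the growth rate reported for Perplexity

# Relative size after 1, 3, 6 and 12 months, starting from a baseline of 1.0.
for months in (1, 3, 6, 12):
    print(f"{months:>2} months: {compound(1.0, MONTHLY_RATE, months):.2f}x")

# Months needed to double at a constant rate.
doubling_months = math.log(2) / math.log(1 + MONTHLY_RATE)
print(f"Doubling time: {doubling_months:.1f} months")
```

At a constant 31%, the baseline doubles in under three months and grows more than twentyfold in a year, although a small number multiplied many times over can still be small next to Google's referral volume.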

Traffic from chatbots isn’t really a thing yet, so this comparison should be put into that perspective. Sending referral traffic to websites isn’t really what chatbots like Claude and ChatGPT are about (at this point in time). The data shows that neither Claude nor ChatGPT is sending much traffic.

OpenAI, however, is hiding referrals from the websites that it’s sending traffic to, which makes that traffic difficult to track. A full understanding of the impact of LLM traffic is therefore not yet possible, because ChatGPT uses a rel=noreferrer HTML attribute, which hides the origin of visits sent from ChatGPT to websites. The use of the rel=noreferrer link attribute is not unusual, though, because it’s an industry standard for privacy and security.
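The effect on analytics can be sketched in a few lines. When a link carries rel=noreferrer, the browser omits the Referer header, so the receiving site cannot tell where the visit came from. The function `classify_visit` and the `KNOWN_SOURCES` mapping below are hypothetical, simplified stand-ins for what an analytics tool does, not any vendor's actual logic.

```python
from typing import Optional
from urllib.parse import urlparse

# Hypothetical, deliberately tiny mapping of referrer hosts to traffic sources.
KNOWN_SOURCES = {
    "www.google.com": "google",
    "www.bing.com": "bing",
    "www.perplexity.ai": "perplexity",
}


def classify_visit(referer_header: Optional[str]) -> str:
    """Attribute a visit to a source based on its Referer header, if any."""
    if not referer_header:
        # No header at all: this is what a rel=noreferrer link produces,
        # so the visit is indistinguishable from direct traffic.
        return "direct/unknown"
    host = urlparse(referer_header).hostname or ""
    return KNOWN_SOURCES.get(host, "other")


print(classify_visit("https://www.perplexity.ai/search?q=example"))
print(classify_visit(None))  # a click from a rel=noreferrer link
```

A visit arriving without the header lands in the same bucket as someone typing the URL directly, which is why referrals hidden this way are hard to measure.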

BrightEdge’s analysis looks at this from a long-term perspective and anticipates that referral traffic from LLMs will become more prevalent and at some point will become a significant consideration for marketers.

This is the conclusion reached by BrightEdge:

“The overall number of referrals from LLMs is small and expected to have little industry impact at this time. However, if this incremental growth continues, BrightEdge predicts it will influence where people search online and how brands approach optimizing for different engines.”

Before the iPhone existed, many scoffed at the idea of the Internet on mobile devices. So BrightEdge’s conclusions about what to expect from LLMs are not unreasonable.

AIO trends have already changed in July, pointing to the importance of having fresh data for adapting to fast-changing AIO keyword trends. BrightEdge delivers real-time data updated on a daily basis so that marketers can make better informed decisions.

Understand AI Overview Trends:

Ten Observations On AI Overviews For June 2024

Featured Image by Shutterstock/Krakenimages.com
