Is Google Broken Or Are Googlers Right That It’s Working Fine? via @sejournal, @martinibuster

Recent statements by Googlers indicate that the algorithm is working the way it’s supposed to and that site owners should just focus more on their users and less on trying to give the algorithm what it’s looking for. But the same Googlers also say that the search team is working on a way to show more good content.

That can seem confusing: if the algorithm isn’t broken, why are they also working on it as if it’s broken in some way? The answer is a bit surprising.

Google’s Point Of View

It’s important to try to understand what search looks like from Google’s point of view. Google makes that easier with its Search Off The Record (SOTR) podcast, because it’s often just Googlers talking about search from their side of the search box.

In a recent SOTR podcast, Googlers Gary Illyes and John Mueller talked about how something inside Google might break, but from their side of the search box it’s a minor thing, not worth making an announcement. Then people outside of Google notice that something’s broken.

It’s in that context that Gary Illyes made the following statement about deciding whether to “externalize” (communicate) that something is broken.

He shared:

“There’s also the flip side where we are like, ‘Well, we don’t actually know if this is going to be noticed,’ and then two minutes later there’s a blog that puts up something about ‘Google is not indexing new articles anymore. What up?’ And I say, ‘Okay, let’s externalize it.’”

John Mueller then asks:

“Okay, so if there’s more pressure on us externally, we would externalize it?”

And Gary answered:

“Yeah. For sure. Yeah.”

John follows up with:

“So the louder people are externally, the more likely Google will say something?”

Gary answered both yes and no, because sometimes nothing is broken and there’s nothing to announce, even though people are complaining that something is broken.

He explained:

“I mean, in certain cases, yes, but it doesn’t work all the time, because some of the things that people perceive externally as a failure on our end is actually working as intended.”

So sometimes things are working as they should, and what’s broken is on the site owner’s side, though they may not be able to see it for whatever reason. You can tell because people sometimes tweet about getting caught in an update that didn’t happen. For example, some people thought their sites were mistakenly caught in the Site Reputation Abuse crackdown because their sites lost rankings at the same time that the manual actions went out.

The Non-Existent Algorithms

Then there are the people who continue to insist that their sites are suffering from the HCU (the helpful content update), even though there is no HCU system anymore.

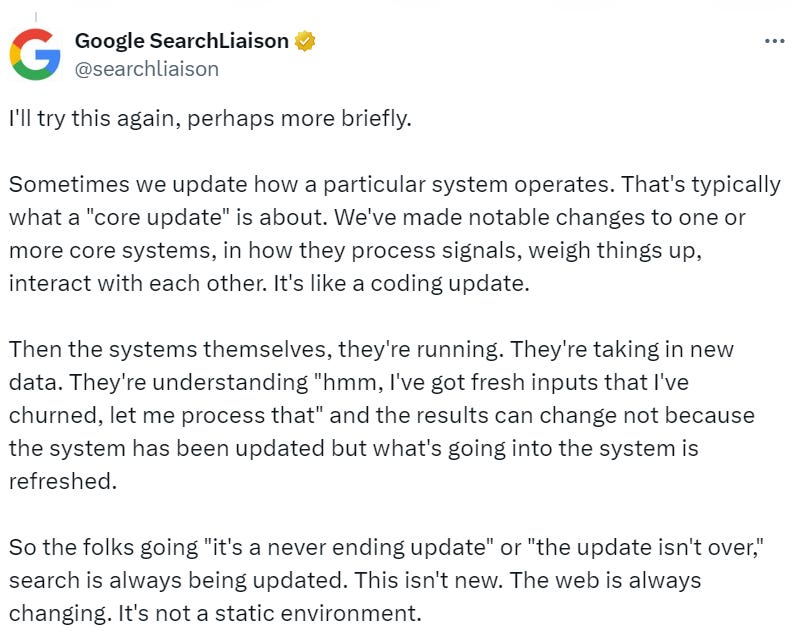

SearchLiaison recently tweeted about people who say they were caught in the HCU:

“I know people keep referring to the helpful content system (or update), and I understand that — but we don’t have a separate system like that now. It’s all part of our core ranking systems: https://developers.google.com/search/help/helpful-content-faq”

That’s a fact: the signals of the HCU are now part of the core algorithm, which consists of many parts, and the single standalone system that used to be the HCU no longer exists. So the algorithm is still looking for helpfulness, but there are other signals as well, because a lot of things change in a core update.

So it may be the case that people should focus less on helpfulness-related signals and be more open to a wider range of possible issues, instead of just one thing (helpfulness), which might not even be the reason a site lost rankings.

Mixed Signals

But then there are the mixed signals, where Googlers say that things are working the way they should but also that the search team is working on showing more sites, which implies the algorithm isn’t working the way it should.

On June 3rd, SearchLiaison discussed how some people who believe they have algorithmic actions against them actually don’t. He was answering a June 3rd tweet by someone who said they were hit by an algorithm update on May 6th and that they don’t know what to fix because they didn’t receive a manual action. Please note that the tweet has a typo: they wrote June 6th when they meant May 6th.

The original June 3rd tweet refers to the site reputation abuse manual actions:

“I know @searchliaison says that there was no algorithmic change on June 6, but the hits we’ve taken since then have been swift and brutal.

Something changed, and we didn’t get the luxury of manual actions to tell us what we did wrong, nor did anyone else in games media.”

Before we get into what SearchLiaison said, note that the above tweet could be seen as an example of focusing on the wrong “signal” or cause, when it might be more productive to stay open to a wider range of possible reasons why the site lost rankings.

SearchLiaison responded:

“I totally understand that thinking, and I won’t go back over what I covered in my long post above other than to reiterate that 1) some people think they have an algorithmic spam action but they don’t and 2) you really don’t want a manual action.”

In the same response, SearchLiaison left the door open to the possibility that search could do better and said the team is looking at how to do that.

He said:

“And I’ll also reiterate what both John and I have said. We’ve heard the concerns such as you’ve expressed; the search team that we’re both part of has heard that. We are looking at ways to improve.”

And it’s not just SearchLiaison leaving the door open to changes at Google that would show more sites; John Mueller said something similar last month.

John tweeted:

“I can’t make any promises, but the team working on this is explicitly evaluating how sites can / will improve in Search for the next update. It would be great to show more users the content that folks have worked hard on, and where sites have taken helpfulness to heart.”

SearchLiaison said that they’re looking at ways to improve, and Mueller said they’re evaluating how sites “can / will improve in Search for the next update.” So how does one reconcile the claim that something is working the way it’s supposed to with the admission that there’s room for improvement?

Well, one way to look at it is that the algorithm is functional and satisfactory but not perfect. And because nothing is perfect, there is always room for refinement and improvement, which is true of everything, right?

Takeaways:

1. It may be helpful to consider that something that can be refined and made better is not necessarily broken, because nothing is perfect.

2. It may also be productive to remember that helpfulness is just one signal among many, and what looks like an HCU issue might not be one at all, in which case a wider range of possibilities should be considered.

Featured Image by Shutterstock/ViDI Studio