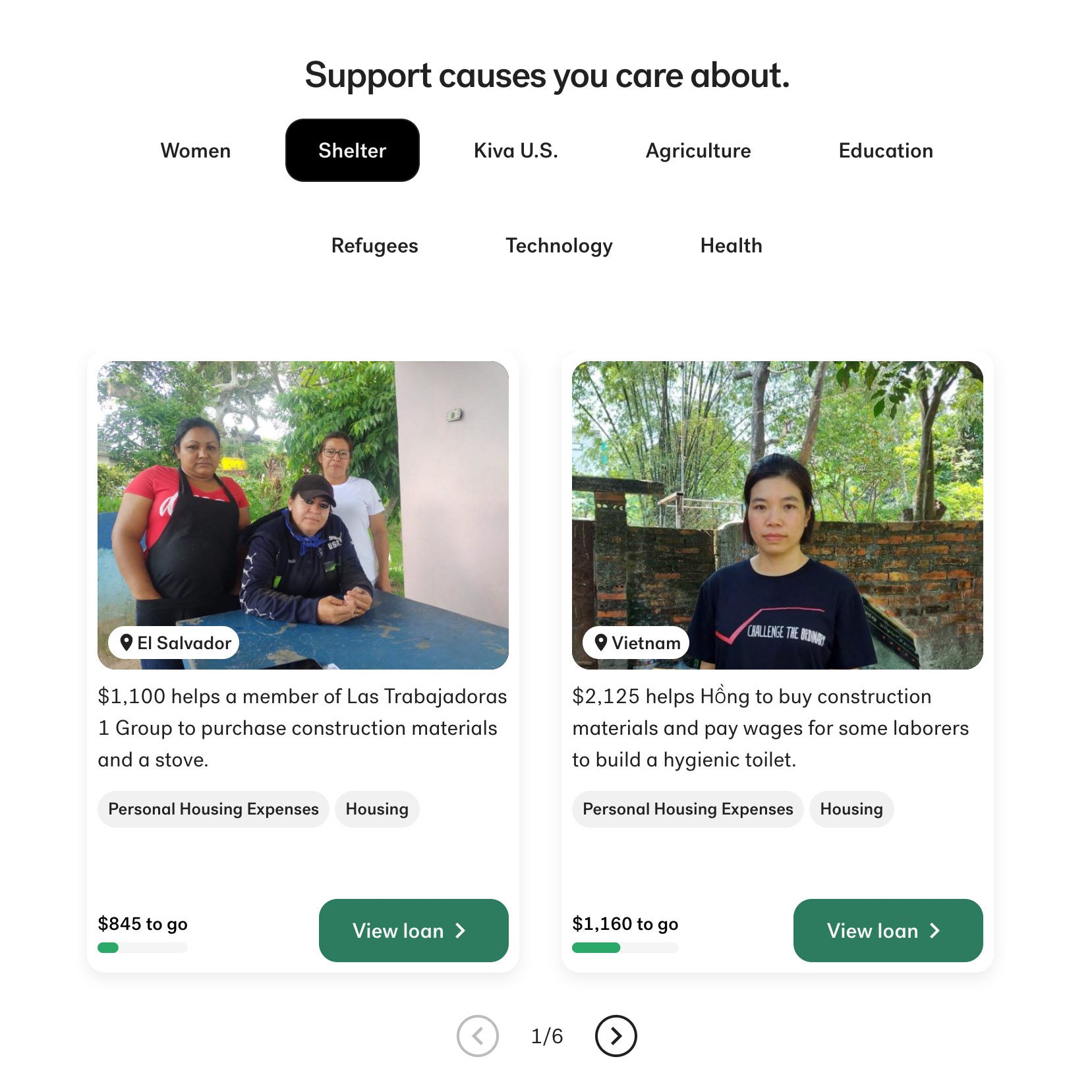

One morning in August 2021, as she had nearly every morning for about a decade, Janice Smith opened her computer and went to Kiva.org, the website of the San Francisco–based nonprofit that helps everyday people make microloans to borrowers around the world. Smith, who lives in Elk River, Minnesota, scrolled through profiles of bakers in Mexico, tailors in Uganda, farmers in Albania. She loved the idea that, one $25 loan at a time, she could fund entrepreneurial ventures and help poor people help themselves.

But on this particular morning, Smith noticed something different about Kiva’s website. It was suddenly harder to find key information, such as the estimated interest rate a borrower might be charged—information that had been easily accessible just the day before and felt essential in deciding who to lend to. She showed the page to her husband, Bill, who had also become a devoted Kiva lender. Puzzled, they reached out to other longtime lenders they knew. Together, the Kiva users combed through blog posts, press releases, and tax filings, but they couldn’t find a clear explanation of why the site looked so different. Instead, they learned about even bigger shifts—shifts that shocked them.

Kiva connects people in wealthier communities with people in poorer ones through small, crowdfunded loans made to individuals through partner companies and organizations around the world. The individual Kiva lenders earn no interest; money is given to microfinance partners for free, and only the original amount is returned. Once lenders get their money back, they can choose to lend again and again. It’s a model that Kiva hopes will foster a perennial cycle of microfinance lending while requiring only a small outlay from each person.

This had been the nonprofit’s bread and butter since its founding in 2005. But now, the Smiths wondered if things were starting to change.

The Smiths and their fellow lenders learned that in 2019 the organization had begun charging fees to its lending partners. Kiva had long said it offered zero-interest funding to microfinance partners, but the Smiths learned that the recently instituted fees could reach 8%. They also learned about Kiva Capital, a new entity that allows large-scale investors, Google among them, to make big investments in microfinance companies and receive a financial return. The Smiths found this strange: thousands of everyday lenders like them had been offering return-free loans for more than a decade. Why should Google now profit off a microfinance investment?

The Kiva users noticed that the changes happened as compensation to Kiva’s top employees increased dramatically. In 2020, the CEO took home over $800,000. Combined, Kiva’s top 10 executives made nearly $3.5 million in 2020. In 2021, nearly half of Kiva’s revenue went to staff salaries.

Considering all the changes, and the eye-popping executive compensation, “the word that kept coming up was ‘shady,’” Bill Smith told me. “Maybe what they did was legal,” he said, “but it doesn’t seem fully transparent.” He and Janice felt that the organization, which relied mostly on grants and donations to stay afloat, now seemed more focused on how to make money than how to create change.

Kiva, on the other hand, says the changes are essential to reaching more borrowers. In an interview about these concerns, Kathy Guis, Kiva’s vice president of investments, told me, “All the decisions that Kiva has made and is now making are in support of our mission to expand financial access.”

In 2021, the Smiths and nearly 200 other lenders launched a “lenders’ strike.” More than a dozen concerned lenders (as well as half a dozen Kiva staff members) spoke to me for this article. They have refused to lend another cent through Kiva, or donate to the organization’s operations, until the changes are clarified—and ideally reversed.

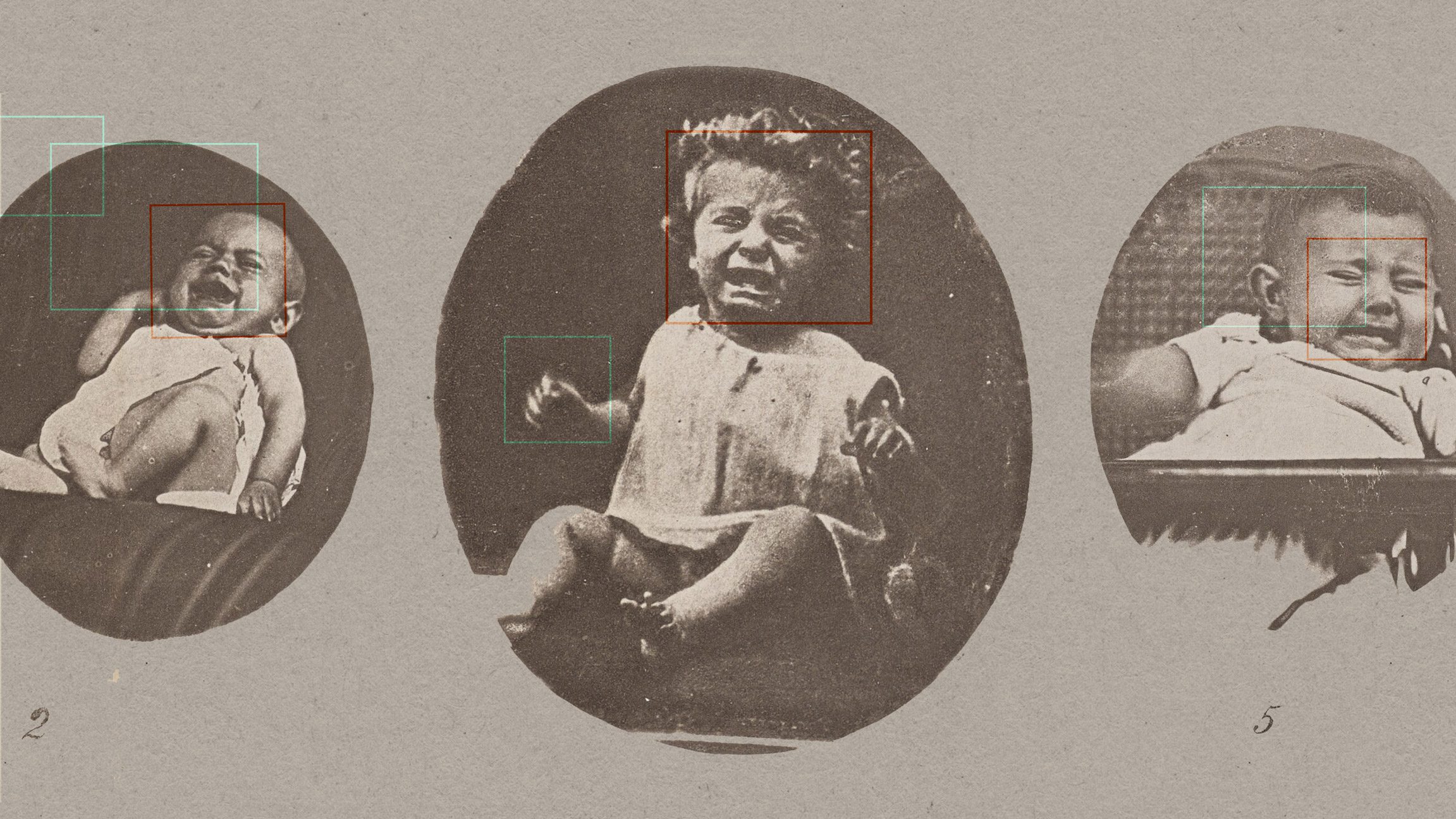

When Kiva was founded in 2005, by Matt Flannery and Jessica Jackley, a worldwide craze for microfinance—sometimes called microcredit—was at its height. The UN had dubbed 2005 the “International Year of Microcredit”; a year later, in 2006, Muhammad Yunus and the Grameen Bank he had founded in the 1980s won the Nobel Peace Prize for creating, in the words of the Nobel Committee, “economic and social development from below.” On a trip to East Africa, Flannery and Jackley had a lightbulb moment: Why not expand microfinance by helping relatively wealthy individuals in places like the US and Europe lend to relatively poor businesspeople in places like Tanzania and Kenya? They didn’t think the loans Kiva facilitated should come from grants or donations: the money, they reasoned, would then be limited, and eventually run out. Instead, small loans—as little as $25—would be fully repayable to lenders.

Connecting wealthier individuals to poorer ones was the “peer-to-peer” part of Kiva’s model. The second part—the idea that funding would be sourced through the internet via the Kiva.org website—took inspiration from Silicon Valley. Flannery and another Kiva cofounder, Premal Shah, both worked in tech—Flannery for TiVo, Shah for PayPal. Kiva was one of the first crowdfunding platforms, launched ahead of popular sites like GoFundMe.

But Kiva is less direct than other crowdfunding sites. Although lenders “choose” borrowers through the website, flipping through profiles of dairy farmers and fruit sellers, money doesn’t go straight to them. Instead, the loans that pass through Kiva are bundled together and sent to one of the partnering microfinance institutions. After someone in the US selects, say, a female borrower in Mongolia, Kiva funds a microfinance organization there, which then lends to a woman who wants to set up a business.

Even though the money takes a circuitous route, the premise of lending to an individual proved immensely effective. Stories about Armenian bakers and Moroccan bricklayers helped lenders like the Smiths feel connected to something larger, something with purpose and meaning. And because they got their money back, while the feel-good rewards were high, the stakes were low. “It’s not charity,” the website still emphasizes today. “It’s a loan.” The organization covered its operating expenses with funding from the US government and private foundations and companies, as well as donations from individual lenders, who could add a tip on top of their loan to support Kiva’s costs.

This sense of individual connection and the focus on facilitating loans rather than donations was what initially drew Janice Smith. She first heard of microfinance through Bill Clinton’s book Giving, and then again through Oprah Winfrey—Kiva.org was included as one of “Oprah’s Favorite Things” in 2010. Smith was particularly enticed by the idea that she could re-lend the same $25 again and again: “I loved looking through borrower profiles and feeling like I was able to help specific people. Even when I realized that the money was going to a [microfinance lender]”—not directly to a borrower—“it still gave me a feeling of a one-on-one relationship with this person.”

Kiva’s easy-to-use website and focus on repayments helped further popularize the idea of small loans to the poor. For many Americans, if they’ve heard of microfinance at all, it’s because they or a friend or family member have lent through the platform. As of 2023, according to a Kiva spokesperson, 2.4 million people from more than 190 countries have done so, ultimately reaching more than 5 million borrowers in 95 countries. The spokesperson also pointed to a 2022 study of 18,000 microfinance customers, 88% of whom said their quality of life had improved since accessing a loan or another financial service. A quarter said the loans and other services had increased their ability to invest and grow their business.

But Kiva has also long faced criticism, especially when it comes to transparency. There was the obvious issue that the organization suggests a direct connection between Kiva.org users and individual borrowers featured on the site, a connection that does not actually exist. But there were also complaints that the interest rates borrowers pay were not disclosed. Although Kiva initially did not charge fees to the microfinance institutions it funneled money through, the loans to the individual borrowers do include interest. The institutions Kiva partners with use that to cover operational costs and, sometimes, make a profit.

Critics were concerned about this lack of disclosure given that interest rates on microfinance loans can reach far into the double digits—for more than a decade, some have even soared above 100%. (Microlenders and their funders have long argued that interest rates are needed to make funding sustainable.) A Kiva spokesperson stressed that the website now mentions “average cost to borrower,” which is not the interest rate a borrower will pay but a rough approximation. Over the years, Kiva has focused on partnering with “impact-first” microfinance lenders—those that charge low interest rates or focus on loans for specific purposes, such as solar lights or farming.

Critics also point to studies showing that microfinance has a limited impact on poverty, despite claims that the loans can be transformative for poor people. For those who remain concerned about microfinance overall, the clean, easy narrative Kiva promotes is a problem. By suggesting that someone like Janice Smith can “make a loan, change a life,” skeptics charge, the organization is effectively whitewashing a troubled industry accused of high-priced loans and harsh collection tactics that have reportedly been linked to suicides, land grabs, child labor, and debt bondage.

Over her years of lending through Kiva.org, Smith followed some of this criticism, but she says she was “sucked in” from her first loan. She was so won over by the mission and the method that she soon became, in her words, a “Kivaholic.” Lenders can choose to join “teams” to lend together, and in 2015 she launched one, called Together for Women. Eventually, the team would include nearly 2,500 Kiva lenders—including one who, she says, put his “whole retirement” into Kiva, totaling “millions of dollars.”

Smith soon developed a steady routine. She would open her computer first thing in the morning, scroll through borrowers, and post the profiles of those she considered particularly needy to her growing team, encouraging support from other lenders. In 2020, several years into her “Kivaholicism,” Kiva invited team captains like her to join regular calls with its staff, a way to disseminate information to some of the most active members. At first, these calls were cordial. But in 2021, as lenders like Smith noticed changes that concerned them, the tone of some conversations changed. Lenders wanted to know why the information on Kiva’s website seemed less accessible. And then, when they didn’t get a clear answer, they pushed on everything else, too: the fees to microfinance partners, the CEO salaries.

In 2021 Smith’s husband, Bill, became captain of a new team calling itself Lenders on Strike, which soon had nearly 200 concerned members. The name sent a clear message: “We’re gonna stop lending until you guys get your act together and address the stuff.” Even though they represented a small fraction of those who had lent through Kiva, the striking members had been involved for years, collectively lending millions of dollars—enough, they thought, to get Kiva’s attention.

On the captains’ calls and in letters, the strikers were clear about a top concern: the fees now charged to microfinance institutions Kiva works with. Wouldn’t the fees make the loans more expensive to the borrowers? Individual Kiva.org lenders still expected only their original money back, with no return on top. If the money wasn’t going to them, where exactly would it be going?

On one call, the Smiths recall, staffers explained that the fees were a way for Kiva to expand. Revenue from the fees—potentially millions of dollars—would go into Kiva’s overall operating budget, covering everything from new programs to site visits to staff salaries.

But on a different call, Kiva’s Kathy Guis acknowledged that the fees could be bad for poor borrowers. The higher cost might be passed down to them; borrowers might see their own interest rates, sometimes already steep, rise even more. When I spoke to Guis in June 2023, she told me those at Kiva “haven’t observed” a rise in borrowers’ rates as a direct result of the fees. Because the organization essentially acts as a middleman, it would be hard to trace this. “Kiva is one among a number of funding sources,” Guis explained—often, in fact, a very small slice of a microlender’s overall funding. “And cost of funds is one among a number of factors that influence borrower pricing.” A Kiva spokesperson said the average fee is 2.53%, with fees of 8% charged on only a handful of “longer-term, high-risk loans.”

The strikers weren’t satisfied: it felt deeply unfair to have microfinance lenders, and maybe ultimately borrowers, pay for Kiva’s operations. More broadly, they took issue with new programs the revenue was being spent on. Kiva Capital, the new return-seeking investment arm that Google has participated in, was particularly concerning. Several strikers told me that it seemed strange, if not unethical, for an investor like Google to be able to make money off microfinance loans when everyday Kiva lenders had expected no return for more than a decade—a premise that Kiva had touted as key to its model.

A Kiva spokesperson told me investors “are receiving a range of returns well below a commercial investor’s expectations for emerging-market debt investments,” but did not give details. Guis said that thanks in part to Kiva Capital, Kiva “reached 33% more borrowers and deployed 33% more capital in 2021.” Still, the Smiths and other striking lenders saw the program less as an expansion and more as a departure from the Kiva they had been supporting for years.

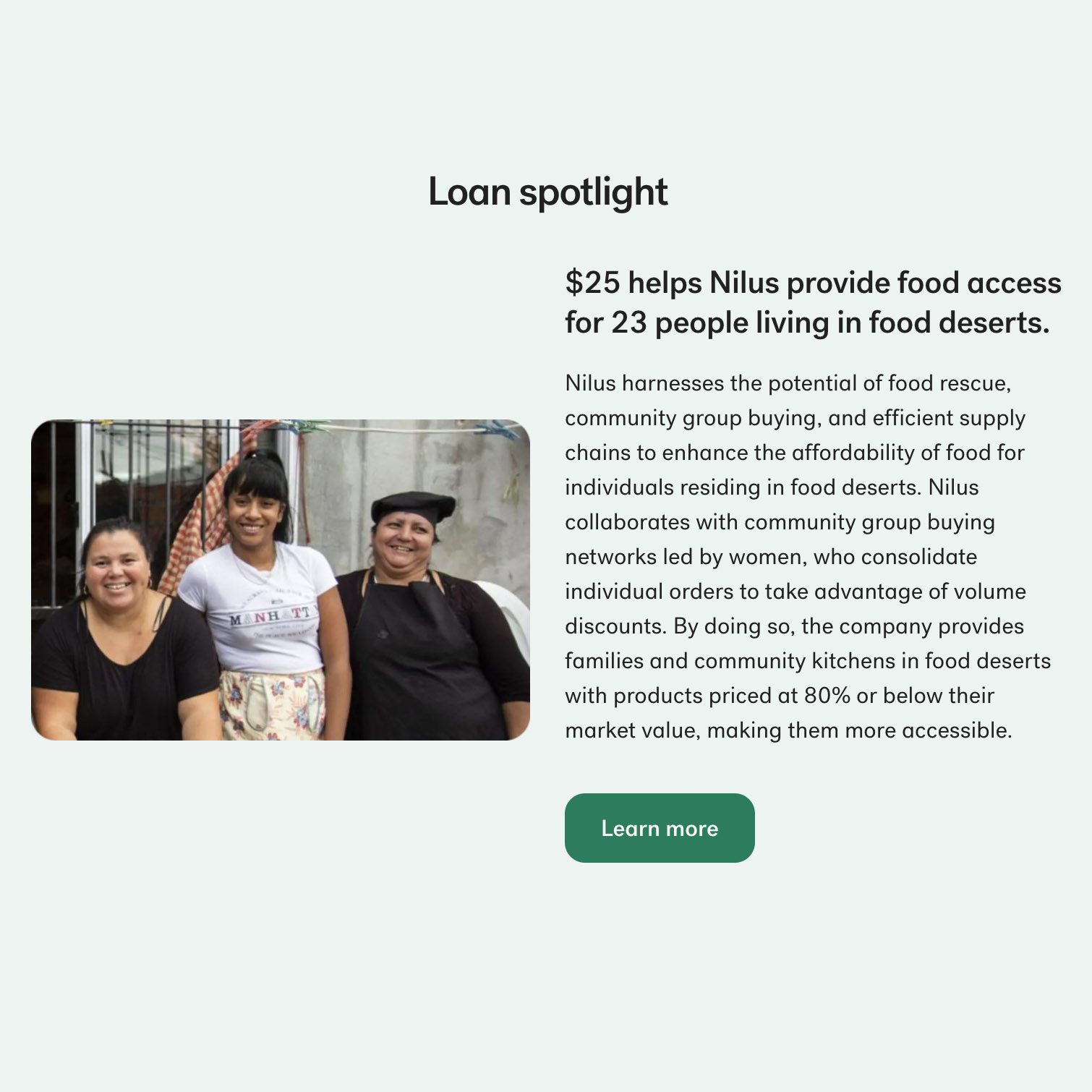

Another key concern, strikers told me, is Kiva US, a separate program that offers zero-interest loans to small businesses domestically. Janice Smith had no fundamental problem with the affordable rates, but she found it odd that an American would be offered 0% interest while borrowers in poorer parts of the world were being charged up to 70%, according to the estimates posted on Kiva’s website. “I don’t see why poor people in Guatemala should basically be subsidizing relatively rich people here in Minnesota,” she told me. Guis disagreed, telling me, “I take issue with the idea that systematically marginalized communities in the US are less deserving.” She said that in 2022, nearly 80% of the businesses that received US loans were “owned by Black, Indigenous, and people of color.”

After months of discussions, the strikers and Kiva staff found themselves at loggerheads. “They feel committed to fees as a revenue source, and we feel committed to the fact that it’s inappropriate,” Bill Smith told me. Guis stressed that Kiva had gone through many changes throughout its 18 years—the fees, Kiva Capital, and Kiva US being just a few. “You have to evolve,” she said.

The fees and the returns-oriented Kiva Capital felt strange enough. But what really irked the Lenders on Strike was how much Kiva executives were being paid for overseeing those changes. Lenders wanted to know why, according to Kiva’s tax return, roughly $3.5 million had been spent on executive compensation in 2020—nearly double the amount a few years previously. Bill Smith and others I spoke to saw a strong correlation: at the same time Kiva was finding new ways to make money, Kiva’s leadership was bringing home more cash.

The concerned lenders weren’t the only ones to see a connection. Several employees I spoke to pointed to questionable decisions made under the four-year tenure of Neville Crawley, who was named CEO in 2017 and left in 2021. Crawley made approximately $800,000 in 2020, his last full year at the organization, and took home just under $750,000 in 2021, even though he left the position in the middle of the year. When I asked Kathy Guis why Crawley made so much for about six months of work, she said she couldn’t answer but would pass that question along to the board.

Afterward, I received a written response that did not specifically address CEO compensation, instead noting in part, “As part of Kiva’s commitment to compensation best practices, we conduct regular org-wide compensation fairness research, administer salary surveys, and consult market data from reputable providers.” Chris Tsakalakis, who took over from Crawley, earned more than $350,000 in 2021, for about half a year of work. (His full salary and that of Vishal Ghotge, his successor and Kiva’s newest CEO, are not yet publicly available in Kiva’s tax filings, nor would Kiva release these numbers to us when we requested them.) In 2021, nearly $20 million of Kiva’s $42 million in revenue went to salaries, benefits, and other compensation.

According to the striking lenders, Kiva’s board explained that as a San Francisco–based organization, it needed to attract top talent in a field, and a city, dominated by tech, finance, and nonprofits. The last three CEOs have had a background in business and/or tech; Kiva’s board is stacked with those working at the intersection of tech, business, and finance and headed by Julie Hanna, an early investor in Lyft and other Silicon Valley companies. This was especially necessary, the board argued, as Kiva began to launch new programs like Kiva Capital, as well as Protocol, a blockchain-enabled credit bureau launched in Sierra Leone in 2018 and then closed in 2022.

The Smiths and other striking lenders didn’t buy the rationale. The leaders of other microlenders, including Kiva partners, make far less. For example, the president and CEO of BRAC USA, a Kiva partner and one of the largest nonprofits in the world, made just over $300,000 in 2020. That is not only less than what Kiva’s CEO earns but also below what Kiva’s general counsel, chief investment officer, chief strategy officer, executive vice president of engineering, and chief officer for strategic partnerships were paid in 2021, according to public filings. Julie Hanna, the executive chair of Kiva’s board, made $140,000 for working 10 hours a week in 2021. Premal Shah, one of the founders, took home roughly $320,000 as “senior consultant” in 2020.

Even among other nonprofits headquartered in expensive American cities, Kiva’s CEO salary is high. For example, the head of the Sierra Club, based in Oakland, made $500,000 in 2021. Meanwhile, the executive director of Doctors Without Borders USA, based in New York City, had a salary of $237,000 in 2020, the same year Kiva’s top executive made roughly $800,000, even though Doctors Without Borders USA brought in $558 million in revenue that year, compared with Kiva’s $38 million.

The striking lenders kept pushing—on calls, in letters, on message boards—and the board kept pushing back. They had given their rationale, about the salaries and all the other changes, and as one Kiva lender told me, it was clear “there would be no more conversation.” Several strikers I spoke to said it was the last straw. This was, they realized, no longer their Kiva. Someone taking home nearly a million dollars a year was steering the ship, not them and their $25 loans.

The Kiva lenders’ strike is concentrated in Europe and North America. But I wanted to understand how the changes, particularly the new fees charged to microfinance lenders, were viewed by the microfinance organizations Kiva works with.

So I spoke to Nurhayrah Sadava, CEO of VisionFund Mongolia, who told me she preferred the fees to the old Kiva model. Before the lending fees were introduced, money was lent from Kiva to microfinance organizations in US dollars. The partner organizations then paid the loan back in dollars too. Given high levels of inflation, instability, and currency fluctuations in poorer countries, that meant partners might effectively pay back more than they had taken out.

But with the fees, Sadava told me, Kiva now took on the currency risk, with partners paying a little more up front. Sadava saw this as a great deal, even if it looked “shady” to the striking lenders. What’s more, the fees—around 7% to 8% in the case of VisionFund Mongolia—were cheaper than the organization’s other options: their only alternatives were borrowing from microfinance investment funds primarily based in Europe, which charged roughly 20%, or another VisionFund Mongolia lender, which charges the organization 14.5%.

Sadava told me that big international donors aren’t interested in funding their microfinance work. Given the context, VisionFund Mongolia was happy with the new arrangement. Sadava says the relatively low cost of capital allowed them to launch “resourcefulness loans” for poor businesswomen, who she says pay 3.4% a month.

VisionFund Mongolia’s experience isn’t necessarily representative—it became a Kiva partner after the fees were instituted, and it works in a country where it is particularly difficult to find funding. Still, I was surprised by how resoundingly positive Sadava was about the new model, given the complaints I’d heard from dozens of aggrieved Kiva staffers and lenders. That got me thinking about something Hugh Sinclair, a longtime microfinance staffer and critic, told me a few years back: “The client of Kiva is the American who gets to feel good, not the poor person.”

In a way, by designing the Kiva.org website primarily for the Western funder, not the faraway borrower, Kiva created the conditions for the lenders’ strike.

For years, Kiva has encouraged the feeling of a personal connection between lenders and borrowers, a sense that through the organization an American can alter the trajectory of a life thousands of miles away. It’s not surprising, then, that the changes at Kiva felt like an affront. (One striker cried when he described how much faith he had put into Kiva, only for Kiva to make changes he saw as morally compromising.) They see Kiva as their baby. So they revolted.

Kiva now seems somewhat in limbo. It’s still advertising its old-school, anyone-can-be-a-lender model on Kiva.org, while also making significant operational changes (a private investing arm, the promise of blockchain-enabled technology) that are explicitly inaccessible to everyday Americans—and employing high-flying CEOs with CVs and pedigrees that might feel distant, if not outright off-putting, to them. If Kiva’s core premise has been its accessibility to people like the Smiths, it is now actively undermining that premise, taking a chance that expansion through more complicated means will be better for microfinance than honing the simplistic image it’s been built on.

Several of the striking lenders I spoke to were primarily concerned that the Kiva model had been altered into something they no longer recognized. But Janice Smith, and several others, had broader concerns: not just about Kiva, but about the direction the whole microfinance sector was taking. In confronting her own frustrations with Kiva, Smith reflected on criticisms she had previously dismissed. “I think it’s an industry where, depending on who’s running the microfinance institution and the interaction with the borrowers, it can turn into what people call a ‘payday loan’ sort of situation,” she told me. “You don’t want people paying 75% interest and having debt collectors coming after them for the rest of their lives.” Previously, she trusted that she could filter out the most predatory situations through the Kiva website, relying on information like the estimated interest rate to guide her decisions. As information has become harder to come by, she’s had a harder time feeling confident in the terms the borrowers face.

In January 2022, Smith closed the 2,500-strong Together for Women group and stopped lending through Kiva. Dozens of other lenders, her husband included, have done the same.

While these defectors represent a tiny fraction of the more than 2 million people who have used the website, they were some of its most dedicated lenders: of the dozen I spoke to, nearly all had been involved for about a decade, some ultimately lending tens of thousands of dollars. For them, the dream of “make a loan, change a life” now feels heartbreakingly unattainable.

Smith calls the day she closed her team “one of the saddest days of my life.” Still, the decision felt essential: “I don’t want to be one of those people that’s more like an impact investor who is trying to make money off the backs of the poorer.”

“I understand that I’m in the minority here,” she continued. “This is the way [microfinance is] moving. So clearly people feel it’s something that’s acceptable to them, or a good way to invest their money. I just don’t feel like it’s acceptable to me.”

Mara Kardas-Nelson is the author of a forthcoming book on the history of microfinance, We Are Not Able to Live in the Sky (Holt, 2024).