How To Get Verified on TikTok: Tips for a Successful Application

Learn the TikTok tricks that will help you stand out on the For You page with unique and engaging content.


Remember the early days of the internet?

You could spend all day chatting with your friends on AOL messenger while you played solitaire on Yahoo games. And then your mom picked up the phone to make a call, and you were kicked off the web. Good times.

In those days, if you were doing some shopping, there was a good chance you were doing it on a site with an exact match domain (EMD). For example, if you needed a dog collar, you’d probably end up on a site with an address like www.buydogcollars.com.

In those primitive days of search engine optimization, it was common for companies to put their exact target keyword phrase right in their domain URL.

Unfortunately (or maybe fortunately, depending on how you feel about EMDs), scammers and bad actors took advantage of this, snatched up many of these domains, and linked them to low-quality sites.

So, what’s true today? Does your domain name have an impact on search results?

Let’s take a closer look at the debate.


Having an exact match domain used to be a big deal.

In 2010, CarInsurance.com sold for $49.7 million: still the most expensive domain name purchase of all time. So clearly, someone valued domains with that keyword.

It was (and sometimes still is) common for people in the SEO industry to advocate for EMDs. The usual claims are that they instantly generate credibility and provide a competitive edge.

But remember those bad actors we talked about in the last section? Eventually, Google got wise to their keyword-stuffing URLs and changed its algorithm to discount them. But that’s not to say your website’s domain name does not affect SEO.

There is a lot of mixed information about domain names and their impact on rankings.

There’s no question that domain names played a role in rankings at one point.

In a 2011 Webmaster Hangout, Matt Cutts, a software engineer in Google’s Search Quality group, acknowledged the role EMDs played in the tech giant’s search algorithm.

However, he also stated:

“And so, we have been thinking about adjusting that mix a little bit and sort of turning the knob down within the algorithm, so that given two different domains it wouldn’t necessarily help you as much to have a domain with a bunch of keywords in it.”

And just one year later, in 2012, Cutts tweeted that low-quality exact match domains would get reduced visibility in search results.

Finally, in 2020, Google Webmaster Trends Analyst John Mueller revealed keywords in domain names no longer play a role in determining search engine results rankings.

Asked during an Ask Google Webmasters video whether keywords in domain names impact rankings, he said, “In short, no. You don’t get a special bonus like that from having a keyword in your top-level domain.”

But this doesn’t mean that domain names are unimportant. They’re just not direct ranking factors.


Now that we’ve established that domain names are not a direct ranking factor, SEO professionals can just forget about them, right?

Absolutely not.

Your choice of a domain name can be an important aspect of your UX and public image. Your domain name should usually be the most recognizable aspect of your business. Sometimes that’s not your business name but a particular brand or trademark.

You may want to consider subdomains or even separate domains for different properties. If you sell products that resellers carry, this can help your customers find you more easily.

Using keywords in your domain doesn’t help in terms of search ranking; if not done correctly, it could even hurt your SEO.

But, if your branding is heavily focused on a particular service or product, including a keyword in the domain could help users understand what you’re about at a glance. A carefully placed keyword could also help attract audiences likely to convert.

Don’t be afraid to use a keyword if it’s highly relevant or part of your branding.

So, here’s the TL;DR: Your domain name doesn’t directly impact your Google ranking but provides opportunities for savvy web marketers to reflect their brand’s values and create more positive user experiences.

For more help choosing a domain name, check out Roger Montti’s advice.

Featured Image: Paulo Bobita/Search Engine Journal

Google Trends is a surprisingly useful tool for keyword research, especially when using advanced search options that are virtually hidden in plain sight.

Explore the different Google Trends menus and options and discover seemingly endless ways to gain more keyword search volume insights.

Learn new ways to unlock the power of one of Google’s most important SEO tools.

While Google Trends is accurate, it doesn’t show the amount of traffic in actual numbers.

It shows the numbers of queries made in relative percentages on a scale of zero to 100.

Unlike Google Trends, paid SEO tools provide traffic volume numbers for keywords.

But those numbers are only estimates that are extrapolated from a mix of internet traffic data providers, Google Keyword Planner, scraped search results, and other sources.

The clickstream data usually comes from anonymized traffic data acquired from users of certain pop-up blockers, browser plugins, and some free anti-virus software.

The SEO tools then apply a calculation that corresponds to their best guess of how that data correlates with Google keyword search and traffic volume.

So, even though paid SEO tools provide estimates of keyword traffic, the data presented by Google Trends is based on actual search queries and not guesses.

That’s not to say that Google Trends is better than paid keyword tools. When used together with paid keyword tools, one can obtain a near-accurate idea of true keyword search volume.

Other functions in Google Trends can help segment the keyword data more accurately. They show which geographic locations are best for promotional efforts and surface new and trending keywords.

Google Trends shows a relative visualization of traffic on a scale of zero to 100.

You can’t really know if the trend is reporting hundreds of keyword searches or thousands because the graph is on a relative scale of zero to one hundred.
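To see why a relative scale hides absolute volume, here is a minimal sketch of rescaling raw counts to a zero-to-100 range. It is a simplified model for illustration, not Google’s actual computation; the function name `to_relative_scale` and the sample counts are hypothetical.

```python
def to_relative_scale(raw_counts):
    """Rescale raw counts so the largest value in the series becomes 100."""
    peak = max(raw_counts)
    if peak == 0:
        # No searches at all: every point stays at zero
        return [0 for _ in raw_counts]
    return [round(100 * count / peak) for count in raw_counts]


# Two hypothetical keywords whose absolute volumes differ by a factor of 100
small_keyword = [120, 240, 180]
large_keyword = [12000, 24000, 18000]

print(to_relative_scale(small_keyword))
print(to_relative_scale(large_keyword))
```

Both series produce the same relative curve, which is why a trend line viewed on its own cannot tell you whether it represents hundreds or thousands of searches.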

However, the relative numbers become more meaningful when they are compared with a keyword phrase whose traffic levels are already known.

One way to do this is to compare keyword search volume with a keyword whose accurate traffic numbers are already known, for example, from a PPC campaign.

If the keyword volume is especially large and you don’t have a keyword with known traffic to compare it with, there’s another way to find a comparison keyword.

A comparison keyword doesn’t have to be related. It can be in a completely different vertical and could even be the name of a trending celebrity.

The important thing is the general keyword volume data.

Google publishes a Google Trends Daily Trends webpage that shows trending search queries.

What’s useful about this page is that Google provides keyword volumes in numbers, like 100,000+ searches per day, etc.

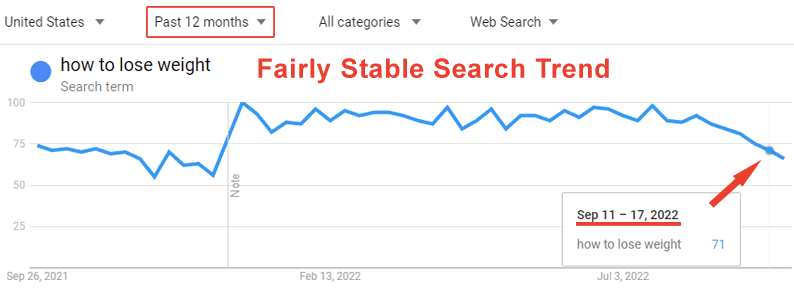

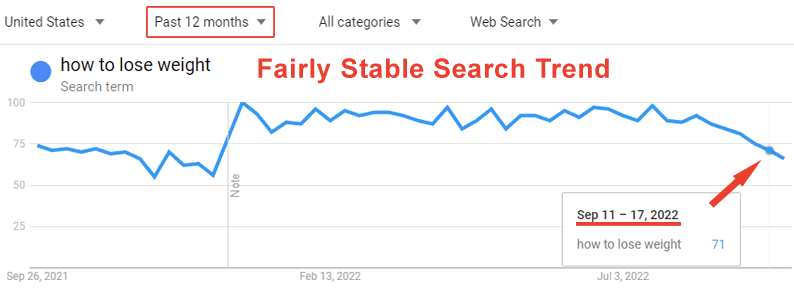

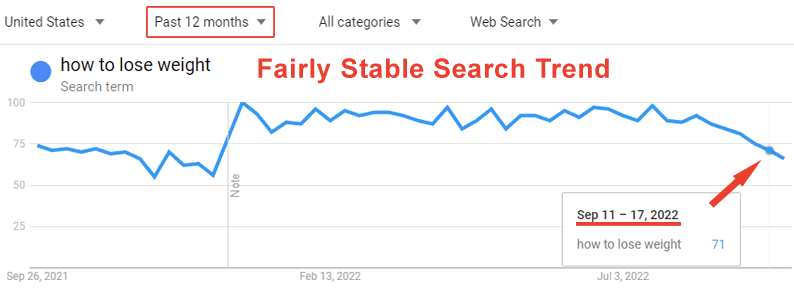

I’m going to use the search phrase [how to lose weight] as an example of how to use Google Trends to get a close idea of actual search volume.

The way I do it is by using known search volumes and comparing them to the target keyword phrase.

Google provides search volumes on its trending searches page, which can be adjusted for what’s trending in any country.

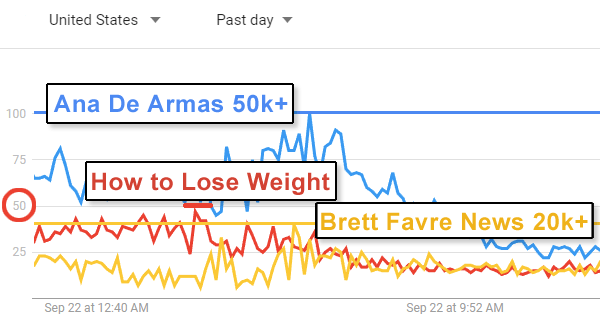

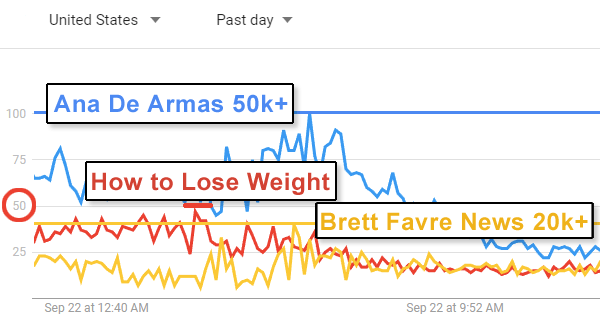

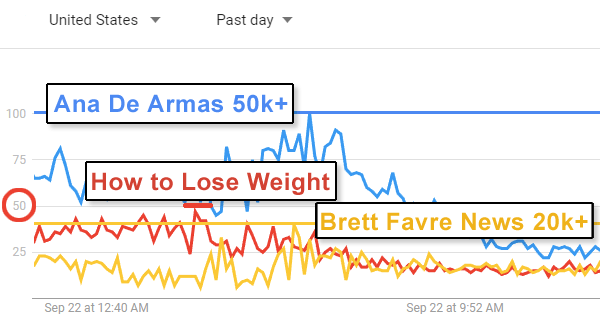

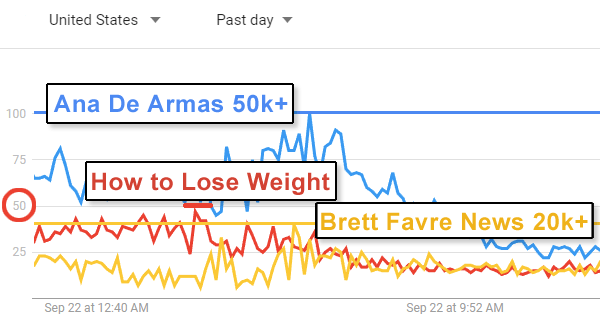

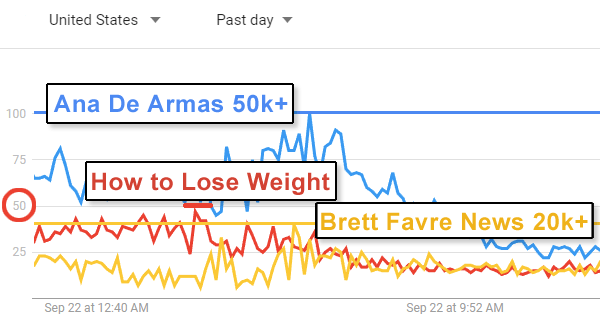

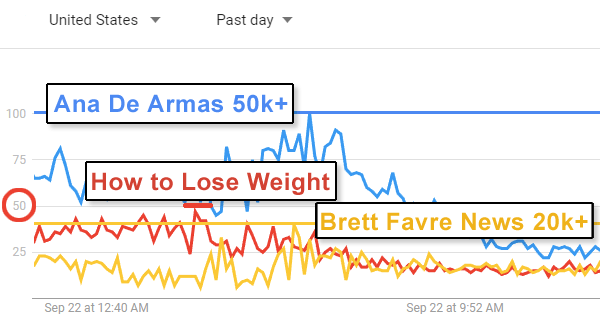

On this particular day (September 22, 2022), the actress Ana De Armas was trending with 50,000+ searches, and the American ex-football player Brett Favre (keyword phrase [Bret Favre News]) was trending with 20,000+ searches.

Step 1. Find Search Trends For Target Keyword Phrases

The target keyword phrase we’re researching is [how to lose weight].

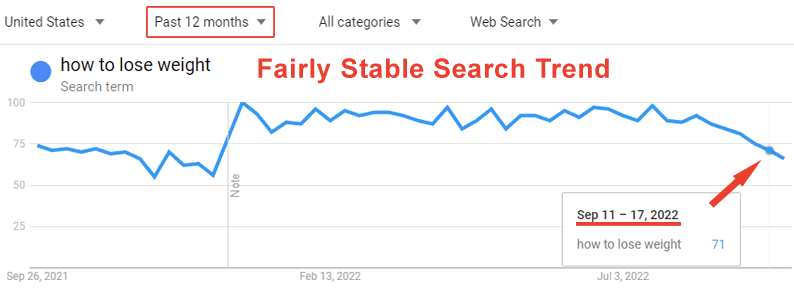

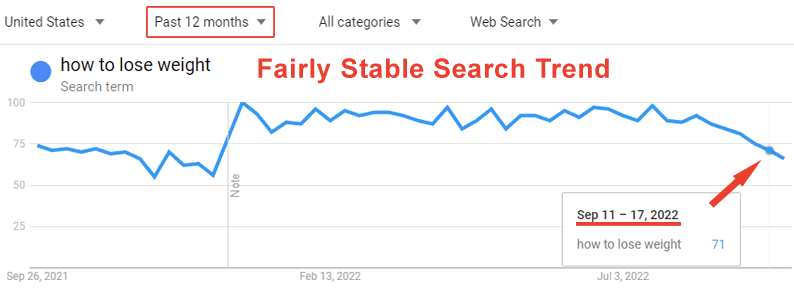

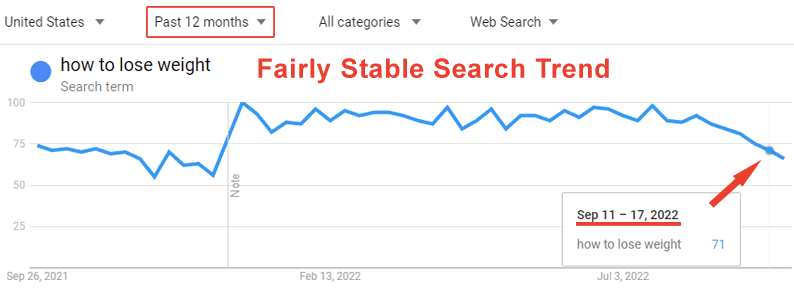

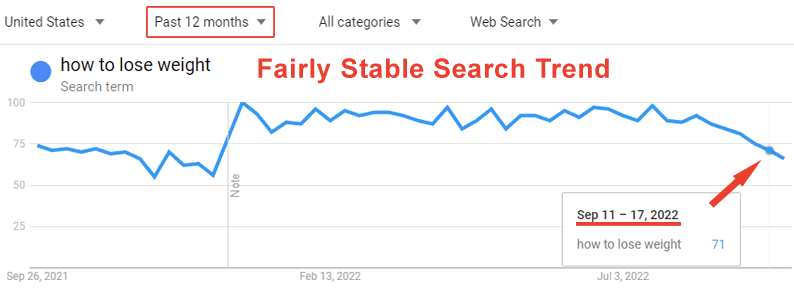

Below is a screenshot of the one-year trend for the target keyword phrase:

As you can see, it’s a fairly stable trend line from September 2021 to September 2022.

Then I added the two keyword phrases for which we have a close search volume count to compare all three, but for a 24-hour time period.

I use a 24-hour time period because the search volume for our comparison keywords is trending for this one day.

Our target keyword phrase, with a red trend line, is right in the middle, in between the keyword phrases [Ana De Armas] (blue) and [Bret Favre News] (yellow).

What the above comparison tells us is that the phrase [how to lose weight] has a keyword volume of more than 20,000 searches but fewer than 50,000 searches.

The relative search volume of [how to lose weight] is 50% of the keyword phrase [Ana De Armas].

Because we know that [Ana De Armas] has a search volume of 50,000+ searches on this particular day, and [Bret Favre News] has a search volume of 20,000+ queries on the same day, we can say with reasonable accuracy that the keyword phrase [how to lose weight] has a daily search volume of around 30,000, give or take a few thousand.

The actual numbers could be higher because Google Trends shows the highs and lows at particular points of the day. The total for the day is very likely higher.

The above hack isn’t 100% accurate. But it’s enough to give a strong ballpark idea and can be used to compare with and validate extrapolated data from a paid keyword research tool.
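The comparison above can be sketched as a simple proportion: scale the known volume of the comparison keyword by the ratio of the two relative values. This is only an illustration. The function name `estimate_volume` is hypothetical, the comparison keyword is assumed to sit at 100 on the chart, and 50,000 is a floor because the trending page reports “50,000+”.

```python
def estimate_volume(target_index, anchor_index, anchor_volume):
    """Scale a known anchor volume by the ratio of two relative index values."""
    if anchor_index <= 0:
        raise ValueError("anchor_index must be positive")
    return anchor_volume * target_index / anchor_index


# Assumption: [Ana De Armas] peaks at 100 on the comparison chart, and the
# target phrase sits at 50% of it, as described in the text.
ana_index = 100
target_index = 50

# 50,000+ is a lower bound, so the result is a lower bound too
lower_bound = estimate_volume(target_index, ana_index, 50_000)
print(lower_bound)  # 25000.0
```

Because the published figure is a minimum, the proportion yields a floor of about 25,000, which is in line with the rough estimate of around 30,000.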


There are two general ways to look at the keyword data: across longer periods of time and across shorter ones.

Long Period Trends

You can set Google Trends to show you the traffic trends stretching back to 2004. This is valuable for showing you the audience trends.

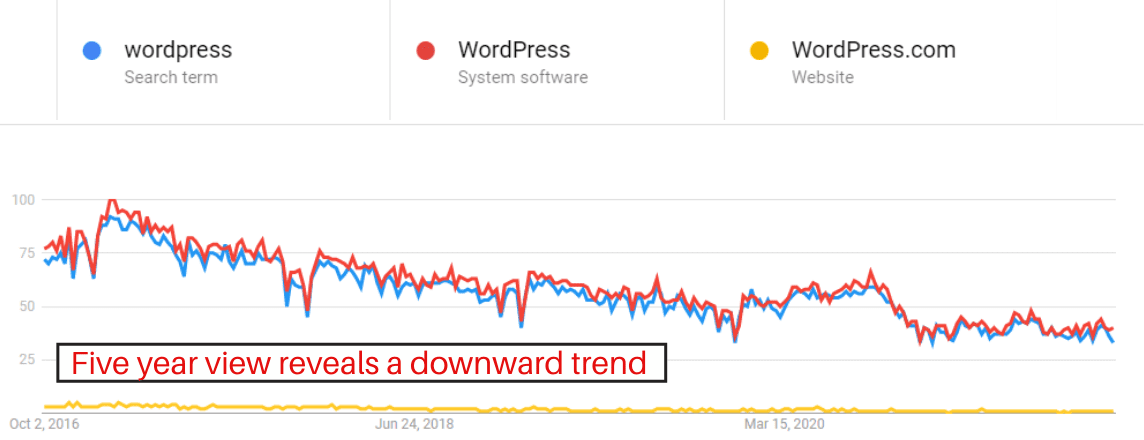

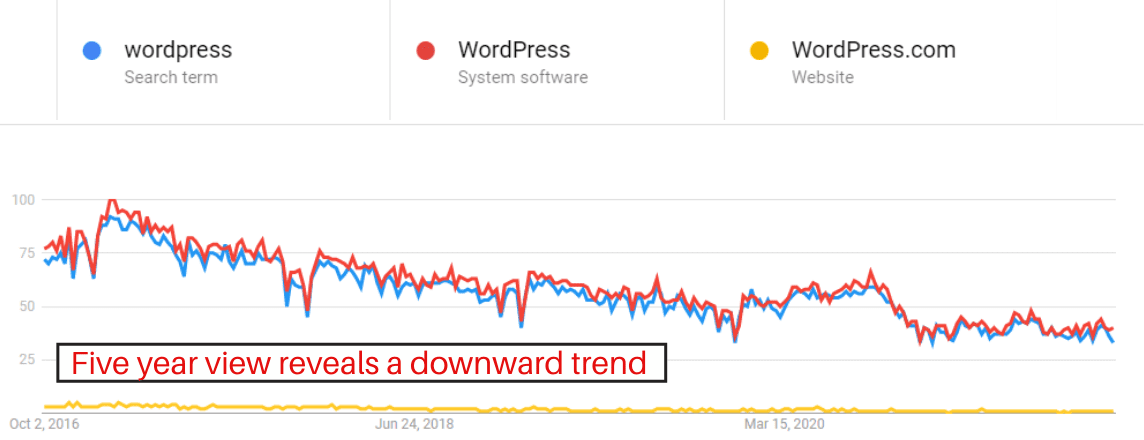

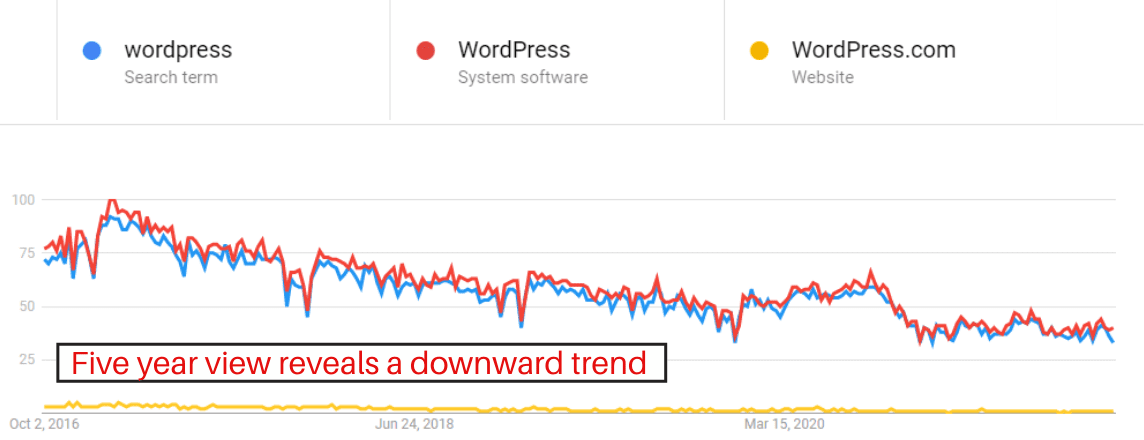

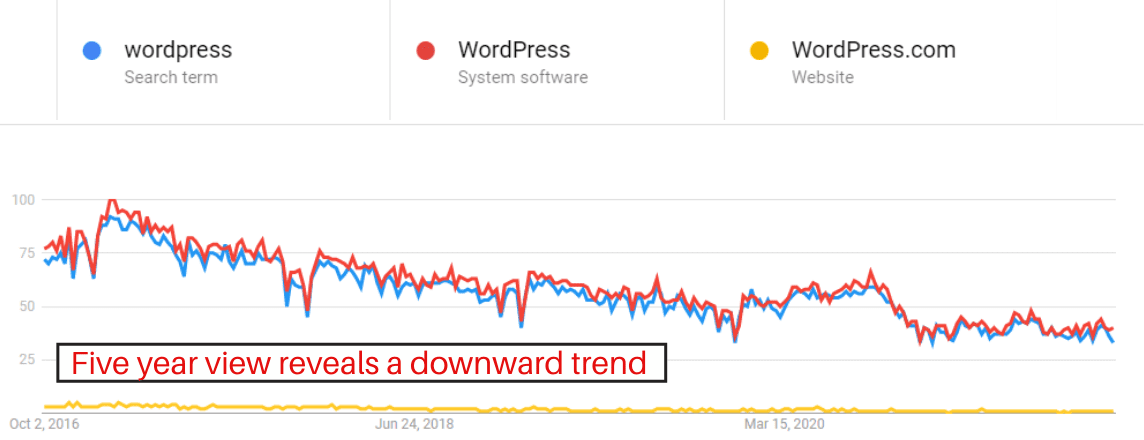

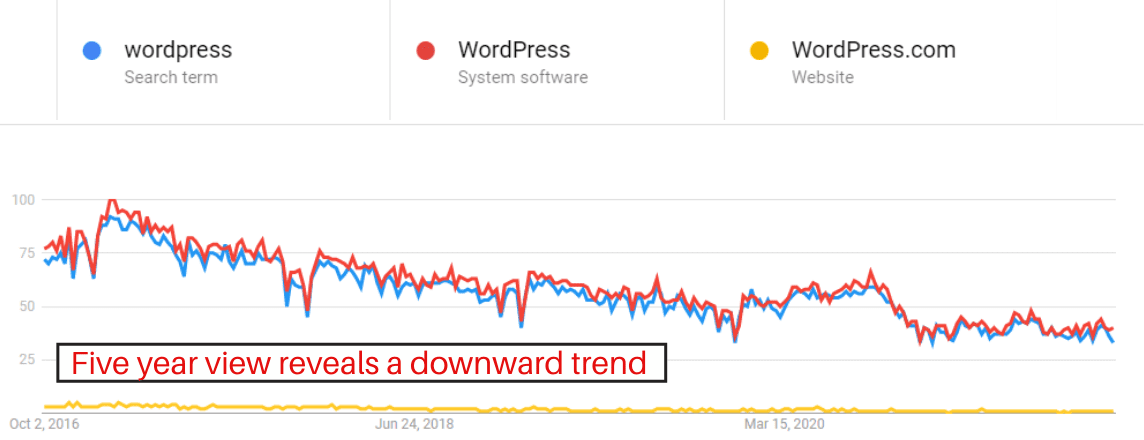

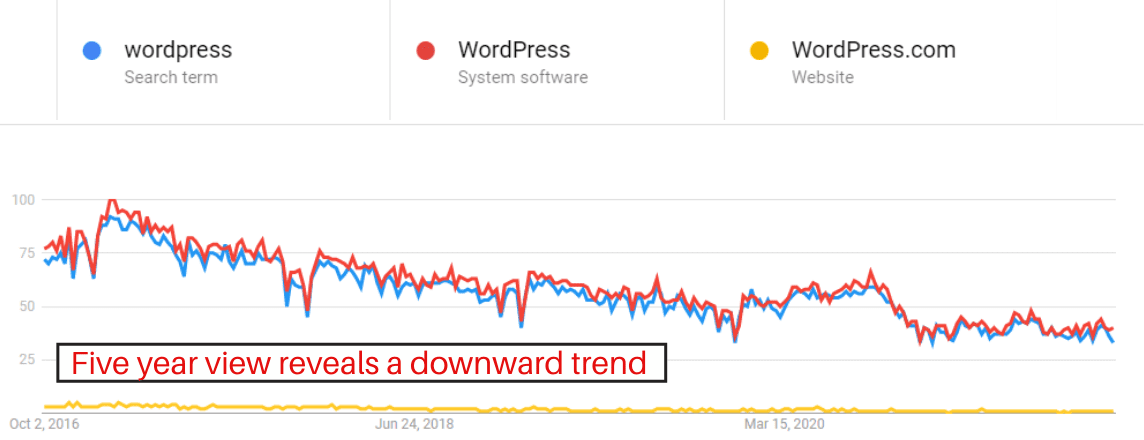

For example, review this five-year trend for [WordPress] the search term, WordPress the software, and WordPress the website:

There’s a clear downward trend for WordPress in all three variations.

The downward trend extends to related phrases such as:

There are many reasons why search trends go down. It can be that people lost interest, that the interest went somewhere else or that the trend is obsolete.

The digital camera product category is a good example of a downward spiral caused by a product being replaced by something else.

Knowing which way the wind is blowing could help a content marketer or publisher understand when it’s time to bail on a topic or product category and to pivot to upward-trending ones.


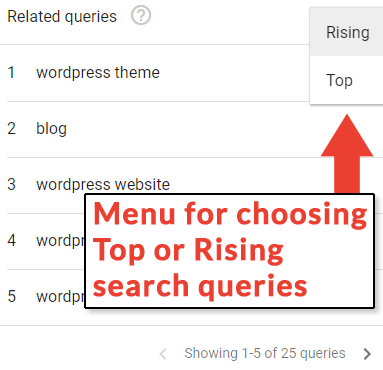

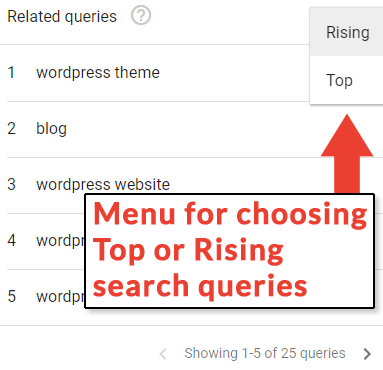

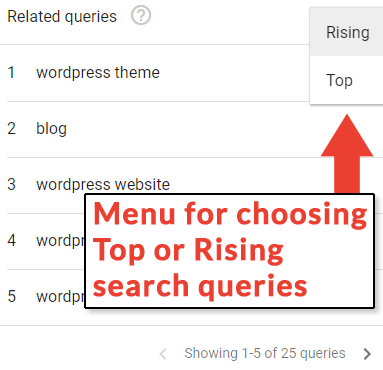

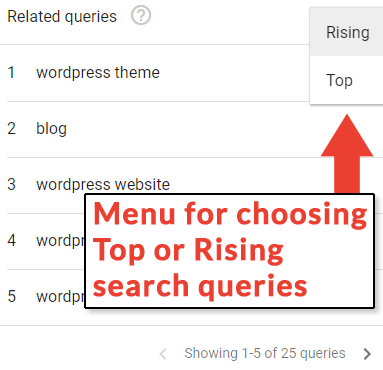

Google Trends has two great features, one called Related Topics and the other Related Queries.

Topics

Topics are search queries that share a concept.

Identifying related topics that are trending upwards is useful for learning how an audience or consumer demand is shifting.

This information can, in turn, provide ideas for content generation or new product selections.

According to Google:

“Related Topics

Users searching for your term also searched for these topics.

You Can View by the Following Metrics

Top – The most popular topics. Scoring is on a relative scale where a value of 100 is the most commonly searched topic and a value of 50 is a topic searched half as often as the most popular term, and so on.

Rising – Related topics with the biggest increase in search frequency since the last time period.

Results marked “Breakout” had a tremendous increase, probably because these topics are new and had few (if any) prior searches.”

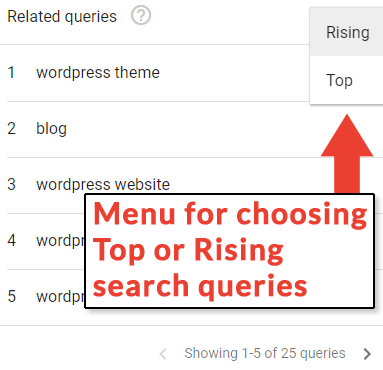

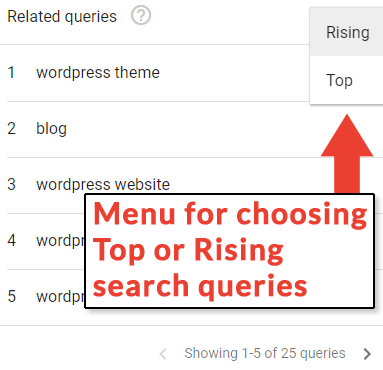

Related Queries

The description of Related Queries is similar to that of the Related Topics.

Top queries are generally the most popular searches. Rising Queries are queries that are becoming popular.

The data from Rising Queries are great for staying ahead of the competition.

Viewing keyword trends in the short view, such as the 90-day or even 30-day view, can reveal valuable insights for capitalizing on rapidly changing search trends.

There is a ton of traffic in Google Discover as well as in Google News.

Google Discover is tied to trending topics related to searches.

Google News is of the moment in terms of current events.

Sites that target either of those traffic channels benefit from knowing what the short-term trends are.

A benefit of viewing short-term trends (30 days and 90 days) is that the days of the week when those searches are popular stand out.

Knowing which days of the week interest spikes for a given topic can help in planning when to publish certain kinds of topics, so the content is right there when the audience is searching for it.
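If you export a short-term trend as daily values, the day-of-week pattern can be checked with a few lines of code. The sketch below assumes the data is already available as a mapping of dates to interest values; the helper names and the sample series are hypothetical.

```python
from collections import defaultdict
from datetime import date, timedelta

WEEKDAY_NAMES = ["Monday", "Tuesday", "Wednesday", "Thursday",
                 "Friday", "Saturday", "Sunday"]


def average_by_weekday(daily_interest):
    """Average the interest values per weekday from a {date: value} mapping."""
    buckets = defaultdict(list)
    for day, value in daily_interest.items():
        buckets[WEEKDAY_NAMES[day.weekday()]].append(value)
    return {name: sum(values) / len(values) for name, values in buckets.items()}


def peak_weekday(daily_interest):
    """Return the weekday name with the highest average interest."""
    averages = average_by_weekday(daily_interest)
    return max(averages, key=averages.get)


# Hypothetical 14-day series in which interest is higher on Sundays
start = date(2022, 9, 5)  # a Monday
sample = {}
for offset in range(14):
    day = start + timedelta(days=offset)
    sample[day] = 90 if day.weekday() == 6 else 40

print(peak_weekday(sample))
```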

Google Trends has the functionality for narrowing down keyword search query inventory according to category topics.

This provides more accurate keyword data.

The Categories tab is important because it refines your keyword research to the correct context.

If your keyword context is [automobiles], then it makes sense to appropriately refine Google Trends to show just the data for the context of auto.

By narrowing the Google Trends data by category, you will be able to find more accurate information related to the topics you are researching for content within the correct context.

Google Trends keyword information by geographic location can be used to determine which areas are best to target with outreach for site promotion, or to tailor content to specific regions.

For example, if certain kinds of products are popular in Washington D.C. and Texas, it makes sense to aim promotional activity and localized content to those areas.

In fact, it might be useful to focus link-building promotional activities in those areas first since the interest is higher in those parts of the country.

Keyword popularity information by region is valuable for link building, content creation, content promotion, and pay-per-click.

Localizing content (and the promotion of that content) can make it more relevant to the people who are interested in that content (or product).

Google ranks pages according to whom they are most relevant to, so incorporating geographic nuance into your content can help it rank for the most people.

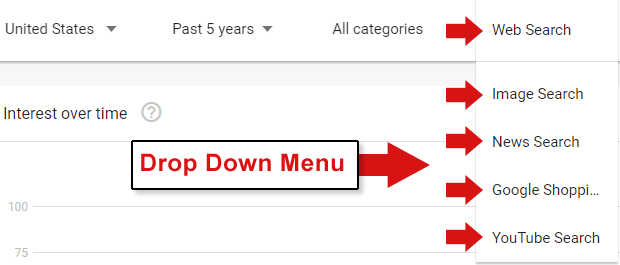

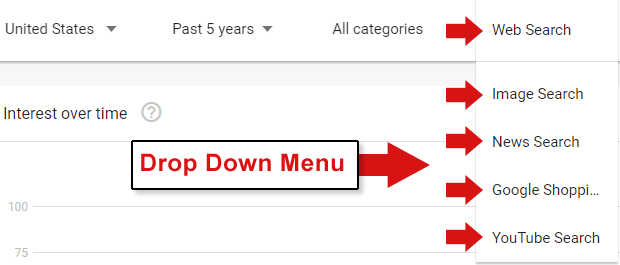

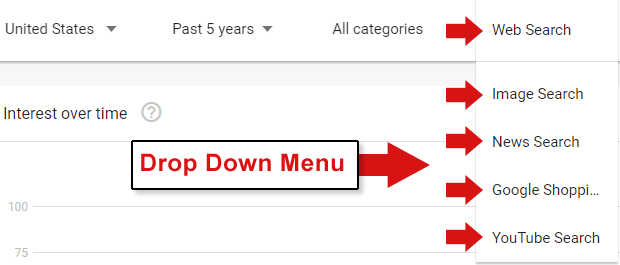

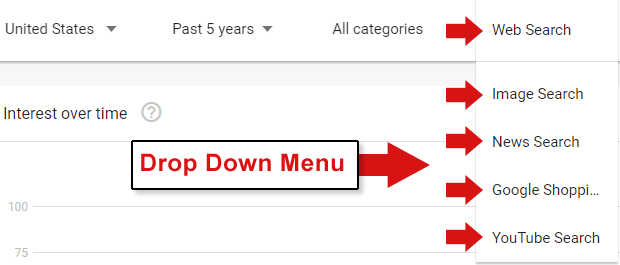

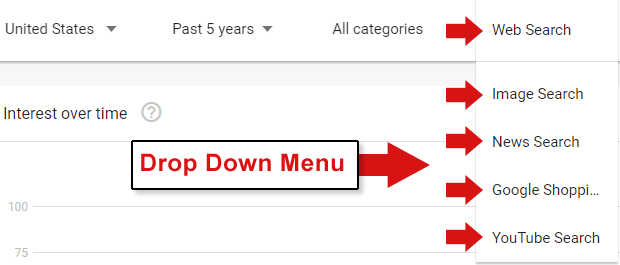

Google Trends gives you the ability to further refine the keyword data by segmenting it by the type of search the data comes from, the Search Type.

Refining your Google Trends research by the type of search allows you to remove the “noise” that might be making your keyword research fuzzy and help it become more accurate and meaningful.

Google Trends data can be refined by:

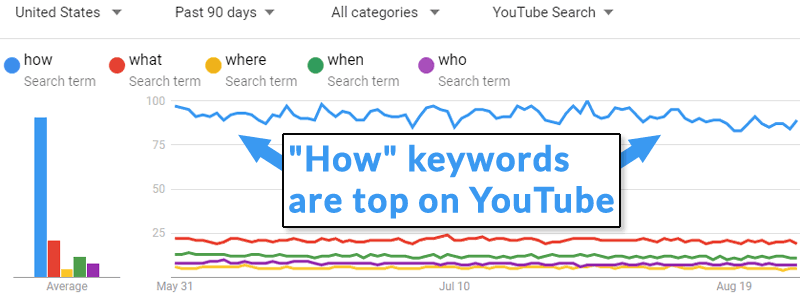

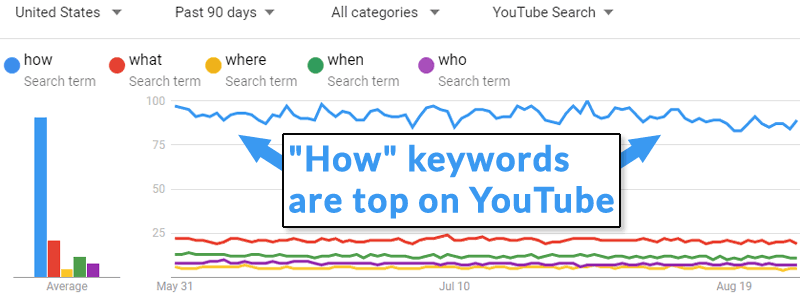

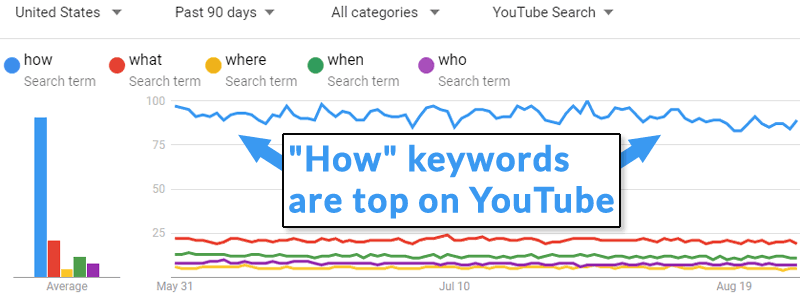

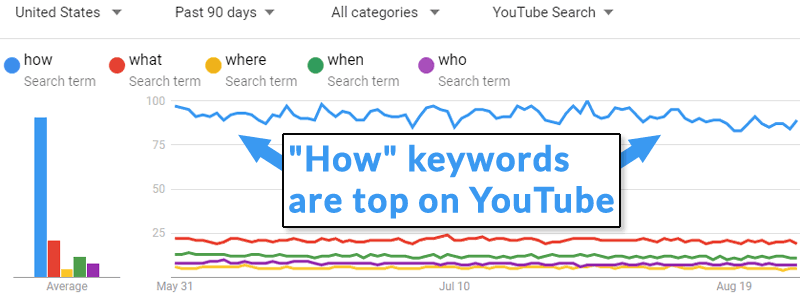

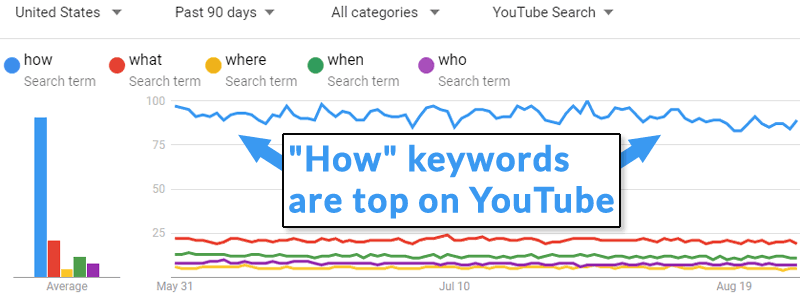

YouTube search is a fantastic way to identify search trends for content with the word “how” because a lot of people search on YouTube using phrases with the word “how” in them.

Although these are searches conducted on YouTube, the trends data is useful because it shows what users are looking for.

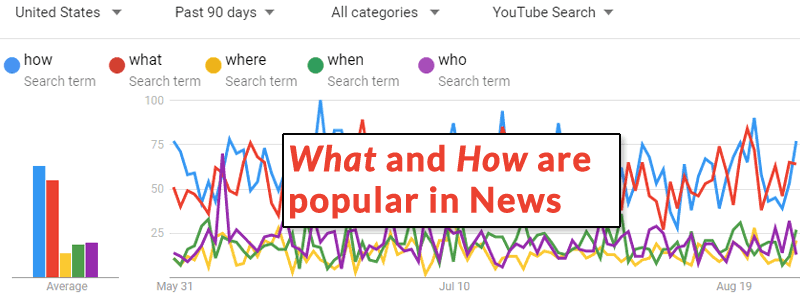

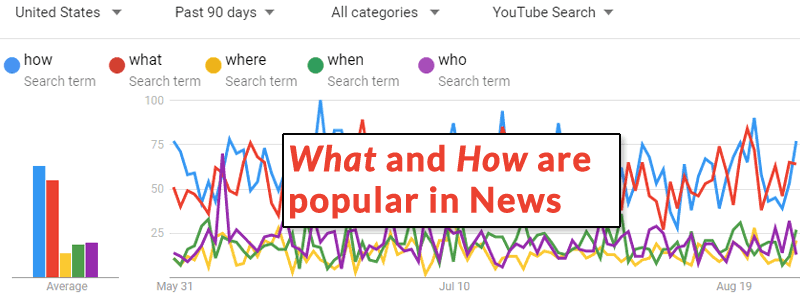

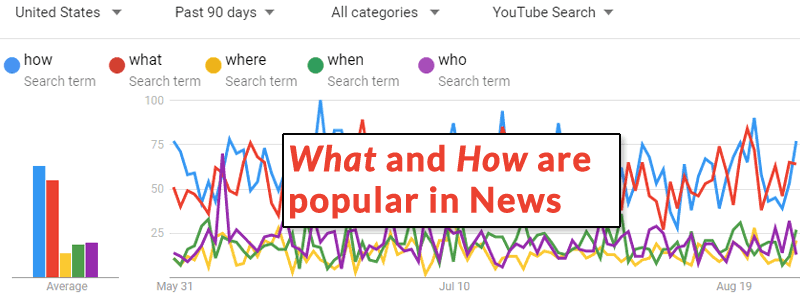

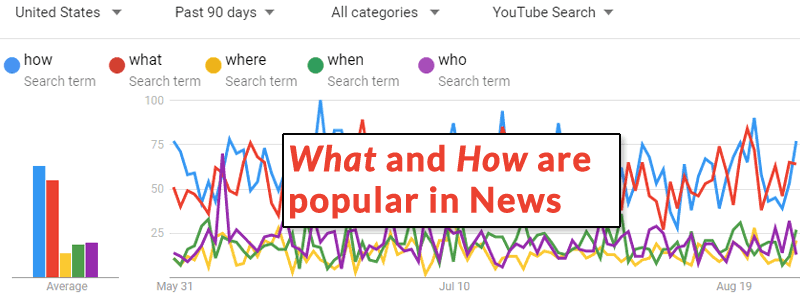

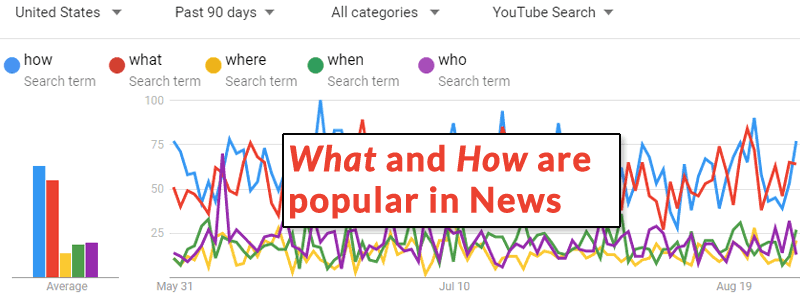

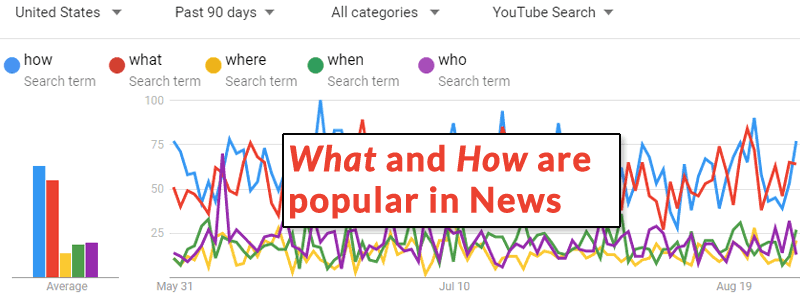

A Google Trends search for how, what, where, when, why, and who shows that search queries beginning with the word “how” are by far the most popular on YouTube.

Google Trends limits comparisons to five keywords, so the following screenshot omits one of the six words.

If your keyword phrases involve instructional content that uses words like “how to,” refining your research with the YouTube search type may provide useful insights.

For example, I have found that YouTube Search shows more relevant “related topics” and “related queries” data than researching with “web search” selected.

Here’s another example of how using different kinds of search types helps refine Google Trends data.

I did the same how, what, where, when, why, and who searches but this time using the News Search refinement.

The search trends in Google News are remarkably different than the search patterns on YouTube. That’s because people want to know the “what” and “how” types of information in Google News.

When creating content related to news, identifying the correct angle to report a news item is important.

Knowing that the words “what” or “who” are most relevant to a topic can be useful for crafting the title to what the readers are most interested in.

The above is the view of search queries for the past 90 days.

When the same keywords are searched using the 5-year perspective, it becomes clear that the “who” type keywords tend to spike according to current events.

As an example of how current events influence trends, the biggest spike in searches with the word “who” occurred in the days after the 2020 presidential election.

Every Search Type refinement offers a different way to refine the results so that they show more accurate information.

So, give the Search Type selections a try because the information that is provided may be more accurate and useful than the more general and potentially noisy “web search” version.

Free tools are generally considered to be less useful than paid tools. That’s not necessarily the case with Google Trends.

This article lists seven ways to discover useful search-related trends and patterns that are based on actual search queries, which is not true of some search-related data from paid tools.

What’s especially notable is that this article only begins to scratch the surface of all the information that’s available.

Check out Google Trends and learn additional ways to mix different search patterns to obtain even more useful information.


Featured Image: Studio Romantic/Shutterstock

Digital marketing is about blending art and science, merging creative ideas with actionable, trackable steps.

But before tweaking your on-page content or restructuring your website, you need to know what’s working well already and where you have the potential for growth.

This is where search forecasting comes in.

Search forecasting is the practice of predicting what your organic traffic will look like.

All good SEO strategies start with hard data. That’s ultimately what should be shaping your next move – not best guesses and assumptions.

With data in hand, you’ll be able to predict what search traffic might look like for your business and use this to plan out your upcoming campaigns.

When working on organic traffic predictions, here are a few key details that you should keep in mind.

Keyword research is the backbone of any SEO strategy, so begin there.

You might think you know exactly what search phrases will be most beneficial for your business, but it’s best to set those assumptions aside in a separate column of your spreadsheet and look at the actual data.

There are dozens of possible metrics that you could look at when it comes to keyword data.

Regardless of the industry you’re working in or the type of content you’re working with, your research should include data or evidence on:

If you aren’t able to find data for some of this, your predictions won’t be as accurate but can still be valuable.

The most accessible piece will be search volume data – you need to know if your traffic goals match real user behavior in search results with the keywords you’re planning to use.

The rest of the metrics here will help you prioritize beyond search volume and come up with more realistic predictions.

They give you important insight into how competitive particular phrases are, where you stack up among the current players in search engine results pages (SERPs), and where there’s an opportunity for additional optimization to capitalize on changes in user intent.

You’re not expected to magic your keyword data out of thin air, and there’s only so much that your own site tracking can tell you.

But Google Search Console (GSC) is a good place to start.

Where other tools can tell you general keyword metrics, GSC will provide you with business-specific historical data to give you a good (internal) benchmark to work from.

Bot traffic can impact anything in GSC, and if you’re trying to rank for local results, the search volume is dependent on where a search is actually being made from in relation to the keyword being used.

There will also be differences in numbers pulled from GSC versus Semrush, Moz, Ahrefs, or any other SEO tools you might use.

Once you have everything together in a spreadsheet, though, averages will be enough for you to put together a reasonably confident prediction.
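As a rough illustration of the averaging step, the sketch below combines volume estimates from several sources and skips any source that has no data for a keyword. The source names and numbers are hypothetical placeholders, not real tool output.

```python
from statistics import mean


def average_volume(estimates):
    """Average the available estimates, ignoring sources with no data (None)."""
    available = [value for value in estimates.values() if value is not None]
    if not available:
        return None
    return mean(available)


# Hypothetical monthly volume estimates for one keyword
keyword_estimates = {
    "source_a": 1800,
    "source_b": 2400,
    "source_c": None,  # this source has no data for the keyword
    "source_d": 2100,
}

print(average_volume(keyword_estimates))
```

A plain mean is the simplest choice; if one source is consistently an outlier, a median may be a safer summary.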

Google Keyword Planner is another option to check out, but its accuracy is questionable.

In many cases, search volume data is exaggerated due to combined estimates with similarly phrased keywords, so take this data with a grain of salt.

You may find this type of data is better used to calculate ad savings after capturing rankings as another data point of organic search return on investment (ROI).

Moving outside of the keyword data specifically, you should be using competitive analysis as part of your overall traffic prediction.

Look at who already appears on page one of the SERPs that you want to be on.

Plug competitor URLs into keyword tools to see what they’re ranking for and, crucially, what they’re not ranking for. Combine some of this data with your own keyword research to find opportunities.

This is where knowing keyword difficulty can be helpful.

If competitors are ranking for phrases that have a good volume but low difficulty, there may be a chance for you to produce better, more helpful content and move above that competitor in SERPs.

This will naturally change some of your predictions for search volume if you can move up from page two or three to page one.
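One common way to model this change is to multiply search volume by an assumed click-through rate (CTR) for each ranking position. The CTR values below are invented for illustration only; real curves vary by query and industry, so substitute figures from your own data.

```python
# Hypothetical click-through rates by ranking position (assumptions, not benchmarks)
ASSUMED_CTR = {1: 0.28, 3: 0.10, 5: 0.05, 10: 0.02, 15: 0.005}


def estimated_clicks(monthly_searches, position, ctr_by_position=ASSUMED_CTR):
    """Estimate monthly clicks for a keyword at a given ranking position."""
    if position not in ctr_by_position:
        raise KeyError(f"no assumed CTR for position {position}")
    return monthly_searches * ctr_by_position[position]


def traffic_gain(monthly_searches, current_position, target_position):
    """Estimated extra monthly clicks from moving between two positions."""
    return (estimated_clicks(monthly_searches, target_position)
            - estimated_clicks(monthly_searches, current_position))


# Moving a 2,000-searches-per-month keyword from position 15 to position 5
print(round(traffic_gain(2000, 15, 5)))
```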

This is also the time to assess if some related queries might also have content updates or development opportunities.

Are your competitors still using a single-keyword-per-page strategy? (You would be surprised!)

This might be where you can make up some competitive ground by building keyword families.

Whether you’re working on a year-long SEO strategy or a fixed-length campaign, understanding the seasonal pattern of both your business and keywords is essential.

One of the most important things to remember with seasonal traffic, and something that many people get wrong, is that your business’s busiest time of the year doesn’t always equal high search volume.

Customers don’t usually buy straight away, so you’ll often have weeks, even months, of lead time from high search volume to tangible sales increases.

Depending on what industry you work in, you may already work on this kind of accelerated marketing schedule. Retail is a prime example of this – fashion weeks in early fall are already debuting spring/summer lines for the following year.

And for most product businesses, you’ll be looking ahead to the holiday season around May or June, certainly no later than July to begin your planning.

It’s important to know what your search-to-sale lead time looks like because this will impact not only your predictions for search traffic but also the content strategy you put together based on these predictions.

Rolling out holiday gift guides in November in the hope that you’re going to rank instantly and make big sales within the first week because of good search engine rankings is simply not realistic.

(If that’s something you’re looking to do, paid advertising is going to be a better option.)

Tools like Google Trends can be helpful for getting overall estimates of when search volume starts to pick up for seasonal queries.

Use this data with what you know about your own business outputs to map out how far ahead of search increases you need to be putting out content and optimizing for jumps in traffic.
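The mapping described above amounts to subtracting a lead time from the date when searches start to rise. Here is a minimal sketch; the function name, the pickup date, and the 12-week lead time are all hypothetical values chosen for the example.

```python
from datetime import date, timedelta


def content_publish_date(search_pickup, lead_weeks):
    """Date to publish by, given when searches pick up and the lead time needed."""
    return search_pickup - timedelta(weeks=lead_weeks)


# Assumption: seasonal searches start climbing on October 1, and content
# needs roughly 12 weeks to be indexed and to earn rankings.
pickup = date(2023, 10, 1)
print(content_publish_date(pickup, 12))  # 2023-07-09
```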

While we already know that we can’t account for mass changes to search algorithms or unexpected world events, there are also other unpredictable factors that need to be accounted for on a smaller scale.

Particularly in product-based businesses, other marketing efforts can have a positive or negative impact on your overall search predictions.

Products can quickly go viral on social media, even without any exhaustive marketing effort on your part.

When they do, search demand can significantly increase in ways that you were unprepared for.

And when you run those searches through SEO tools, they won’t be accounting for that unexpected rise in traffic.

Reactive versus predictive demand, particularly if you make a similar product to, or a dupe of, a viral product, is almost impossible to plan for.

If you find yourself running into those situations, take this into account for search traffic predictions in future years where possible and reallocate your resources accordingly.

Forecasting your organic traffic means that you have a rough idea of expected results if conditions stay as predicted.

It allows you to better allocate internal resources, budget for your upcoming campaigns and set internal benchmarks. This can cover everything from expected new traffic if rankings are captured to increased revenue based on current conversion rates.
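To connect forecast traffic to revenue, a simple model multiplies expected visits by the current conversion rate and an average order value. The sketch below uses that assumed model with hypothetical inputs; it is a starting point, not a complete forecast.

```python
def forecast_revenue(monthly_visits, conversion_rate, average_order_value):
    """Expected monthly revenue from organic visits under a simple linear model."""
    if not 0 <= conversion_rate <= 1:
        raise ValueError("conversion_rate must be between 0 and 1")
    return monthly_visits * conversion_rate * average_order_value


# Hypothetical inputs: 1,500 new monthly visits, a 2% conversion rate,
# and an average order value of 80 (in your reporting currency)
extra_revenue = forecast_revenue(1500, 0.02, 80)
print(round(extra_revenue, 2))
```

Running the same calculation with a pessimistic and an optimistic traffic figure gives a range, which is often easier to defend than a single number.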

Knowing this information ahead of time can be critical in getting stakeholder buy-in, particularly if you work in enterprise SEO and your growth goals are set once or twice a year.

If estimates don’t align with expectations, you have the leverage to ask for a revised goal or additional resources to make those expectations more achievable.

Of course, there needs to be a disclaimer here.

Wide-scale algorithm updates, a new website design, changes in user behavior and search trends, or even another round of “unprecedented times” will all have drastic effects on what search results look like in reality.

Those are almost impossible to plan for or predict the exact impact of.

But issues aside, SEO forecasting is still worth investing time into.

You don’t have to be a data scientist to predict your search traffic.

With the right tools and approaches, you can start to get a good picture of what you can expect to see in the coming months and set more realistic benchmarks for organic search growth.

The goal of predicting your organic search traffic is to help you make more informed decisions about your ongoing SEO strategy.

Opportunities are out there, you just have to find them.

You’ll always come up against obstacles with forecasting, and it will never be 100% accurate, but with solid data to back you up, you’ll have a good benchmark to work from to build a strategically-sound search marketing plan.


Featured Image: eamesBot/Shutterstock

Large language models are one of the hottest areas of AI research right now, with companies racing to release programs like GPT-3 that can write impressively coherent articles and even computer code. But there’s a problem looming on the horizon, according to a team of AI forecasters: we might run out of data to train them on.

Language models are trained using texts from sources like Wikipedia, news articles, scientific papers, and books. In recent years, the trend has been to train these models on more and more data in the hope that it’ll make them more accurate and versatile.

The trouble is, the types of data typically used for training language models may be used up in the near future—as early as 2026, according to a paper by researchers from Epoch, an AI research and forecasting organization, that is yet to be peer reviewed. The issue stems from the fact that, as researchers build more powerful models with greater capabilities, they have to find ever more texts to train them on. Large language model researchers are increasingly concerned that they are going to run out of this sort of data, says Teven Le Scao, a researcher at AI company Hugging Face, who was not involved in Epoch’s work.

The issue stems partly from the fact that language AI researchers filter the data they use to train models into two categories: high quality and low quality. The line between the two categories can be fuzzy, says Pablo Villalobos, a staff researcher at Epoch and the lead author of the paper, but text from the former is viewed as better-written and is often produced by professional writers.

Data from low-quality categories consists of texts like social media posts or comments on websites like 4chan, and greatly outnumbers data considered to be high quality. Researchers typically only train models using data that falls into the high-quality category because that is the type of language they want the models to reproduce. This approach has resulted in some impressive results for large language models such as GPT-3.

One way to overcome these data constraints would be to reassess what’s defined as “low” and “high” quality, according to Swabha Swayamdipta, a University of Southern California machine learning professor who specializes in dataset quality. If data shortages push AI researchers to incorporate more diverse datasets into the training process, it would be a “net positive” for language models, Swayamdipta says.

Researchers may also find ways to extend the life of data used for training language models. Currently, large language models are trained on the same data just once, due to performance and cost constraints. But it may be possible to train a model several times using the same data, says Swayamdipta.

Some researchers believe big may not equal better when it comes to language models anyway. Percy Liang, a computer science professor at Stanford University, says there’s evidence that making models more efficient may improve their ability, rather than just increase their size.

“We’ve seen how smaller models that are trained on higher-quality data can outperform larger models trained on lower-quality data,” he explains.

The UN climate conference just wrapped up over the weekend after a marathon negotiating session that stretched talks nearly 48 hours past their scheduled conclusion. (A question for my editor: the UN isn’t hitting deadlines, so do I still have to?)

The most notable outcome from the conference was establishment of a fund to help poor countries pay for climate damages. That piece is being hailed as a win. But beyond that victory, some leaders are concerned there wasn’t enough progress at this year’s talks.

And everyone is pointing fingers, blaming others for not taking action fast enough on climate funding. Activists are calling the US the ‘colossal fossil,’ and US leaders are complaining about being blamed while China is the current leading emitter. So let’s dig into some data and consider how researchers and climate analysts think about climate responsibility.

As I wrote about a couple of weeks ago in the newsletter, one of the major discussions at COP27 was about whether richer countries should help poorer, more vulnerable nations pay for the impacts of climate change. Climate disasters were top of mind this year, especially after devastating flooding in Pakistan killed over 1,000 people and displaced millions more. Total cost estimates topped $40 billion.

After two weeks of negotiations, delegates at COP27 reached an agreement on financing for loss and damage ... sort of. There will be a fund, but how much is in it and how it will work is unclear. Details are set to be ironed out at, you guessed it, another UN climate conference: COP28 is scheduled for next year in Dubai.

Countries paying into the loss and damages fund aren’t admitting blame or accepting liability for climate damages. But the creation of the fund and all the discussions around climate damage have brought up the question: who got us into this mess? And who should be paying for it?

When it comes to greenhouse gas emissions, history matters. Here’s what I mean by that:

When I was first learning about climate science, this logic floored me. It’s so intuitive, but it recast the debate around national climate responsibility in my head. I’d always heard that China was the country we should all be talking about when it came to emissions. They’re the biggest climate polluter today, after all.

But when you add up total emissions, it’s super clear: the US is by far the greatest total emitter, responsible for about a quarter of all emissions ever. The EU is next, with about 17% of the total. Finally we have China, coming in third.

So the US and EU together account for about 40% of total emissions, a huge chunk of what’s driving climate change today. This matters because energy from fossil fuels helped grow economies for centuries, so the status of the US and EU today is in large part due to all those fossil fuels. And now, those emissions are supercharging disasters around the world.

“A quarter of the CO2 in our atmosphere is red, white and blue.”

China’s emissions have shot up over the past couple of decades, so you might be wondering: when will they catch up and take the top spot? I put this question to Robbie Andrew, a senior researcher at the Center for International Climate Research in Norway and a climate data expert.

“Just using some simplified scenarios, I think it could be another 30 years before China overtakes the US on cumulative emissions,” Andrew told me in an email. “The US has a good head start here.”

Simon Evans of CarbonBrief put it another way on Twitter: if emissions are constant, the US will still have a solid lead in total emissions in 2030. In fact, the US could emit nothing between now and 2030 and still be ahead of China.

A final datapoint to consider: by per capita emissions, the US again takes first place in the world by far. Check out that per capita data and some other great charts in my story from last week.

Now, I’m not saying that everyone else gets off the hook because the US leads the world on a few climate metrics. But I think it’s important that we consider the full context, both of history and the current state of things, as we get into conversations about how we can curb climate change and how we can deal with the impacts we’re seeing today.

It’s not fair that some countries have emitted far more than others, and will continue to do so, while others are the ones getting hit the hardest by climate change. But ultimately, the world needs to cut emissions to zero as quickly and equitably as possible, and we’ve got a long way to go.

Lab-grown meat just reached a huge milestone in the US: an FDA no-questions letter. After clearing a couple more regulatory hurdles, Upside Foods could begin selling its cultured meat as early as next year. (Wired)

→ This isn’t the first commercially-available cultured meat product, though. That honor goes to startup Just, which launched lab-grown chicken nuggets in Singapore in 2020. (MIT Technology Review)

→ Lab-grown meat arguably still isn’t a given: it’s likely going to be expensive, and winning over doubters could be difficult. (MIT Technology Review)

→ My colleague Niall Firth dug deep into the race to make a lab-grown steak in 2019. (MIT Technology Review)

Biofuels might intuitively seem like they’re good for the climate, but a total farming revamp might be necessary to keep the promise alive. (Nature)

The UN is calling for protection of a nuclear power plant in Ukraine. The reactors at the Zaporizhzhia plant have been shut down since September, which reduces but doesn’t totally eliminate the risk of an accident. (New York Times)

Energy Vault promised a new way of storing energy with rock elevators. But now, it’s selling a lot of batteries. (Canary Media)

Qatar says the World Cup is carbon neutral. But the carbon offsets it’s relying on are sketchy, at best. (Bloomberg)

→ The problem of low-quality offsets isn’t a new one. Read my colleague James Temple’s investigation into offsets in California, which was included in this year’s Best American Science and Nature Writing compilation. (MIT Technology Review with Propublica)

Three Midwestern states are leading the charge on equitable access to EV charging, with the help of new federal funding. (Inside Climate News)

India isn’t a monolith. Acknowledging differences in income and infrastructure across the country in climate policies will be a key piece of cutting India’s emissions. (Science)

→ India plans to reach net zero emissions by 2070. Here’s why that plan makes sense. (MIT Technology Review)

Advanced x-rays can be used to spot defects in batteries. They’re also fascinating to look at.

I recently came across this site called Scan of the Month, which drops a new set of CT scans monthly. And it just so happens that October’s edition was batteries. They took a look inside a Duracell alkaline battery, as well as two different formats of lithium-ion cells.

They’ve also scanned a golf club, AirPods, and Lego minifigures. Check out the site to satisfy your curiosity without pulling apart any of your personal electronics.

This is today’s edition of The Download, our weekday newsletter that provides a daily dose of what’s going on in the world of technology.

The US and China are pointing fingers at each other over climate change

The UN climate conference wrapped up over the weekend after marathon negotiations that ran way over. The most notable outcome was the establishment of a fund to help poor countries pay for climate damages, which was hailed as a win. Beyond that, some leaders are concerned there wasn’t enough progress at this year’s talks.

Consequently, everyone is pointing fingers, blaming others for not taking action fast enough on climate funding. Activists are calling the US the ‘colossal fossil,’ and US leaders are complaining about being blamed when China is the current leading emitter.

But to work out who should be paying for climate damages, we need to look beyond current emissions. When you add up historic emissions, it’s super clear: the US is by far the greatest total emitter, responsible for about a quarter of the total. Read the full story.

—Casey Crownhart

Casey’s story is from the Spark, her weekly newsletter delving into the tricky science of climate change. Sign up to receive it in your inbox every Wednesday.

We could run out of data to train AI language programs

What’s happening? Large language models are one of the hottest areas of AI research right now, with companies racing to release programs like GPT-3 that can write impressively coherent articles and even computer code. But there’s a problem looming on the horizon, according to a team of AI forecasters: we might run out of data to train them on.

How long have we got? As researchers build more powerful models with greater capabilities, they have to find ever more texts to train them on. The types of data typically used for these models may be used up in the near future—as early as 2026, according to a paper by researchers from Epoch, an AI research and forecasting organization. Read the full story.

—Tammy Xu

Podcast: Want a job? The AI will see you now.

In the past, hiring decisions were made by people. Today, some key decisions that determine whether someone gets a job are made by algorithms. In this episode of our award-winning podcast, In Machines We Trust, we meet some of the big players making this technology, including the CEOs of HireVue and myInterview, and test some of these tools ourselves.

Listen to it on Apple Podcasts, or wherever you usually listen.

The must-reads

I’ve combed the internet to find you today’s most fun/important/scary/fascinating stories about technology.

1 FTX’s collapse should be a major cautionary tale for the crypto industry

Unfortunately, it won’t necessarily result in better regulations. (New Yorker $)

+ Crypto isn’t known for heeding bad omens, after all. (Vox)

+ FTX has invested millions into, err, a tiny bank. (NYT $)

+ Sam Bankman-Fried’s favorite “longtermism” ideology sounds bogus. (Motherboard)

+ He hasn’t done the effective altruism movement any favors, either. (The Atlantic $)

2 Elon Musk probably won’t declare bankruptcy

That doesn’t mean his financial backers can rest easy, though. (The Atlantic $)

+ Here’s who’s paying for Twitter right now. (NYT $)

+ Former Twitter employees fear the platform might only last weeks. (MIT Technology Review)

3 Measles is a growing global threat

Vaccination rates are down, and it’s incredibly contagious. (Axios)

4 Maybe it’s time we stopped automatically trusting billionaires

Exercising healthy cynicism isn’t the same as being a hater. (Vox)

+ A lot of big tech bosses wrongly assumed their covid-highs would last forever. (Slate $)

5 The true cost of America’s war on China’s chips

The pricier the components, the more expensive the final product will be. (FT $)

+ Workers at the world’s biggest iPhone factory are rioting. (Bloomberg $)

+ Inside the software that will become the next battle front in the US-China chip war. (MIT Technology Review)

6 Rocks on Mars suggest it could once have been habitable

Organic molecules found in the rocks may have supported forms of life. (WP $)

+ A UK-made Mars rover is heading back to the red planet. (BBC)

7 Why future concrete may contain bacteria

Bioconcrete is strong, and—crucially—greener. (Economist $)

+ These living bricks use bacteria to build themselves. (MIT Technology Review)

8 The experience of shopping on Amazon really sucks these days

And it’s because everything is an advert. (WP $)

9 What it’s like to love the tech the world’s left behind

From Walkmans to BlackBerrys, these ardent fans aren’t letting go. (The Guardian)

+ Smartphones have survived all the attempts to replace them. (The Verge)

10 The comments on YouTube’s videos are works of art

Literally—an artist has made them into actual art. (New Yorker $)

Quote of the day

“He’s always trying to get a laugh, that’s why he makes all his cars suicidal.”

—Dril, one of the seminal personalities of the humorous corner of “weird Twitter,” reflects on Elon Musk’s surreal leadership to the Washington Post.

The big story

What does breaking up Big Tech really mean?

June 2021

For Apple, Amazon, Facebook, and Alphabet, covid-19 was an economic blessing. Even as the pandemic sent the global economy into a deep recession and cratered most companies’ profits, these companies—often referred to as the “Big Four” of technology—not only survived but thrived.

Yet at the same time, they have come under unprecedented attack from politicians and government regulators in the US and Europe, in the form of new lawsuits, proposed bills, and regulations. There’s no denying that the pressure is building to rein in Big Tech’s power. But what would that entail? Read the full story.

—James Surowiecki

We can still have nice things

A place for comfort, fun and distraction in these weird times. (Got any ideas? Drop me a line or tweet ’em at me.)

+ This kitten’s goalkeeping is just extraordinary.

+ I really enjoy the color combos this Twitter bot comes up with (thanks Niall!)

+ Atarah Ben-Tovim sounded like an amazingly inspiring music teacher.

+ How to expand your movie-watching horizons and delve into something new.

+ After the recent chess cheating scandal, I can’t trust anyone anymore. Here’s how to spot a dodgy opponent.

An orphan page has no internal links to it. Most search engine optimization tools mark orphan pages as a critical issue, but it’s not that straightforward. The page may have a legitimate rationale, such as:

In those cases, insert a noindex meta tag on the page to prevent search engines from indexing it.

However, orphan pages are a problem when they (i) are indexed by Google and (ii) represent roughly 20% or more of a site’s overall indexed URLs.

Similarly, near-orphan pages, those with just one or two internal links pointing to them, could also become an SEO problem.
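The 20% rule of thumb above can be checked with a few lines of Python. This is only a sketch: the function names, the input lists, and the idea of exporting indexed URLs from a tool such as Search Console are assumptions for illustration, not part of any specific product.

```python
def orphan_share(indexed_urls, orphan_urls):
    """Return the fraction of indexed URLs that are also orphan pages."""
    indexed = set(indexed_urls)
    if not indexed:
        return 0.0
    # Only orphans that are actually indexed count toward the share.
    indexed_orphans = indexed & set(orphan_urls)
    return len(indexed_orphans) / len(indexed)


def is_orphan_problem(indexed_urls, orphan_urls, threshold=0.20):
    """Flag a site when indexed orphans reach the (assumed) 20% threshold."""
    return orphan_share(indexed_urls, orphan_urls) >= threshold
```

Orphan pages that are not indexed are ignored, which matches the first condition: an orphan page only matters here if Google has indexed it.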

Google uses internal links to gauge the “importance” of a page. Google assumes a page has little value if it’s barely linked. And a site with a large percentage of low-value pages sends a bad Panda-algorithm signal to Google.

More importantly, low-value pages waste Googlebot’s crawl budget and dilute internal link authority, thus lowering organic search rankings for the entire site.

Plus, in my experience, orphan pages often signal larger structural problems. For example, a site with 20% or more orphan or poorly-linked pages likely has poor architecture or a migration gone wrong. Both require immediate attention.

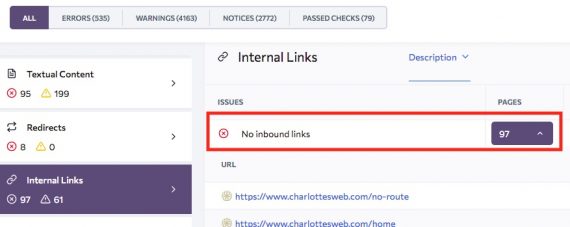

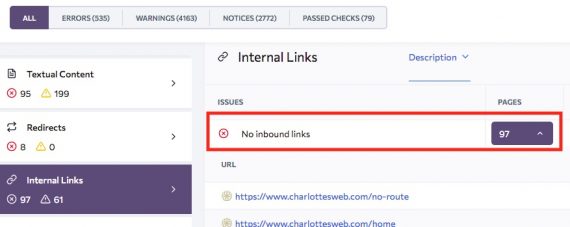

Use a crawler tool to find pages with no (or very few) internal backlinks. The free version of Screaming Frog works for sites with fewer than 500 pages. Larger sites require the paid version of Screaming Frog or other solutions. For example, JetOctopus has many useful features, such as log analysis and Search Console integration.

A site crawler lists all URLs (via log file search) and their number of internal links. Sorting results to show URLs by the fewest internal links quickly identifies orphan and near orphan pages.
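For readers who want to see the idea without a commercial tool, here is a minimal sketch of the counting and sorting step. It assumes you already have a list of all known URLs and a list of (source, target) internal link pairs from a crawl export; the function names and the near-orphan cutoff of two links are illustrative assumptions.

```python
from collections import Counter


def inbound_link_counts(pages, links):
    """Count distinct internal links pointing at each known page.

    pages: iterable of all known URLs (e.g. from a sitemap or log files).
    links: iterable of (source_url, target_url) pairs found by a crawl.
    """
    counts = Counter({page: 0 for page in pages})
    # set() drops repeated links from the same source to the same target.
    for source, target in set(links):
        if source != target and target in counts:
            counts[target] += 1
    return counts


def classify(counts, near_orphan_max=2):
    """Sort URLs by fewest inbound links and split out the weak ones."""
    ranked = sorted(counts.items(), key=lambda item: (item[1], item[0]))
    orphans = [url for url, n in ranked if n == 0]
    near_orphans = [url for url, n in ranked if 0 < n <= near_orphan_max]
    return orphans, near_orphans
```

Self-links are skipped, since a page linking to itself does not make it reachable from the rest of the site.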

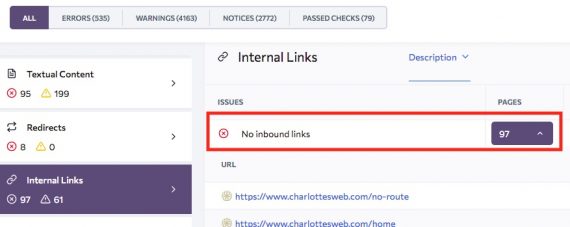

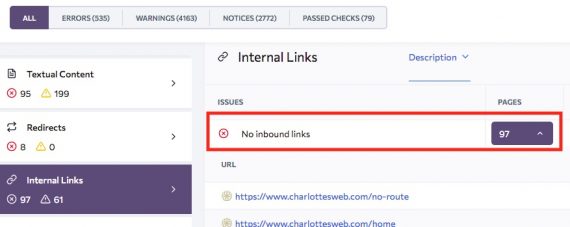

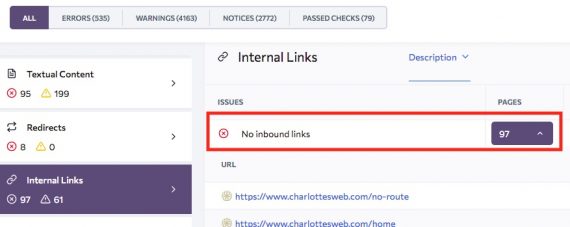

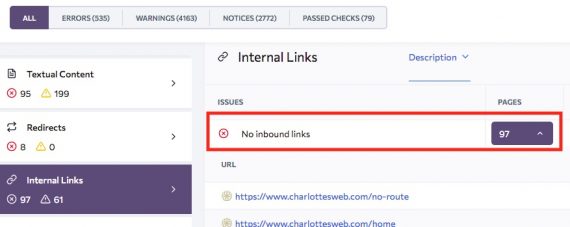

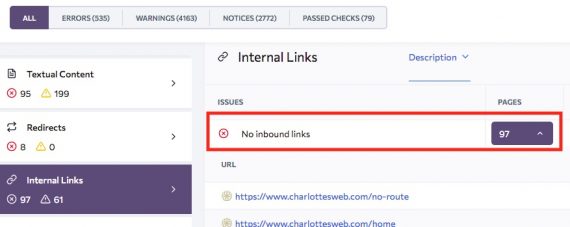

An easier solution is SE Ranking’s Website Audit tool. It will find problems such as orphan pages and suggest solutions.

SE Ranking’s Website Audit tool identifies pages with no inbound links. This example is for a site with 97 such pages.

Fixing an orphan page depends on the circumstances.

Expired pages with content, such as products removed from the catalog or expired offers. Make sure these pages have clear links to similar products. I wouldn’t remove or noindex these pages as they may still drive (and convert) traffic from external backlinks and organic search results.

Dynamic, indexed URLs occur when a site’s internal search creates a unique URL with every query or filtered browse. Again, these orphan pages are a problem only if indexed by Google. Use Search Console’s URL Inspection tool to determine a page’s status and how Google discovered it. If indexed, add a noindex tag or a rel=canonical directive pointing to a static alternative.
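To confirm that a page actually carries one of these directives, a short script can parse its HTML. The sketch below uses Python’s standard `html.parser` module; the class and function names are hypothetical, and it only inspects markup you pass in, so fetching the page and checking HTTP headers are left out.

```python
from html.parser import HTMLParser


class IndexingHints(HTMLParser):
    """Collect the robots noindex flag and canonical URL from a page."""

    def __init__(self):
        super().__init__()
        self.noindex = False
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() in ("robots", "googlebot"):
            content = (attrs.get("content") or "").lower()
            # The content attribute is a comma-separated list of directives.
            if "noindex" in [part.strip() for part in content.split(",")]:
                self.noindex = True
        elif tag == "link" and "canonical" in (attrs.get("rel") or "").lower().split():
            self.canonical = attrs.get("href")


def indexing_hints(html):
    """Return (has_noindex, canonical_url_or_None) for an HTML string."""
    parser = IndexingHints()
    parser.feed(html)
    return parser.noindex, parser.canonical
```

For example, a page whose head contains `<meta name="robots" content="noindex, follow">` would report a noindex flag of `True`.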

The causes for indexed dynamic URLs could be a malfunctioning sitemap that includes them or external links pointing to them. Sometimes a malware attack produces many indexed (dynamic) orphan pages. Sometimes it’s a faulty content management system or plugin. All require immediate attention.

Indexed non-existent pages. This occurs due to CMS bugs or improper site migrations. First, make sure these pages return 404 status codes so Google will eventually remove them from its index. Then fix your CMS or migration errors.

Pages orphaned accidentally — usually after a redesign or a site migration — are the top priority. Once identified, include them as soon as possible in the site’s architecture and internal linking structure to recover rankings.

Orphan page analysis typically identifies broader problems such as a faulty site structure, a broken CMS, or even a malware attack. The analysis is essential after a site migration or redesign. Regardless, fixing an orphan page is necessary only if it should rank in organic search results.