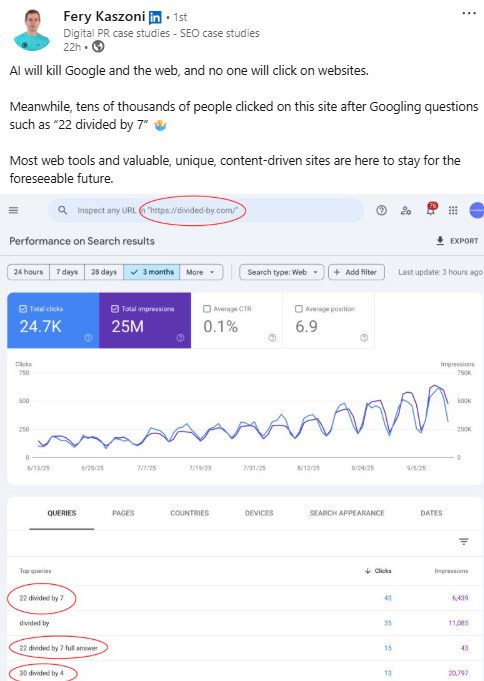

Google rolled out AI Overviews to all U.S. users in May 2024. Since then, publishers have reported significant traffic losses, with some seeing click-through rates drop by as much as 89%. The question isn’t whether AI Overviews impact traffic, but how much damage they’re doing to specific content types.

Search (including Google Discover and traditional Google Search) consistently accounts for between 20% and 40% of referral traffic to most major publishers, making it their largest external traffic source. When DMG Media, which owns MailOnline and Metro, reports nearly 90% declines for certain searches, it’s a stark warning for traditional publishing.

After more than a year of AI Overviews (and Search Generative Experience), we have extensive data from publishers, researchers, and industry analysts. This article pulls together findings from multiple studies covering hundreds of thousands of keywords, tens of thousands of user searches, and real-world publisher experiences.

The evidence spans from Pew Research’s 46% average decline to DMG Media’s 89% worst-case scenarios. Educational platforms like Chegg report a 49% decline. But branded searches are actually increasing for some, suggesting there are survival strategies for those who adapt.

This article explains what’s really happening and why, including the types of content that face the biggest changes and which are staying relatively stable. You’ll understand why Google says clicks are “higher quality” even as publishers see traffic declines, and you’ll see what changes might make sense based on real data rather than guesses.

AI Overviews are the biggest change to search since featured snippets were introduced in 2014. They’re affecting the kinds of content publishers produce, and they’re increasing zero-click searches, which now make up 69% of all queries, according to Similarweb.

Whether your business relies on search traffic or you’re just watching industry trends, these patterns are significantly impacting digital marketing.

What we’re seeing is a new era in search and a change that is reshaping how online information is shared and how users interact with it.

AI Overview Studies: The Overwhelming Evidence

Google’s AI Overviews (AIO) have impacted traffic across most verticals and altered search behavior.

The feature, which was first introduced as Search Generative Experience (SGE) announced at Google I/O in May 2023, now appears in over 200 countries and 40 languages following a May 2025 expansion.

Independent research conducted throughout 2024 and 2025 shows click-through rate reductions ranging from 34% to 46% when AI summaries appear on search results pages.

Evidence from a variety of independent studies outlines the impact of AIO and shows a range of effects depending on the type of content and how it’s measured:

Reduced Click Through Rates – Pew Research Center

A study by Pew Research Center provides a rigorous analysis. By tracking 68,000 real search queries, researchers found that users clicked on results 8% of the time when AI summaries appeared, compared to 15% without them. That’s a 46.7% relative reduction.

Pew’s study tracked actual user behavior, rather than relying on estimates or keyword tools, validating publisher concerns.

Google questioned Pew’s methodology, claiming that the analysis period overlapped with algorithm testing unrelated to AI Overviews. However, the decline and its connection to AI Overview presence suggest a notable relationship, even if other factors played a role.

Position One Eroded – Ahrefs

Ahrefs’ analysis found that position one click-through rates dropped for informational keywords triggering AI Overviews.

Ryan Law, Director of Content Marketing at Ahrefs, stated on LinkedIn:

“AI Overviews reduce clicks by 34.5%. Google says being featured in an AI Overview leads to higher click-through rates… Logic disagrees, and now, so does our data.”

Law’s observation gets to the heart of a major contradiction: Google says appearing in AI Overviews helps publishers, but the math of fewer clicks suggests this is just corporate doublespeak to appease content creators.

His post garnered over 8,200 reactions, indicating widespread industry agreement with these findings.

More Zero-Click Searches – Similarweb

According to Similarweb data, zero-click searches increased from 56% to 69% between May 2024 and May 2025. While this captures trends beyond AI Overviews, the timing aligns with the rollout.

Zero-click searches work because they meet user needs. For example, when someone searches for “weather today” or a stock price, getting an instant answer without clicking is helpful. The issue comes when zero-click searches creep into areas where publishers used to offer in-depth content.

Stuart Forrest, global director of SEO digital publishing at Bauer Media, confirms the trend, telling the BBC:

“We’re definitely moving into the era of lower clicks and lower referral traffic for publishers.”

Forrest’s admitting to this new reality shows that the industry as a whole is coming to terms with the end of the golden age of search traffic. Not with a dramatic impact, but with a steady decline in clicks as AI meets users’ needs before they ever leave Google’s ecosystem.

Search Traffic Decline – Digital Content Next

An analysis by Digital Content Next found a 10% overall search traffic decline among member publishers between May and June.

Although modest compared to DMG’s worst-case scenarios, this represents millions of lost visits across major publishers.

AIO Placement Volatility – Authoritas

An Authoritas report finds that AI Overview placements are more volatile than organic ones. Over a two- to three-month period, about 70% of the pages cited in AI Overviews changed, and these changes weren’t linked to traditional organic rankings.

This volatility is why some sites experience sudden traffic drops even when their blue-link rankings seem stable.

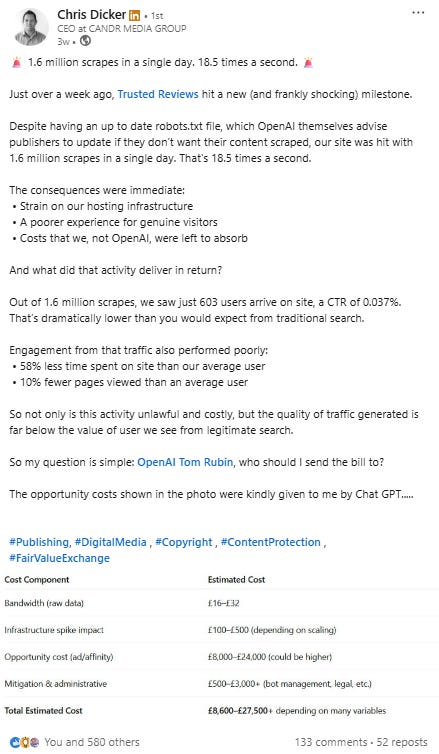

Click-Based Economy Collapse For News Publishers – DMG Media

A statement from DMG Media to the UK’s Competition and Markets Authority reveals click-through rates dropped by as much as 89% when AI Overviews appeared for their content.

Although this figure represents a worst-case scenario rather than an average, it highlights the potential for traffic losses for certain search types.

Additionally, there are differences in how AI Overviews affect click-through rates depending on the device type.

The Daily Mail’s desktop CTR dropped from 25.23% to 2.79% when an AI Overview surfaced above a visible link (-89%), with mobile traffic declining by 87%; U.S. figures were similar.

These numbers indicate we’re facing more than just a temporary adjustment period. We’re witnessing a structural collapse of the click-based economy that has supported digital publishing since the early 2000s. With traffic declines approaching 90%, we’ve gone beyond optimization tactics and into existential crisis mode territory.

The submission to regulatory authorities suggests they’re confident in these numbers, despite their magnitude.

Educational Site Disruption – Chegg

Educational platforms are experiencing disruption from AI Overviews.

Learning platform Chegg reported a 49% decline in non-subscriber traffic between January 2024 and January 2025 in company statements accompanying their February antitrust lawsuit.

The decline coincided with AI Overviews answering homework and study questions that previously drove traffic to educational sites. Chegg’s lawsuit alleges that Google used content from educational publishers to train AI systems that now compete directly with those publishers.

Chegg’s case is a warning sign for educational content creators: If AI systems can successfully replace structured learning platforms, what’s the future for smaller publishers?

Reduced Visibility For Top Ranking Sites – Advanced Web Ranking

AI Overviews are dense and tall, impacting the visibility of organic results.

Advanced Web Ranking found that across 8,000 keywords, AI Overviews average around 169 words and include about seven links when expanded.

Once expanded, the first organic result often appears about 1,674px down the page. That’s well below the fold on most screens, reducing visibility for even top-ranked pages.

Branded Searches: The Surprising Exception

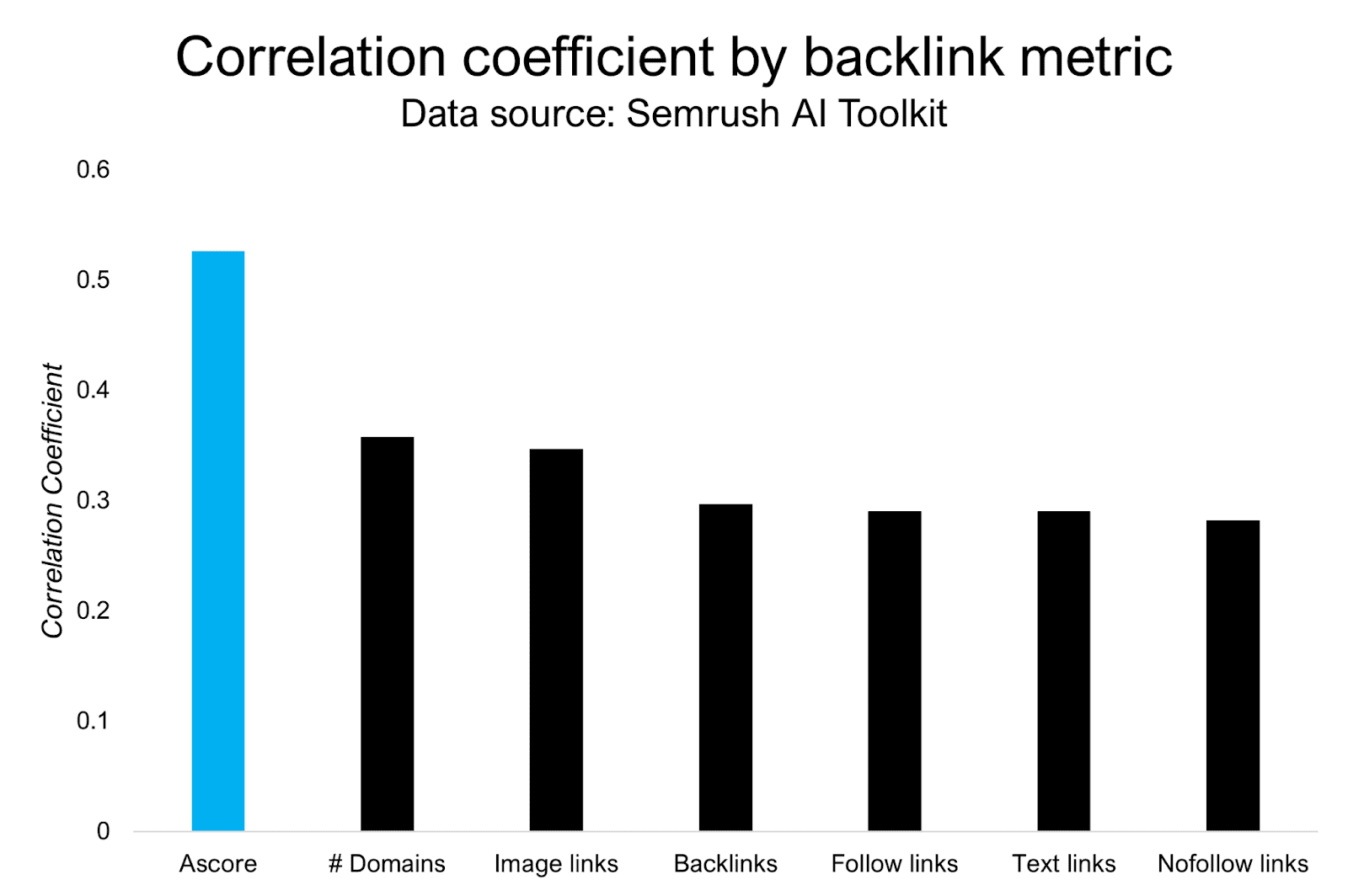

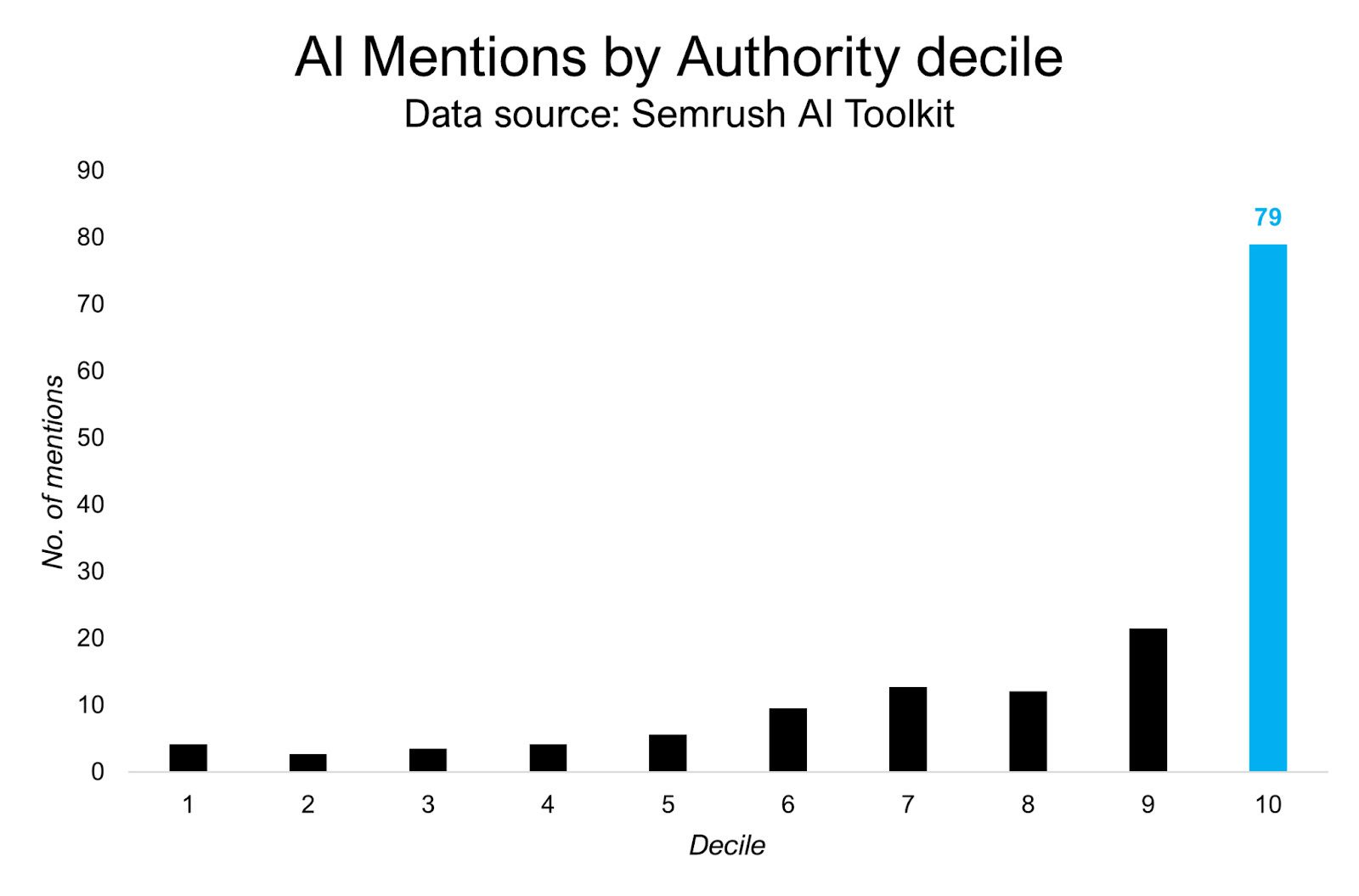

While most query types are seeing traffic declines, branded searches show the opposite trend. According to Amsive’s research, branded queries with AI Overviews see an 18% increase in click-through rate.

Several related factors likely contribute to this brand advantage. When AI Overviews mention specific brands, it conveys authority and credibility in ways that generic content can’t replicate.

People seeing their preferred brand in an AI Overview may be more likely to click through to the official site. Additionally, AI Overviews for branded searches often include rich information like store hours, contact details, and direct links, making it easier for users to find what they need.

This pattern has strategic implications as companies that have invested in brand building have a strong defense against AI disruption. The 18% increase in branded terms versus a 34-46% decrease in generic terms (as shown above) creates a performance gap that will likely impact marketing budgets.

The brand advantage extends beyond direct brand searches. Queries combining brand names with product categories show smaller traffic declines than purely generic searches. This suggests that even partial brand recognition provides some protection against AI Overview disruption. Companies with strong brands can leverage this by ensuring their brand appears naturally in relevant conversations and content.

This brand premium creates a two-tier internet, where established brands flourish while smaller content creators struggle financially. The impact on information diversity and market competition is troubling.

Google’s Defense: Stable Traffic, Better Quality

Google maintains a consistent three-part defense of AI Overviews:

- Increased search usage.

- Improved click quality.

- Stable overall traffic.

The company frames AI Overviews as enhancing rather than replacing traditional search, though this narrative faces increasing skepticism from publishers experiencing traffic declines.

The company’s blog post from May, introducing the global expansion, stated:

“AI Overviews is driving over 10% increase in usage of Google for the types of queries that show AI Overviews. This means that once people use AI Overviews, they are coming to do more of these types of queries.”

Although this statistic shows a rise in Google Search engagement, it’s sparked intense debate and skepticism in the search and publishing worlds. Many experts agree that a 10% boost in AI Overview-driven searches could be due to changes in user behavior, but also warn that higher search volumes don’t automatically mean more traffic for content publishers.

A number of LinkedIn industry voices have publicly pushed back on Google’s 10% usage increase narrative. For example, Devansh Parashar writes:

“Google’s claim that AI Overviews have driven 10% more searches masks a troubling trend. Data from independent research firms, such as Pew, show that a majority of users do not click beyond the AI Overview— a figure that suggests Google’s LLM layer is quietly eating the web’s traffic pie.”

Similarly, Trevin Shirey points out concerns about the gap between increased engagement with search queries and the actual traffic publishers see:

“Although Google reports a surge in usage, many publishers are experiencing declines in organic click-through rates. This signals a silent crisis where users get quick answers from AI, but publishers are left behind.”

Google’s claim about increased usage needs to be read carefully. The increase is only for certain types of queries that show AI overviews, not overall search volume.

If users have to make multiple searches to find information they could have gotten in one click, their overall usage might go up, but their satisfaction could actually decrease.

In an August blog post, Google’s head of search, Liz Reid, claimed the volume of clicks from Google search to websites had been “relatively stable” year-over-year.

Reid also asserted that click quality had improved:

“With AI Overviews, people are searching more and asking new questions that are often longer and more complex. In addition, with AI Overviews people are seeing more links on the page than before. More queries and more links mean more opportunities for websites to surface and get clicked.”

A Google spokesperson told the BBC:

“More than any other company, Google prioritises sending traffic to the web, and we continue to send billions of clicks to websites every day.”

Google’s developer documentation states:

“We’ve seen that when people click from search results pages with AI Overviews, these clicks are higher quality (meaning, users are more likely to spend more time on the site).”

Publishers are understandably concerned and question the differences between Google’s description of stability and the actual data showing otherwise.

Jason Kint, CEO of Digital Content Next, notes:

“Since Google rolled out AI Overviews in your search results, median year-over-year referral traffic from Google Search to premium publishers down 10%.”

Kint’s data shatters Google’s carefully crafted image of stability, exposing what many publishers already suspect: The search giant’s promises are increasingly at odds with the realities reflected in their analytics dashboards and revenue reports.

The argument that higher-quality clicks are more valuable doesn’t provide much comfort when revenue is falling short. Even if engagement increases, losing such a large portion of clicks is a serious challenge for many ad-supported businesses.

Echoing these concerns, SEO Lead Jeff Domansky states:

“For publishers, AI Overviews are a direct hit to traffic and revenue models built around clicks and pageviews.”

Although Google claims that AI Overview clicks are of higher quality, many industry experts are skeptical.

Lily Ray, Vice President, SEO Strategy & Research at Amsive, highlights the lack of quality control on Google’s end:

“Since Google’s AI Overviews were launched, I (and many others) have shared dozens of examples of spam, misinformation, and inaccurate, biased, or incomplete results appearing in live AI Overview responses.”

And SEO specialist Barry Adams raises concerns about the quality and sustainability:

“Google’s AI Overviews are terrible at quoting the right sources… There is nothing intelligent about LLMs. They’re advanced word predictors, and using them for any purpose that requires a basis in verifiable facts – like search queries – is fundamentally wrong.”

Adams highlights a philosophical contradiction in AI Overviews: By relying on probabilistic language models to answer factual questions, Google may be misaligning technology with user needs.

This range of voices highlights a growing disconnect between Google’s hopeful engagement claims and the tough realities many publishers are facing as their referral traffic and revenue decrease.

Google hasn’t provided specific metrics defining “higher quality.” Publishers can’t verify these claims without access to comparative engagement data from AI Overview versus traditional search traffic.

Legal Challenges Mount

Publishers are seeking relief through regulatory and legal channels. In July, the Independent Publishers Alliance, tech justice nonprofit Foxglove, and the campaign group Movement for an Open Web filed a complaint with the UK’s Competition and Markets Authority. They claim that Google AI Overviews misuse publisher content, causing harm to newspapers.

The complaint urges the CMA to impose temporary measures that prevent Google from using publisher content in AI-generated responses without compensation.

It’s still unclear whether courts and regulators, which often move at a slow pace, can take action quickly enough to help publishers before market forces make any potential solutions irrelevant. A classic example of regulation trying to keep up with technological advancements.

The rapid growth of AI Overviews suggests that market realities may outstrip legal solutions.

Publisher Adaptations: Beyond Google Dependence

With threats looming, publishers are rushing to cut their reliance on Google. David Higgerson shares Reach’s approach in a statement to the BBC:

“We need to go and find where audiences are elsewhere and build relationships with them there. We’ve got millions of people who receive our alerts on WhatsApp. We’ve built newsletters.”

Instead of creating content for Google discovery, publishers need to develop direct relationships. Email newsletters, mobile apps, and podcast subscriptions provide traffic sources that aren’t affected by AI Overview disruptions.

Stuart Forrest stresses the importance of quality as a key differentiator:

“We need to make sure that it’s us being cited and not our rivals. Things like writing good quality content… it’s amazing the number of publishers that just give up on that.”

However, quality alone may not be enough if users never leave Google’s search results page. Publishers also need to master AI Overview optimization and understand how to make the most of remaining click opportunities.

Higgerson notes:

“Google doesn’t give us a manual on how to do it. We have to run tests and optimise copy in a way that doesn’t damage the primary purpose of the content.”

Another path that’s emerging is content licensing. Following News Corp and The Atlantic partnering with OpenAI, more publishers are exploring direct licensing relationships. These deals typically provide upfront payments and ongoing royalties for content usage in AI training, though terms remain confidential.

What We Don’t Know

There are still many uncertainties. The long-term trajectory of AI Mode, for example, could alter current patterns.

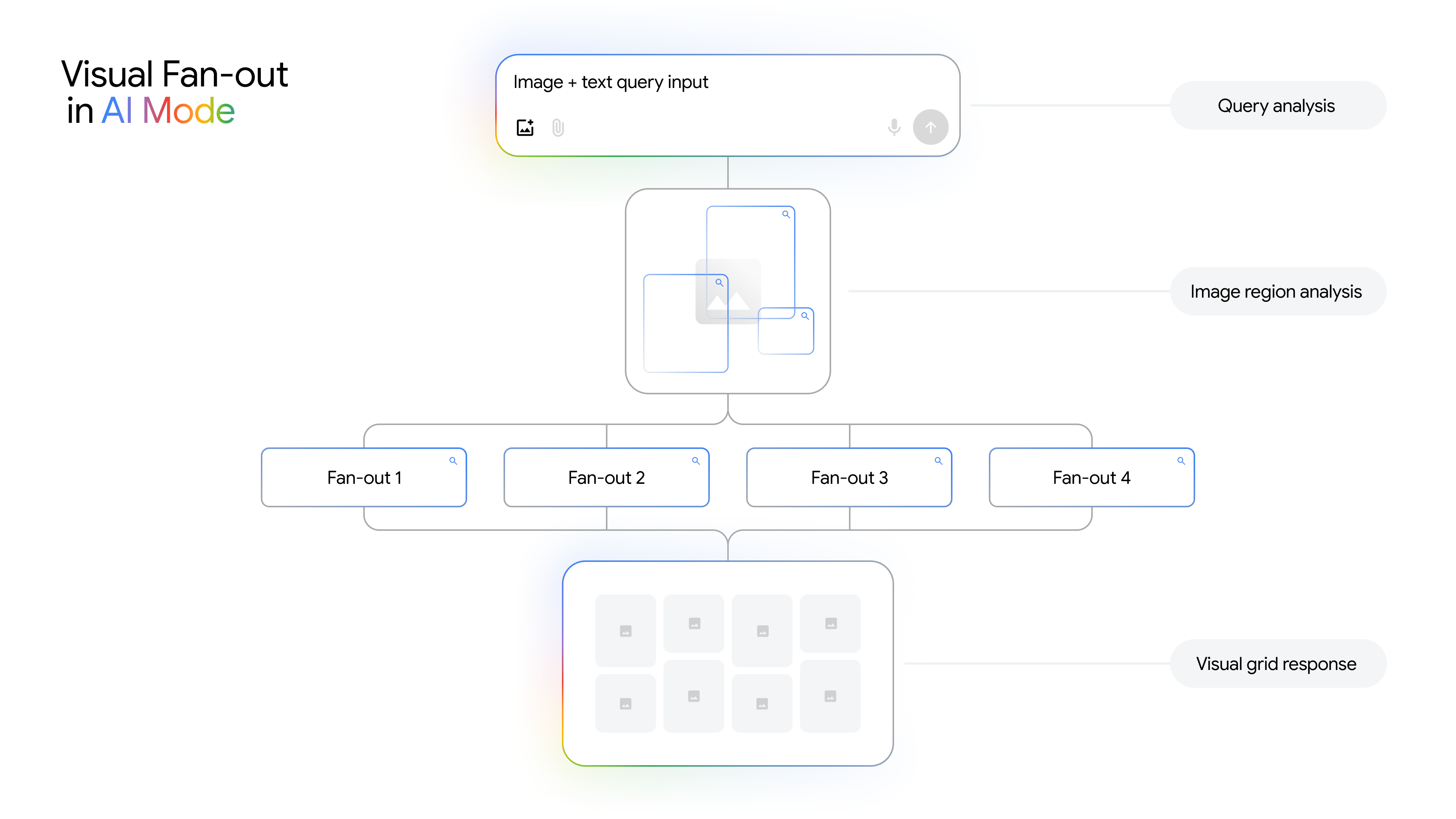

AI Mode

Google’s AI Mode may pose an even bigger threat than AI Overviews. This new interface displays search results in a conversational format instead of 10 blue links. Searchers have a back-and-forth with AI, with occasional reference links thrown in.

For publishers already struggling with AI-powered overviews, AI Mode could wipe out the rest of their traffic.

International Impact

The international effects outside English-language markets remain unmeasured. Since AI Overviews are available in over 200 countries and 40 languages, the impact likely varies by market. Factors like cultural differences in search behavior, language complexity, local competition dynamics, and varying digital literacy levels could lead to vastly different outcomes.

Most current research focuses on English-language markets in developed economies.

Content Creation

The feedback loop between AI Overviews and content creation could reshape what content gets produced and how information flows online.

If publishers stop creating certain types of content due to traffic losses, will AI Overview quality suffer as training data becomes stale?

Looking Ahead: Expanded AI Features

Google intends to continue expanding AI features despite mounting publisher concerns and legal challenges.

The company’s roadmap includes AI Mode international expansion and enhanced interactive features, including voice-activated AI conversations and multi-turn query refinement. Publishers should prepare for continued evolution rather than expecting stability in search traffic patterns.

Regulatory intervention may force greater transparency in the coming months. The Independent Publishers Alliance’s EU complaint requests detailed impact assessments and content usage documentation.

These proceedings could establish precedents affecting how AI systems can use publisher content.

Final Thoughts

The question isn’t whether AI Overviews affect traffic. Evidence overwhelmingly confirms they do. The question is how publishers adapt business models while maintaining sustainable operations.

The web is at a turning point, where the core agreement is being rewritten by the platforms that once promoted the open internet. Publishers who don’t acknowledge this change are jeopardizing their relevance in an AI-driven future.

Those who understand the impact, invest in brand building, and diversify traffic sources will be best positioned for success.

More Resources:

Featured Image: Roman Samborskyi/Shutterstock