This edited excerpt is from Ethical AI in Marketing by Nicole Alexander ©2025 and is reproduced and adapted with permission from Kogan Page Ltd.

Recent research highlights intriguing paradoxes in consumer attitudes toward AI-driven marketing. Consumers encounter AI-powered marketing interactions frequently, often without realizing it.

According to a 2022 Pew Research Center survey, 27% of Americans reported interacting with AI at least several times a day, while another 28% said they interact with AI about once a day or several times a week (Pew Research Center, 2023).

As AI adoption continues to expand across industries, marketing applications – from personalized recommendations to chatbots – are increasingly shaping consumer experiences.

According to McKinsey & Company (2023), AI-powered personalization can deliver five to eight times the ROI on marketing spend and significantly boost customer engagement.

In this rapidly evolving landscape, trust in AI has become a crucial factor for successful adoption and long-term engagement.

The World Economic Forum underscores that “trust is the foundation for AI’s widespread acceptance,” and emphasizes the necessity for companies to adopt self-governance frameworks that prioritize transparency, accountability, and fairness (World Economic Forum, 2025).

The Psychology Of AI Trust

Consumer trust in AI marketing systems operates fundamentally differently from traditional marketing trust mechanisms.

Where traditional marketing trust builds through brand familiarity and consistent experiences, AI trust involves additional psychological dimensions related to automation, decision-making autonomy, and perceived control.

Understanding these differences is crucial for organizations seeking to build and maintain consumer trust in their AI marketing initiatives.

Cognitive Dimensions

Neurological studies offer intriguing insights into how our brains react to AI. Research from Stanford University reveals that we process information differently when interacting with AI-powered systems.

For example, when evaluating AI-generated product recommendations, our brains activate distinct neural pathways compared to those triggered by recommendations from a human salesperson.

This crucial difference highlights the need for marketers to understand how consumers cognitively process AI-driven interactions.

Three cognitive factors have emerged as critical influences on AI trust: perceived control, understanding of mechanisms, and value recognition.

Emotional Dimensions

Consumer trust in AI marketing is deeply influenced by emotional factors, which often override logical evaluations. These emotional responses shape trust in several key ways:

- Anxiety and privacy concerns: Despite AI’s convenience, 67% of consumers express anxiety about how their data is used, reflecting persistent privacy concerns (Pew Research Center, 2023). This tension creates a paradoxical relationship where consumers benefit from AI-driven marketing while simultaneously fearing its potential misuse.

- Trust through repeated interactions: Emotional trust in AI systems develops iteratively through repeated, successful interactions, particularly when systems demonstrate high accuracy, consistent performance, and empathetic behavior. Experimental studies show that emotional and behavioral trust accumulate over time, with early experiences strongly shaping later perceptions. In repeated legal decision-making tasks, users exhibited growing trust toward high-performing AI, with initial interactions significantly influencing long-term reliance (Kahr et al., 2023). Emotional trust can follow nonlinear pathways – dipping after failures but recovering through empathetic interventions or improved system performance (Tsumura and Yamada, 2023).

- Honesty and transparency in AI content: Consumers increasingly value transparency regarding AI-generated content. Companies that openly disclose when AI has been used – for instance, in creating product descriptions – can empower customers by helping them feel more informed and in control of their choices. Such openness often strengthens customer trust and fosters positive perceptions of brands actively embracing transparency in their marketing practices.

Cultural Variations In AI Trust

The global nature of modern marketing requires a nuanced understanding of cultural differences in AI trust. These variations arise from deeply ingrained societal values, historical relationships with technology, and norms around privacy, automation, and decision-making.

For marketers leveraging AI in customer engagement, recognizing these cultural distinctions is crucial for developing trustworthy AI-driven campaigns, personalized experiences, and region-specific data strategies.

Diverging Cultural Trust In AI

Research reveals significant disparities in AI trust across global markets. A KPMG (2023) global survey found that 72% of Chinese consumers express trust in AI-driven services, while in the U.S. trust stands at just 32%.

This stark difference reflects broader societal attitudes toward government-led AI innovation, data privacy concerns, and varying historical experiences with technology.

Another study found that AI-related job displacement fears vary greatly by region. In countries like the U.S., India, and Saudi Arabia, consumers express significant concerns about AI replacing human roles in professional sectors such as medicine, finance, and law.

In contrast, consumers in Japan, China, and Turkey exhibit lower levels of concern, signaling a higher acceptance of AI in professional settings (Quantum Zeitgeist, 2025).

This insight is invaluable for marketers crafting AI-driven customer service, financial tools, and healthcare applications, as perceptions of AI reliability and utility vary significantly by region.

As trust in AI diverges globally, understanding the role of cultural privacy norms becomes essential for marketers aiming to build trust through AI-driven services.

Cultural Privacy Targeting In AI Marketing

As AI-driven marketing becomes more integrated globally, the concept of cultural privacy targeting – the practice of aligning data collection, privacy messaging, and AI transparency with cultural values – has gained increasing importance. Consumer attitudes toward AI adoption and data privacy are highly regional, requiring marketers to adapt their strategies accordingly.

In more collectivist societies like Japan, AI applications that prioritize societal or community well-being are generally more accepted than those centered on individual convenience.

This is evident in Japan’s Society 5.0 initiative – a national vision introduced in 2016 that seeks to build a “super-smart” society by integrating AI, IoT, robotics, and big data to solve social challenges such as an aging population and strains on healthcare systems.

Businesses are central to this transformation, with government and industry collaboration encouraging companies to adopt digital technologies not just for efficiency, but to contribute to public welfare.

Across sectors – from manufacturing and healthcare to urban planning – firms are reimagining business models to align with societal needs, creating innovations that are both economically viable and socially beneficial.

In this context, AI is viewed more favorably when positioned as a tool to enhance collective well-being and address structural challenges. For instance, AI-powered health monitoring technologies in Japan have seen increased adoption when positioned as tools that contribute to broader public health outcomes.

Conversely, Germany, as an individualistic society with strong privacy norms and high uncertainty avoidance, places significant emphasis on consumer control over personal data. The EU’s GDPR and Germany’s support for the EU Artificial Intelligence Act (adopted in 2024) reinforce expectations for robust transparency, fairness, and user autonomy in AI systems.

According to the OECD (2024), campaigns in Germany that clearly communicate data usage, safeguard individual rights, and provide opt-in consent mechanisms experience higher levels of public trust and adoption.

These contrasting cultural orientations illustrate the strategic need for contextualized AI marketing – ensuring that data transparency and privacy are not treated as one-size-fits-all, but rather as culture-aware dimensions that shape trust and acceptance.

Hofstede’s (2011) cultural dimensions theory offers further insights into AI trust variations:

- High individualism + high uncertainty avoidance (e.g., Germany, U.S.) → Consumers demand transparency, data protection, and human oversight in AI marketing.

- Collectivist cultures (e.g., Japan, China, South Korea) → AI is seen as a tool that enhances societal progress, and data-sharing concerns are often lower when the societal benefits are clear (Gupta et al., 2021).

For marketers deploying AI in different regions, these insights help determine which features to emphasize:

- Control and explainability in Western markets (focused on privacy and autonomy).

- Seamless automation and societal progress in East Asian markets (focused on communal benefits and technological enhancement).

Understanding the cultural dimensions of AI trust is key for marketers crafting successful AI-powered campaigns.

By aligning AI personalization efforts with local cultural expectations and privacy norms, marketers can improve consumer trust and adoption in both individualistic and collectivist societies.

This culturally informed approach helps brands tailor privacy messaging and AI transparency to the unique preferences of consumers in various regions, building stronger relationships and enhancing overall engagement.

Avoiding Overgeneralization In AI Trust Strategies

While cultural differences are clear, overgeneralizing consumer attitudes can lead to marketing missteps.

A 2024 ISACA report warns against rigid AI segmentation, emphasizing that trust attitudes evolve with:

- Media influence (e.g., growing fears of AI misinformation).

- Regulatory changes (e.g., the EU AI Act’s impact on European consumer confidence).

- Generational shifts (younger, digitally native consumers are often more AI-trusting, regardless of cultural background).

For AI marketing, this highlights the need for flexible, real-time AI trust monitoring rather than static cultural assumptions.

Marketers should adapt AI trust-building strategies based on region-specific consumer expectations:

- North America and Europe: AI explainability, data transparency, and ethical AI labels increase trust.

- East Asia: AI-driven personalization and seamless automation work best when framed as benefiting society.

- Muslim-majority nations and ethical consumer segments: AI must be clearly aligned with fairness and ethical governance.

- Global emerging markets: AI trust is rapidly increasing, making these markets prime opportunities for AI-driven financial inclusion and digital transformation.

Data from the 2023 KPMG International survey underscore how cultural values such as collectivism, uncertainty avoidance, and openness to innovation shape public attitudes toward AI.

For example, trust levels in Germany and Japan remain low, reflecting high uncertainty avoidance and strong privacy expectations, while countries like India and Brazil exhibit notably higher trust, driven by optimism around AI’s role in societal and economic progress.

Measuring Trust In AI Marketing Systems

As AI becomes central to how brands engage customers – from personalization engines to chatbots – measuring consumer trust in these systems is no longer optional. It’s essential.

And yet, many marketing teams still rely on outdated metrics like Net Promoter Score (NPS) or basic satisfaction surveys to evaluate the impact of AI. These tools are helpful for broad feedback but miss the nuance and dynamics of trust in AI-powered experiences.

Recent research, including work from MIT Media Lab (n.d.) and leading behavioral scientists, makes one thing clear: Trust in AI is multidimensional, and it’s shaped by how people feel, think, and behave in real time when interacting with automated systems.

Traditional metrics like NPS and CSAT (Customer Satisfaction Score) tell you if a customer is satisfied – but not why they trust (or don’t trust) your AI systems.

They don’t account for how transparent your algorithm is, how well it explains itself, or how emotionally resonant the interaction feels. In AI-driven environments, you need a smarter way to understand trust.

A Modern Framework For Trust: What CMOs Should Know

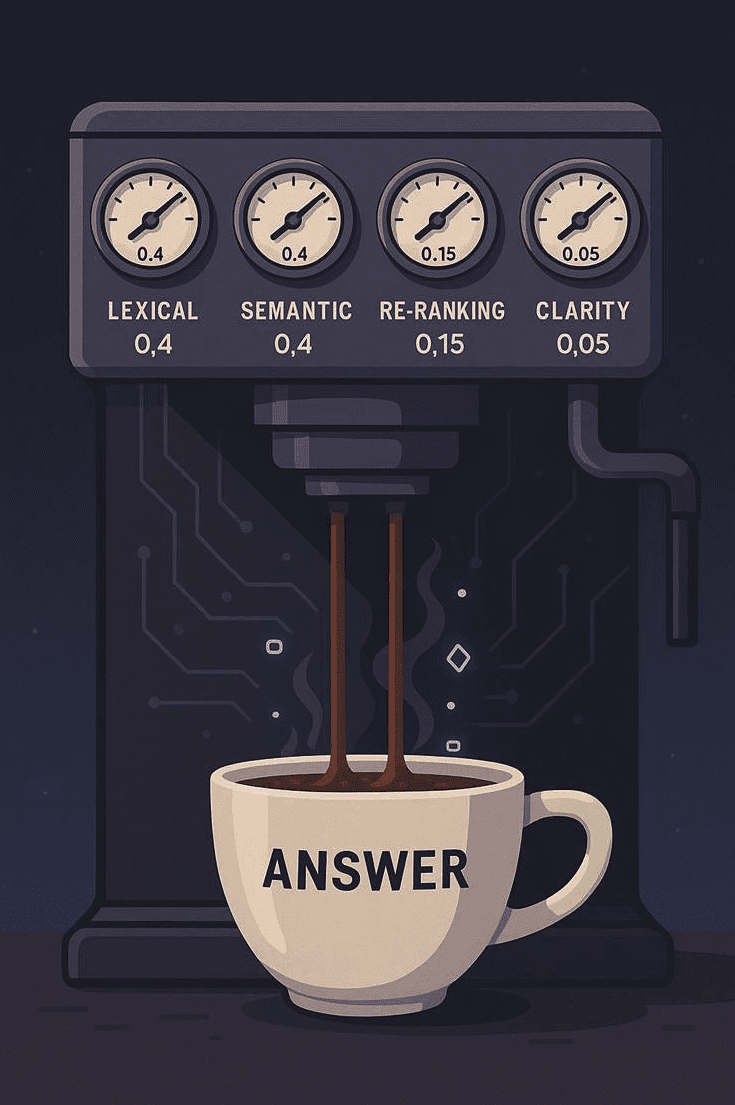

MIT Media Lab’s work on trust in human-AI interaction offers a powerful lens for marketers. It breaks trust into three key dimensions:

Behavioral Trust

This is about what customers do, not what they say. When customers engage frequently, opt in to data sharing, or return to your AI tools repeatedly, that’s a sign of behavioral trust. How to track it:

- Repeat engagement with AI-driven tools (e.g., product recommenders, chatbots).

- Opt-in rates for personalization features.

- Drop-off points in AI-led journeys.
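The behavioral signals above can be computed from an ordinary interaction log. The Python sketch below assumes a hypothetical event schema (an `Event` with `user_id`, `kind`, and `step` fields) and illustrative event names; real analytics platforms will name and structure these events differently, and the sample log is made up.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Event:
    """One logged interaction. Hypothetical schema, not any real analytics API."""
    user_id: str
    kind: str      # assumed values: "ai_session", "opt_in_prompt", "opt_in", "journey_step"
    step: int = 0  # journey step index; only meaningful for "journey_step" events


def repeat_engagement_rate(events: list[Event]) -> float:
    """Share of users with at least one AI session who returned for another."""
    sessions = Counter(e.user_id for e in events if e.kind == "ai_session")
    if not sessions:
        return 0.0
    return sum(1 for n in sessions.values() if n >= 2) / len(sessions)


def opt_in_rate(events: list[Event]) -> float:
    """Users who opted in to personalization, divided by users who were asked."""
    asked = {e.user_id for e in events if e.kind == "opt_in_prompt"}
    opted = {e.user_id for e in events if e.kind == "opt_in"} & asked
    return len(opted) / len(asked) if asked else 0.0


def drop_off_by_step(events: list[Event]) -> dict[int, int]:
    """Number of users whose furthest step in an AI-led journey was each step."""
    furthest: dict[str, int] = {}
    for e in events:
        if e.kind == "journey_step":
            furthest[e.user_id] = max(furthest.get(e.user_id, 0), e.step)
    return dict(Counter(furthest.values()))


# Made-up sample log for illustration only.
log = [
    Event("a", "ai_session"), Event("a", "ai_session"), Event("b", "ai_session"),
    Event("a", "opt_in_prompt"), Event("b", "opt_in_prompt"), Event("a", "opt_in"),
    Event("a", "journey_step", 1), Event("a", "journey_step", 3),
    Event("b", "journey_step", 1),
]
print(repeat_engagement_rate(log))  # 0.5
print(opt_in_rate(log))             # 0.5
print(drop_off_by_step(log))        # {3: 1, 1: 1}
```

When many users share the same furthest step partway through a journey, that step is a likely point where the AI-led experience is losing them.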

Emotional Trust

Trust is not just rational; it’s emotional. The tone of a voice assistant, the empathy in a chatbot’s reply, or how “human” a recommendation feels all play into emotional trust. How to track it:

- Sentiment analysis from chat transcripts and reviews.

- Customer frustration or delight signals from support tickets.

- Tone and emotional language in user feedback.
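Sentiment signals are usually produced by a trained model, but the arithmetic behind a simple score is easy to show. This minimal Python sketch uses a tiny hypothetical word list in place of a real sentiment model; `sentiment_score` and `frustration_share` are illustrative names, and the -0.5 frustration threshold is an assumption.

```python
import re

# Tiny illustrative word lists (hypothetical). A production system would use a
# trained sentiment model or an established lexicon rather than these sets.
POSITIVE = {"helpful", "great", "thanks", "easy", "love", "clear"}
NEGATIVE = {"confusing", "useless", "annoying", "wrong", "creepy", "frustrated"}


def sentiment_score(text: str) -> float:
    """(positive hits - negative hits) / total hits, in [-1, 1]; 0.0 if no hits."""
    words = re.findall(r"[a-z']+", text.lower())
    pos = sum(w in POSITIVE for w in words)
    neg = sum(w in NEGATIVE for w in words)
    return (pos - neg) / (pos + neg) if pos + neg else 0.0


def frustration_share(messages: list[str], threshold: float = -0.5) -> float:
    """Share of messages scoring at or below the threshold: a crude frustration signal."""
    if not messages:
        return 0.0
    return sum(sentiment_score(m) <= threshold for m in messages) / len(messages)


# Made-up chat snippets for illustration only.
transcripts = [
    "Thanks, that was really helpful",
    "This bot is confusing and useless",
    "What are your opening hours?",
]
print([sentiment_score(t) for t in transcripts])  # [1.0, -1.0, 0.0]
print(frustration_share(transcripts))
```

Tracking the share of frustrated messages over time, rather than a single average, makes sudden spikes after a product change easier to spot.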

Cognitive Trust

This is where understanding meets confidence. When your AI explains itself clearly – or when customers understand what it can and can’t do – they’re more likely to trust the output. How to track it:

- Feedback on explainability (“I understood why I got this recommendation”).

- Click-through or acceptance rates of AI-generated content or decisions.

- Post-interaction surveys that assess clarity.
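The cognitive-trust signals reduce to two simple calculations: an acceptance rate for AI output and an average clarity rating from post-interaction surveys. The Python sketch below assumes a 1 to 5 Likert scale for a statement like “I understood why I got this recommendation”; the function names and the 0..1 rescaling are illustrative choices, not a standard metric, and the numbers are made up.

```python
from statistics import mean


def acceptance_rate(shown: int, accepted: int) -> float:
    """Share of AI-generated suggestions or decisions that users accepted."""
    if shown <= 0:
        return 0.0
    if not 0 <= accepted <= shown:
        raise ValueError("accepted must be between 0 and shown")
    return accepted / shown


def clarity_index(ratings: list[int], scale_max: int = 5) -> float:
    """Mean Likert rating rescaled so that 1..scale_max maps onto 0..1."""
    if not ratings:
        return 0.0
    if any(not 1 <= r <= scale_max for r in ratings):
        raise ValueError("ratings must be on the 1..scale_max scale")
    return (mean(ratings) - 1) / (scale_max - 1)


# Made-up example numbers.
print(acceptance_rate(shown=200, accepted=58))  # 0.29
print(clarity_index([5, 4, 4, 2]))              # 0.6875
```

Rescaling to 0..1 puts the clarity score on the same footing as rates such as acceptance and opt-in, which makes them easier to chart side by side.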

Today’s marketers are moving toward real-time trust dashboards – tools that monitor how users interact with AI systems across channels. These dashboards track behavior, sentiment, and comprehension all at once.

According to MIT Media Lab researchers, combining these signals provides a richer picture of trust than any single survey can. It also gives teams the agility to address trust breakdowns as they happen – like confusion over AI-generated content or friction in AI-powered customer journeys.
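One way to combine behavioral, emotional, and cognitive signals into a single dashboard indicator is a weighted average tracked against a rolling baseline. The Python sketch below is an illustration under stated assumptions, not the MIT Media Lab method: the equal default weights, the window length, and the 0.1 drop threshold are hypothetical choices, and each input is assumed to be normalized to 0..1 upstream.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class TrustSignals:
    """One period's signals, each assumed to be normalized to 0..1 upstream."""
    behavioral: float  # e.g. opt-in or repeat-engagement rate
    emotional: float   # e.g. mean sentiment rescaled from [-1, 1] to [0, 1]
    cognitive: float   # e.g. clarity index or acceptance rate


def composite(s: TrustSignals, weights: tuple[float, float, float] = (1.0, 1.0, 1.0)) -> float:
    """Weighted mean of the three dimensions; the weights are a hypothetical choice."""
    wb, we, wc = weights
    return (wb * s.behavioral + we * s.emotional + wc * s.cognitive) / (wb + we + wc)


class TrustMonitor:
    """Flags a possible trust breakdown when the latest composite score falls more
    than `drop` below the average of the previous `window` scores."""

    def __init__(self, window: int = 7, drop: float = 0.1) -> None:
        self.history: deque[float] = deque(maxlen=window)
        self.drop = drop

    def update(self, s: TrustSignals) -> bool:
        score = composite(s)
        # With no history yet, the first score is its own baseline (never alerts).
        baseline = sum(self.history) / len(self.history) if self.history else score
        self.history.append(score)
        return score < baseline - self.drop


# Made-up daily signals for illustration only.
monitor = TrustMonitor(window=3, drop=0.1)
days = [
    TrustSignals(0.60, 0.70, 0.80),
    TrustSignals(0.62, 0.70, 0.78),
    TrustSignals(0.40, 0.50, 0.60),  # e.g. after a confusing AI-generated message
]
print([monitor.update(d) for d in days])  # [False, False, True]
```

Equal weights keep the score easy to explain. A team could tune them, or alert on each dimension separately, so that a fall in cognitive trust is not masked by stable engagement.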

Customers don’t expect AI to be perfect. But they do expect it to be honest and understandable. That’s why brands should:

- Label AI-generated content clearly.

- Explain how decisions like pricing, recommendations, or targeting are made.

- Give customers control over data and personalization.

Building trust is less about tech perfection and more about perceived fairness, clarity, and respect.

Measuring that trust means going deeper than satisfaction. Use behavioral, emotional, and cognitive signals to track trust in real time – and design AI systems that earn it.

SEJ readers can buy the full book with an exclusive 25% discount and free shipping to the US and UK: use promo code ‘SEJ25’ at koganpage.com.

References

- Hofstede, G (2011) Dimensionalizing Cultures: The Hofstede Model in Context, Online Readings in Psychology and Culture, 2 (1), scholarworks.gvsu.edu/cgi/viewcontent.cgi?article=1014&context=orpc (archived at https://perma.cc/B7EP-94CQ)

- ISACA (2024) AI Ethics: Navigating Different Cultural Contexts, December 6, www.isaca.org/resources/news-and-trends/isaca-now-blog/2024/ai-ethics-navigating-different-cultural-contexts (archived at https://perma.cc/3XLA-MRDE)

- Kahr, P K, Meijer, S A, Willemsen, M C, and Snijders, C C P (2023) It Seems Smart, But It Acts Stupid: Development of Trust in AI Advice in a Repeated Legal Decision-Making Task, Proceedings of the 28th International Conference on Intelligent User Interfaces. doi.org/10.1145/3581641.3584058 (archived at https://perma.cc/SZF8-TSK2)

- KPMG International and The University of Queensland (2023) Trust in Artificial Intelligence: A Global Study, assets.kpmg.com/content/dam/kpmg/au/pdf/2023/trust-in-ai-global-insights-2023.pdf (archived at https://perma.cc/MPZ2-UWJY)

- McKinsey & Company (2023) The State of AI in 2023: Generative AI’s Breakout Year, www.mckinsey.com/capabilities/quantumblack/our-insights/the-state-of-ai-in-2023-generative-ais-breakout-year (archived at https://perma.cc/V29V-QU6R)

- MIT Media Lab (n.d.) Research Projects, accessed April 8, 2025

- OECD (2024) OECD Artificial Intelligence Review of Germany, www.oecd.org/en/publications/2024/06/oecd-artificial-intelligence-review-of-germany_c1c35ccf.html (archived at https://perma.cc/5DBS-LVLV)

- Pew Research Center (2023) Public Awareness of Artificial Intelligence in Everyday Activities, February, www.pewresearch.org/wp-content/uploads/sites/20/2023/02/PS_2023.02.15_AI-awareness_REPORT.pdf (archived at https://perma.cc/V3SE-L2BM)

- Quantum Zeitgeist (2025) How Cultural Differences Shape Fear of AI in the Workplace, Quantum News, February 22, quantumzeitgeist.com/how-cultural-differences-shape-fear-of-ai-in-the-workplace-a-global-study-across-20-countries/ (archived at https://perma.cc/3EFL-LTKM)

- Tsumura, T and Yamada, S (2023) Making an Agent’s Trust Stable in a Series of Success and Failure Tasks Through Empathy, arXiv. arxiv.org/abs/2306.09447 (archived at https://perma.cc/L7HN-B3ZC)

- World Economic Forum (2025) How AI Can Move from Hype to Global Solutions, www.weforum.org/stories/2025/01/ai-transformation-industries-responsible-innovation/ (archived at https://perma.cc/5ALX-MDXB)

Featured Image: Rawpixel.com/Shutterstock