“If AI can write, why are we still paying writers?” If you’re a CMO or senior manager working to a budget, you’ve probably already had a version of this conversation. It’s a seductive idea. After all, humans are expensive and can take hours or even days to write a single article. So, why not replace them with clever machines and watch costs go down while productivity goes up?

It’s understandable. Buffeted by years of high inflation, high interest rates, and disrupted supply chains, organizations around the world are cutting costs wherever they can. These days, instead of “cost cutting,” CFOs and executive teams prefer the term “cost transformation,” a new piece of jargon for the same old problem.

Whatever you call it, marketing is one department that is definitely feeling the impact. According to Gartner, in 2020, the average marketing budget was 11% of overall company revenue. By 2023, this had fallen to 9.1%. Today, the average budget is 7.7%.

Of course, some organizations will have made these cuts on the assumption that AI makes larger teams and larger budgets unnecessary. I’ve already seen some companies slash their content teams to the bone, no doubt believing that all you need is a few people capable of crafting a decent prompt. Yet a different Gartner study found that 59% of CMOs say they lack the budget to execute their 2025 strategy. I guess they didn’t get the memo.

Meanwhile, some other organizations refuse to let AI near their content at all, for a variety of reasons. They might have concerns over quality control, data privacy, complexity, and so on. Or perhaps they’re hanging onto the belief that this AI thing is a fad or a bubble, and they don’t want to implement something that might come crashing down at any moment.

Both camps likely believe they’ve adopted the correct, rational, financially prudent approach to AI. Both are dangerously wrong. AI might not be the solution, but it’s also not the problem.

Beeching’s Axe

Spanish-born philosopher George Santayana once wrote: “Those who cannot remember the past are condemned to repeat it.” With that in mind, let me share a cautionary tale.

In the 1960s, British Railways (later British Rail) made one of the most short-sighted decisions in transport history. With the railway network hemorrhaging money, the Conservative Government appointed Dr. Richard Beeching, a physicist from ICI with no transport experience, as the new chairman of the British Transport Commission, tasked with cutting costs and making the railways profitable.

Beeching’s solution was simple: do away with all unprofitable routes, identified by assessing the passenger numbers and operational costs of each route in isolation. Between 1963 and 1970, Beeching’s cost-cutting axe led to the closure of 2,363 stations and over 5,000 miles of track (~30% of the rail network), with the loss of 67,700 jobs.

Decades later, the country is spending billions rebuilding some of those same routes. As it turned out, many of those “unprofitable” routes were vital not only to the health of the wider rail network, but also to the communities in those regions in ways that Beeching’s team of bean counters simply didn’t have the imagination to value.

I’m telling you this because, right now, a lot of businesses are carrying out their own version of the Beeching cuts.

The Data-Led Trap

There’s a crucial distinction between being data-led and data-informed. Understanding this could be the difference between implementing a sound content production strategy and repeating Beeching’s catastrophe.

Data-led thinking treats the available data as the complete picture. It looks for a pattern and adopts it as an undeniable truth that points towards a clear course of action. “AI generates content for a fraction of our current costs. Therefore, we should replace the writers.”

Data-informed thinking sets out to understand what might be behind the pattern, extrapolate what’s missing from the picture, and stress-test the conclusions. The data becomes a starting point for inquiry, not an endpoint for decisions. “What value isn’t captured in this data? What would replacing our writers with AI actually mean for the effectiveness of our content when our competitors can do exactly the same thing with exactly the same tools?”

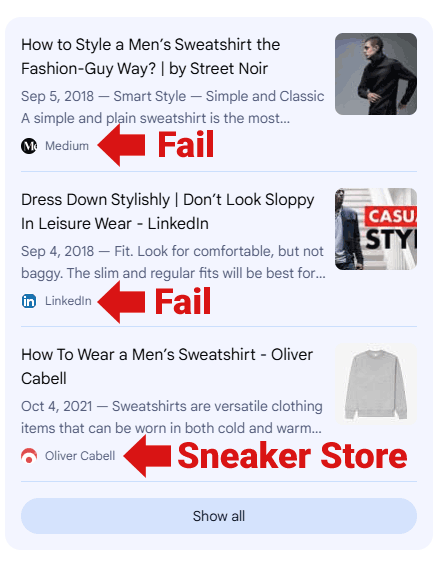

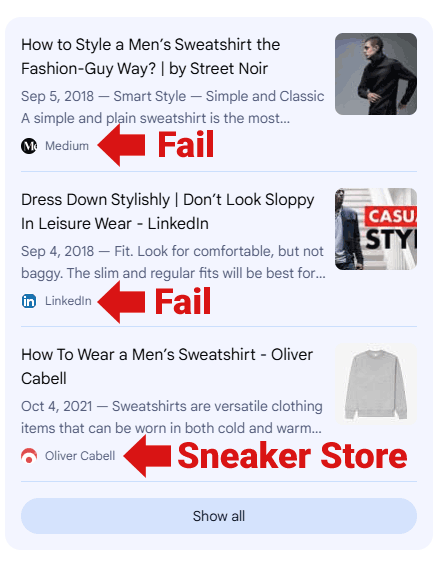

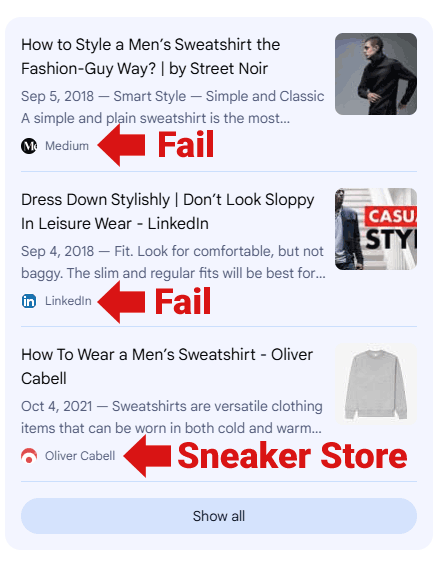

That last question is the real challenge facing companies considering AI-generated content, but the answer won’t be found in a spreadsheet. If you can use AI to generate your content with minimal human input, so can everyone else. Very soon, everyone will be generating similar content on similar topics to target the same audiences, with recycled information and reheated “insights” drawn from the same online sources.

Why would ChatGPT somehow generate a better blog post for you than for anyone else asking for 1,200 words on the same topic? It wouldn’t. You need to add your own secret sauce.

There is no competitive advantage to be gained by relying on AI-generated content alone. None.

AI-generated content is not a silver bullet. It’s the minimum benchmark your content needs to significantly exceed if your brand and your content are to have any chance of standing out in today’s noisy online marketplace.

Unfortunately, while organizations know they need to have content, far too many senior decision-makers don’t fully understand why, never mind all the things an effective content strategy needs to accomplish.

Content Isn’t A Cost, It’s An Infrastructure

Marketing content is often looked down upon as somehow easier or less worthy than other forms of writing. Yet it arguably has the hardest job of all. Every article, ebook, LinkedIn post, brochure, and landing page has to tick off a veritable to-do list of strategic requirements.

Of course, your content needs to have something to say. It must work on an informational level, backed by solid research and journalism. However, each asset or article also has a strategic role to play: attracting audiences, nurturing prospects, or converting customers, while aligning with the brand’s carefully mapped-out messaging at every stage.

Your content must build authority, earn trust, and demonstrate expertise. It must be memorable enough to aid brand awareness and recall, and distinctive enough to differentiate the brand from its competitors. It must be structured for search engines with the right entities, topics, and relationships, without losing the attention of busy humans who can click away at any second. Ideally, it should also include a couple of quote-worthy lines or interesting stats capable of attracting attention when the content is distributed on social media.

ChatGPT or Claude can certainly string a bunch of convincing sentences together. But if you think they can spin all those other plates for you at the same time, and to the same standard as a skilled content creator, you’re going to be disappointed. No matter how detailed and nuanced your prompt, something will always be missing. You’re still asking AI to synthesize something brilliant by recycling what’s already out there.

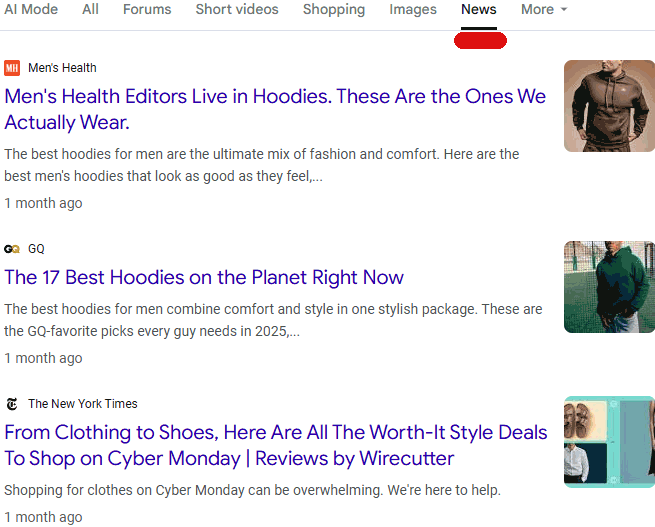

Which brings me to the most ironic part of this discussion. With the rapid adoption of AI-mediated search, your content now needs to become a source that large language models will confidently cite in responses to relevant queries.

Expecting AI to create content likely to be cited by AI is like watching a dog chase its tail: futile and frustrating. If AI provided the information and insights contained in your content, it already has better, more authoritative sources. Why would AI cite content that contains little if any fresh information or insight?

If your goal is to increase your brand’s visibility in AI responses, then your content needs to offer what can’t easily be found elsewhere.

The Limitations Of Online Knowledge

Despite appearances, AI cannot think. It cannot understand, in the sense we usually mean it. As it currently stands, it cannot reason. It certainly cannot imagine. Words like these have emerged as common euphemisms for how AI generates responses, but they also set the wrong expectations.

AI also cannot use information that isn’t already available and crawlable online. While we like to think that somehow the internet is a massive store of the entirety of human knowledge, the reality is that it’s not even close.

So much of the world we live in simply cannot be captured as structured, digitized information. While AI can tell you when and where the next local collectables market is being held, it can’t tell you which dealer has that hard-to-find comic you’ve been chasing for years. That’s the kind of information you can only find out by digging through lots of comic boxes on the day.

And then there are cultural histories and localized experiences that exist more in verbal traditions than in history books. AI can tell me plenty about the First World War. But if I ask it about the Iranian famine during WW1, it’s going to struggle, because the famine isn’t well documented outside of Iranian history books. Almost everything I know about it comes from stories my great-grandma told my mother, who then passed them on to me, like how she had to survive on just one almond per day. But you won’t find her stories in any book.

How can AI draw upon the wealth of personal experience and memories we all have? The greatest source of knowledge is human. It’s us. It’s always us.

But while AI can’t do your thinking for you, it can still help in many other ways.

You Still Need A Brain Behind The Bot

Let me be clear: I use AI every day. My team uses AI every day. You should, too. The problem isn’t the tool. The problem is treating the tool as a strategy, and an efficiency or cost reduction strategy at that. Of course, it isn’t only marketing teams hoping to reduce costs and boost productivity with generative AI. Another industry has already discovered that AI doesn’t actually replace anything.

A recent survey conducted by the Australian Financial Review (AFR) found that most law firms reported using AI tools. However, far from reducing headcount, 70% of surveyed firms increased their hiring of lawyers to vet, review, and sign off on AI-generated outputs.

This isn’t a failure in their AI strategy, because the strategy was never about reducing headcount. They’re using AI tools as digital assistants (research, drafting, document handling, etc.) to free up more time and headspace for the kinds of strategic and insightful thinking that generates real business value.

Similarly, AI isn’t a like-for-like replacement for your writers, designers, and other content creators. It’s a force multiplier for them, helping your team reduce the drudgery that can so often get in the way of the real work.

- Summarizing complex information.

- Transcribing interviews.

- Creating outlines.

- Drafting related content like social media posts.

- Checking your content against the brand style guide to catch inconsistencies.

Some writers might even use AI to generate a very rough first draft of an article to get past that blank page. The key is to treat that copy as a starting point, not the finished article.

All these tasks are massive time-savers for content creators, freeing up more of their mental bandwidth for the high-value work AI simply can’t do as well.

AI can only synthesize content from existing information. It cannot create new knowledge or come up with fresh ideas. It cannot interview subject matter experts within your business to draw out hidden wisdom and insights. It cannot draw upon personal experiences or perspectives to make your content truly yours.

AI is also riddled with algorithmic biases, potentially skewing your content and your messaging without you even realizing it. For example, the majority of AI training data is in the English language, creating a huge linguistic and cultural bias. It often takes an experienced and knowledgeable eye to spot the subtle hallucinations or distortions.

While AI can certainly accelerate execution, you still need skilled, experienced creatives to do the real thinking and crafting.

You Don’t Know What You Have, Until It’s Gone

Until it was closed under the Beeching cuts in 1969, the route between Edinburgh and Carlisle was a vital transport artery for the Scottish Borders. On paper, the line was unprofitable, at least according to Beeching’s simplistic methodology. However, the closure had massive knock-on effects, reducing access to jobs, education, and social services, as well as hurting tourism. Meanwhile, forcing people onto buses or into cars placed greater strain on other transport infrastructure.

While Beeching might have solved one narrowly defined problem, he undermined the broader purpose of British Railways: the mobility of people in all parts of Great Britain. In effect, Beeching simply shifted the consequences and cost pressures elsewhere.

The route was partially reopened in 2015 as the Borders Railway, costing an estimated £300 million to reinstate just 30 miles of line with seven stations.

Beeching’s cuts illustrate the folly of evaluating infrastructure (or content strategy) purely on narrow, short-term financial metrics.

Organizations that cut their teams in favor of AI are likely to find it isn’t so easy to reverse course and undo the damage a few years from now. Replacing your writers with AI risks eroding the connective tissue that characterizes your content ecosystem and anchors long-term performance: authority, context, nuance, trust, and brand identity.

Experienced content creators aren’t going to wait around for organizations to realize their true value. If enough of them leave the industry, and with fewer opportunities available for the next generation of creators to gain the necessary skills and experience, the talent pool is likely to shrink massively.

As with the Beeching cuts, rebuilding your content team is likely to cost you far more in the long term than you saved in the short term, particularly when you factor in the months or years of low-performing content in the meantime.

Know What You’re Cutting Before You Wield The Axe

According to your spreadsheet, AI-generated content may well be cheaper to produce. But the effectiveness of your content strategy doesn’t hinge on whether you can publish more for less. This isn’t a case where any old content will do.

So, beware of falling into the Beeching trap. Your content workflows might only seem “loss-making” on paper because the metrics you’re looking at don’t adequately capture all the ways your content delivers strategic value to your business.

Content is not a cost center. It never was. Content is the infrastructure of your brand’s discoverability, which makes it more important than ever in the AI era.

This isn’t a debate about “human vs. AI content.” It’s about equipping skilled people with the tools to help them create work worthy of being found, cited, and trusted.

So, before you start swinging the axe, ask yourself: Are you cutting waste, or are you dismantling the very system that makes your brand visible and credible in the first place?

Featured Image: IM Imagery/Shutterstock