Google’s AI Mode Personal Context Features “Still To Come” via @sejournal, @MattGSouthern

Seven months after Google teased “personal context” for AI Mode at Google I/O, Nick Fox, Google’s SVP of Knowledge and Information, says the feature is still not ready for a public rollout.

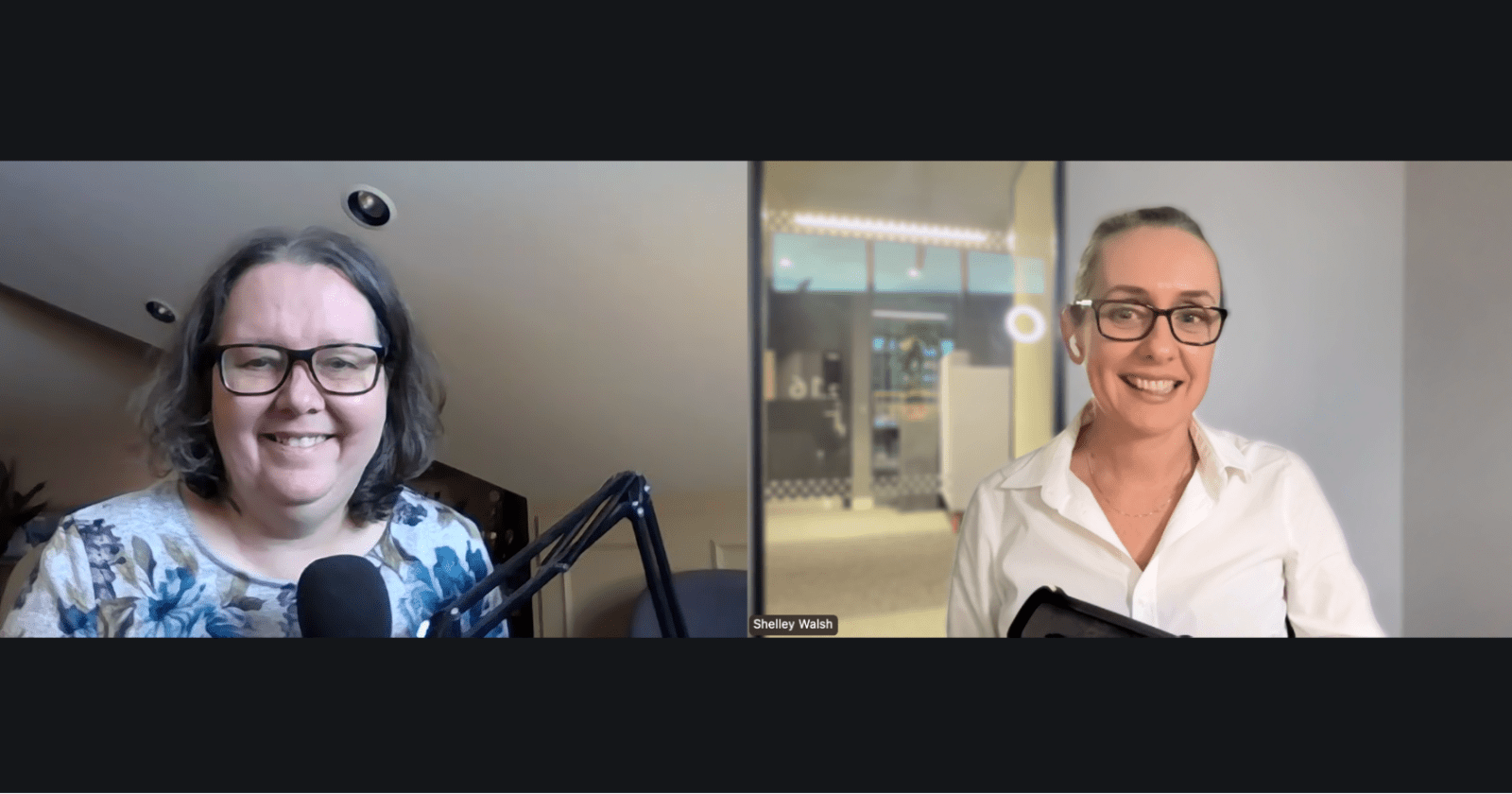

In an interview with the AI Inside podcast, Fox framed the delay as a product and permissions issue rather than a model-capability issue. As he put it: “It’s still to come.”

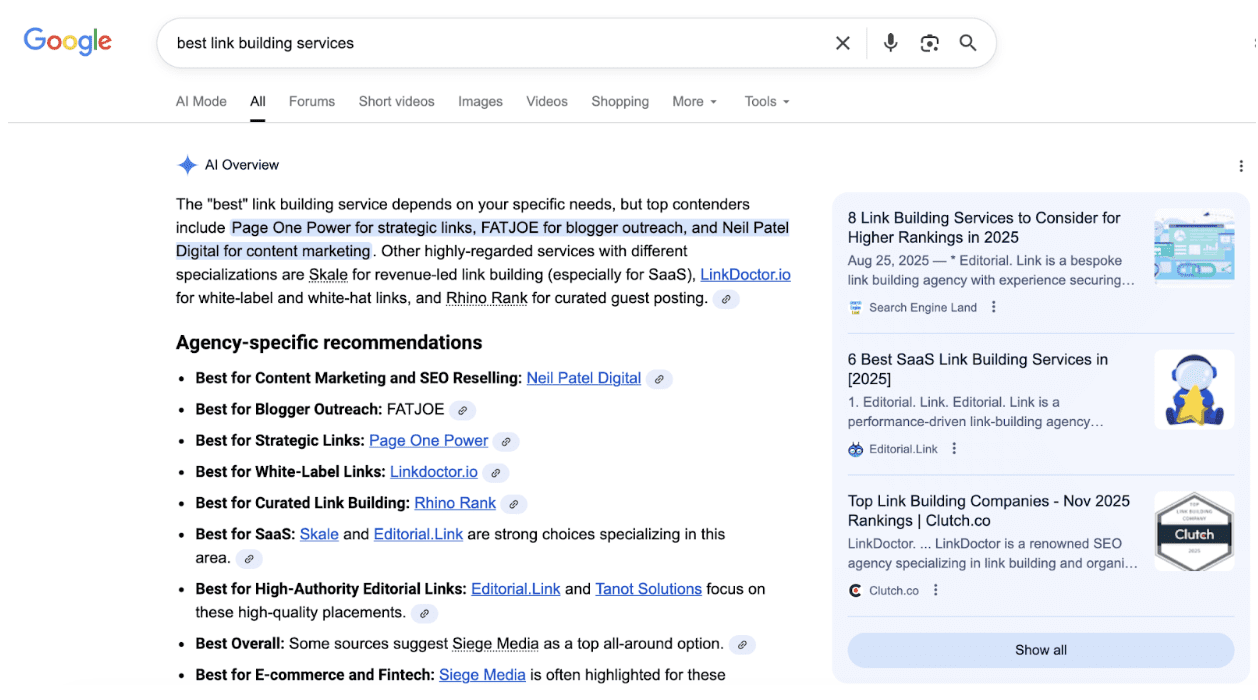

What Google Promised At I/O

At Google I/O, Google said AI Mode would “soon” incorporate a user’s past searches to improve responses. It also said you would be able to opt in to connect other Google apps, starting with Gmail, with controls to manage those connections.

The idea was that you wouldn’t need to restate context in every query if you wanted Google to use relevant details already sitting in your account.

On timing, Fox said some internal testing is underway, but he did not share a public rollout date:

“Some of us are testing this internally and working through it, but you know, still to come in terms of the public roll out.”

The question and Fox’s response come at around the 37-minute mark of the podcast interview.

AI Mode Growth Continues Without Personal Context

Even without that personalization layer, Fox pointed to rapid adoption, describing AI Mode as having “grown to 75 million daily active users worldwide.”

The bigger change may be in how people phrase queries. Fox described questions that are “two to three times as long,” with more explicit first-person context.

Instead of relying on AI Mode to infer intent, people are writing the context into the prompt, Fox says:

“People are trying to put the right context into the query.”

That matters because the “personal context” feature was designed to reduce that manual effort.

Geographic Patterns In Adoption

Adoption also appears uneven by market, with the strongest traction in regions that received AI Mode first. Fox described the U.S. as the most “mature” market because the product has had more time to become part of people’s routines.

He also pointed to strong adoption in markets where the web is less developed in certain languages or regions, naming India, Brazil, and Indonesia. The argument there is that AI Mode can stitch together information across languages and borders in ways traditional search results may not have managed for those markets.

Younger users, he added, are adopting the experience faster across regions.

Publisher Relationship Updates

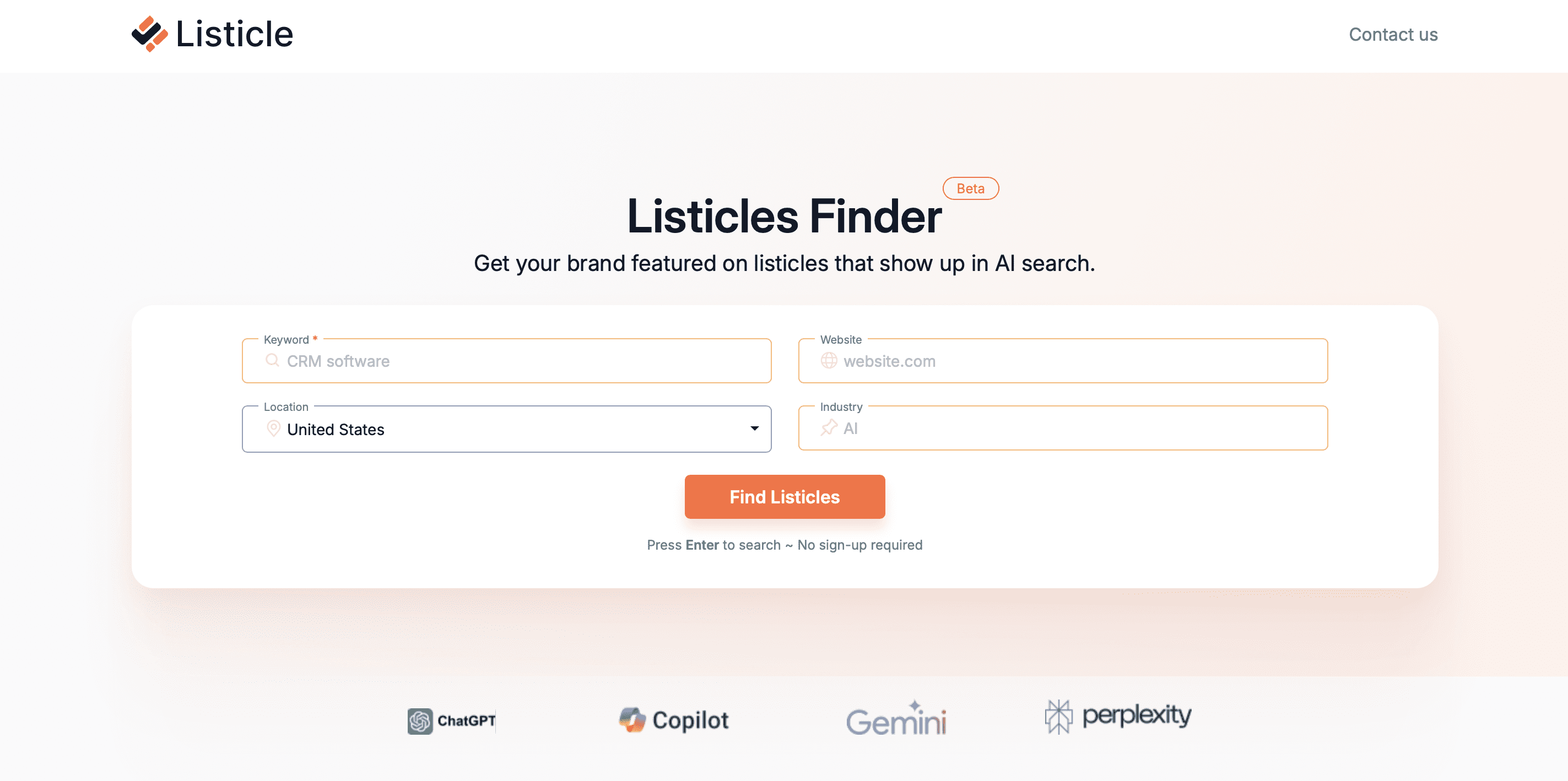

The interview also included updates tied to how AI Mode connects people back to publisher content.

Preferred Sources is one of them. The feature lets you choose specific publications you want to see more prominently in Google’s Top Stories unit, and Google describes it as available worldwide in English.

Fox also described ongoing work on links in AI experiences, including increasing the number of links shown and adding more context around them:

“We’re actually improving the links within our AI experience, increasing the number of them…”

On the commercial side, he noted Google has partnerships with “over 3,000 organizations” across “50 plus countries.”

Technical Updates

Fox talked through product and infrastructure changes now powering AI Mode and related experiences.

One was shipping Gemini 3 Pro in Search on day one, which he described as the first time Google has shipped a “frontier model” in Search on launch day.

He also described “generative layouts,” where the model can generate UI code on the fly for certain queries.

To keep the experience fast, he emphasized model routing, where simpler queries go to smaller, faster models and heavier work is reserved for more complex prompts.
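Fox did not describe how Google's routing works, so the following is only a minimal sketch of the general idea, not Google's implementation. The model names, the cue phrases, and the eight-word threshold are all hypothetical placeholders chosen for illustration.

```python
from dataclasses import dataclass

# Hypothetical model tiers; placeholder names, not real Google models.
SMALL_MODEL = "small-fast-model"
LARGE_MODEL = "large-frontier-model"

# Illustrative phrases that hint at multi-step or comparative work (assumed).
COMPLEX_CUES = ("compare", "versus", "step by step", "explain why")


@dataclass
class RoutingDecision:
    model: str
    reason: str


def route_query(query: str, max_simple_words: int = 8) -> RoutingDecision:
    """Pick a model tier using a crude complexity heuristic."""
    text = query.lower()
    word_count = len(text.split())

    # A cue phrase sends the query to the larger model regardless of length.
    if any(cue in text for cue in COMPLEX_CUES):
        return RoutingDecision(LARGE_MODEL, "complex cue found")

    # Long, context-heavy queries also go to the larger model.
    if word_count > max_simple_words:
        return RoutingDecision(LARGE_MODEL, "long query")

    # Everything else stays on the smaller, faster model.
    return RoutingDecision(SMALL_MODEL, "short, simple query")


if __name__ == "__main__":
    for q in (
        "weather in paris",
        "compare two phones",
        "I am moving to Austin next month with two dogs and need a vet",
    ):
        decision = route_query(q)
        print(f"{q!r} -> {decision.model} ({decision.reason})")
```

A production router would more likely rely on a learned classifier than on keyword and length rules. The sketch only shows the trade-off Fox described: cheap, fast handling by default, with heavier models reserved for queries that appear to need them.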

Why This Matters

A version of AI Mode that personalizes answers using opt-in Gmail context is still not available and doesn’t have a public timeline.

In the meantime, people appear to be compensating by typing more context into their queries. If that becomes the norm, it may push publishers toward satisfying longer, more situation-specific questions.

Looking Ahead

While AI Mode is still in its early stages, the 75 million daily active users figure suggests it is large enough that publishers should monitor their visibility in it.

Featured Image: Jackpress/Shutterstock