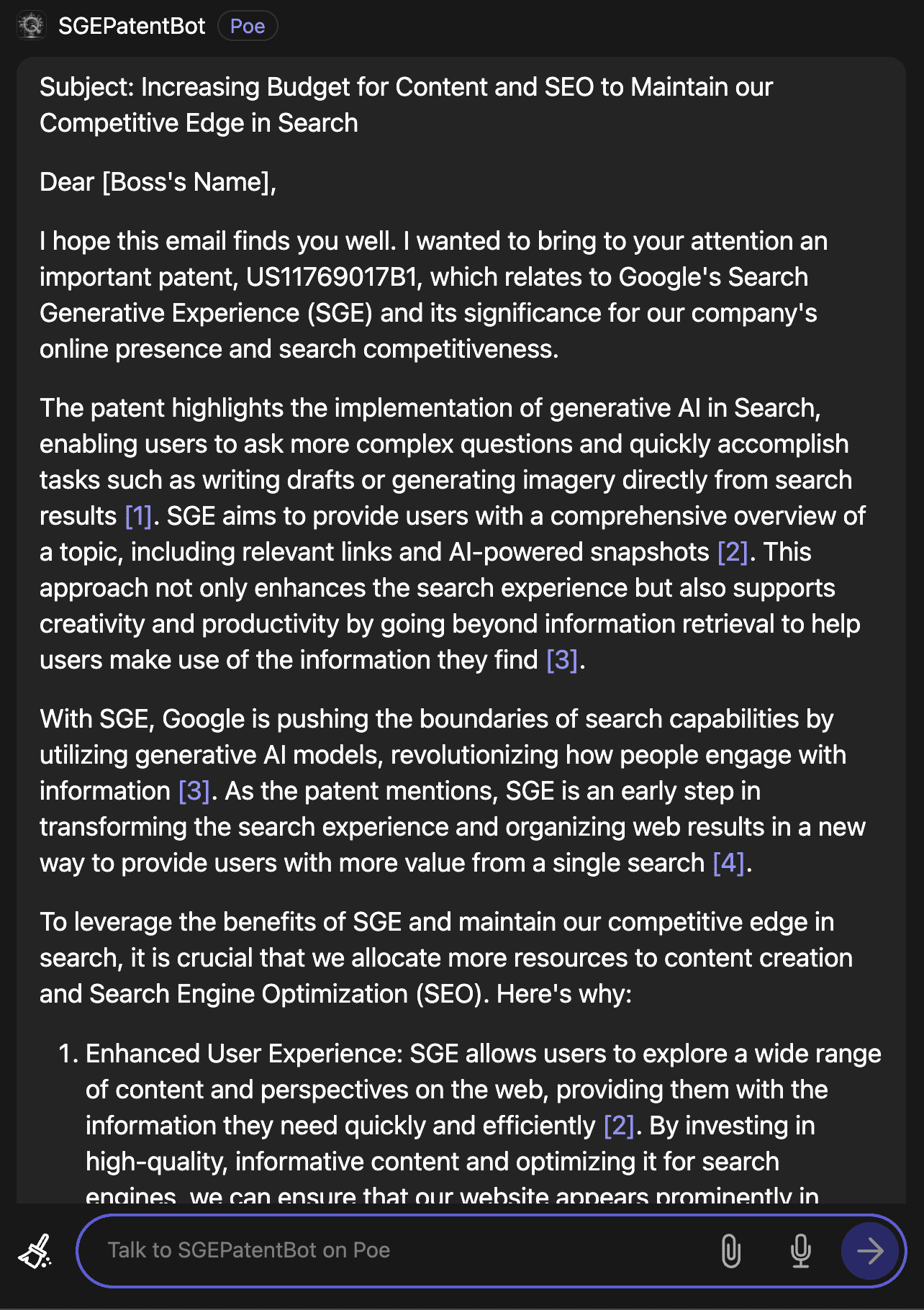

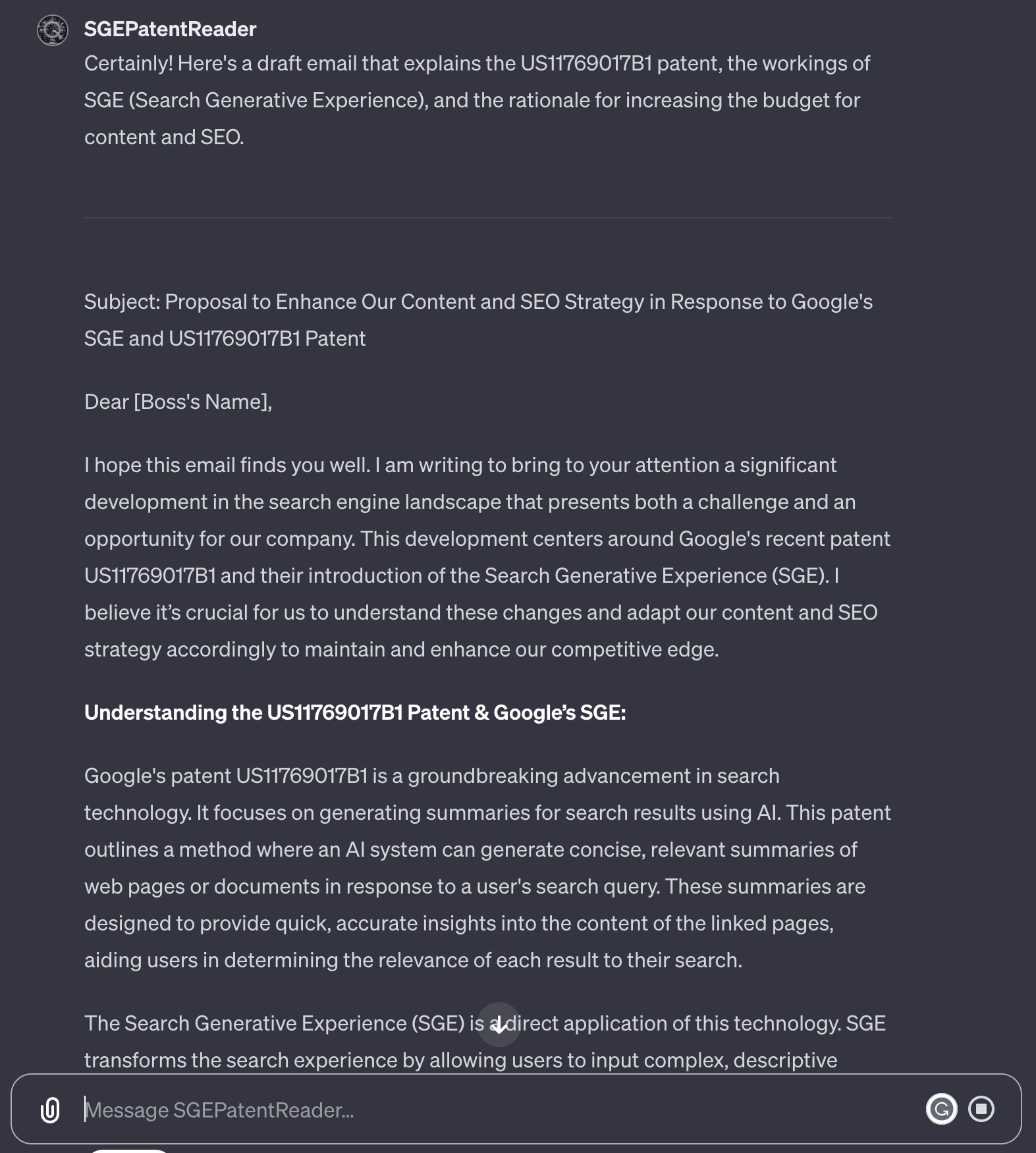

Reading and understanding patents filed by Google can be challenging, but this guide will help you understand what the patents are about and avoid the common mistakes that lead to misunderstandings.

How To Understand Google Patents

Before starting to read a patent, it’s important to understand how to read patents in general. The following rules form the foundation upon which you can build a solid understanding of what patents mean.

Step #1 Do Not Scan Patents

One of the biggest mistakes I see people make when reading patents is to approach the task as if it’s a treasure hunt. They scan the patents looking for tidbits and secrets about Google’s algorithms.

I know people do this because I’ve seen so many wrong conclusions drawn by SEOs who clearly didn’t read the whole patent. They only talk about the one or two sentences that jumped out at them.

Had they read the entire patent they would have understood that the passage they got excited about had nothing to do with ranking websites.

Reading a patent is not like a treasure hunt with a metal detector where the treasure hunter scans an entire field and then stops in one spot to dig up a cache of gold coins.

Don’t scan a patent. Read it.

Step #2 Understand The Context Of The Patent

A patent is like an elephant. An elephant has a trunk, big ears, a little tail and legs thick as trees. Similarly, a patent is made up of multiple sections, each of which is important because together they create the context of what the patent is about.

And just as each part of an elephant is better understood in the context of the entire animal, every section of a patent only makes sense within the context of the entire patent.

To understand a patent, it’s important to read the entire thing several times so that you are able to step back and see the whole patent, not just one part of it.

Reading the entire patent reveals its overall context, which is the most important thing to grasp: what the whole thing means.

Step #3 Not Every Patent Is About Ranking

If there’s one thing I wish the reader to take away from this article, it is this rule. When I read tweets or articles by people who don’t know how to read patents, this is the rule that they haven’t understood. Consequently, their interpretation of the patent is wrong.

Google Search is not just one ranking algorithm. There are many algorithms that make up different parts of Search. The Ranking Engine and the Indexing Engine are just two parts of Search.

Elements of search that a patent may refer to include:

- Ranking engine

- Modification engine

- Indexing engine

- Query reviser engine

Those are just a few of the kinds of software engines that are a part of a typical search engine. Not all of these engines are part of the ranking side of Google’s algorithms, but that does not diminish their importance.

Back in 2020 Gary Illyes of Google tweeted that Search consists of thousands of different systems working together.

He tweeted about the indexing engine:

“The indexing system, Caffeine, does multiple things:

1. ingests fetchlogs,

2. renders and converts fetched data,

3. extracts links, meta and structured data,

4. extracts and computes some signals,

5. schedules new crawls,

6. and builds the index that is pushed to serving.”

He followed up with another tweet about the thousands of systems in search:

“Don’t oversimplify search for it’s not simple at all: thousands of interconnected systems working together to provide users high quality and relevant results…

…the last time i did this exercise I counted off the top of my head about 150 different systems from crawling to ranking, so thousands is likely not an exaggeration. Yes, some things are micro services”

Here’s The Important Takeaway:

There are many parts of Search. But not all parts of Search are a part of the ranking systems.

A very important habit to cultivate when reading a patent is to let the patent tell you what it’s about.

Equally important is to not make assumptions or assume that something is implied. Patents don’t generally imply. They may seem so repetitive that it almost feels like a deliberate attempt to obfuscate (make things hard to understand), and they consistently describe their inventions in extremely broad terms, but they don’t really imply what they are describing.

For legal purposes, patents are actually quite specific about what they cover.

If something is used for ranking, it will not be implied. The patent will say so, because that’s an important quality to describe in a patent application.

Step #4 Entity & Entities: Understand The Use Of Abstraction

One of the biggest mistakes people make when reading patents is to overlook the context in which the invention can be used. For example, let’s review a specific patent called “Identifying subjective attributes by analysis of curation signals.”

This patent uses the word “entities” 52 times and the word “entity” 124 times. One can easily guess that this patent is probably about entities, right? It makes sense that if the patent mentions the words “entities” and “entity” nearly 200 times, the patent is about entities.

But that would be an unfortunate assumption, because the patent is not about entities at all. In this patent, the words “entity” and “entities” refer to a broad and inclusive range of items, subjects, or objects to which the invention can be applied.

Patents often cast a wide net in terms of how the invention can be used, which helps to ensure that the patent’s claims aren’t limited to one type of use but can be applied in many ways.

The word “entity” in this patent is used as a catch-all term that allows the patent to cover a wide range of different types of content or objects. It is used in the sense of an abstraction so that it can be applied to multiple objects or forms of content. This frees the patent to focus on the functionality of the invention and how it can be applied.

The use of abstraction keeps a patent from being tied down to the specifics of what it is being applied to because in most cases the patent is trying to communicate how it can be applied in many different ways.

In fact, the patent places the invention in the context of different forms of content entities such as videos, images, and audio clips. The patent also refers to text-based content (like articles, blog posts), as well as more tangible entities (like products, services, organizations, or even individuals).

Here is an example from the patent where it explicitly refers to video clips as one of the entities that the patent is concerned with:

“In one implementation, the above procedure is performed for each entity in a given set of entities (e.g., video clips in a video clip repository, etc.), and an inverse mapping from subjective attributes to entities in the set is generated based on the subjective attributes and relevancy scores.”

In this context, “video clips” are explicitly mentioned as an example of the entities to which the invention can be applied. The passage indicates that the procedure described in the patent (identifying and scoring subjective attributes of entities) is applicable to video clips.

Here is another passage where the word entity is used to denote a type of content:

“Entity store 120 is a persistent storage that is capable of storing entities such as media clips (e.g., video clips, audio clips, clips containing both video and audio, images, etc.) and other types of content items (e.g., webpages, text-based documents, restaurant reviews, movie reviews, etc.), as well as data structures to tag, organize, and index the entities.”

That part of the patent describes “content items” as entities and gives examples like webpages, text-based documents, restaurant reviews, and movie reviews, alongside media clips such as video and audio clips. This and other similar passages show that the term “entity” within the context of this patent broadly encompasses multiple forms of digital content.
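For readers who think in code, this kind of abstraction can be pictured as a single generic record type. The sketch below is only an illustration and is not taken from the patent: the `Entity` class, its fields, and the `count_by_kind` helper are hypothetical names made up for this example. It shows how one store can hold video clips, webpages, and reviews alike, which is why the word “entity” can appear so often without entities being the subject of the invention.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Entity:
    """Hypothetical catch-all record for any content item."""
    entity_id: str
    kind: str  # e.g. "video clip", "webpage", "restaurant review"


def count_by_kind(store: list[Entity]) -> Counter:
    """Tally how many stored entities there are of each kind."""
    return Counter(entity.kind for entity in store)


# One store, many kinds of content (kinds borrowed from the quoted passage).
entity_store = [
    Entity("e1", "video clip"),
    Entity("e2", "audio clip"),
    Entity("e3", "webpage"),
    Entity("e4", "restaurant review"),
    Entity("e5", "video clip"),
]

print(count_by_kind(entity_store))
```

Any code written against `Entity` works the same way regardless of what kind of content sits behind it, which is roughly the role the word plays in the patent’s language.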

That patent, titled “Identifying subjective attributes by analysis of curation signals,” is actually related to a recommender system or search system that leverages user-generated content, such as comments, for the purpose of tagging digital content with the subjective opinions of those users.

The patent specifically uses the example of users describing an entity (like an image or a video) as funny, which can then be used to surface a video that has the subjective quality of funny as a part of a recommender system.

The most obvious application of this patent is for finding videos on YouTube that users and authors have described as funny. The use of this patent isn’t limited to just YouTube videos, however. It can also be used in other scenarios that intersect with user-generated content.

The patent explicitly mentions the application of the invention in the context of a recommender system in the following passage:

“In one implementation, the above procedure is performed for each entity in a given set of entities (e.g., video clips in a video clip repository, etc.), and an inverse mapping from subjective attributes to entities in the set is generated based on the subjective attributes and relevancy scores.

The inverse mapping can then be used to efficiently identify all entities in the set that match a given subjective attribute (e.g., all entities that have been associated with the subjective attribute ‘funny’, etc.), thereby enabling rapid retrieval of relevant entities for processing keyword searches, populating playlists, delivering advertisements, generating training sets for the classifier, and so forth.”
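The inverse mapping described in that passage resembles what programmers call an inverted index. Here is a minimal sketch of the idea, assuming relevancy scores have already been computed. The function name, the 0.5 cutoff, and the sample data are all invented for illustration. The patent doesn’t publish code, and nothing here should be read as how Google implements it.

```python
from collections import defaultdict


def build_inverse_mapping(entity_attributes, min_score=0.5):
    """Invert {entity: {attribute: score}} into {attribute: [entities]}.

    min_score is a hypothetical cutoff. For each attribute, entities are
    listed from highest to lowest relevancy score.
    """
    scored = defaultdict(list)
    for entity, attributes in entity_attributes.items():
        for attribute, score in attributes.items():
            if score >= min_score:
                scored[attribute].append((score, entity))
    return {
        attribute: [
            entity
            for _, entity in sorted(pairs, key=lambda p: p[0], reverse=True)
        ]
        for attribute, pairs in scored.items()
    }


# Made-up sample data: subjective attributes with relevancy scores.
entity_attributes = {
    "video_1": {"funny": 0.9, "cute": 0.4},
    "video_2": {"funny": 0.7},
    "review_1": {"informative": 0.8},
}

inverse = build_inverse_mapping(entity_attributes)
print(inverse["funny"])  # ['video_1', 'video_2']
```

Looking up `inverse["funny"]` returns every matching entity in one step, which is the “rapid retrieval” the passage talks about. Notice that nothing in this structure ranks webpages in search results.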

Some SEOs, because the patent mentions authors three times, have claimed that this patent has something to do with ranking content authors, and because of that they also associate the patent with E-A-T.

Others, because the patent mentions the words “entity” and “entities” so many times, have come to believe it has something to do with natural language processing and semantic understanding of webpages.

But neither of those is true. Now that I’ve explained some of this patent, it should be apparent how not knowing how to read a patent, combined with treating patents as a treasure hunt for spicy algorithm clues, can lead to unfortunate and misleading errors in understanding what patents are actually about.

In a future article I will walk through different patents and I think doing that will help readers understand how to read a patent. If that’s something you are interested in then please share this article on social media and let me know!

I’m going to end this article with a description of the different parts of a patent, which should go some way to building an understanding of patents.

Step #5 Know The Parts Of A Patent

Every patent is composed of multiple parts, a beginning, a middle, and an end, each with a specific purpose. Many patents are also accompanied by illustrations that are helpful for understanding what the patent is about.

Patents typically follow this pattern:

Abstract:

A concise summary of the patent, giving a quick overview of what the invention is and what it does. This part is actually important because it tells you what the patent is about. Do not be one of those SEOs who skip this part to go treasure hunting in the middle parts for clues about the algorithm. Pay attention to the Abstract.

Background:

This section offers context for the invention. It typically gives an overview of the field related to the invention and in a direct or indirect way explains how the invention fits into the context. This is another important part of the patent. It doesn’t give up clues about the algorithm but it tells what part of the system it belongs to and what it’s trying to do.

Summary:

The Summary provides a more detailed overview of the invention than the Abstract. We often say you can step back and view the forest, or step closer and see the trees. The Summary can be said to be stepping forward to see the leaves, and just as a tree has a lot of leaves, a Summary can contain a lot of details.

The Summary outlines the invention’s primary objectives, its features, and the minutiae of how it works, along with all the variations of how it works. It is almost always an eye-wateringly comprehensive description.

The very first paragraph, though, can often be the most descriptive and understandable part, after which the Summary deep-dives into fine detail. One can feel lost in the seemingly redundant descriptions of the invention. It can be boring, but read it at least twice, more if you need to.

Don’t be dismayed if you can’t understand it all because this part isn’t about finding the spicy bits that make for good tweets. This part of reading a patent is sometimes more about kind of absorbing the ideas and getting a feel for it.

Brief Description Of The Drawings:

In patents where drawings are included, this section explains what each drawing represents, sometimes with just a single sentence. It can be as brief as this:

“FIG. 1 is a diagram that illustrates obtaining an authoritative search result.

FIG. 2 is a diagram that illustrates resources visited during an example viewing session.

FIG. 3 is a flow chart of an example process for adjusting search result scores.”

The descriptions provide valuable information and are just as important as the illustrations themselves. They both can communicate a sharper understanding of the function of the patent invention.

What may seem like an invention about choosing authoritative sites for search results might in the illustrations turn out to be about finding the right files on a mobile phone and not have anything to do with information retrieval.

This is where my advice to let the patent tell you what it’s about pays off. People too often skip these parts because they don’t contain spicy details. What happens next is that they miss the context for the entire patent and reach completely mistaken conclusions.

Detailed Description Of The Patent:

This is an in-depth description of the invention that uses the illustrations (figure 1, figure 2, etc.) as the organizing factor. This section may include technical information, how the invention works, how it is organized in relation to other parts, and how it can be used.

This section is intended to be thorough enough that someone skilled in the field could replicate the invention but also general enough so that it can be broadly applied in different ways.

Embodiment Examples:

Here is where specific examples of the invention are provided. The word “embodiment” refers to a particular implementation or an example of the invention. It is a way for the inventor to describe specific ways the invention can be used.

The word embodiment appears in different contexts that make it clear what the inventor considers a part of the invention. It is used to illustrate the real-world use of the invention, to define technical aspects, and to show different ways the invention can be made or used.

For that last use, you’ll see a lot of paragraphs that begin with “in another embodiment the invention can bla bla bla…”

So when you see that word “embodiment” try to think of the word “body” and then “embody” in the sense of making something tangible and that will help you to better understand the “Embodiment” section of a patent.

Claims:

The Claims are the legal part of the patent. This section defines the scope of protection that the patent is looking for and it also offers insights into what the patent is about because this section often talks about what’s new and different about the invention. So don’t skip this part.

Citations:

This part lists other patents that are relevant to the invention. It’s used to acknowledge similar inventions but also to show how this invention is different from them and how it improves on what came before.

Firm Starting Point For Reading Patents

You should by this point have a foundation for practicing how to read a patent. Don’t be discouraged if the patent seems opaque and hard to understand. That’s normal.

I asked Jeff Coyle, cofounder of MarketMuse, for tips about reading patents because he’s filed some patent applications.

Jeff offered this advice:

“Use Google Patent’s optional ‘non-patent literature’ Google Scholar search to find articles that may reference or support your knowledge of a patent.

Also understand that sometimes understanding a patent in isolation is nearly impossible, which is why it’s important to build context by collecting and reviewing connected patent and non-patent citations, child/priority patents/applications.

Another way that helps me to understand patents is to research other patents filed by the same authors. These are my core methods for understanding patents.”

That last tip is super important because some inventors tend to invent one kind of thing. So if you’re in doubt about whether a patent is about a certain thing, take a look at other patents that the inventor has filed to see if they tend to file patents on what you think a patent is about.

Patents have their own kind of language, with a formal structure and purpose to each section. Anyone who has learned a second language knows how important it is to look up words and to understand the structure that’s inherent in what’s written.

So don’t be discouraged because with practice you will be able to read patents better than many in the SEO industry are currently able to.

I intend at some point to walk through several patents with the hope that this will help you improve on reading patents. And remember to let me know on social media if this is something you want me to write!