Google’s latest Search Off the Record episode offered a wealth of insights into how Google Search actually works. Google’s John Mueller and Lizzi Sassman spoke with Elizabeth Tucker, Director, Product Management at Google, who described the many systems that work together to rank web pages, including a mention of a topicality system.

Google And Topicality

The word “topicality” means how something is relevant in the present moment. But when used in search the word “topicality” is about matching the topic of a search query with the content on a web page. Machine learning models play a strong role in helping Google understand what users mean.

An example that Elizabeth Tucker mentions is BERT (Bidirectional Encoder Representations from Transformers), a language model that helps Google understand a word within the context of the words that come before and after it (it does more than that; this is only a thumbnail explanation).

Elizabeth explains the importance of matching topically relevant content to a search query within the context of user satisfaction.

Googler Lizzi Sassman asked about user satisfaction, and Tucker answered that search has many dimensions and many systems, using topical relevance as an example.

Lizzi asked (at about the 4:20 minute mark):

“In terms of the satisfaction bit that you mentioned, are there more granular ways that we’re looking at? What does it mean to be satisfied when you come away from a search?”

Elizabeth answered:

“Absolutely, Lizzi. Inside Search Quality, we think about so many important dimensions of search. We have so many systems. Obviously we want to show content that’s topically relevant to your search. In the early days of Google Search, that was sometimes a challenge.

Our systems have gotten much better, but that is still sometimes, for especially really difficult searches, we can struggle with. People search in so many ways: Everything from, of course, typing in keywords, to speaking to Google and using normal everyday language. I’ve seen amazing searches. “Hey Google, who is that person who, years ago, did this thing, and I don’t remember what it was called.” You know, these long queries that are very vague. And it’s amazing now that we have systems that can even answer some of those.”

Takeaway:

An important takeaway from that exchange is that there are many systems working together, with topicality being just one of them. Many in the search marketing community tend to focus on the importance of one thing like Authority or Helpfulness but in reality there are many “dimensions” to search and it’s counterproductive to reduce the factors that go into search to one, two or three concepts.

Biases In Search

Google’s John Mueller asked Elizabeth whether biases in search are something Google thinks about. She answered that there are many kinds of biases that Google watches out for and tries to catch. Tucker describes the different kinds of search results that may be topically relevant (such as evergreen and fresh) and explains that Google focuses on getting the balance right.

John asked (at the 05:24 minute mark):

“When you look at the data, I assume biases come up. Is that a topic that we think about as well?”

Elizabeth answered:

“Absolutely. There are all sorts of biases that we worry about when you’re looking for information. Are we disproportionately showing certain types of sites, are we showing more, I don’t know, encyclopedias and evergreen results or are we showing more fresh results with up-to-date information, are we showing results from large institutional sites, are we showing results from small blogs, are we showing results from social media platforms where we have everyday voices?

We want to make sure we have an appropriate mix that we can surface the best of the web in any shape or size, modest goals.”

Core Topicality Systems (And Many Others)

Elizabeth next reiterated that she works with many kinds of systems in search. This is something to keep in mind because the search community only knows about a few systems when in fact there are many, many more systems.

That means it’s important not to focus on just one, two or three systems when trying to debug a ranking problem, but instead to keep an open mind that the cause might be something else entirely, not just helpfulness or EEAT.

John Mueller asked whether Google Search responds by demoting a site when users complain about certain search results.

Tucker speaks about multiple things, including that most of the systems she works on don’t have anything to do with demoting sites. I want to underline how she mentions that she works with many systems and many signals (not just the handful of signals that the search marketing community tends to focus on).

Among the systems she mentions are the core topicality systems. What does that mean? She explains that they are about matching the topic of the search query. She says “core topicality systems” (plural), so that probably means multiple systems and algorithms.

John asked (at the 11:20 minute mark):

“When people speak up loudly, is the initial step to do some kind of a demotion where you say “Well, this was clearly a bad site that we showed, therefore we should show less of it”? Or how do you balance the positive side of things that maybe we should show more of versus the content we should show less of?”

Elizabeth answered:

“Yeah, that’s a great question. So I work on many different systems. It’s a fun part of my job in Search Quality. We have many signals, many systems, that all need to work together to produce a great search result page.

Some of the systems are by their nature demotative, and webspam would be a great example of this. If we have a problem with, say, malicious download sites, that’s something we would probably want to fix by trying to find out which sites are behaving badly and try to make sure users don’t encounter those sites.

Most of the systems I work with, though, actually are trying to find the good. An example of this: I’ve worked with some of our core topicality systems, so systems that try to match the topic of the query.

This is not so hard if you have a keyword query, but language is difficult overall. We’ve had wonderful breakthroughs in natural language understanding in recent years with ML models, and so we want to leverage a lot of this technology to really make sure we understand people’s searches so that we can find content that matches that. This is a surprisingly hard problem.

And one of the interesting things we found in working on, what we might call, topicality, kind of a nerdy word, is that the better we’re able to do this, the more interesting and difficult searches people will do.”

How Google Is Focused On Topics In Search

Elizabeth returns to topicality, this time referring to it as the “topicality space,” and describes how much effort Google has expended on getting it right. Of particular importance, she highlights how Google used to be very focused on keywords, with the clear implication that it is not as focused on them any more, and explains the importance of topicality.

She discusses it at the 13:16 minute mark:

“So Google used to be very keyword focused. If you just put together some words with prepositions, we were likely to go wrong. Prepositions are very difficult or used to be for our systems. I mean, looking back at this, this is laughable, right?

But, in the old days, people would type in one, two, three keywords. When I started at Google, if a search had more than four words, we considered it long. I mean, nowadays I routinely see long searches that can be 10-20 words or more. When we have those longer searches, understanding what words are important becomes challenging.

For example, this was now years and years ago, maybe close to ten years ago, but we used to be challenged by searches that were questions. A classic example is “how tall is Barack Obama?” Because we wanted pages that would provide the answer, not just match the words how tall, right?

And, in fact, when our featured snippets first came about, it was motivated by this kind of problem. How can we match the answer, not just keyword match on the words in the question? Over the years, we’ve done a lot of work in, what we might call, the topicality space. This is a space that we continue to work in even now.”

The Importance Of Topics And Topicality

There is a lot to understand in Tucker’s answer. When thinking about Google’s search ranking algorithms, it may be helpful to also consider the core topicality systems, which help Google understand search query topics and match them to web page content. They underline the importance of thinking in terms of topics instead of focusing hard on ranking for keywords.

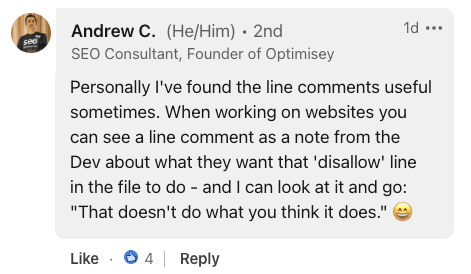

A common mistake I see among people who are struggling with ranking is that they are strongly focused on keywords. I’ve been encouraging an alternate approach for many years, one that stresses the importance of thinking in terms of topics. That’s a multidimensional way to think of SEO. Optimizing for keywords is one-dimensional. Optimizing for a topic is multidimensional and aligns with how Google Search ranks web pages, in that topicality is an important part of ranking.

Listen to the Search Off The Record podcast starting at about the 4:20 minute mark and then fast forward to the 11:20 minute mark.

Featured Image by Shutterstock/dekazigzag

Screenshot from LinkedIn, July 2024.
