OpenAI Expected to Integrate Real-Time Data In ChatGPT via @sejournal, @martinibuster

Sam Altman, CEO of OpenAI, dispelled rumors that a new search engine would be announced on Monday, May 13. Recent deals have raised the expectation that OpenAI will announce the integration of real-time content from English, Spanish, and French publications into ChatGPT, complete with links to the original sources.

OpenAI Search Is Not Happening

Many competing search engines have tried and failed to challenge Google as the leading search engine. A new wave of hybrid generative AI search engines is currently trying to knock Google from the top spot with arguably very little success.

Sam Altman is on record saying that creating a search engine to compete against Google is not a viable approach. He suggested that technological disruption was the way to replace Google by changing the search paradigm altogether. The speculation that Altman is going to announce a me-too search engine on Monday never made sense given his recent history of dismissing the concept as a non-starter.

So perhaps it’s not a surprise that he recently ended the speculation by explicitly saying that he will not be announcing a search engine on Monday.

He tweeted:

“not gpt-5, not a search engine, but we’ve been hard at work on some new stuff we think people will love! feels like magic to me.”

“New Stuff” May Be Iterative Improvement

It’s quite likely that what’s going to be announced is iterative, meaning that it improves ChatGPT rather than replaces it. This fits with how Altman recently described his approach to ChatGPT.

He remarked:

“And it does kind of suck to ship a product that you’re embarrassed about, but it’s much better than the alternative. And in this case in particular, where I think we really owe it to society to deploy iteratively.

There could totally be things in the future that would change where we think iterative deployment isn’t such a good strategy, but it does feel like the current best approach that we have and I think we’ve gained a lot from from doing this and… hopefully the larger world has gained something too.”

Improving ChatGPT iteratively is Sam Altman’s preference and recent clues point to what those changes may be.

Recent Deals Contain Clues

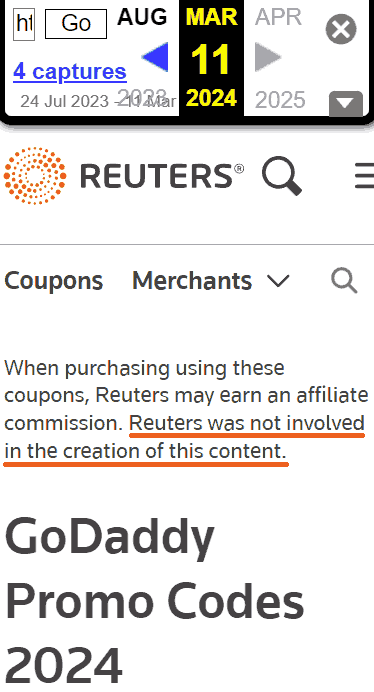

OpenAI has been making deals with news media and user-generated content publishers since December 2023. Mainstream media has reported these deals as being about licensing content for training large language models. But that coverage overlooked a key detail that we reported on last month: these deals give OpenAI access to real-time information which, according to the announcements, will be shown with attribution in the form of links.

That means that ChatGPT users will gain the ability to access real-time news and to use that information creatively within ChatGPT.

Dotdash Meredith Deal

Dotdash Meredith (DDM) is the publisher of big brand publications such as Better Homes & Gardens, FOOD & WINE, InStyle, Investopedia, and People magazine. The deal that was announced goes way beyond using the content as training data. The deal is explicitly about surfacing the Dotdash Meredith content itself in ChatGPT.

The announcement stated:

“As part of the agreement, OpenAI will display content and links attributed to DDM in relevant ChatGPT responses. …This deal is a testament to the great work OpenAI is doing on both fronts to partner with creators and publishers and ensure a healthy Internet for the future.

Over 200 million Americans each month trust our content to help them make decisions, solve problems, find inspiration, and live fuller lives. This partnership delivers the best, most relevant content right to the heart of ChatGPT.”

A statement from OpenAI gives credibility to the speculation that OpenAI intends to directly show licensed third-party content as part of ChatGPT answers.

OpenAI explained:

“We’re thrilled to partner with Dotdash Meredith to bring its trusted brands to ChatGPT and to explore new approaches in advancing the publishing and marketing industries.”

DDM also gets something else out of this deal: OpenAI will enhance DDM’s in-house ad targeting in order to show more tightly focused contextual advertising.

Le Monde And Prisa Media Deals

In March 2024, OpenAI announced a deal with two global media companies, Le Monde and Prisa Media. Le Monde is a French news publication and Prisa Media is a Spanish-language multimedia company. The interesting aspect of these two deals is that they give OpenAI access to real-time data in French and Spanish.

Prisa Media is a global Spanish-language media company based in Madrid, Spain, that comprises magazines, newspapers, podcasts, radio stations, and television networks. Its reach extends from Spain to the Americas. Its American media companies include publications in the United States, Argentina, Bolivia, Chile, Colombia, Costa Rica, Ecuador, Mexico, and Panama. That is a massive amount of real-time information in addition to a massive audience of millions.

OpenAI explicitly announced that the purpose of this deal was to bring this content directly to ChatGPT users.

The announcement explained:

“We are continually making improvements to ChatGPT and are supporting the essential role of the news industry in delivering real-time, authoritative information to users. …Our partnerships will enable ChatGPT users to engage with Le Monde and Prisa Media’s high-quality content on recent events in ChatGPT, and their content will also contribute to the training of our models.”

That deal is not just about training data. It’s about bringing current events data to ChatGPT users.

The announcement elaborated in more detail:

“…our goal is to enable ChatGPT users around the world to connect with the news in new ways that are interactive and insightful.”

As noted in our April 30th article, which revealed that OpenAI will show links in ChatGPT, OpenAI intends to show third-party content with links to that content.

OpenAI commented on the purpose of the Le Monde and Prisa Media partnership:

“Over the coming months, ChatGPT users will be able to interact with relevant news content from these publishers through select summaries with attribution and enhanced links to the original articles, giving users the ability to access additional information or related articles from their news sites.”

There are additional deals with other groups, such as The Financial Times, which also stress that the partnership will result in a new ChatGPT feature that allows users to interact with real-time news and current events.

OpenAI’s Monday May 13 Announcement

There are many clues that Monday’s announcement will be that ChatGPT users will gain the ability to interact with content about current events. This fits the terms of recent deals with news media organizations. Other features may be announced as well, but this is the one that many clues point to.

Watch Altman’s interview at Stanford University

Featured Image by Shutterstock/photosince