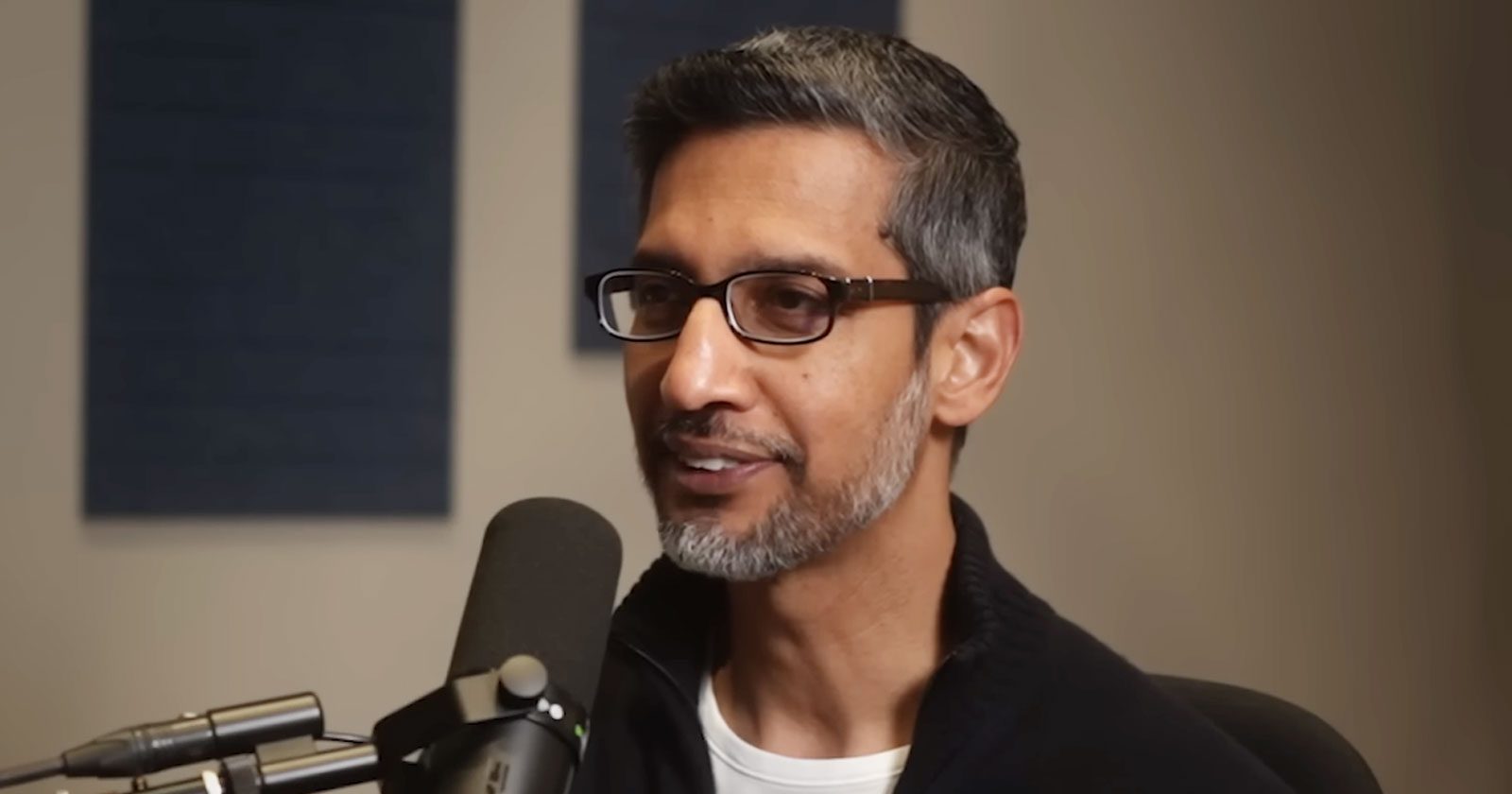

Google’s CEO, Sundar Pichai, responded to concerns about the impact of recent changes in Search and was repeatedly asked to clarify his position on the web ecosystem and how it fits into what he calls the next chapter of search. Pichai made these remarks in a recent interview on the Lex Fridman podcast.

Google CEO’s Commitment To Web Ecosystem Challenged

Lex Fridman challenged Pichai on whether Google will continue sending users to the human-created web. Pichai responded that supporting the web ecosystem is something he feels deeply about.

Fridman said:

“And the idea that AI mode will still take you to the web, to the human-created web?”

Pichai responded:

“Yes, that’s going to be a core design principle for us.”

Fridman followed up by noting that he has been asking more questions of Google’s AI Overviews and AI Mode and exploring further, but that he still wants to end up on the “human-created web.”

Pichai responded:

“It helps us deliver higher quality referrals, right? You know where people are like they have a much higher likelihood of finding what they’re looking for. They’re exploring. They’re curious. Their intent is getting satisfied more… That’s what all our metrics show.”

The interviewer added:

“It makes the humans that create the web nervous. The journalists are getting they’ve already been nervous.”

Sundar Pichai answered:

“Look, I think news and journalism will play an important role, you know, in the future we’re pretty committed to it, right? And so I think making sure that ecosystem… In fact, I think we’ll be able to differentiate ourselves as a company over time because of our commitment there. So it’s something I think you know I definitely value a lot and as we are designing we’ll continue prioritizing approaches.”

AI Is The Next Chapter Of Search?

Pichai said that user metrics for AI search are “encouraging” and called it the “next chapter of search,” signaling that AI search is inevitable and is not going away.

Search technology has always been in a state of constant change. The strongest effects were visible in the 2003 Florida update, the 2012 Penguin links update, the 2018 Medic update, and the more recent series of helpful content updates, all of which brought massive changes to search rankings. None of those changes was as ambitious and consequential as what the human-created web now faces with Google’s AI Overviews and AI Mode.

Speaking as someone who has been a part of search marketing for over 25 years, I believe Pichai may be understating the situation by calling it the next chapter in search. It may well be that Google AI Search is an entirely new book.

Search Is Evolving To More Context

Lex Fridman remarked that Google was legendary for its simple layout and ten blue links, saying that Google is now starting to “mess with that” and that there must surely have been internal battles over it.

Pichai subtly corrected Fridman’s suggestion that Google is only now moving away from the ten blue links, a layout that hasn’t been the norm for nearly 15 years. He explained that the shift to mobile is what moved Google away from ten blue links, as search evolved alongside advancing technology and users’ expectations for answers rather than links.

Pichai emphasized that Google remains the “front page of the Internet,” as Fridman put it, because of its commitment to making it easy for users to explore the web, now with more context.

Pichai answered:

“Look… in some ways when mobile came… people wanted answers to more questions, so we’re …constantly evolving it. But you’re right, this moment, …that evolution, because underlying technology is becoming much more capable. You can have AI give a lot of context.

But one of our important design goals though, is when you come to Google search. You’re going to get a lot of context. But you’re going to go and find a lot of things out on the web. So that will be true in AI mode. In AI overviews and so on.

But I think to our earlier conversation, we are still giving you access to links, but think of the AI as a layer which is giving you context summary. Maybe in AI mode you can have a dialogue with it back and forth on your journey.

But through it all, you’re kind of learning what’s out there in the world. So those core principles don’t change, but I think AI mode allows us to push… we have our best models there, models which are using search as a deep tool.

Really, for every query you’re asking, fanning out doing multiple searches, assembling that knowledge in a way so you can go and consume what you want to and that’s how we think about it.”

Advertising In AI Mode

Something that isn’t immediately apparent is that Google treats advertising as a form of content. Ads are not seen as an intrusion but as information that is relevant to users within the context of their interests.

Fridman next asked about advertising in AI Mode. Pichai responded that Google is currently focused on getting the “organic experience” right, but he also returned to the concept of context.

Pichai’s response:

“Two things.

Early part of AI mode will obviously focus more on the organic experience to make sure we are getting it right. I think the fundamental value of ads are it enables access to deploy the services to billions of people.

Second is, the reason we’ve always taken ads seriously is we view ads as commercial information, but it’s still information. And so we bring the same quality metrics to it.

I think with AI mode, to our earlier conversation, I think AI itself will help us over time, figure out the best way to do it.

Given we are giving context around everything, I think it will give us more opportunities to also explain, okay, here’s some commercial information. Like today, as a podcaster, you do it at certain spots and you probably figure out what’s best in your podcast.

There are aspects of that, but I think the underlying need of people value commercial information. Businesses are trying to connect to users. All that doesn’t change in an AI moment. But look, we will rethink it.”

Will AI Mode Replace Everything?

Lex Fridman asked whether Pichai foresees a time when AI Mode becomes the interface through which the Internet is filtered, and whether it could eventually replace the current combination of AI Overviews and ten blue links entirely.

Pichai answered:

“Our current plan is AI Mode is going to be there as a separate tab for people who really want to experience that, but it’s not yet at the level where our main search pages, but as features work, we’ll keep migrating it to the main page. And so you can view it as a continuum. AI Mode offers you the bleeding edge experience. But things that work will keep overflowing to AI Overviews in the main experience.”

Takeaways

The questions posed by Lex Fridman echo the fears and negative sentiment felt by many publishers about Google’s shift toward providing answers to queries instead of links to the open web.

Sundar Pichai repeatedly stated that Google intends to keep sending users to the human-created web, explaining that AI provides more context that encourages users to explore topics on the web in greater depth.

Those statements, however, are undermined by Google’s delay in enabling web publishers to accurately track referrals from AI Overviews and AI Mode. This creates the impression that publishers are an afterthought and fuels their skepticism about Google’s commitment to the human-created web. While it’s refreshing to hear Google’s CEO emphatically declare his concern for the web ecosystem, I believe it will take more concrete action from Google to overcome web publishers’ negative outlook on the current state of AI search.