When business leaders talk about digital transformation, their focus often jumps straight to cloud platforms, AI tools, or collaboration software. Yet, one of the most fundamental enablers of how organizations now work, and how employees experience that work, is often overlooked: audio.

As Genevieve Juillard, CEO of IDC, notes, the shift to hybrid collaboration made every space, from corporate boardrooms to kitchen tables, meeting-ready almost overnight. In the scramble, audio quality often lagged, creating what research now shows is more than a nuisance. Poor sound can alter how speakers are perceived, making them seem less credible or even less trustworthy.

“Audio is the gatekeeper of meaning,” stresses Juillard. “If people can’t hear clearly, they can’t understand you. And if they can’t understand you, they can’t trust you, and they can’t act on what you said. And no amount of sharp video can fix that.” Without clarity, comprehension and confidence collapse.

For Shure, which has spent a century advancing sound technology, the implications extend far beyond convenience. Chris Schyvinck, Shure’s president and CEO, explains that ineffective audio undermines engagement and productivity. Meetings stall, decisions slow, and fatigue builds.

“Use technology to make hybrid meetings seamless, and then be clear on which conversations truly require being in the same physical space,” says Juillard. “If you can strike that balance, you’re not just making work more efficient, you’re making it more sustainable, you’re also making it more inclusive, and you’re making it more resilient.”

When audio is prioritized on equal footing with video and other collaboration tools, organizations can gain something rare: frictionless communication. That clarity ensures the machines listening in, from AI transcription engines to real-time translation systems, can deliver reliable results.

The research from Shure and IDC highlights two blind spots for leaders. First, buying decisions too often privilege price over quality, with costly consequences in productivity and trust. Second, organizations underestimate the stress poor sound imposes on employees, intensifying the cognitive load of already demanding workdays. Addressing both requires leaders to view audio not as a peripheral expense but as core infrastructure.

Looking ahead, audio is becoming inseparable from AI-driven collaboration. Smarter systems can already filter out background noise, enhance voices in real time, and integrate seamlessly into hybrid ecosystems.

“We should be able to provide improved accessibility and a more equitable meeting experience for people,” says Schyvinck.

For Schyvinck and Juillard, the future belongs to companies that treat audio transformation as an integral part of digital transformation, building workplaces that are more sustainable, equitable, and resilient.

This episode of Business Lab is produced in partnership with Shure.

Full Transcript

Megan Tatum: From MIT Technology Review, I’m Megan Tatum, and this is Business Lab, the show that helps business leaders make sense of new technologies coming out of the lab and into the marketplace.

This episode is produced in partnership with Shure.

As companies continue their journeys towards digital transformation, audio modernization is an often overlooked but key component of any successful journey. Clear audio is imperative not only for quality communication, but also for brand equity, for internal and external stakeholders and even for the company as a whole.

Two words for you: audio transformation.

My guests today are Chris Schyvinck, President and CEO at Shure, and Genevieve Juillard, CEO at IDC.

Welcome, Chris and Genevieve.

Chris Schyvinck: It’s really nice to be here. Thank you very much.

Genevieve Juillard: Yeah, thank you so much for having us. Great to be here.

Megan Tatum: Thank you both so much for being here. Genevieve, we could start with you. Let’s start with some history, perhaps, for context. How would you describe the evolution of audio technology, and how have use cases and our expectations of audio evolved? What have been some of the major drivers throughout the years, and, more recently, would you consider the pandemic to be one of those drivers?

Genevieve: It’s interesting. If you go all the way back to 1976, Norman Macrae of The Economist predicted that video chat would actually kill the office, that people would just work from home. Obviously, that didn’t happen then, but the core technology for remote collaboration has actually been around for decades. Until the pandemic, though, most of us only experienced it in very specific contexts. Offices had dedicated video conferencing rooms, and most ran on expensive proprietary systems. And then almost overnight, everything, including literally the kitchen table, had to be AV-ready. The cultural norms shifted just as fast. Before the pandemic, it was perfectly fine to keep your camera off in a meeting, and now that’s seen as disengaged or even rude. That shift normalized video conferencing and hybrid meetings.

But in the rush to equip a suddenly remote workforce, we hit two big problems: supply chain disruptions and a massive spike in demand. High-quality gear was hard to get, so low-quality audio and video became the default. And here’s a key point: we now know from research that audio quality matters more than video quality for meeting outcomes. You can run a meeting without video, but you can’t run a meeting without clear audio. Audio is the gatekeeper of meaning. If people can’t hear clearly, they can’t understand you. And if they can’t understand you, they can’t trust you, and they can’t act on what you said. And no amount of sharp video can fix that.

Megan: Oh, true. It’s fascinating, isn’t it? And Chris, Shure and IDC recently released some research titled “The Hidden Influencer: Rethinking Audio Could Impact Your Organization Today, Tomorrow, and Forever.” The research highlighted the importance of audio that Genevieve is talking about in today’s increasingly virtual world. What did you glean from those results, and did anything surprise you?

Chris: Yeah, well, the research certainly confirmed a lot of hunches we’ve had through the years. Think about a company like Shure that’s been doing audio for 100 years; we just celebrated that anniversary this year.

Megan: Congratulations.

Chris: Our legacy business is more in the music and performance arena. And so it’s just what Genevieve said: yeah, you can have a performance and look at somebody, but that’s like 10% of it, right? 90% is hearing that person sing, perform, and talk. From our perspective, of course, we’ve always understood that clean, clear, crisp audio is what is needed in any setting. When you translate what’s happening on the stage into a meeting or collaboration space at a corporation, we’ve thought that it is just as important.

And we always had this hunch that if people don’t have the good audio, they’re going to have fatigue, they’re going to get a little disengaged, and the whole meeting is going to become quite unproductive. The research just really amplified that hunch for us because it really depicted the fact that people not only get kind of frustrated and disengaged, they might actually start to distrust what the other person with bad audio is saying or just cast it in a different light. And the degree to which that frustration becomes almost personal was very surprising to us. Like I said, it validated some hunches, but it really put an exclamation point on it for us.

Megan: And Genevieve, based on the research results, I understand that IDC pulled together some recommendations for organizations. What is it that leaders need to know and what is the biggest blind spot for them to overcome as well?

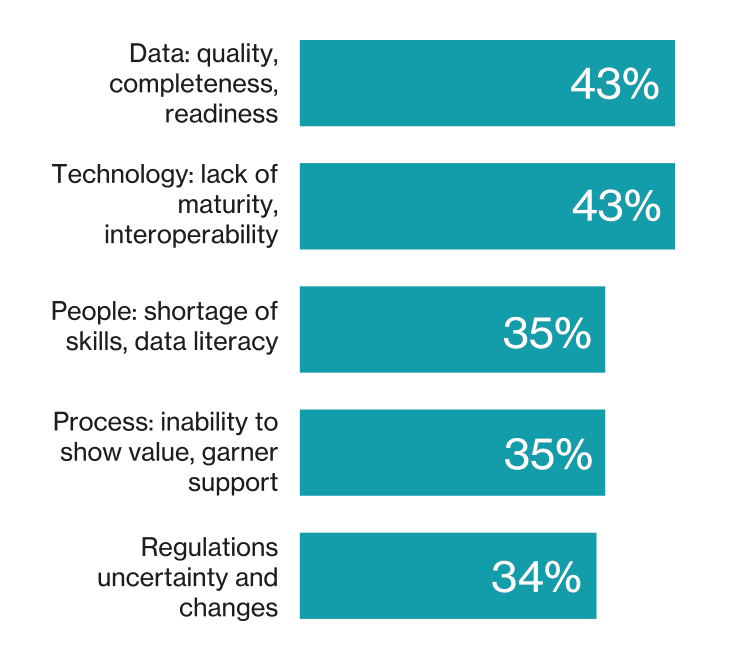

Genevieve: The biggest blind spot is this. If your microphone has poor audio quality, like Chris said, people will literally perceive you as less intelligent and less trustworthy. And by the way, that’s not an opinion; it’s what the science says. Yet when we surveyed first-time business buyers, the number one factor they used to choose audio gear was price. For repeat buyers, however, the top factor flipped to audio quality. My guess is they learned the lesson the hard way. The second blind spot, to Chris’s point, is the stress that bad audio creates. Poor sound forces your brain to work harder to decode what’s being said. That’s a cognitive load, and it creates stress. And over a full day of meetings, that stress adds up. Now, we don’t have long-term studies on the effects yet, but we do know that prolonged stress is something that every company should be working to reduce.

Good audio lightens that cognitive load. It keeps people engaged, and it levels the playing field, whether you’re in a room or halfway across the world. And here’s one that’s often overlooked: bad audio can sabotage AI transcription tools. As AI becomes more and more central to everyday work, that starts to become really critical. If your audio isn’t clear, the transcription won’t be accurate. And there’s a world of difference between working in, for example, the consulting department and the insulting department, and that is an actual example from the field.

The bottom line is you fix the audio, you cut friction, you save time, and you make meetings more productive.

Megan: I mean, it’s just a huge game changer, isn’t it, really? And given that, Chris, in your experience across industries, are audio technologies being included in digital transformation strategies and in artificial intelligence implementations? Or do we perhaps need a separate audio transformation?

Chris: Well, like I mentioned earlier, yes, people tend to initially focus on the visual platform, but increasingly audio is really coming into focus. And I’d hate to split audio off as a separate strategy, because at the same time, we, as audio experts, are trying to seamlessly integrate audio into the rest of the ecosystem. It really does need to be put on an equal footing with the rest of the components in that ecosystem. And to Genevieve’s point, as we see audio and video systems with more AI functionality, such as real-time translation, voice recognition, and being able to attribute who said what in a meeting and capture action items, I think that’s really starting to elevate the importance of clear audio. It’s got to be part of a really comprehensive collaboration plan that helps a company figure out what its whole digital transformation is about. It just has to be included in that comprehensive plan, and put on equal footing with the rest of the components in that system.

Megan: Yeah, absolutely. And in the broader landscape, Genevieve, in terms of discussing the importance of audio quality, what have you noticed across research projects about the effects of good and bad audio, not only from that company perspective, but from employee and client perspectives as well?

Genevieve: Well, let’s start with employees.

Megan: Sure.

Genevieve: Bad audio adds friction you don’t need; we’ve talked about this. When you’re straining to hear or make sense of what’s being said, your brain is burning energy on decoding instead of contributing. That frustration builds up, and by the end of the day, it hurts productivity. From a company perspective, the stakes get even higher. Meetings are where decisions happen, or at least where they’re supposed to happen. And if people can’t hear clearly, decisions get delayed, mistakes creep in, and the whole process slows down. Poor audio doesn’t just waste time; it chips away at the ability to move quickly and confidently. And then there’s the client experience. Whether it’s in sales, customer service, or any external conversation, poor audio can make you sound less credible and even less trustworthy. Again, that’s not my opinion. That’s what the research shows. So that’s quite a big risk when you’re trying to close a deal or solve a major problem.

The takeaway is that good audio matters; it’s a multiplier. It makes meetings more productive, and it can help decisions happen faster and client interactions be stronger.

Megan: It’s just so impactful, isn’t it, in so many different ways. I mean, Chris, how are you seeing these research results reflected as companies work through digital and AI transformations? What is it that leaders need to understand about what is involved in audio implementation across their organization?

Chris: Well, like I said earlier, I do think that audio is finally getting a bit of its place in the spotlight, up there with our cousins over on the video side. Audio is not just a peripheral aspect anymore. It’s a very integral part of that comprehensive collaboration plan I was talking about earlier. And we think about how we can contribute solutions that are easier to use for our end users, because if you create something complicated, you’re back to the days gone by: you walk into a room, it’s a very complicated system, and you need to find the right person who knows how to run it. Increasingly, you just need plug-and-play solutions. We’re also thinking about a more sustainable strategy for our solutions, where we make really high-quality hardware. We’ve done that for a hundred years. People will come up to me and tell the story of the SM58 microphone they bought in 1980 and how they’re still using it every day.

We know how to do that part of it. If somebody is willing to make that investment upfront and put some high-quality hardware into their system, we are now getting to the point where updates can be handled via software downloads or cloud connectivity, providing a sustainable solution for people over time.

More broadly, in our industry, we’re collaborating with other industry partners to go in that direction: make something very simple, so anybody can walk into a room, or sit down at their individual at-home setup, and get started easily. And I think we have the right industry groups and associations to help make sure that the ecosystems have the proper standards and that everything is interoperable within a system. We’re all heading in that direction with the end user in mind.

Megan: Fantastic. When the internet of things was emerging, efforts began to create data ecosystems, and it seems there’s an argument to be made that we need audio ecosystems as well. I wonder, Chris, what might an audio ecosystem look like, and what would be involved in implementation?

Chris: Well, I think it does have to be part of that bigger ecosystem I was just talking about, where we collaborate with others in the industry and make sure that we’re all playing by the same set of rules, protocols, and standards. When you think about compatibility across all the devices that sit in a room, or in your at-home setup, making sure that the audio quality is as good as it can be and that you can interoperate with everything else in the system has become paramount in our day-to-day work. Your hardware has to be scalable, as I alluded to a moment ago. You have to figure out how to integrate with existing technologies and different platforms.

We were joking when we came into this session that when you move off the platform at your company, say you’re on Teams and you go into a Zoom setting or a Google setting, you really have to figure out how to adapt to all the different platforms that are out there. In the ecosystem we’re trying to build, we want audio to be on equal footing with the rest of the components in that system. And people really do understand that if you want extra functionality in meetings, and you want to be able to transcribe or take notes and all of that, audio is an absolutely critical piece.

Megan: Absolutely. And speaking of all those different platforms and use cases, Genevieve, that goes back to this idea that in audio, one size does not fit all, and needs may change. How can companies plan their audio implementations to be flexible enough to meet current needs and to grow with future advancements?

Genevieve: I’m glad you asked this question. Even years after the pandemic, many companies are still trying to get the balance right between remote and in-office work, and how to support it. But even if a company has a strict in-person, return-to-office policy, the reality is that hybrid work still isn’t going away for that company. They may have teams across cities or countries, and clients and external stakeholders will have their own office preferences that they have to adapt to. Supporting hybrid work is actually becoming more important, not less. And our research shows that companies are leaning into, not away from, hybrid setups. About one third of companies are now redesigning or resizing office spaces every single year. For large organizations with multiple sites and staggered leases, that’s a moving target. It’s really important that they have audio solutions that can work before, during, and after all of those changes that they’re constantly making. That’s where flexibility becomes really important. Companies need to buy not just for right now, but for the future.

And so here’s IDC’s pro tip: as a company, make sure you go with a provider that offers top-notch audio quality and also has strong partnerships and certifications with the big players in communications technology, because that will save you money in the long run. Your systems will stay compatible, your investments will last longer, and you won’t be scrambling when the next shift happens.

Megan: Of course. And speaking of building for the future, as companies begin to include sustainability in their company goals, Chris, I wonder how audio can play a role in those sustainability efforts, and how that might play into the return on investment of building out a high-quality audio ecosystem?

Chris: Well, I totally agree with what Genevieve just said about hybrid work not going anywhere. You get all of those big headlines about XYZ company telling people to get back into the office. But I saw a fantastic piece of data just last week that showed the percentage of in-office hours for American workers versus remote work. It has basically flatlined since 2022. This is our new way of working. And of course, like Genevieve mentioned, you have people in all these different locations. In a strange way, living through the pandemic taught us that we can do some things without having to hop on an airplane and travel somewhere. Certainly that helps with a more sustainable strategy over time: you’re saving on travel and able to get things done much more quickly.

And then from a product offering perspective, I’ll go back to the vision I was painting earlier, where we and others in our industry see that we can create great, solid hardware platforms. We’ve done it for decades, and now, with advancements around AI, software-enabled products, and everything else that has happened in probably the last decade, we can deliver enhancements, additions, and new functionality to people in simpler ways on existing hardware. I think we’re all careening down this path toward a much more sustainable ecosystem for all collaboration. It’s really quite an exciting time, and that pays off: for any company implementing a system, the ROI is going to be much better in the long run.

Megan: Absolutely. And Genevieve, what trends around sustainability are you seeing? What opportunities do you see for audio to play into those sustainability efforts going forward?

Genevieve: Yeah, similar to Chris. In some industries, there’s still a belief that the best work happens when everyone’s in the same room. And yes, face-to-face time is really important for building relationships, for brainstorming, for closing big deals, but it does come at a cost: the carbon footprint of daily commutes, the sales visits, the constant business travel. And then there’s the basic consideration, as we’ve talked about, of pure practicality. The good news is that with the right AV setup, especially high-quality audio, many of those interactions can happen virtually without losing effectiveness. Chris said it, and our research shows it.

Our research shows that virtual meetings can be just as productive as in-person ones, and every commute or flight you avoid makes a measurable sustainability impact. Personally, I don’t think the takeaway is to replace all in-person meetings, but instead to be intentional. Use technology to make hybrid meetings seamless, and then be clear on which conversations truly require being in the same physical space. If you can strike that balance, you’re not just making work more efficient, you’re making it more sustainable, you’re also making it more inclusive, and you’re making it more resilient.

Megan: Such an important point. And let’s close with a future-forward look, if we can. Genevieve, what innovations or advancements in the audio field are you most excited to see come to fruition, and what interesting use cases do you see on the horizon?

Genevieve: I’m especially interested in how AI and audio are converging. We’re now seeing AI that can identify and isolate human voices in noisy environments. For example, right now, there are some jets flying overhead. It’s very loud in here, but I suspect you may not even know that that’s happening.

Megan: We can’t hear a thing. No.

Genevieve: Right. That technology is pulling voices forward so that conversations like ours are crystal clear. And that’s a big deal, especially as companies invest more and more in AI tools for translating, transcribing, and summarizing meetings. But as we’ve talked about before, AI is only as good as the audio it hears. If the sound is poor or a word gets misheard, the meaning can shift entirely. Sometimes that’s just inconvenient, or it can even be funny. But in really high-stakes settings, like healthcare, for example, a single mis-transcribed word can have serious consequences. That’s why our position is that high-quality audio is critical: it’s necessary for making AI-powered communication accurate, trustworthy, and useful, because when the input is clean, the output can actually live up to its promise.

Megan: Fantastic. And Chris, finally, what are you most excited to see developed? What advancements are you most looking forward to seeing?

Chris: Well, I really do believe that this is one of the most exciting times I’ve lived through in my career: just the pace at which technology is moving, the sudden emergence of all things AI. I was actually in a roundtable session of CEOs from lots of different industries yesterday, and the facilitator was talking about change management inside companies as they go through all of these technology shifts, and some of the fear that people have around AI. The facilitator asked each of us to give one word that describes how we’re feeling right now. The first CEO who went used the word dread. And that absolutely floored me, because yes, you enter these eras with some skepticism, trying to figure out how to make things work and go down the right path. But my word was truly optimism.

When I look at all the ways that we are able to deliver better audio to people more quickly, there are so many opportunities in front of us. We’re working on things outside of AI, like the algorithms Genevieve just mentioned that filter out the sounds you don’t want entering a meeting. We’ve been doing that for quite a long time now. There are also opportunities for real-time audio improvements and enhancements, and to make audio more personal for people. How could they very simply adjust their audio, perhaps through voice commands? There shouldn’t have to be a whole lot of techie settings that come along with our solutions.

We should be able to provide improved accessibility and a little bit more equitable meeting experience for people. And we’re looking at technology solutions around immersive audio. How can you feel a bit more engaged in the meeting, creating realistic virtual experiences, if you will? There are just so many opportunities in front of us, and I can picture a day when you walk into a room and you tell the room, “Hey, call Genevieve. We’re going to have a meeting for an hour, and we might need to have Megan on call to come in at a certain time.”

And all of this will just be very automatic, very seamless, and we’ll be able to see each other and talk at the same time. And this isn’t years away. This is happening really, really quickly. I do think it’s a really exciting time for audio, and for collaboration as a whole, in our industry.

Megan: Absolutely. Sounds like there’s plenty of reason to be optimistic. Thank you both so much.

That was Chris Schyvinck, President and CEO at Shure. And Genevieve Juillard, CEO at IDC, whom I spoke with from Brighton, England.

That’s it for this episode of Business Lab. I’m your host, Megan Tatum. I’m a contributing editor at Insights, the custom publishing division of MIT Technology Review. We were founded in 1899 at the Massachusetts Institute of Technology, and you can find us in print, on the web, and at events each year around the world. For more information about us and the show, please check out our website at technologyreview.com.

This show is available wherever you get your podcasts. And if you enjoy this episode, we hope you’ll take a moment to rate and review us. Business Lab is a production of MIT Technology Review, and this episode was produced by Giro Studios. Thanks for listening.

This content was produced by Insights, the custom content arm of MIT Technology Review. It was not written by MIT Technology Review’s editorial staff. It was researched, designed, and written entirely by human writers, editors, analysts, and illustrators. This includes the writing of surveys and collection of data for surveys. AI tools that may have been used were limited to secondary production processes that passed thorough human review.