SEO and marketing are driven by the choices that you make, and those choices should be guided by clear, trustworthy data.

Having a range of sources that you track on a regular basis helps you stay informed and speak with authority in meetings with the C-suite and clients.

Ground your strategies in real-world insights that answer questions such as: Should paid search budgets go up or down? Which international markets are worth expanding into? How is traffic shifting toward social platforms or retail media networks?

The following reports, some well known and some less so, are worth getting familiar with so you always have well-informed answers to back up your choices.

Financial & Markets Data

These high-level reports provide the map of the digital economy. They show where advertising dollars are flowing, where they are pooling, and where they might flow next.

They give you the “big picture” context for your own budget decisions, allowing you to speak the language of finance and justify your strategy with market-wide data.

IAB/PwC Internet Advertising Revenue Report

Cadence: Annual

Typical release: April

Access: Free, no registration required

Link

Why It Matters:

This report answers the question: Is the digital ad market still growing? For over 25 years, the IAB has been the definitive source for U.S. internet advertising revenue. Its historical data charted the shifts from dial-up to broadband, and then from desktop to mobile. Today, it’s charting the next great reallocation of capital. When your CFO wants authoritative numbers on the industry’s health, this is the gold standard.

The report surveys companies representing over 86% of U.S. internet ad revenue, so its figures are based on revenue reported by the companies themselves rather than on modeled estimates. The format breakdowns show how much capital is flowing from established channels like traditional search into high-growth areas like social video, connected TV, and retail media.

Methodology & Limitations:

U.S.-only data. Figures reflect revenue reported by participating companies, which may lag actual spending by one quarter, and exclude smaller ad networks below the survey threshold.

MAGNA Global Ad Forecast

Cadence: Biannual

Typical release: June & December

Access: Free summary; full datasets for IPG Mediabrands clients

Link

Why It Matters:

If the IAB report is a photograph of last year, MAGNA’s forecast is a detailed blueprint of the next 18 months. It helps you anticipate whether paid search costs (CPCs) are likely to spike based on an influx of advertiser demand. Their analysis is global, allowing you to see which regions are heating up and which are cooling down.

Their retail media breakouts are useful for making the case to invest in product feed optimization and marketplace SEO. For example, if MAGNA forecasts a 20% surge in retail media while projecting only 5% growth in search, it’s a signal that commercial intent is migrating.

The twice-yearly cadence is its secret weapon. The December update gives you fresh data for annual planning, while the June update allows for mid-year course corrections, making your strategy more agile.

Methodology & Limitations:

The forecast model relies on historical patterns, economic indicators, and advertiser surveys. It is subject to revision due to macroeconomic changes. Coverage varies globally, with the most robust data in North America and Europe, while forecasts for the China market carry higher uncertainty.

Global Entertainment & Media Outlook (PwC)

Cadence: Annual

Typical release: July

Access: Paid subscription; free overview and highlights

Link

Why It Matters:

This is your five-year planning guide, the ultimate tool for long-term strategic thinking.

While other reports focus on the next year, PwC projects revenue and user growth across 53 countries and 15+ media segments five years ahead. This macro view can help build a business case for large, multi-year investments.

Are you considering a major push into podcasting or developing a streaming video channel? This report’s audio and video forecasts can help you size the market and project a realistic timeline for ROI. Its search advertising forecasts by region can help you de-risk international expansion by prioritizing countries with high-growth projections.

The methodology takes into account regulatory changes, technology adoption curves, and demographic shifts. It can help you build strategies that are resilient to short-term fluctuations because they’re aligned with long-term trends.

Methodology & Limitations:

Full access is paid, which limits how broadly the information can be shared. Keep in mind that five-year forecasts are naturally uncertain and are revised every year. The projections also rest on assumptions about the regulatory environment, so unexpected regulatory changes can shift them.

Company Earnings Reports

While market reports offer an overview of the economy, the quarterly earnings from key companies reveal the reality of the platforms that are integral to the industry.

Financial data exposes the strategic priorities and weaknesses of search platforms, which can offer insights into where they might make significant changes.

Alphabet Quarterly Earnings

Cadence: Quarterly (fiscal year ends December 31)

Typical release: Q1 (late Apr), Q2 (late Jul), Q3 (late Oct), Q4 (late Jan/early Feb)

Access: Free

Link

Why It Matters:

This is the single most important quarterly report for anyone in search.

The key metric is revenue for the “Google Search & other” line; its growth rate tells you whether the core business is healthy, plateauing, or declining. Compare this to the growth rate of “YouTube ads” to see where user attention and ad dollars are shifting.

A secondary indicator to watch is “Google Cloud” revenue. As it grows, expect more integrations between Google’s enterprise tools and its core search products.

Pay close attention to Traffic Acquisition Costs (TAC), which include the billions Google pays partners like Apple and Samsung to be the default search engine. If TAC is growing faster than Search revenue, it’s a major red flag that Google is paying more for traffic that is becoming less profitable.
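The TAC check described above is simple arithmetic once you have the figures. The following minimal Python sketch assumes you copy quarterly TAC and Search revenue figures from Alphabet’s earnings releases by hand and compare the same quarter across two consecutive years; the function names are hypothetical and not part of any Alphabet data feed.

```python
def yoy_growth(current: float, prior: float) -> float:
    """Year-over-year growth as a fraction (0.10 means 10%)."""
    if prior <= 0:
        raise ValueError("prior-period value must be positive")
    return (current - prior) / prior


def tac_outpacing_search(tac_now: float, tac_prior: float,
                         search_now: float, search_prior: float) -> bool:
    """Return True when TAC grows faster than Search revenue year over year.

    Hypothetical helper: inputs are dollar figures for the same quarter in
    consecutive years, entered manually from the earnings releases.
    """
    return yoy_growth(tac_now, tac_prior) > yoy_growth(search_now, search_prior)
```

Comparing the same quarter year over year, rather than quarter over quarter, keeps seasonal swings such as holiday ad spending from masking the trend.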

In the current environment, the most critical part of the release is the earnings call, specifically the management commentary and the analyst Q&A. Look for specific language about AI Overviews’ impact on query volume, user satisfaction, and any hint of revenue cannibalization.

Methodology & Limitations:

The “Google Search & other” revenue line bundles search with Maps, Gmail, and other properties, which prevents isolated analysis of search revenue. AI Overviews metrics are disclosed selectively and not on a comprehensive quarterly basis. Geographic revenue breakdowns are limited to broad regions.

Microsoft Quarterly Earnings

Cadence: Quarterly (fiscal year ends June 30)

Typical release: Q1 (Oct), Q2 (Jan), Q3 (Apr), Q4 (Jul)

Access: Free

Link

Why It Matters:

Microsoft’s report provides a direct scorecard for Bing’s performance via its “search and news advertising revenue” figures. This tells you whether the search engine is gaining or losing ground. Their integration of OpenAI’s models into Bing has made this a number to watch.

However, the bigger story often lies in the Intelligent Cloud and Productivity and Business Processes segments. Pay attention to commentary on the growth of Microsoft 365 Copilot and enterprise search features within Teams and SharePoint. This offers a view of how millions of professionals are finding information and getting answers without opening a traditional web browser.

Methodology & Limitations:

The earnings release reports search and news advertising revenue mainly as a percentage growth rate rather than in dollar amounts, which makes market share calculations more complex. Enterprise search usage metrics are rarely shared openly, and geographic breakdowns are limited. Bing’s market share has to be inferred by comparing revenue growth with traffic data.

Amazon Quarterly Results

Cadence: Quarterly

Typical release: Q1 (late Apr), Q2 (late Jul), Q3 (late Oct), Q4 (late Jan/early Feb)

Access: Free

Link

Why It Matters:

This report is one of the clearest signals of how much commercial search is shifting from Google to Amazon.

In recent years, Amazon’s advertising business has often grown faster than its AWS cloud unit. That’s an indicator of where brands are investing to capture customers at the point of purchase. The year-over-year ad revenue growth rate can help justify investment in Amazon SEO and enhanced content.

Look beyond the ad revenue to their commentary on logistics. When they discuss the expansion of their same-day delivery network, they are talking about widening their competitive moat against all other ecommerce and search players. A Prime member who can get a product in four hours has little incentive to start their product search on Google.

Also, look for the percentage of units sold by third-party sellers (typically 60-62%) to quantify the scale of the opportunity for brands on their marketplace.

Methodology & Limitations:

Advertising revenue is not separated by format (such as sponsored products, display, or video), and there is no disclosure of revenue by product category. International advertising revenue breakdowns are limited, and delivery network metrics are provided only selectively.

Apple Quarterly Results

Cadence: Quarterly (fiscal year ends in late September)

Typical release: Q1 (late Jan/early Feb), Q2 (late Apr/early May), Q3 (late Jul/early Aug), Q4 (late Oct/early Nov)

Access: Free

Link

Why It Matters:

Apple’s report is a barometer for the health of the mobile ecosystem and the impact of privacy. The key number is “Services” revenue, which includes the App Store, Apple Pay, and their burgeoning advertising business. When this number accelerates, expect more aggressive App Store features and search ads that can siphon traffic and clicks away from the mobile web.

Apple’s management commentary on privacy can have meaningful consequences for the digital marketing industry. Look for any hints about upcoming privacy features that could further limit tracking and attribution in search. Apple has historically given advance notice of major changes: App Tracking Transparency was announced in June 2020 but not enforced until iOS 14.5 in April 2021, giving marketers months to prepare for the attribution shift.

Snap Quarterly Results

Cadence: Quarterly

Typical release: Q1 (late Apr), Q2 (late Jul), Q3 (late Oct), Q4 (late Jan/early Feb)

Access: Free

Link

Why It Matters:

Snap’s daily active user growth and engagement patterns offer clues about where Gen Z discovers information. When DAU growth accelerates in markets where your organic search traffic is flat, it may be a sign that younger audiences there are discovering information outside traditional search.

Snap reports specific metrics on AR lens usage. These metrics show you how users interact with visual and augmented reality content, previewing how visual search might evolve.

Methodology & Limitations:

Geographic breakdowns are limited to broad regions. Engagement metrics emphasize time spent rather than search or discovery behavior specifically. Revenue per user varies significantly by region, making it difficult to draw global conclusions. The data mainly reflects Gen Z behavior, not wider demographics.

Pinterest Quarterly Results

Cadence: Quarterly

Typical release: Q1 (late Apr/early May), Q2 (late Jul/early Aug), Q3 (late Oct/early Nov), Q4 (late Jan/early Feb)

Access: Free

Link

Why It Matters:

Pinterest’s monthly active user (MAU) growth shows you which markets embrace visual discovery. Their MAU growth rates by region reveal geographic patterns in visual search adoption, often previewing trends that influence how people search everywhere.

Their average revenue per user by region indicates where visual commerce drives revenue compared to just browsing, helping you decide whether Pinterest optimization deserves resources for your business.

Methodology & Limitations:

MAU counts only authenticated users, excluding logged-out traffic. ARPU includes all revenue types, not just search or discovery-related income. There is limited disclosure regarding search query volume or conversion behavior.

Internet Usage & Infrastructure

While earnings reports reveal the financial outcomes, they are lagging indicators of a more fundamental resource: human attention.

The following reports measure the underlying user behavior that drives these financial results, offering insight into audience attention and interaction.

Digital 2025 – Global Overview (We Are Social & Meltwater)

Cadence: Annual

Typical release: February

Access: Free with registration

Link

Why It Matters:

This report settles internal debates about platform usage with comprehensive, global data. It provides country-by-country breakdowns of everything from social media penetration and time spent on platforms to the most-visited websites and most-used search queries. It’s a reality check against media hype.

The platform adoption curves reveal which social networks are gaining momentum and which are stagnating. The data on time spent in social apps versus time on the “open web” is worth watching, as it provides some explanation for why website engagement metrics may be declining.

Methodology & Limitations:

Data is compiled from a variety of sources that use different methods. Some countries have smaller sample sizes, and certain metrics come from self-reported surveys. Generally, developed markets enjoy higher data quality compared to emerging markets. Additionally, how platform usage is defined can differ depending on the source.

Measuring Digital Development (ITU)

Cadence: Annual

Typical release: November

Access: Free

Link

Why It Matters:

The ITU, a specialized agency of the United Nations, provides the data for sizing total addressable markets for international SEO.

Its connectivity metrics show which countries have the infrastructure to support video-heavy or interactive content strategies, versus emerging markets where mobile-first, lightweight content is still essential.

The most actionable metric for spotting future growth is “broadband affordability.” The UN Broadband Commission’s target is for entry-level broadband to cost less than 2% of monthly gross national income (GNI) per capita. When the cost of a basic internet plan in a developing country drops below that 2% threshold, it’s often a sign that the market is poised for growth.
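The affordability check comes down to a single ratio. The following minimal Python sketch assumes you have, for each market, the monthly price of a basic plan and annual income per capita (for example, GNI per capita) in the same currency; the market names and helper functions are hypothetical.

```python
from typing import Dict, List, Tuple

# The 2% benchmark: a basic plan should cost under 2% of average monthly income.
AFFORDABILITY_THRESHOLD = 0.02


def affordability_ratio(monthly_plan_price: float, annual_income_per_capita: float) -> float:
    """Share of average monthly income needed for a basic internet plan."""
    if annual_income_per_capita <= 0:
        raise ValueError("income must be positive")
    monthly_income = annual_income_per_capita / 12
    return monthly_plan_price / monthly_income


def markets_below_threshold(markets: Dict[str, Tuple[float, float]]) -> List[Tuple[str, float]]:
    """Return (market, ratio) pairs under the threshold, most affordable first.

    `markets` maps a market name to (monthly plan price, annual income per capita).
    """
    ratios = [(name, affordability_ratio(price, income))
              for name, (price, income) in markets.items()]
    below = [pair for pair in ratios if pair[1] < AFFORDABILITY_THRESHOLD]
    return sorted(below, key=lambda pair: pair[1])
```

Running this each year against the latest price data shows which markets have recently crossed the threshold, which is the signal the ITU section points to.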

Methodology & Limitations:

Government-reported data quality differs across countries, with some nations providing infrequent or incomplete reports. Affordability calculations rely on national averages that might not account for regional differences. Additionally, infrastructure metrics often lag behind actual deployment by one to two years.

Global Internet Phenomena (Sandvine)

Cadence: Annual

Typical release: March-April

Access: Free with registration

Link

Why It Matters:

This report helps you understand what people are actually doing online by tracking which applications consume the most internet bandwidth. In nearly every market analyzed, video streaming is the number one consumer of bandwidth, often accounting for 50-60% or more of all traffic.

This is strong evidence that optimizing for video is no longer a niche strategy; it’s a primary way people consume information and entertainment. The application rankings show whether YouTube or TikTok is the dominant force in your target markets, revealing which platform deserves the lion’s share of your video optimization efforts.

This data provides the “why” behind other trends, such as the explosive growth of YouTube’s ad revenue seen in Alphabet’s earnings.

Methodology & Limitations:

The report is based on ISP-level traffic data from Sandvine’s partner networks. Coverage varies by region, with the strongest data in North America and Europe. Mobile and fixed broadband breakdowns are not always comparable. The data excludes encrypted traffic that can’t be categorized, and sampling includes large ISPs but does not cover the entire market.

Privacy & Policy

Knowing where your audience is operating is only part of the challenge; understanding the rules of engagement is equally important. As privacy regulations and policies evolve, they set new guidelines for digital marketing, fundamentally altering how we target and measure audiences.

Data Privacy Benchmark Study (Cisco)

Cadence: Annual

Typical release: January

Access: Free with registration

Link

Why It Matters:

This report helps turn the idea of privacy into business results that everyone can understand. It highlights how good privacy practices can positively influence sales, customer loyalty, and brand reputation.

Whenever you’re making a case for investing in responsible data handling or privacy-focused technologies, this report offers valuable ROI insights. It shows how many organizations believe customers would stop buying from them if their data weren’t properly protected, giving you strong support to promote user-friendly policies that also boost profits.

Methodology & Limitations:

The survey methodology relies on self-reported data. Respondents mainly come from large enterprises. The geographic focus is on developed markets. ROI figures are based on correlation rather than establishing causation. Privacy maturity levels are self-assessed by respondents rather than independently verified.

Ads Safety Report (Google)

Cadence: Annual

Typical release: March

Access: Free

Link

Why It Matters:

Google’s enforcement data provides a glimpse into what may soon impact organic search results. Policies sometimes appear in Google Ads before similar standards show up in the search quality guidelines. Enforcement against publishers shows which types of sites are being banned from AdSense, which can be an early warning sign for manual actions in organic search.

When enforcement intensifies in fields like crypto, healthcare, or financial services, it can signal that stricter E-E-A-T standards are coming for organic results. Google’s crackdowns on ads for misrepresentation or coordinated deceptive behavior are sometimes followed by similar crackdowns in organic results.

Methodology & Limitations:

Google-reported data reflects enforcement priorities, not industry-wide violation rates. Detection methods, thresholds, and policies are not fully disclosed, and there is no independent verification of the metrics.

Media Use & Trust

Digital News Report (Reuters Institute)

Cadence: Annual

Typical release: June

Access: Free

Link

Why It Matters:

The Reuters Institute report explores how content discovery differs across countries and demographics. Its analysis of more than 40 markets details how people find news: directly, or through search, social media, or aggregators.

A key insight is the concept of “side-door” traffic: visitors who arrive via search, social feeds, mobile alerts, or aggregator apps rather than going directly to a publisher’s homepage or app. In most developed nations, this type of traffic now makes up the majority, even for leading publishers.

This highlights the need for a distributed content strategy, emphasizing that your brand and expertise should be discoverable in many channels beyond Google.

Methodology & Limitations:

Survey-based, with about 2,000 online respondents per country. It excludes offline or low-connectivity users and overrepresents developed markets. Self-reported news habits may differ from actual behavior, and the definition of “news” varies by culture and person.

Edelman Trust Barometer

Cadence: Annual

Typical release: January

Access: Free

Link

Why It Matters:

In today’s world of AI-generated content and widespread misinformation, trust is more important than ever. Edelman has been tracking public trust in four key institutions – Business, Government, Media, and NGOs – for over 20 years. Their findings offer a helpful guide for establishing your content’s authority.

When the data shows that “Business” is trusted more than “Media” in a particular country, it suggests that thought leadership from your company’s own qualified experts can be more believable and relatable than just quoting traditional news outlets.

The differences across generations and regions are especially useful to understand. They reveal which types of authority signals and credentials matter most for different audiences, giving you a clear, data-driven way to build E-E-A-T.

Methodology & Limitations:

Survey sample favors educated, high-income populations in most countries, with 1,000-1,500 respondents per nation. Trust is a self-reported perception, not behavioral; country choice focuses on larger economies. Trust in institutions may not reflect trust in brands or sources.

Digital Media Trends (Deloitte Insights)

Cadence: Annual

Typical release: April

Access: Free executive summary

Link

Why It Matters:

Deloitte monitors streaming service adoption, content consumption trends, and attention fragmentation, all of which influence your content strategy. Their research on subscription usage and churn rates shows how users distribute their entertainment budgets.

Their insights into ad-supported versus subscription preferences indicate which business models resonate with different demographics and content types. Data on cord-cutting and cord-never behaviors illustrate how various generations consume media.

Their analysis of social media patterns reveals which platforms are losing popularity, providing early signals that it may be time to diversify channels.

Methodology & Limitations:

This U.S.-focused study mainly involves higher-income digital adopters, and its streaming data centers on entertainment, which may not reflect overall information habits. The sample size of 2,000-3,000 limits how finely results can be broken down by demographic, and trends may lag six to 12 months behind mainstream adoption.

How To Use These Reports

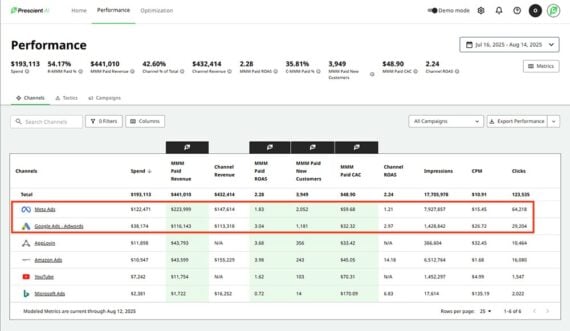

Use this set of reports to connect market trends and signals with your search and content decisions.

Start with quarterly earnings and ad forecasts to calibrate budgets after core updates or seasonal swings. When planning campaigns, check platform adoption and discovery trends to decide where your audience is shifting and how they’re finding information.

For content format choices, compare attention and creative studies with what’s working in Search, YouTube, and short-form video to guide what you produce next.

Review earnings and forecasts each quarter when you set goals. Scan broader landscape studies when you refresh your annual plan. When something changes fast, cross-check at least two independent sources before you move resources. Look for consistency in your data and don’t act on one-off spikes.
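The cross-checking rule can be written down explicitly so a whole team applies it the same way. The minimal Python sketch below assumes each source is a list of comparable readings (for example, quarterly growth rates) ordered oldest to newest. The two-source minimum comes from the guidance above; requiring two consecutive moves in the same direction, and treating any disagreement between sources as a reason to wait, are hypothetical choices for filtering out one-off spikes.

```python
from typing import Dict, List


def sustained_direction(series: List[float], periods: int = 2) -> int:
    """+1 if each of the last `periods` changes is up, -1 if each is down, else 0.

    With the default of two periods, a one-off spike (a jump followed by a
    reversal, or a single jump after a flat run) returns 0.
    """
    if len(series) < periods + 1:
        return 0
    recent = series[-(periods + 1):]
    changes = [later - earlier for earlier, later in zip(recent, recent[1:])]
    if all(change > 0 for change in changes):
        return 1
    if all(change < 0 for change in changes):
        return -1
    return 0


def confirmed_shift(sources: Dict[str, List[float]], min_sources: int = 2,
                    periods: int = 2) -> int:
    """Direction agreed on by at least `min_sources` sources with no source
    pointing the other way; 0 means hold off on moving resources."""
    directions = [sustained_direction(s, periods) for s in sources.values()]
    ups, downs = directions.count(1), directions.count(-1)
    if ups >= min_sources and downs == 0:
        return 1
    if downs >= min_sources and ups == 0:
        return -1
    return 0
```

Returning 0 on any disagreement is deliberately conservative; a team comfortable with more risk could relax that to a simple majority.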

Looking Ahead

The best SEO and marketing strategies are built on more than instinct; they’re grounded in data that stands up to scrutiny. By making these reports part of your regular reading cycle, you’ll have a solid basis for decisions you can justify.

Each dataset offers a different lens that allows you to see both the macro trends shaping the industry and the micro signals to guide your next move.

The marketers who know where to find the right data are the ones who can be strategic rather than reactive.


Featured Image: Roman Samborskyi/Shutterstock