In the post-“growth-at-all-costs” era, B2B go-to-market (GTM) teams face a dual mandate: operate with greater efficiency while driving measurable business outcomes.

Many organizations see AI as the definitive means of achieving this efficiency.

The reality is that AI is no longer a speculative investment. It has emerged as a strategic enabler to unify data, align siloed teams, and adapt to complex buyer behaviors in real time.

According to an SAP study, 48% of executives use generative AI tools daily, while 15% use AI multiple times per day.

The opportunity for modern GTM leaders is not just to accelerate legacy tactics with AI, but to reimagine the architecture of their GTM strategy altogether.

This shift represents an inflection point. AI has the potential to power seamless and adaptive GTM systems: measurable, scalable, and deeply aligned with buyer needs.

In this article, I will share a practical framework to modernize B2B GTM using AI, from aligning internal teams and architecting modular workflows to measuring what truly drives revenue.

The Role Of AI In Modern GTM Strategies

For GTM leaders and practitioners, AI represents an opportunity to achieve efficiency without compromising performance.

Many organizations already use AI to automate repetitive, time-intensive tasks, such as prospect scoring and routing, sales forecasting, content personalization, and account prioritization.

But its true impact lies in transforming how GTM systems operate: consolidating data, coordinating actions, extracting insights, and enabling intelligent engagement across every stage of the buyer’s journey.

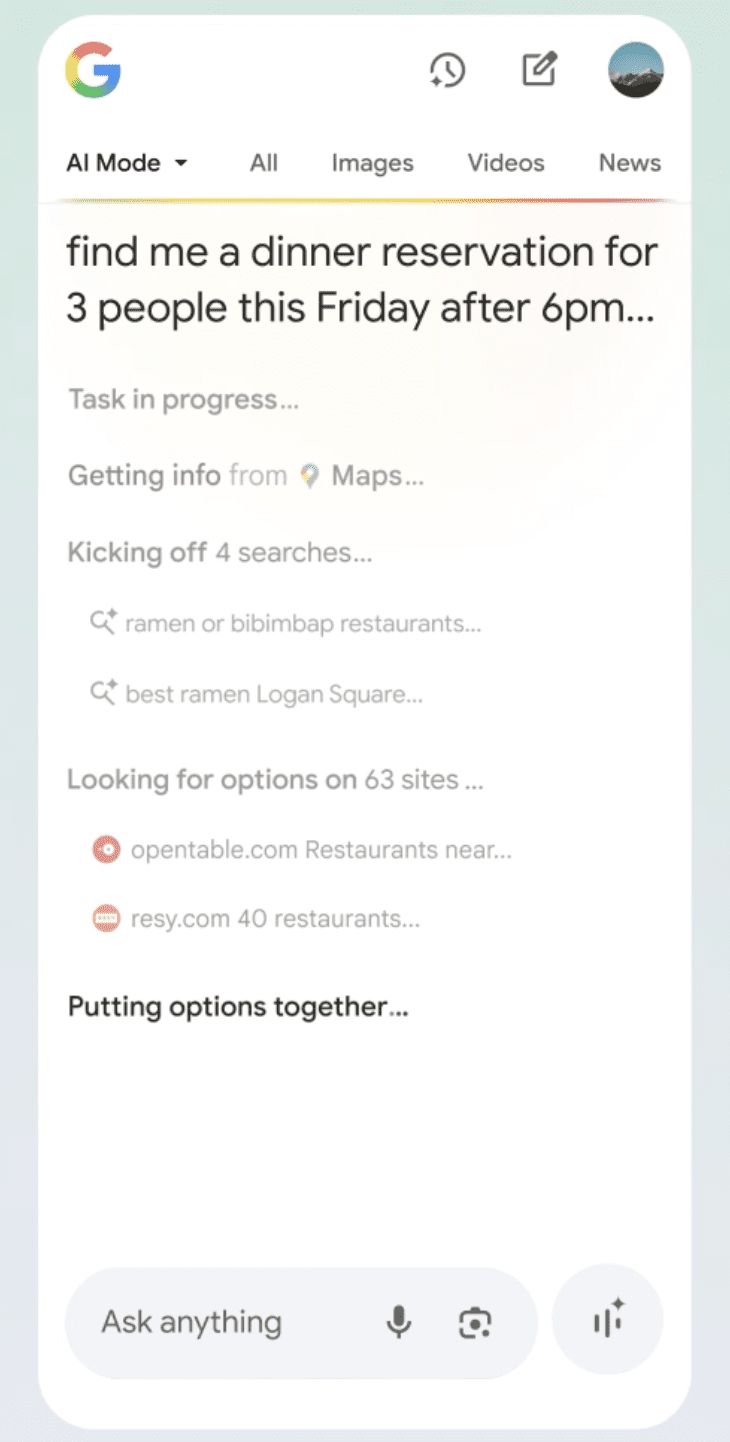

Where previous technologies offered automation, AI introduces sophisticated real-time orchestration.

Rather than simply layering AI onto existing workflows, teams can use it to enable previously unscalable capabilities such as:

- Surfacing and aligning intent signals from disconnected platforms.

- Predicting buyer stage and engagement timing.

- Providing full pipeline visibility across sales, marketing, client success, and operations.

- Standardizing inputs across teams and systems.

- Enabling cross-functional collaboration in real time.

- Forecasting potential revenue from campaigns.

With AI-powered data orchestration, GTM teams can align on what matters, act faster, and deliver more revenue with fewer resources.

AI is not merely an efficiency lever. It is a path to capabilities that were previously out of reach.

Framework: Building An AI-Native GTM Engine

Creating a modern GTM engine powered by AI demands a re-architecture of how teams align, how data is managed, and how decisions are executed at every level.

Below is a five-part framework that explains how to centralize data, build an AI-native operating model, break work into modular workflows, test and retrain your models, and measure outcomes:

1. Develop Centralized, Clean Data

AI performance is only as strong as the data it receives. Yet, in many organizations, data lives in disconnected silos.

Centralizing structured, validated, and accessible data across all departments at your organization is foundational.

AI needs clean, labeled, and timely inputs to make precise micro-decisions. These decisions, when chained together, power reliable macro-actions such as intelligent routing, content sequencing, and revenue forecasting.

In short, better data enables smarter orchestration and more consistent outcomes.

Fortunately, AI can help break down these silos across marketing, sales, client success, and operations when paired with a customer data platform (CDP) that integrates data from your customer relationship management (CRM), marketing automation platform (MAP), and customer success (CS) systems.

The steps are as follows:

- Appoint a data steward who owns data hygiene and access policies.

- Select a CDP that pulls records from your CRM, MAP, and other tools that hold client data.

- Configure deduplication and enrichment routines, and tag fields consistently.

- Establish a shared, organization-wide dashboard so every team works from the same definitions.
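To make the deduplication and tagging step concrete, here is a minimal Python sketch. It assumes a hypothetical record shape (`Record`) that uses email as the match key and a hypothetical `INDUSTRY_TAGS` mapping for consistent field tagging. Real CDPs ship their own identity-resolution and enrichment features, so treat this as an illustration of the logic, not a vendor API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Record:
    source: str                     # system of origin, e.g. "crm", "map", "cs"
    email: str                      # hypothetical match key for deduplication
    company: str
    industry: Optional[str] = None


# Hypothetical mapping from inconsistent source values to one shared tag set.
INDUSTRY_TAGS = {
    "saas": "Software",
    "software": "Software",
    "fin services": "Financial Services",
    "financial services": "Financial Services",
}


def normalize(rec: Record) -> Record:
    """Trim and lowercase the match key, and map industry labels to shared tags."""
    industry = rec.industry.strip() if rec.industry else None
    if industry:
        industry = INDUSTRY_TAGS.get(industry.lower(), industry)
    return Record(rec.source, rec.email.strip().lower(), rec.company.strip(), industry)


def deduplicate(records: list[Record]) -> list[Record]:
    """Keep the first record per email; later duplicates only fill missing fields."""
    merged: dict[str, Record] = {}
    for rec in map(normalize, records):
        first = merged.get(rec.email)
        if first is None:
            merged[rec.email] = rec
        elif first.industry is None and rec.industry is not None:
            # Simple enrichment: fill gaps, never overwrite a known value.
            first.industry = rec.industry
    return list(merged.values())
```

In this sketch the first record seen wins and duplicates only enrich it; in practice, the data steward would decide which source system takes precedence when values conflict.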

Recommended starting point: Schedule a workshop with operations, analytics, and IT to map current data sources and choose one system of record for account identifiers.

2. Build An AI-Native Operating Model

Instead of layering AI onto legacy systems, organizations are better served by architecting their GTM strategies from the ground up to be AI-native.

This requires designing adaptive workflows that rely on machine input and positioning AI as the operating core, not just a support layer.

AI can deliver the most value when it unifies previously fragmented processes.

Rather than simply accelerating isolated tasks like prospect scoring or email generation, AI should orchestrate entire GTM motions, seamlessly adapting messaging, channels, and timing based on buyer intent and journey stage.

Achieving this transformation demands new roles within the GTM organization, such as AI strategists, workflow architects, and data stewards: experts focused on building and maintaining intelligent systems rather than executing manual processes.

AI-enabled GTM is not about automation alone; it’s about synchronization, intelligence, and scalability at every touchpoint.

Once you have committed to building an AI-native GTM model, the next step is to implement it through modular, data-driven workflows.

Recommended starting point: Assemble a cross-functional strike team and map one buyer journey end-to-end, highlighting every manual hand-off that could be streamlined by AI.

3. Break Down GTM Into Modular AI Workflows

A major reason AI initiatives fail is that organizations try to do too much at once, which is why large, monolithic projects often stall.

Success comes from deconstructing large GTM tasks into a series of focused, modular AI workflows.

Each workflow should perform a specific, deterministic task, such as:

- Assessing prospect quality against clear, predefined inputs.

- Prioritizing outreach.

- Forecasting revenue contribution.

Take the first workflow, assessing prospect quality. This would entail integrating a lead-scoring AI tool with your model and feeding it data such as website activity, engagement history, and CRM records. You can then instruct the model to automatically route top-scoring prospects to sales representatives, for example.
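A rules-based stand-in for the prospect-quality workflow is sketched below in Python. The weights, the `ROUTE_THRESHOLD` cut-off, and the `Prospect` fields are hypothetical; an AI lead-scoring tool would typically learn its weights from historical conversions, but the deterministic shape (clear inputs in, a score and a routing decision out) is the same.

```python
from dataclasses import dataclass

# Hypothetical weights; in practice they would come from historical
# conversion data and be agreed on by sales and marketing.
WEIGHTS = {
    "pricing_page_visits": 10,  # website activity, per visit
    "email_clicks": 5,          # engagement, per click
    "webinar_attended": 15,     # engagement, flat bonus
    "target_industry": 20,      # CRM firmographic fit, flat bonus
}
ROUTE_THRESHOLD = 50  # hypothetical cut-off for handing a prospect to sales


@dataclass
class Prospect:
    name: str
    pricing_page_visits: int
    email_clicks: int
    webinar_attended: bool
    target_industry: bool


def score(p: Prospect) -> int:
    """Deterministic: the same inputs always produce the same score."""
    return (
        WEIGHTS["pricing_page_visits"] * p.pricing_page_visits
        + WEIGHTS["email_clicks"] * p.email_clicks
        + WEIGHTS["webinar_attended"] * int(p.webinar_attended)
        + WEIGHTS["target_industry"] * int(p.target_industry)
    )


def route(prospects: list[Prospect]) -> dict[str, list[str]]:
    """Queue prospects, highest score first, into 'sales' or 'nurture'."""
    routes: dict[str, list[str]] = {"sales": [], "nurture": []}
    for p in sorted(prospects, key=score, reverse=True):
        queue = "sales" if score(p) >= ROUTE_THRESHOLD else "nurture"
        routes[queue].append(p.name)
    return routes
```

For example, a prospect with three pricing-page visits, two email clicks, a webinar attendance, and a target-industry match scores 75 and lands in the sales queue.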

Similarly, for your forecasting workflow, connect forecasting tools to your model and train it on historical win/loss data, pipeline stages, and buyer activity logs.
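A simple baseline for the forecasting workflow is a weighted pipeline: learn each stage's historical win rate from closed deals, then multiply each open deal's amount by that rate. The Python sketch below assumes deals arrive as hypothetical `(stage, won)` and `(stage, amount)` tuples; it is a statistical baseline to compare an AI forecast against, not a trained model.

```python
from collections import defaultdict


def stage_win_rates(history: list[tuple[str, bool]]) -> dict[str, float]:
    """Historical win rate per stage.

    Each tuple is (stage a deal was in at a past snapshot, whether it later closed-won).
    """
    seen: dict[str, int] = defaultdict(int)
    won: dict[str, int] = defaultdict(int)
    for stage, was_won in history:
        seen[stage] += 1
        won[stage] += int(was_won)
    return {stage: won[stage] / seen[stage] for stage in seen}


def weighted_forecast(open_deals: list[tuple[str, float]], rates: dict[str, float]) -> float:
    """Expected revenue: each open deal's amount times its stage's win rate.

    Stages with no history are forecast conservatively at zero.
    """
    return sum(amount * rates.get(stage, 0.0) for stage, amount in open_deals)
```

If one in four historical discovery-stage deals closed and half of proposal-stage deals did, a $40,000 discovery deal plus a $100,000 proposal deal forecasts to $60,000.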

To sum up:

- Integrate only the data required.

- Define clear success criteria.

- Establish a feedback loop that compares model output with real outcomes.

- Once the first workflow proves reliable, replicate the pattern for additional use cases.
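The feedback loop can be as simple as comparing the model's predictions with actual outcomes each cycle. This Python sketch computes precision and recall for a hypothetical "will convert" prediction and checks them against success criteria; the `min_precision` and `min_recall` defaults are placeholders to replace with your own targets.

```python
def evaluate(predictions: dict[str, bool], outcomes: dict[str, bool]) -> dict[str, float]:
    """Compare predicted 'will convert' flags with what actually happened.

    Only prospects present in both dicts are evaluated.
    """
    ids = predictions.keys() & outcomes.keys()
    tp = sum(1 for i in ids if predictions[i] and outcomes[i])      # predicted and converted
    fp = sum(1 for i in ids if predictions[i] and not outcomes[i])  # predicted, did not convert
    fn = sum(1 for i in ids if not predictions[i] and outcomes[i])  # missed conversions
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {"precision": precision, "recall": recall}


def meets_criteria(metrics: dict[str, float], min_precision: float = 0.6, min_recall: float = 0.5) -> bool:
    """Hypothetical success criteria; set these per workflow."""
    return metrics["precision"] >= min_precision and metrics["recall"] >= min_recall
```

With one correct prediction, one false positive, and one missed conversion, precision and recall are both 0.5, so the workflow would fail the placeholder criteria and need refinement before being replicated.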

When AI is trained on historical data with clearly defined criteria, its decisions become more predictable, explainable, and scalable.

Recommended starting point: Draft a simple flow diagram with seven or fewer steps, identify one automation platform to orchestrate them, and assign service-level targets for speed and accuracy.
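Service-level targets are easier to enforce when they live alongside the workflow definition. The Python sketch below uses hypothetical names (`WorkflowSpec`, `check_sla`); it rejects workflows longer than seven steps and reports when median time-to-action or measured accuracy misses its target.

```python
import statistics
from dataclasses import dataclass

MAX_STEPS = 7  # the "seven or fewer steps" guideline


@dataclass
class WorkflowSpec:
    name: str
    steps: list[str]
    max_minutes_to_action: float  # speed target (hypothetical)
    min_accuracy: float           # accuracy target, 0.0 to 1.0 (hypothetical)

    def __post_init__(self) -> None:
        if len(self.steps) > MAX_STEPS:
            raise ValueError(
                f"{self.name} has {len(self.steps)} steps; keep it to {MAX_STEPS} or fewer"
            )


def check_sla(spec: WorkflowSpec, minutes_to_action: list[float], accuracy: float) -> list[str]:
    """Return SLA breaches; an empty list means the workflow is on target."""
    breaches = []
    if not minutes_to_action:
        breaches.append("speed: no runs recorded")
    else:
        median = statistics.median(minutes_to_action)
        if median > spec.max_minutes_to_action:
            breaches.append(
                f"speed: median {median:.0f} min > target {spec.max_minutes_to_action:.0f} min"
            )
    if accuracy < spec.min_accuracy:
        breaches.append(f"accuracy: {accuracy:.0%} < target {spec.min_accuracy:.0%}")
    return breaches
```

Using the median keeps a single slow run from triggering a breach; swap in a percentile if your targets are written that way.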

4. Continuously Test And Train AI Models

An AI-powered GTM engine is not static. It must be monitored, tested, and retrained continuously.

As markets, products, and buyer behaviors shift, your model's accuracy and efficiency can degrade.

Moreover, OpenAI's own testing found that one of its recent large language models (LLMs) hallucinated up to 48% of the time on one of its factuality benchmarks. This underscores the importance of rigorous validation processes, first-party data inputs, and ongoing human oversight to safeguard decision-making and maintain trust in predictive outputs.

Maintaining AI model efficiency requires three steps:

- Set clear validation checkpoints and build feedback loops that surface errors or inefficiencies.

- Establish thresholds for when AI should hand off to human teams and ensure that every automated decision is verified. Ongoing iteration is key to performance and trust.

- Set a regular cadence for evaluation. At a minimum, conduct performance audits monthly and retrain models quarterly based on new data or shifting GTM priorities.
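The hand-off threshold can be expressed as a confidence cut-off. In this Python sketch, `HANDOFF_THRESHOLD` and the `Decision` fields are hypothetical, and it assumes your model reports a confidence score: decisions at or above the threshold proceed automatically and are logged as unverified for later human review, while everything else goes straight to a person.

```python
from dataclasses import dataclass

HANDOFF_THRESHOLD = 0.8  # hypothetical confidence cut-off; tune per workflow


@dataclass
class Decision:
    prospect: str
    action: str        # e.g. "route_to_sales" (hypothetical action name)
    confidence: float  # model-reported confidence between 0.0 and 1.0


def triage(decisions: list[Decision], threshold: float = HANDOFF_THRESHOLD):
    """Act automatically above the threshold; hand everything else to a human."""
    automated: list[Decision] = []
    needs_review: list[Decision] = []
    for d in decisions:
        (automated if d.confidence >= threshold else needs_review).append(d)
    # Log every automated decision as unverified so a reviewer can confirm it later.
    audit_log = [{"prospect": d.prospect, "action": d.action, "verified": False} for d in automated]
    return automated, needs_review, audit_log
```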

During these maintenance cycles, use the following criteria to test the AI model:

- Ensure accuracy: Regularly validate AI outputs against real-world outcomes to confirm predictions are reliable.

- Maintain relevance: Continuously update models with fresh data to reflect changes in buyer behavior, market trends, and messaging strategies.

- Optimize for efficiency: Monitor key performance indicators (KPIs) like time-to-action, conversion rates, and resource utilization to ensure AI is driving measurable gains.

- Prioritize explainability: Choose models and workflows that offer transparent decision logic so GTM teams can interpret results, trust outputs, and make manual adjustments as needed.
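For explainability, a linear score has a useful property: each input's contribution is simply its weight times its value, so the score can be broken down line by line for a sales rep. The Python sketch below assumes hypothetical weights and input names; more complex models need dedicated explanation techniques.

```python
# Hypothetical weights for a transparent, linear prospect score.
WEIGHTS = {
    "pricing_page_visits": 10,
    "email_clicks": 5,
    "webinar_attended": 15,
    "target_industry": 20,
}


def explain(features: dict[str, float]) -> list[tuple[str, float]]:
    """Break a linear score into per-input contributions, largest first.

    Missing inputs count as zero.
    """
    contributions = [(name, weight * features.get(name, 0)) for name, weight in WEIGHTS.items()]
    return sorted(contributions, key=lambda item: item[1], reverse=True)


def describe(features: dict[str, float]) -> str:
    """Render the breakdown as a short, human-readable explanation."""
    parts = explain(features)
    total = sum(value for _, value in parts)
    lines = [f"score {total:g}"] + [f"  {name}: +{value:g}" for name, value in parts if value]
    return "\n".join(lines)
```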

By combining cadence, accountability, and testing rigor, you create an AI engine for GTM that not only scales but improves continuously.

Recommended starting point: Put a recurring calendar invite on the books titled “AI Model Health Review” and attach an agenda covering validation metrics and required updates.

5. Focus On Outcomes, Not Features

Success is not defined by AI adoption, but by outcomes.

Benchmark AI performance against real business metrics such as:

- Pipeline velocity.

- Conversion rates.

- Client acquisition cost (CAC).

- Marketing-influenced revenue.
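Most of these outcome metrics reduce to simple arithmetic. The Python sketch below uses a common formulation of pipeline velocity (opportunities × win rate × average deal size ÷ sales-cycle length in days) and CAC as total sales and marketing spend divided by new clients acquired. Definitions vary between organizations, so align the inputs with your own before comparing numbers.

```python
def pipeline_velocity(opportunities: int, win_rate: float, avg_deal_size: float, cycle_days: float) -> float:
    """Revenue moving through the pipeline per day."""
    if cycle_days <= 0:
        raise ValueError("cycle_days must be positive")
    return opportunities * win_rate * avg_deal_size / cycle_days


def conversion_rate(converted: int, total: int) -> float:
    """Share of prospects that advanced to the next stage; 0.0 when there are none."""
    return converted / total if total else 0.0


def cac(sales_and_marketing_spend: float, new_clients: int) -> float:
    """Client acquisition cost: spend divided by clients won in the same period."""
    if new_clients <= 0:
        raise ValueError("new_clients must be positive")
    return sales_and_marketing_spend / new_clients
```

For example, 100 opportunities at a 25% win rate, a $20,000 average deal, and a 50-day cycle yield $10,000 of pipeline velocity per day.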

Focus on use cases that unlock new insights, streamline decision-making, or drive action that was previously impossible.

When a workflow stops improving its target metric, refine or retire it.

Recommended starting point: Demonstrate the AI model's value to stakeholders by showing its impact on pipeline opportunities or revenue generation.

Common Pitfalls To Avoid

1. Over-Reliance On Vanity Metrics

Too often, GTM teams focus AI efforts on optimizing for surface-level KPIs, like marketing qualified lead (MQL) volume or click-through rates, without tying them to revenue outcomes.

AI that increases prospect quantity without improving prospect quality only accelerates inefficiency.

The true test of value is pipeline contribution: Is AI helping to identify, engage, and convert buying groups that close and drive revenue? If not, it is time to rethink how you measure its efficiency.

2. Treating AI As A Tool, Not A Transformation

Many teams introduce AI as a plug-in to existing workflows rather than as a catalyst for reinventing them. This results in fragmented implementations that underdeliver and confuse stakeholders.

AI is not just another tool in the tech stack or a silver bullet. It is a strategic enabler that requires changes in roles, processes, and even how success is defined.

Organizations that treat AI as a transformation initiative will gain significant advantages over those that treat it as a checkbox exercise.

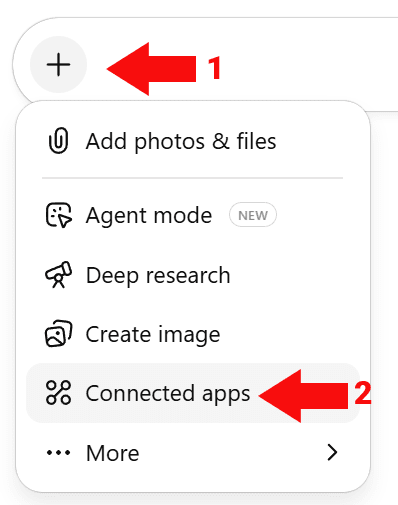

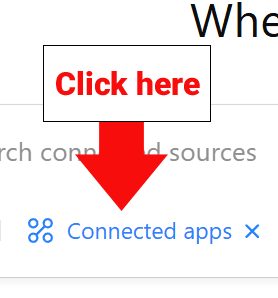

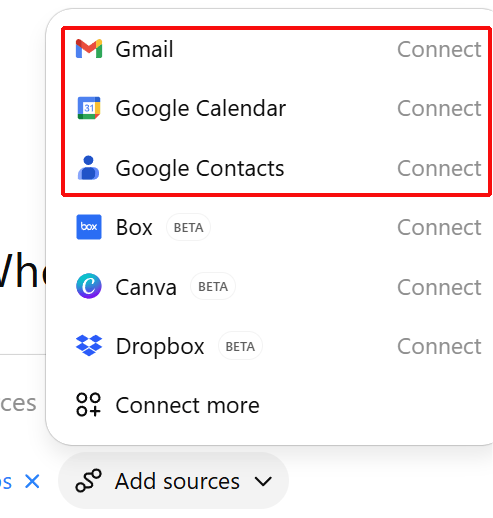

A recommended approach for testing workflows is to build a lightweight AI system that uses APIs to connect fragmented systems without complex development.

3. Ignoring Internal Alignment

AI cannot solve misalignment; it amplifies it.

When sales, marketing, and operations are not working from the same data, definitions, or goals, AI will surface inconsistencies rather than fix them.

A successful AI-driven GTM engine depends on tight internal alignment. This includes unified data sources, shared KPIs, and collaborative workflows.

Without this foundation, AI can easily become another point of friction rather than a force multiplier.

A Framework For The C-Level

AI is redefining what high-performance GTM leadership looks like.

For C-level executives, the mandate is clear: Lead with a vision that embraces transformation, executes with precision, and measures what drives value.

Below is a framework grounded in the core pillars modern GTM leaders must uphold:

Vision: Shift From Transactional Tactics To Value-Centric Growth

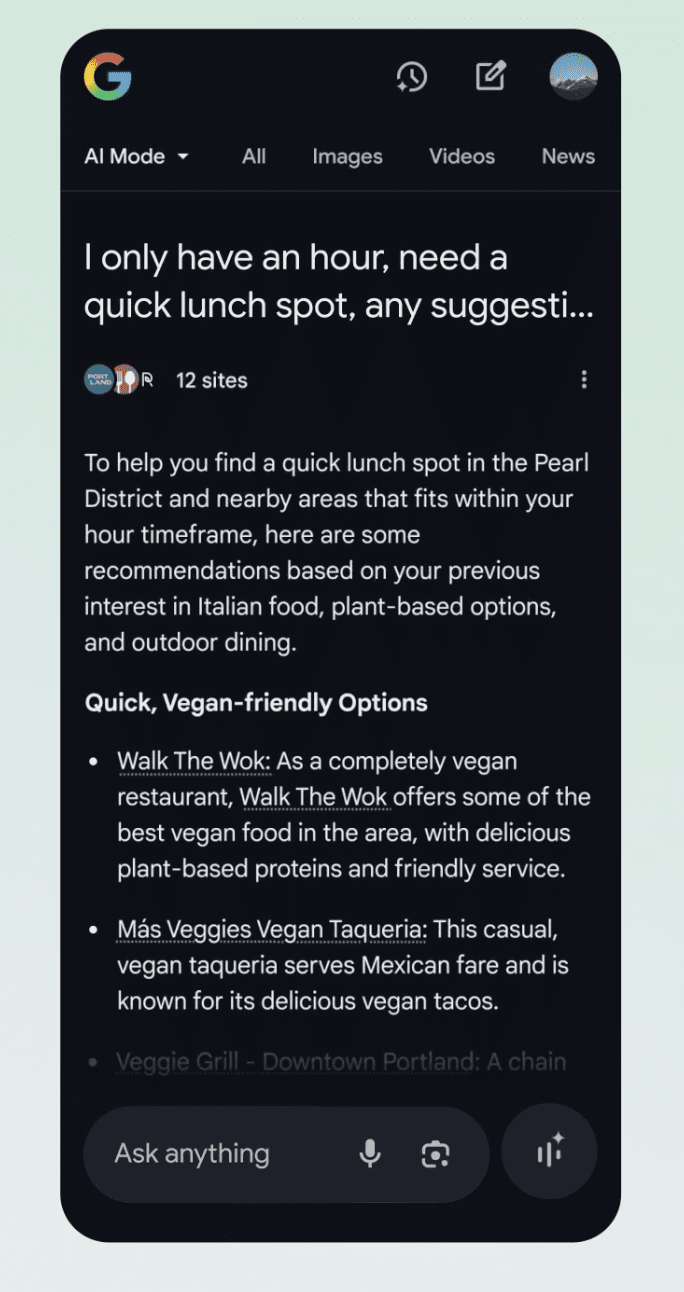

The future of GTM belongs to those who see beyond prospect quotas and focus on building lasting value across the entire buyer journey.

When narratives resonate with how decisions are really made (complex, collaborative, and cautious), they unlock deeper engagement.

GTM teams thrive when positioned as strategic allies. The power of AI lies not in volume, but in relevance: enhancing personalization, strengthening trust, and earning buyer attention.

This is a moment to lean into meaningful progress, not just for pipeline, but for the people behind every buying decision.

Execution: Invest In Buyer Intelligence, Not Just Outreach Volume

AI makes it easier than ever to scale outreach, but quantity alone no longer wins.

Today’s B2B buyers are defensive, independent, and value-driven.

Leadership teams that treat buyer-intelligence technology as a strategic market imperative will enable their organizations to better understand buying signals, account context, and journey stage.

This intelligence-driven execution ensures resources are spent on the right accounts, at the right time, with the right message.

Measurement: Focus On Impact Metrics

Surface-level metrics no longer tell the full story.

Modern GTM demands a deeper, outcome-based lens – one that tracks what truly moves the business, such as pipeline velocity, deal conversion, CAC efficiency, and the impact of marketing across the entire revenue journey.

But the real promise of AI is meaningful connection. When early intent signals are tied to late-stage outcomes, GTM leaders gain the clarity to steer strategy with precision.

Executive dashboards should reflect the full funnel because that is where real growth and real accountability live.

Enablement: Equip Teams With Tools, Training, And Clarity

Transformation does not succeed without people. Leaders must ensure their teams are not only equipped with AI-powered tools but also trained to use them effectively.

Equally important is clarity around strategy, data definitions, and success criteria.

AI will not replace talent, but it will dramatically increase the gap between enabled teams and everyone else.

Key Takeaways

- Redefine success metrics: Move beyond vanity KPIs like MQLs and focus on impact metrics: pipeline velocity, deal conversion, and CAC efficiency.

- Build AI-native workflows: Treat AI as a foundational layer in your GTM architecture, not a bolt-on feature to existing processes.

- Align around the buyer: Use AI to unify siloed data and teams, delivering synchronized, context-rich engagement throughout the buyer journey.

- Lead with purposeful change: C-level executives must shift from transactional growth to value-led transformation by investing in buyer intelligence, team enablement, and outcome-driven execution.


Featured Image: BestForBest/Shutterstock