James LePage, Director of AI Engineering at Automattic and co-lead of the WordPress AI Team, described the future of the agentic AI web. In that future, websites become interactive interfaces and data sources, and the value each site adds gets flattened. Although he describes a way to keep brand and voice from being flattened, the outcome for informational, service, and media sites may be “complex.”

Evolution To Autonomy

One of LePage’s points concerns agentic autonomy and how it will change what it means to have an online presence. He maintains that humans will still be in the loop, but at a higher, less granular level. AI agents work at the tree level, handling the details of interacting with websites, while humans stay at the forest level, dictating the outcomes they want.

LePage writes:

“Instead of approving every action, users set guidelines and review outcomes.”

He sees agentic AI evolving toward greater autonomy, meaning more freedom with less external control, and describes three levels along the way:

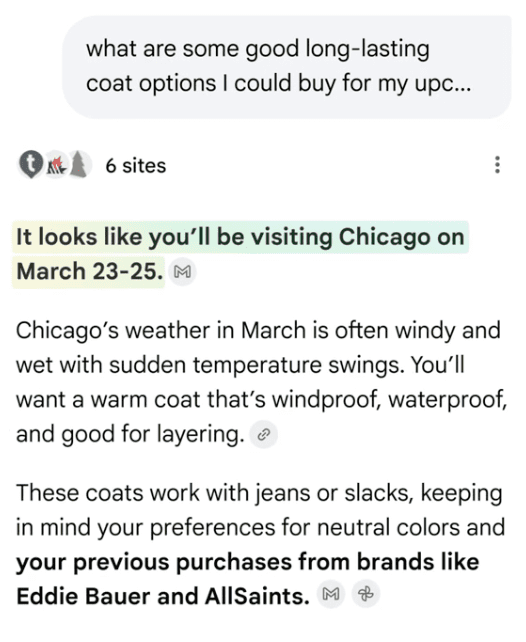

- What exists now is essentially Perplexity-style web search with more steps: gather content, generate synthesis, present to user. The user still makes decisions and takes actions.

- Near-term, users delegate specific tasks with explicit specifications, and agents can take actions like purchases or bookings within bounded authority.

- Further out, agents operate more autonomously based on standing guidelines, becoming something closer to economic actors in their own right.

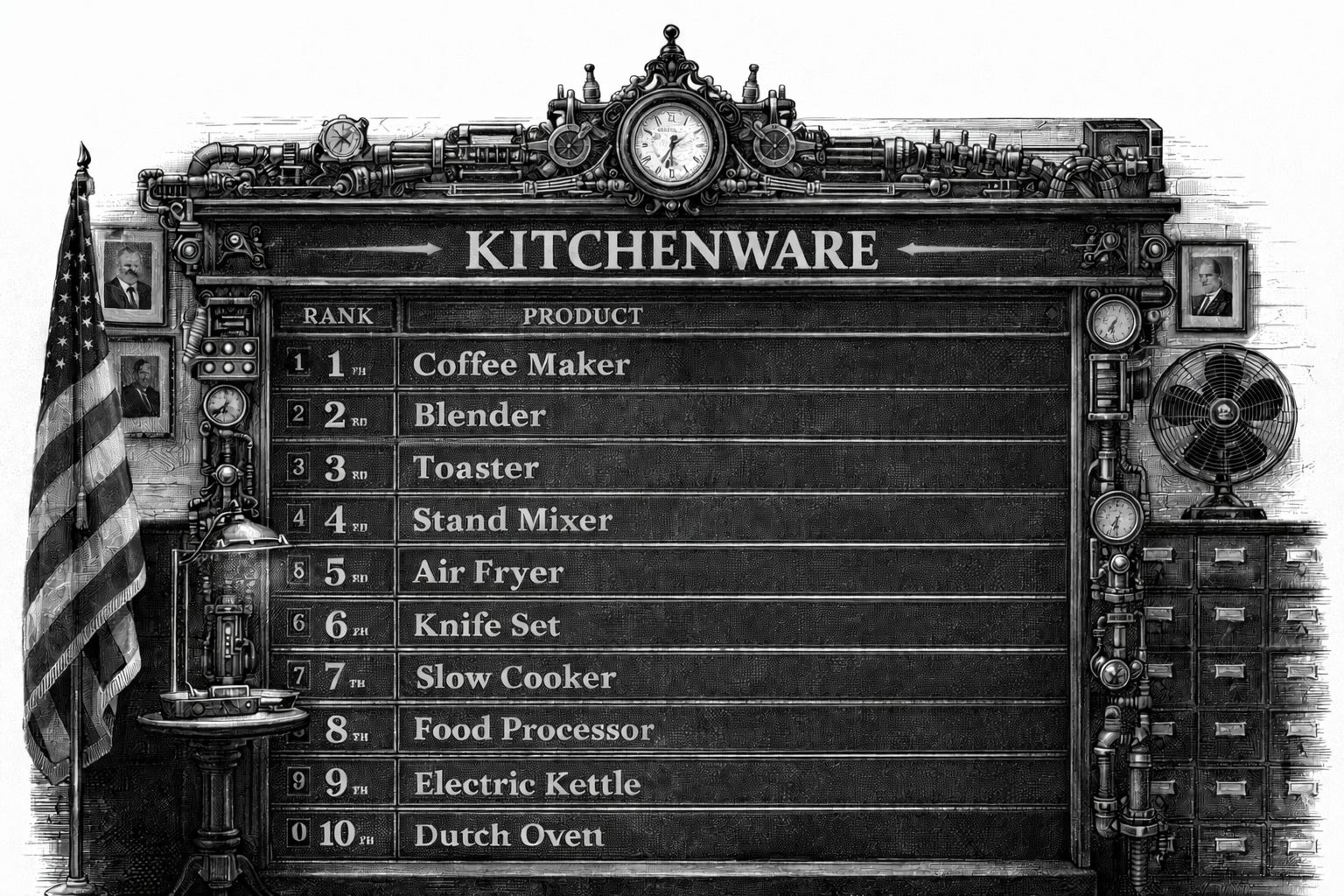

AI Agents May Turn Sites Into Data Sources

LePage frames the shift in terms of control: agentic AI experiences take over how a site’s data is presented to the user. The site’s user experience and branding are stripped away, and the AI agent refashions the experience itself.

He writes:

“When an agent visits your website, that control diminishes. The agent extracts the information it needs and moves on. It synthesizes your content according to its own logic. It represents you to its user based on what it found, not necessarily how you’d want to be represented.

This is a real shift. The entity that creates the content loses some control over how that content is presented and interpreted. The agent becomes the interface between you and the user.

Your website becomes a data source rather than an experience.”

Does it sound problematic that websites will turn into data sources? As the next section shows, LePage’s answer is to double down on interaction and personalization through AI, so that users can engage with the data in ways a static website can’t offer.

These insights carry weight because they come from Automattic’s director of AI engineering, who also co-leads the team that coordinates AI integration within WordPress core.

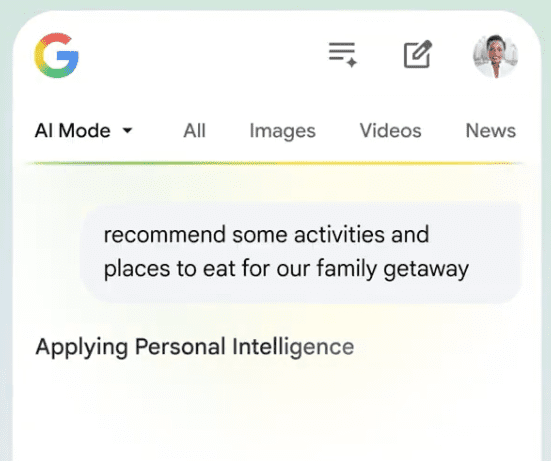

AI Will Redefine Website Interactions

LePage said that AI will enable websites to offer increasingly personalized and immersive experiences. Users will be able to interact with a website as a source of data refined and personalized for their own goals, with site-side AI becoming the differentiator.

He explained:

“Humans who visit directly still want visual presentation. In fact, they’ll likely expect something more than just content now. AI actually unlocks this.

Sites can create more immersive and personalized experiences without needing a developer for every variation. Interactive data visualizations, product configurators, personalized content flows. The bar for what a “visit” should feel like is rising.

When AI handles the informational layer, the experiential layer becomes a differentiator.”

That’s an important point, because if AI can deliver the information anywhere (in an agent user interface, an AI-generated comparison tool, a synthesized interactive application), then information alone stops separating you from everyone else.

In this kind of future, what becomes the differentiator, your value add, is the website experience itself.

How AI Agents May Negatively Impact Websites

LePage says that agentic AI is a good fit for commercial websites because agents can run comparisons, check prices, and zip through checkout. He says it’s a different story for media and service sites, calling it “more complex.”

Regarding the phrase “more complex,” I think that’s a euphemism that engineers use instead of what they really mean: “You’re probably screwed.”

Judge for yourself. Right after explaining how websites lose control over the user experience, LePage writes:

“For media and services, it’s more complex. Your brand, your voice, your perspective, the things that differentiate you from competitors, these get flattened when an agent summarizes your content alongside everyone else’s.”

For informational websites, the website experience can be the value add, but agentic AI eliminates that advantage. And unlike ecommerce, where the sale is the value exchange, an informational site gets nothing in return when an agent consumes its content, because nobody is viewing its ads, much less clicking on them.

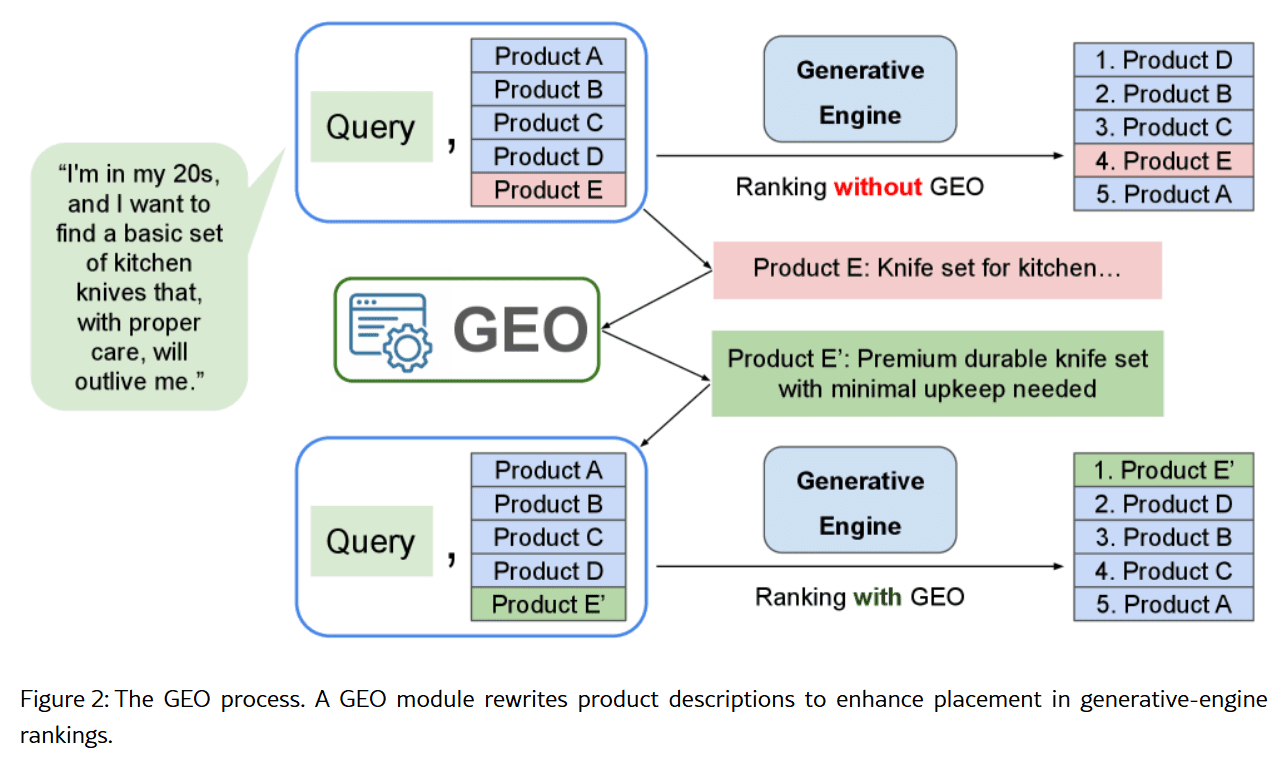

Alternative To Flattened Branding

LePage goes on to present an alternative to brand flattening, imagining a scenario where websites deploy their own AI agents so that users can interact with the information in ways that are helpful, engaging, and useful. This is an interesting thought because it may represent the biggest evolutionary step in website presence since responsive design made websites usable regardless of device and browser.

He explains how this new paradigm may work:

“If agents are going to represent you to users, you might need your own agent to represent you to them.

Instead of just exposing static content and hoping the visiting agent interprets it well, the site could present a delegate of its own. Something that understands your content, your capabilities, your constraints, and your preferences. Something that can interact with the visiting agent, answer its questions, present information in the most effective way, and even negotiate.

The web evolves from a collection of static documents to a network of interacting agents, each representing the interests of their principal. The visiting agent represents the user. The site agent represents the entity. They communicate, they exchange information, they reach outcomes.

This isn’t science fiction. The protocols are being built. MCP is now under the Linux Foundation with support from Anthropic, OpenAI, Google, Microsoft, and others. Agent2Agent is being developed for agent-to-agent communication. The infrastructure for this kind of web is emerging.”

What do you think about the idea of a site’s AI agent talking to a visitor’s AI agent, communicating “your capabilities, your constraints, and your preferences,” and shaping how your information is presented? There might be something here. Depending on how it’s worked out, it could benefit publishers and keep them from becoming just a data source.

AI Agents May Force A Decision: Adaptation Versus Obsolescence

LePage insists that publishers (he calls them entities) that evolve along with the agentic AI revolution will be the ones able to have the most effective agent-to-agent interactions, while those that stay behind will become data waiting to be scraped.

He paints a bleak future for sites that decline to move forward with agent-to-agent interactions:

“The ones that don’t will still exist on the web. But they’ll be data to be scraped rather than participants in the conversation.”

What LePage describes is a future in which product and professional service sites can extract value from agent-to-agent interactions. But the same is not necessarily true for informational sites that users depend on for expert reviews, opinions, and news. The future for them looks “complex.”