Google May Rely Less On Hreflang, Shift To Auto Language Detection via @sejournal, @MattGSouthern

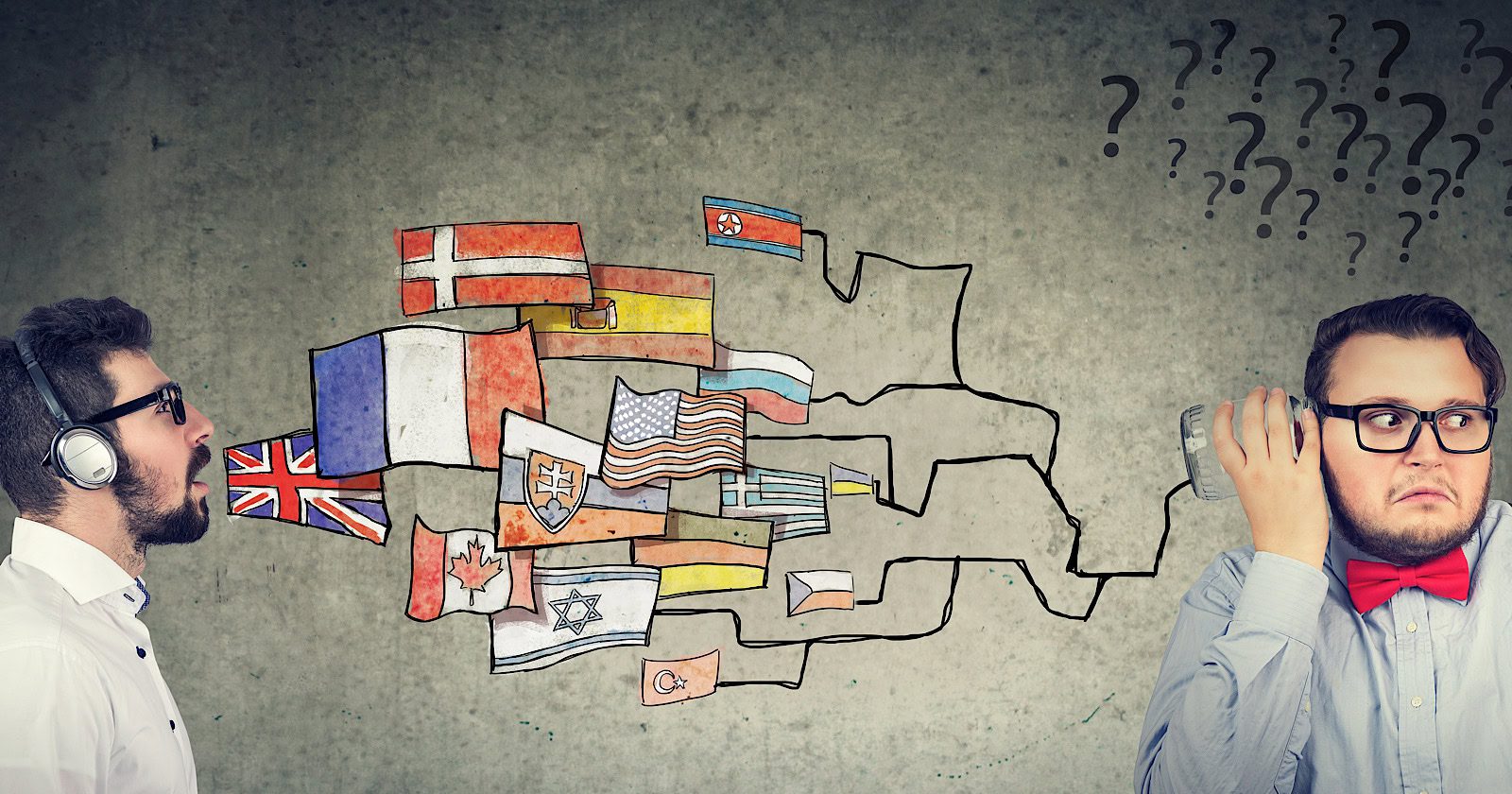

In the latest episode of Google’s “Search Off The Record” podcast, a member of the Search Relations team suggested that Google may be moving towards automatically detecting language versions of web pages, potentially reducing the need for manual hreflang annotations.

Google’s Stance On Automatic Language Detection

Gary Illyes, a Google analyst, believes that search engines should rely less on annotations like hreflang and more on automatically learned signals.

Illyes stated during the podcast:

“Ultimately, I would want less and less annotations, site annotations, and more automatically learned things.”

He argued that this approach is more reliable than the current system of manual annotations.

Illyes noted that Google's systems could already detect language versions automatically years ago:

“Almost ten years ago, we could already do that, and this was what, almost ten years ago.”

Illyes emphasized the potential for improvement in this area:

“If, almost ten years ago, we could already do that quite reliably, then why would we not be able to do it now.”

The Current State Of Hreflang Implementation

The discussion also touched on how widely hreflang is actually used today.

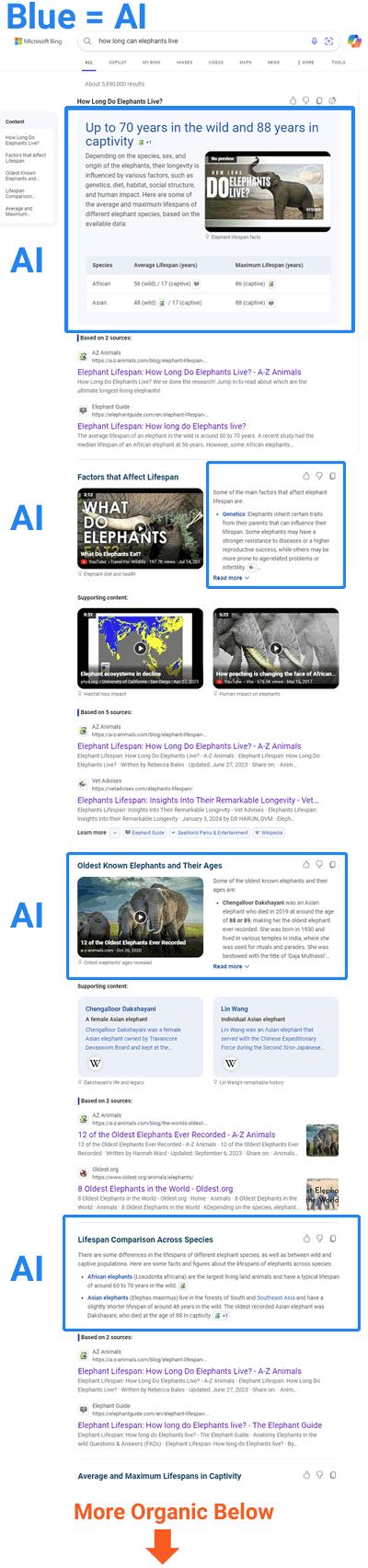

According to data cited in the podcast, only about 9% of websites currently use hreflang annotations on their home pages.

This low adoption rate may be one reason Google is considering other ways to detect a page's language and regional targeting.

Potential Challenges & Overrides

While advocating for automatic detection, Illyes acknowledged that website owners should be able to override automatic detections if necessary.

He conceded, “I think we should have overrides,” recognizing the need for manual control in some situations.

The Future Of Multilingual SEO

While no official changes have been announced, this discussion provides insight into the potential future direction of Google’s approach to multilingual and multi-regional websites.

Stay tuned for any official updates from Google on this topic.

What This Means For You

This potential shift in Google’s language detection and targeting approach could have significant implications for website owners and SEO professionals.

It could reduce the technical burden of implementing hreflang annotations, particularly for large websites with multiple language versions.
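
To make that burden concrete: Google's current guidelines require hreflang annotations to be reciprocal, so each language version of a page lists every version, including itself. The Python sketch below is a minimal illustration, assuming a hypothetical `hreflang_tags` helper and placeholder `example.com` URLs; it is not Google tooling. It builds the `<link rel="alternate">` elements for one set of localized pages and counts how many annotations a site has to keep in sync.

```python
from __future__ import annotations

from html import escape


def hreflang_tags(versions: dict[str, str], x_default: str | None = None) -> list[str]:
    """Return the <link rel="alternate"> elements each version of a page should carry.

    `versions` maps a language or language-region code (e.g. "en", "de-AT")
    to the absolute URL of that version. Because annotations must be
    reciprocal, every version gets this same full set, including a
    self-referencing entry. (Hypothetical helper for illustration only.)
    """
    tags = [
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}" />'
        for code, url in sorted(versions.items())
    ]
    if x_default is not None:
        # Optional fallback for users whose language matches no listed version.
        tags.append(
            f'<link rel="alternate" hreflang="x-default" href="{escape(x_default)}" />'
        )
    return tags


# Hypothetical site with three language versions of one page.
site = {
    "en": "https://example.com/en/",
    "de": "https://example.com/de/",
    "fr": "https://example.com/fr/",
}
tags = hreflang_tags(site, x_default="https://example.com/en/")

for tag in tags:
    print(tag)

# Every version repeats the full set, so the total grows roughly with the
# square of the number of language versions.
total_annotations = len(tags) * len(site)
print(f"{len(tags)} tags per page, {total_annotations} across all versions")
```

With three language versions plus an `x-default` pointing at the English page, each page carries four tags, or twelve across the set. Add a fourth language and every existing page needs updating, which is why automatic detection would matter most to large multilingual sites.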

The top takeaways from this discussion include the following:

- Keep following Google's current guidelines for implementing hreflang annotations; nothing has changed yet.

- Ensure that your multilingual content is high-quality and accurately translated. This will likely remain crucial regardless of how Google detects language versions.

- While no changes have been announced, be ready to adapt your SEO strategy if Google moves toward more automatic language detection.

- If you’re planning a new multilingual site or restructuring an existing one, consider a clear and logical structure that makes language versions obvious, as this may help with automatic detection.

Remember, while automation may increase, having a solid understanding of international SEO principles will remain valuable for optimizing your global web presence.
