Google: Proximity Not A Factor For Local Services Ads Rankings via @sejournal, @MattGSouthern

Google has clarified that a business’s proximity to a searcher isn’t a primary factor in how Local Services Ads are ranked.

This change reflects Google’s evolving understanding of what’s relevant to users searching for local service providers.

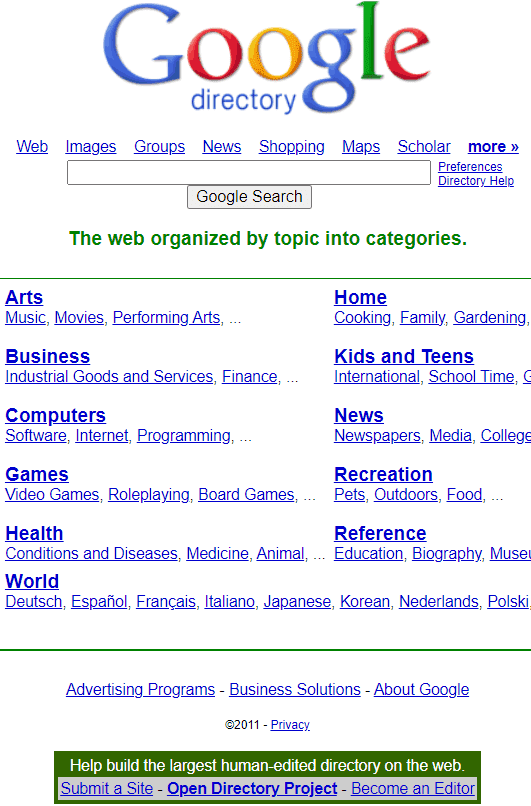

Chris Barnard, a Local SEO Analyst at Sterling Sky, started the discussion by pointing out an update to a Google Help Center article.

In a screenshot, he highlighted that Google had removed the section stating that proximity is a factor in Local Services Ads rankings.

so this is definitely new 😅

Proximity no longer a thing in LSAs pic.twitter.com/zaSuqT6ZJP

— Chris Barnard (@CPBarnard) May 8, 2024

Ginny Marvin, Google’s Ads Liaison, responded to clarify the change.

In a statement, Marvin said:

“LSA ranking has evolved over time as we have learned what works best for consumers and advertisers. We’ve seen that proximity of a business’ location is often not a key indicator of relevancy.

For example, the physical location of a home cleaning business matters less to potential customers than whether their home is located within the business’ service area.”

— AdsLiaison (@adsliaison) May 9, 2024

Marvin said the revision wasn’t a sudden change but an update made to “more accurately reflect these ranking considerations” based on what Google has learned.

The updated article now states that location relevance factors include:

“…the context of a customer’s search… the service or job a customer is searching for, time of the search, location, and other characteristics.”

Proximity Still A Factor For Service Areas

Google maintains policies requiring service providers to limit their ad targeting to areas they can serve from their business locations.

As Marvin noted, Google’s Local Services platform policies state:

“Local Services strives to connect consumers with local service providers. Targeting your ads to areas that are far from your business location and/or that you can’t reasonably serve creates a negative and potentially confusing experience for consumers.”

Why SEJ Cares

By de-emphasizing proximity, Google is giving its ad-serving algorithms the flexibility to surface the most relevant and capable providers.

This allows the results to better match user intent and to connect searchers with companies that can realistically serve their location.

FAQ

What should businesses do in response to the change in Local Services Ads ranking factors?

With the recent changes to how Google ranks Local Services Ads, businesses should update the service areas listed for their ads to reflect the regions where they can realistically provide services. You’ll want to match these service areas to what’s listed on your Google Business Profile.

Companies should also ensure their service offerings and availability information are up to date, since the service a customer searches for and the time of the search are among the factors Google lists as affecting how Local Services Ads rank and appear for relevant local searches.

Why is it important for marketers to understand changes to Local Services Ads ranking?

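These changes affect how businesses get matched with potential customers. Google no longer heavily prioritizes proximity when ranking Local Services Ads. Instead, it focuses on factors such as the context of the search, the service or job requested, and whether the customer is located within the business’s service area.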

Understanding this shift allows businesses to update their local service ad strategies. By optimizing for Google’s new priorities, companies can get their ads in front of the right audience.

Can a business still target areas far from their location with Local Services Ads?

No, Google doesn’t allow businesses to target areas they can’t realistically serve.

This is to prevent customers from being matched with providers who are too far away to help them. Businesses should limit their ads to areas near their business location that they can reasonably serve.

Featured Image: Mamun sheikh K/Shutterstock