Big Brands Receive Site Abuse Manual Actions via @sejournal, @martinibuster

Google indicated that manual actions were coming for sites that host third-party content and, according to some, the effects of those manual actions may already be showing up in the search results.

Site Reputation Abuse Manual Actions

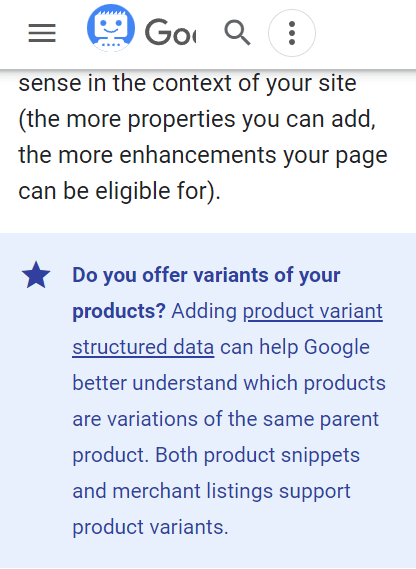

Google’s SearchLiaison tweeted late on May 6th that Google was enforcing the new site reputation abuse policy with manual actions. Manual actions are when someone at Google inspects a webpage to determine if the page is in violation of a spam policy.

The Site Reputation Abuse policy affects sites that host third-party content that is published with little to no oversight from the hosting website. The purpose of the arrangement is for the third party to take advantage of the host site’s reputation so that both receive a share of affiliate sales. An example could be a news website that’s hosting coupon code content that’s entirely created by a third party.

What Are Manual Actions?

A manual action is when a human at Google inspects a website to determine whether it has violated Google’s spam policies. The result of a manual action is typically, but not always, removal from Google’s search index. Sometimes the offending webpages are completely removed and sometimes they are only prevented from ranking.

Sites With Manual Actions

Google notifies the site publisher if a site has been issued a manual action. Only the site publisher and those with access to the website’s Search Console account are able to know. Google generally doesn’t announce which sites have received a manual action. So unless a site has completely disappeared from Google Search, it’s not possible to say with any degree of certainty whether a site has received a manual action.

The fact that a webpage has disappeared from Google’s search results is not confirmation that it has received a manual action, especially if other pages from the site can still be found.

It’s important, then, to understand that unless a website or Google publicly acknowledges a manual action, anyone on the outside can only speculate about whether a site has received one. The only exception is when a site is completely removed from the search index, in which case there’s a high probability that the site has indeed been penalized.

Big Brands Dropped From Search Results

It can’t be said with certainty that a site received a manual action if the page is still in the search index. But Aleyda Solis noticed that some big brand websites have recently stopped ranking for coupon-related search queries.

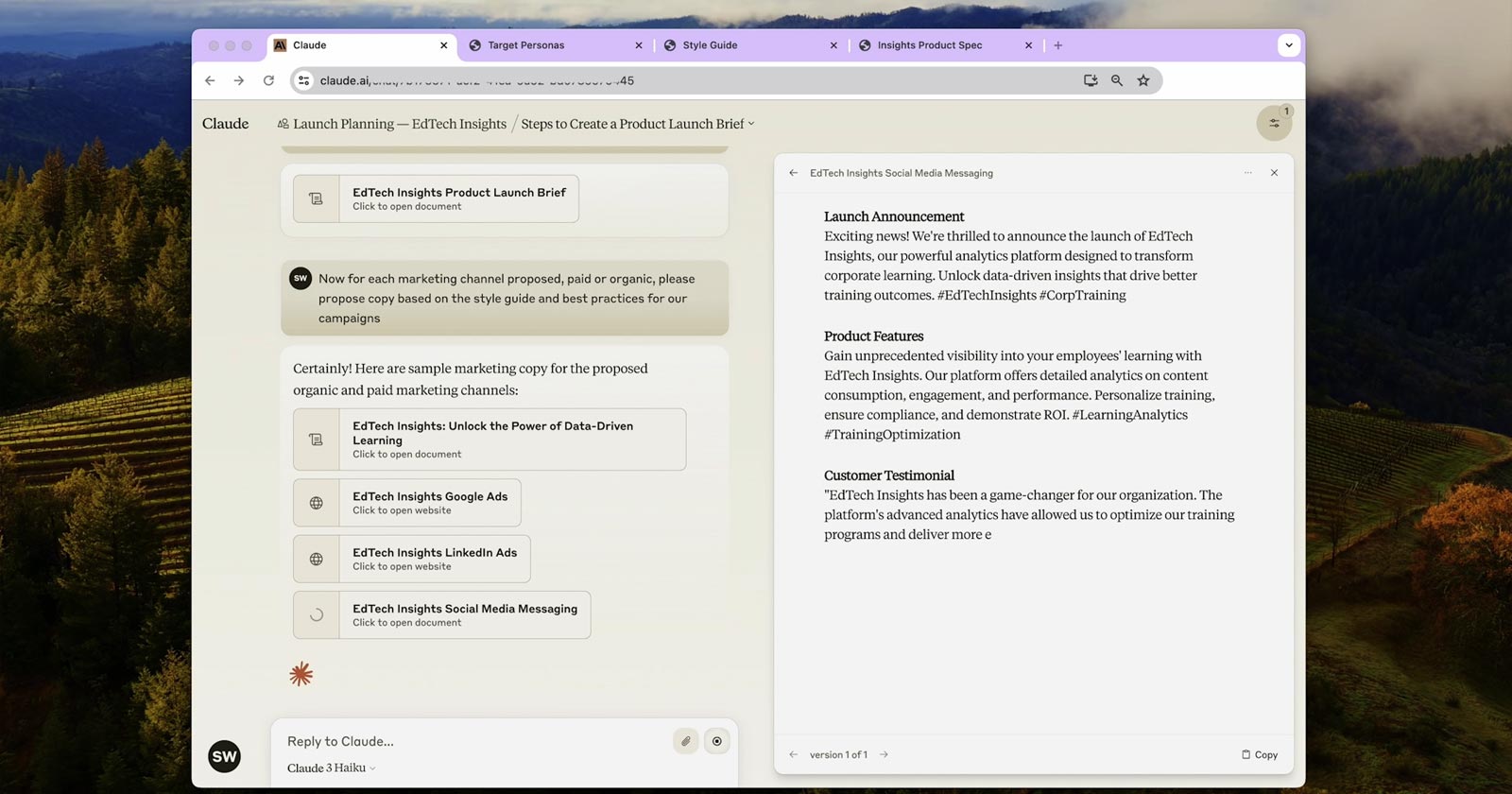

Aleyda shared screenshots of coupon-related search results from before and after the site reputation abuse policy was enforced. Her tweets showed screenshots of sites that were no longer ranking. Sites that appear to have removed their coupon webpages were highlighted in red, and sites that still hosted coupon pages but were no longer ranking in the search results were highlighted in orange.

It should be noted that Aleyda does not accuse any site of having received a manual action. She only shows that some sites are no longer ranking for coupon code search queries.

Aleyda tweeted:

“Google has already started taking action for the new site reputation abuse policy 👀👇 See the before/after for many of the most popular “promo code(s)” queries:

* carhartt promo code

* postmates promo code

* samsung promo code

* godaddy promo code

Sites that were ranking before and not anymore:

* In Orange (with still existing coupon sections): Cnet, Glamour, Reuters, USA Today, CNN, Business Insider

* In Red (with removed coupon sections): LA Times, Time Magazine, Wired, Washington Post”

Did Reuters Receive A Manual Action?

The global news agency Reuters formerly took the number one ranking spot for the keyword phrase “GoDaddy promo code” (as seen in the “before” screenshot posted by Aleyda to Twitter).

But today Reuters no longer appears in the search results for that query.

Did the Reuters GoDaddy page receive a manual action? Manual actions typically result in a webpage’s complete removal from Google’s search index, but that’s not the case with the Reuters GoDaddy coupon page. A site search for the GoDaddy coupon page shows that webpages from Reuters are still in Google’s index. The page just isn’t ranking anymore.

Reuters Coupon Page Remains In Search Index

It’s hard to say with certainty whether the Reuters page received a manual action, but what is clear is that the page is no longer ranking, as Aleyda correctly points out.

Did Reuters GoDaddy Page Violate Google’s Spam Policy?

Google’s Site Reputation Abuse policy says that a characteristic of site reputation abuse is a lack of oversight of the third-party content.

“Site reputation abuse is when third-party pages are published with little or no first-party oversight or involvement…”

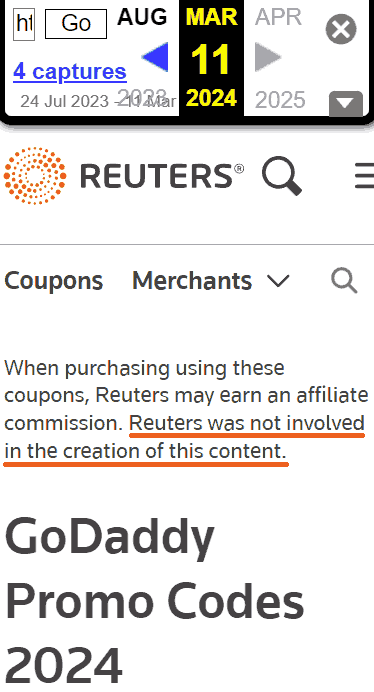

Reuters’ current GoDaddy page contains a disclaimer that asserts oversight over the third-party content.

This is the current disclaimer:

“The Reuters newsroom staff have no role in the production of this content. It was checked and verified by the coupon team of Reuters Plus, the brand marketing studio of Reuters, in collaboration with Upfeat.”

Reuters’ disclaimer asserts that there is first-party oversight, which, if accurate, would indicate that Reuters is in compliance with Google’s spam policy.

But there’s a problem. There was a completely different disclaimer prior to Google’s Site Reputation Abuse policy announcement. This raises the question as to whether Reuters changed their disclaimer in order to give the appearance that there was oversight.

Fact: Reuters Changed The Disclaimer

The current disclaimer on the Reuters coupon page asserts that there was some oversight of the third-party content. If that’s true, then Reuters complies with Google’s spam policy.

But on March 11, 2024 and earlier, Reuters published a disclaimer that clearly disavowed involvement with the third-party content.

This is what Google’s site reputation abuse policy says:

“Site reputation abuse is when third-party pages are published with little or no first-party oversight or involvement…”

And this is the March 11, 2024 disclaimer on the Reuters coupon page:

“Reuters was not involved in the creation of this content.”

Reuters Previously Denied Oversight Of 3rd Party Content

Reuters changed its disclaimer about a week after Google’s core update was announced. Up to that point, the disclaimer had consistently distanced Reuters from involvement in the content.

This is their 2023 disclaimer on the same GoDaddy Coupon page:

“This service is operated under license by Upfeat Media Inc. Retailers listed on this page are curated by Upfeat. Reuters editorial staff is not involved.”

Why did that disclaimer change after Google’s Site Reputation Abuse announcement? If Reuters is in violation, did it receive a manual action but get spared from having those pages removed from Google’s search index?

Manual Actions

Manual actions can result in the complete removal of the offending webpage from Google’s search index. That’s not what happened to Reuters and the other big brand coupon pages highlighted by Aleyda. It’s possible that the big brand coupon pages received only a ranking demotion and not the full-blown de-indexing that is common for regular sites. Or it could be that the demotion of those pages in the rankings is a complete coincidence.

Featured Image by Shutterstock/Mix and Match Studio