WordPress security scanner WPScan’s 2024 WordPress vulnerability report calls attention to WordPress vulnerability trends and suggests the kinds of things website publishers (and SEOs) should be looking out for.

Among the report’s key findings: just over 20% of vulnerabilities were rated as high or critical level threats, while medium severity threats, at 67% of reported vulnerabilities, made up the majority. Many regard medium level vulnerabilities as if they were low-level threats, but they are not, and they deserve attention.

The WPScan report advised:

“While severity doesn’t translate directly to the risk of exploitation, it’s an important guideline for website owners to make an educated decision about when to disable or update the extension.”

WordPress Vulnerability Severity Distribution

Critical level vulnerabilities, the highest level of threat, represented only 2.38% of vulnerabilities, which is essentially good news for WordPress publishers. Yet as mentioned earlier, when combined with the percentage of high level threats (17.68%), the share of concerning vulnerabilities rises to just over 20%.

Here are the percentages by severity ratings:

- Critical 2.38%

- Low 12.83%

- High 17.68%

- Medium 67.12%
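As a quick check on the arithmetic, the shares listed above can be added up in a few lines of Python. This is only a sketch using the percentages quoted in this article; the variable names are made up for illustration.

```python
# Severity shares (percent of reported vulnerabilities) as listed above.
severity_share = {
    "critical": 2.38,
    "low": 12.83,
    "high": 17.68,
    "medium": 67.12,
}

# High and critical together form the "concerning" group discussed in the text.
high_or_critical = round(severity_share["high"] + severity_share["critical"], 2)

# The four shares should add up to roughly 100%, allowing for rounding.
total = round(sum(severity_share.values()), 2)

print(high_or_critical)  # 20.06
print(total)             # 100.01
```

The combined high and critical share works out to 20.06%, and the small overshoot in the total is consistent with rounding in the published figures.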

Authenticated Versus Unauthenticated

Authenticated vulnerabilities are those that require an attacker to first obtain user credentials and their accompanying permission levels in order to exploit a particular vulnerability. Exploits that require subscriber-level authentication are the most exploitable of the authenticated exploits, and those that require administrator-level access present the least risk (although not always a low risk, for a variety of reasons).

Unauthenticated attacks are generally the easiest to exploit because anyone can launch an attack without having to first acquire a user credential.

The WPScan vulnerability report found that about 22% of reported vulnerabilities required subscriber level or no authentication at all (10.4% and 12.35% respectively), representing the most exploitable vulnerabilities. At the other end of the exploitability scale are vulnerabilities requiring admin permission levels, which represent 30.71% of reported vulnerabilities.

Permission Levels Required For Exploits

Vulnerabilities requiring administrator level credentials represented the highest percentage of exploits (30.71%), followed by Cross Site Request Forgery (CSRF) with 24.74% of vulnerabilities. This is interesting because CSRF is an attack that uses social engineering to get a logged-in victim to click a link, which then sends a request that runs with that user’s permission level. If attackers can trick an admin level user into following such a link, the forged request carries administrator privileges on the WordPress website.

The following are the percentages of vulnerabilities, grouped by the role necessary to launch an attack.

User Roles For Vulnerabilities In Ascending Order Of Percentage

- Author 2.19%

- Subscriber 10.4%

- Unauthenticated 12.35%

- Contributor 19.62%

- CSRF 24.74%

- Admin 30.71%
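To illustrate why the CSRF entry above matters, here is a minimal Python sketch of the usual defense: a token tied to the user's session that a forged link cannot supply. This is a generic illustration and not WordPress's own nonce mechanism; `SECRET_KEY`, `issue_csrf_token` and `is_valid_csrf_token` are hypothetical names.

```python
import hashlib
import hmac
import secrets

# Hypothetical server-side secret; a real site would load this from configuration.
SECRET_KEY = secrets.token_bytes(32)


def issue_csrf_token(session_id: str) -> str:
    """Derive a token bound to one session so another session cannot reuse it."""
    return hmac.new(SECRET_KEY, session_id.encode(), hashlib.sha256).hexdigest()


def is_valid_csrf_token(session_id: str, submitted_token: str) -> bool:
    """Compare in constant time to avoid leaking information through timing."""
    expected = issue_csrf_token(session_id)
    return hmac.compare_digest(expected, submitted_token)


# A form rendered for this session embeds the token; a forged request
# built by an attacker would not know it.
token = issue_csrf_token("session-abc")
print(is_valid_csrf_token("session-abc", token))     # True
print(is_valid_csrf_token("session-abc", "forged"))  # False
```

A request that arrives without the matching token can be rejected even when it carries a valid admin session, which is the gap CSRF attacks rely on.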

Most Common Vulnerability Types Requiring Minimal Authentication

Broken Access Control in the context of WordPress refers to a security failure that can allow an attacker who lacks the necessary permissions to gain access to functions or data reserved for higher permission levels.

One section of the report (Occurrence vs Vulnerability on Unauthenticated or Subscriber+ reports) looks at the vulnerability types underlying unauthenticated or subscriber level vulnerabilities. There, WPScan breaks down the percentages for the vulnerability types most common among the exploits that are easiest to launch, because they require minimal to no user credential authentication.

The WPScan threat report noted that Broken Access Control represents a whopping 84.99% followed by SQL injection (20.64%).

The Open Worldwide Application Security Project (OWASP) defines Broken Access Control as:

“Access control, sometimes called authorization, is how a web application grants access to content and functions to some users and not others. These checks are performed after authentication, and govern what ‘authorized’ users are allowed to do.

Access control sounds like a simple problem but is insidiously difficult to implement correctly. A web application’s access control model is closely tied to the content and functions that the site provides. In addition, the users may fall into a number of groups or roles with different abilities or privileges.”
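A small Python sketch can make the distinction between authentication and authorization concrete. It is a simplified illustration, not code from WordPress or any plugin; the `ROLE_RANK` table, the `User` class and both functions are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical role ranking, loosely modeled on the roles named in this article.
ROLE_RANK = {"subscriber": 1, "contributor": 2, "author": 3, "admin": 4}


@dataclass
class User:
    name: str
    role: str


def delete_post_unsafe(user: User, post_id: int) -> str:
    # Broken access control: the caller is logged in, but nothing checks
    # whether their role is allowed to perform this action.
    return f"post {post_id} deleted by {user.name}"


def delete_post_checked(user: User, post_id: int, required_role: str = "admin") -> str:
    # Authorization check: refuse unless the user's role ranks high enough.
    if ROLE_RANK.get(user.role, 0) < ROLE_RANK[required_role]:
        raise PermissionError(f"{user.role} may not delete posts")
    return f"post {post_id} deleted by {user.name}"
```

In the unsafe version a subscriber can delete any post simply by calling the function, while the checked version raises `PermissionError` for anyone below the required role.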

SQL injection, at 20.64%, represents the second most prevalent type of vulnerability. WPScan referred to it as both “high severity and risk” in the context of vulnerabilities requiring minimal authentication levels, because attackers can access and/or tamper with the database, which is the heart of every WordPress website.

These are the percentages:

- Broken Access Control 84.99%

- SQL Injection 20.64%

- Cross-Site Scripting 9.4%

- Unauthenticated Arbitrary File Upload 5.28%

- Sensitive Data Disclosure 4.59%

- Insecure Direct Object Reference (IDOR) 3.67%

- Remote Code Execution 2.52%

- Other 14.45%
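To show why SQL injection in the list above is treated as high risk, here is a minimal sketch using Python's built-in `sqlite3` module. WordPress itself runs on PHP and MySQL, so this is only an analogy; the table, the function names and the payload are assumptions made up for the example.

```python
import sqlite3

# In-memory database with a hypothetical users table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, login TEXT)")
conn.executemany("INSERT INTO users (login) VALUES (?)", [("alice",), ("bob",)])


def find_user_unsafe(login: str) -> list:
    # Vulnerable: user input is concatenated straight into the SQL text.
    query = "SELECT id, login FROM users WHERE login = '" + login + "'"
    return conn.execute(query).fetchall()


def find_user_safe(login: str) -> list:
    # Parameterized: the value is passed separately from the SQL text.
    return conn.execute(
        "SELECT id, login FROM users WHERE login = ?", (login,)
    ).fetchall()


payload = "x' OR '1'='1"
print(len(find_user_unsafe(payload)))  # 2, every row leaks
print(find_user_safe(payload))         # [], the payload is treated as a plain string
```

The same input returns the whole table through the concatenated query and nothing through the parameterized one, which is the difference a single missing safeguard can make.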

Vulnerabilities In The WordPress Core Itself

The overwhelming majority of vulnerability issues were reported in third-party plugins and themes. However, a total of 13 vulnerabilities were reported in the WordPress core itself in 2023. Of those thirteen, only one was rated as a high severity threat, which is the second highest level under the Common Vulnerability Scoring System (CVSS), with Critical being the highest.

The WordPress core platform itself is held to the highest standards and benefits from a worldwide community that is vigilant in discovering and patching vulnerabilities.

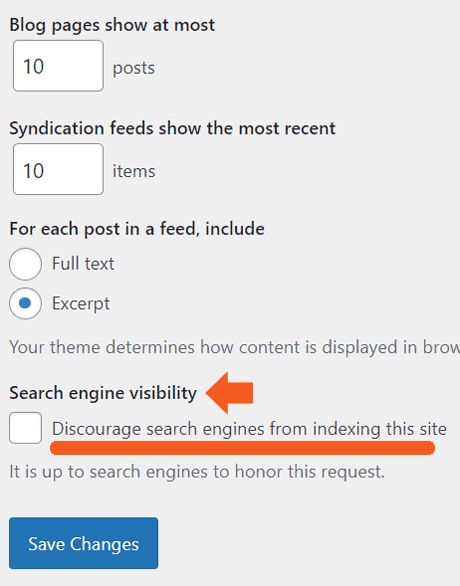

Website Security Should Be Considered As Technical SEO

Site audits don’t normally cover website security but in my opinion every responsible audit should at least talk about security headers. As I’ve been saying for years, website security quickly becomes an SEO issue once a website’s rankings start disappearing from the search engine results pages (SERPs) due to being compromised by a vulnerability. That’s why it’s critical to be proactive about website security.

According to the WPScan report, the main points of entry for hacked websites were leaked credentials and weak passwords. Ensuring strong password standards plus two-factor authentication is an important part of every website’s security stance.

Using security headers is another way to help protect against Cross-Site Scripting and other kinds of vulnerabilities.
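As a rough idea of what an audit could check, the Python sketch below compares a response's headers against a short list of commonly recommended security headers. The list is an assumption chosen for illustration and does not come from the WPScan report; `missing_security_headers` and the sample response are hypothetical.

```python
# A commonly recommended set of response headers; this selection is an
# assumption for illustration, not a list taken from the WPScan report.
RECOMMENDED_HEADERS = [
    "Content-Security-Policy",
    "Strict-Transport-Security",
    "X-Content-Type-Options",
    "X-Frame-Options",
    "Referrer-Policy",
]


def missing_security_headers(response_headers: dict) -> list:
    """Return the recommended headers absent from a response (case-insensitive)."""
    present = {name.lower() for name in response_headers}
    return [h for h in RECOMMENDED_HEADERS if h.lower() not in present]


# Hypothetical headers from a site that sets only two of the five.
sample = {
    "content-security-policy": "default-src 'self'",
    "X-Frame-Options": "SAMEORIGIN",
}
print(missing_security_headers(sample))
# ['Strict-Transport-Security', 'X-Content-Type-Options', 'Referrer-Policy']
```

The header names are matched case-insensitively because HTTP header names are not case-sensitive. Whether each header is appropriate, and with which value, depends on the individual site.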

Lastly, a WordPress firewall and website hardening are also useful proactive approaches to website security. I once added a forum to a brand new website I created and it was immediately under attack within minutes. Believe it or not, virtually every website worldwide is under attack 24 hours a day by bots scanning for vulnerabilities.

Read the WPScan Report:

WPScan 2024 Website Threat Report

Featured Image by Shutterstock/Ljupco Smokovski
