WordPress released the results of its annual user and developer survey, which showed mixed feelings about the direction the software is going and an increasing sense of not being welcome in the overall WordPress community.

The Gutenberg Editor

Gutenberg is the modernized version of the default site editor, which brings the paradigm of a visual editor to the WordPress core.

Third party visual WordPress editors have revolutionized the process of building websites with WordPress, making it relatively easy to create websites with intuitive interfaces.

That was the goal behind Gutenberg, which introduced the full site editor in 2022. The WordPress core development team has spent the last two years making incremental improvements to the user interface to make it more intuitive, as well as adding more features.

What was reflected in the 2023 annual survey, especially in contrast to the previous year, is a sense that users are feeling less confident in Gutenberg, even though more publishers are using Gutenberg now than at any other time.

Which Editor Do You Use?

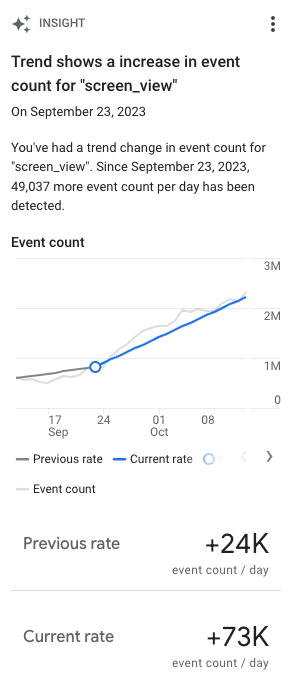

Question nine tracks the percentage of users adopting Gutenberg, showing a steady increase of users from 37% in 2020 to 60% in 2023.

But according to the answers to question 10, which asks whether WordPress meets their needs, 29% of users disagreed and fewer than half (45%) agreed. A full 26% of respondents answered that they were neutral.

Those results mean that 55% of WordPress users did not answer that WordPress meets their needs. This was the first year the question was asked so there’s no data to show whether that’s an increase or a decrease but it’s still an underwhelming result.

Fewer Users Believe WordPress Is As Good As Others

Question #19 asked if WordPress was as good as or better than other site builders and content management systems.

In 2022 68% of users agreed that WordPress was as good as or better. That number dropped to 63% in 2023.

The share of users who disagreed that WordPress is as good as or better increased from 9% in 2022 to 13% in 2023, and the share who were neutral increased by one percentage point to 24% of respondents.

That means that in 2023, 37% of WordPress users responding to the survey did not agree with the statement that WordPress is as good as or better, an increase of five percentage points from the previous year.
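The arithmetic behind that figure can be checked with a short sketch. The 2022 neutral share of 23% is an assumption inferred from the one-point increase to 24%; the function and variable names are illustrative only.

```python
# Shares (in percent) for the "as good as or better" question, as reported above.
# The 2022 neutral value (23) is inferred from the one-point rise to 24 in 2023.
responses = {
    2022: {"agree": 68, "neutral": 23, "disagree": 9},
    2023: {"agree": 63, "neutral": 24, "disagree": 13},
}


def not_agreeing(shares):
    """Share of respondents who were neutral or disagreed."""
    return shares["neutral"] + shares["disagree"]


# Difference between the two years, in percentage points.
change = not_agreeing(responses[2023]) - not_agreeing(responses[2022])

print(not_agreeing(responses[2022]), not_agreeing(responses[2023]), change)
```

The difference is expressed in percentage points rather than percent, because it subtracts one share from another.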

Clearly the results about how users feel about Gutenberg and WordPress in general indicate that users are losing confidence in WordPress.

That response must surely be a disappointment to the core development team because the 2023 version of Gutenberg is actually more intuitive to use than it has ever been, and the WordPress performance scores are also at all-time highs.

So what’s going on? Why are user satisfaction signals trending downward?

Why User Satisfaction Is Trending Downward

One clue as to why user happiness and confidence in WordPress are trending downward may be that users are looking over the fence at the Wix and Duda platforms, which boast significantly better performance scores and are also easier to build websites with.

On the other side of the fence are third-party website builders (like Bricks Builder, Breakdance Website Builder, and Elementor) and WordPress hosts (Bluehost) that offer an arguably superior website building experience for developers who need advanced flexibility and for users who don’t know how to code.

Perhaps a clue to why user satisfaction is dropping can be found in the answers to question 20, which asks what the three best things about WordPress are.

The biggest declines were for:

- Ease of use

- Flexibility

- Cost

- Block themes

Ease Of Use

In 2022, 32% of users cited Ease Of Use as one of the three best things about WordPress. In 2023 that number dropped to 21.7%.

Flexibility

Flexibility was cited by 31% of users in 2022, but by 2023 that number dropped to 18.5%.

Cost

In 2022 37% of users cited Cost as one of the best things but by 2023 that number collapsed to 17%.

Block Themes

The share of users citing Block Themes as one of the three best things went from 10% in 2022 to only 5.3% in 2023.
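To compare those four declines side by side, here is a minimal sketch that ranks them by the size of the drop in percentage points. It uses only the figures quoted above; the variable names are illustrative.

```python
# (2022 share, 2023 share) in percent, as quoted in the sections above.
best_things = {
    "Ease of use": (32.0, 21.7),
    "Flexibility": (31.0, 18.5),
    "Cost": (37.0, 17.0),
    "Block themes": (10.0, 5.3),
}

# Drop in percentage points for each answer, rounded to one decimal place.
drops = {
    name: round(before - after, 1)
    for name, (before, after) in best_things.items()
}

# Largest decline first.
ranked = sorted(drops.items(), key=lambda item: item[1], reverse=True)

for name, drop in ranked:
    print(f"{name}: down {drop} percentage points")
```

Ranked this way, Cost shows the steepest decline at 20 points, followed by Flexibility, Ease of use and Block themes.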

Users aren’t feeling it for WordPress and that lack of “feels” is reflected in the market share statistics reported by W3Techs, which indicate a two-year downward trend in market share.

Market share dropped from 43.3% in 2022 (cited in an article by Joost deValk) to 43.2% in February 2023 and then to 43.1% in February 2024, according to W3Techs.

Wix usage increased from 2.5% in February 2023 to 2.6% in 2024. Shopify went from 3.8% in 2023 to 4.3% in 2024.

Joost deValk, co-founder of Yoast SEO, sounded the alarm back in 2022 when he noted that WordPress market share was shrinking, pointing to the slow pace of performance improvements and the difficulty of using WordPress as two major reasons for the shrinking market share.

The article written by Joost explained:

“WordPress has a performance team now, and it has made some progress. But the reality is that it hasn’t really made big strides yet… I think WordPress, for the first time in a decade, is being out-‘innovated’.”

What Frustrates WordPress Users

Another clue as to why WordPress users are increasingly expressing dissatisfaction is what they find most frustrating about WordPress, noted in question 21, where survey respondents were asked to choose the top three most frustrating things.

The answer of “too many plugins (finding the right one)” experienced a whopping 133% increase, with 8% citing too many plugins in 2022 and 18.6% in 2023.
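The 133% figure is a relative change, not a difference in percentage points. A small sketch, with illustrative function names, shows how the two measures differ for the numbers above:

```python
def relative_change(old, new):
    """Percent change from old to new, measured relative to old."""
    return (new - old) / old * 100


def point_change(old, new):
    """Difference between two percentage shares, in percentage points."""
    return new - old


# Share of respondents citing "too many plugins", as quoted above.
plugins_2022, plugins_2023 = 8.0, 18.6

relative = round(relative_change(plugins_2022, plugins_2023), 1)
points = round(point_change(plugins_2022, plugins_2023), 1)

print(f"Relative change: {relative}%")
print(f"Point change: {points} percentage points")
```

The relative change works out to about 132.5%, which rounds to the 133% cited, while the share itself rose by 10.6 percentage points.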

Site editing experience (17%), security (16.4%), and performance (16.2%) were top sources of frustration with WordPress.

One bright spot is that the number of respondents who were frustrated because site editing is difficult to learn dropped from 26% in 2022 to 15% in 2023.

Those answers were echoed in question 25, which asked which three areas of WordPress need more attention.

Here are the top five areas users say need more attention:

- Performance 19%

- Security 18%

- Developer resources (examples, demos, docs, tutorials, etc.) 16%

- Design/UI 14%

- Core functionality/stability 13%

The Future Of WordPress

WordPress was at a crossroads two years ago with regard to site performance and took steps to address those problems. But its competitors are “out-innovating” it by improving at a faster pace, not just in site speed but in ease of use, SEO and features.

The results of this survey provide clear direction to the WordPress community, which has a history of being responsive to user needs. Part of the solution is acknowledging the search marketing, affiliate and publishing communities, which are influential but not recognized in the annual surveys.

When I saw the survey last year, I offered the core development team feedback about question number five, which asked how respondents used WordPress.

These were the choices:

- A personal or passion project

- A service offering for my clients

- A platform for running my business

- A website for my employer or place of work

- School or academics or research

- None of the above

What was missing were the categories of content publishing, affiliate marketing, recipe bloggers and local businesses.

Lumping WordPress users like Disney with family-run restaurants and recipe bloggers into the category of a “platform for running my business” is unhelpful and provides little actionable insight. That oversight feeds into the perception that WordPress is aloof to the millions of users that the survey seeks to understand.

The good news is that WordPress is not aloof. The survey provides feedback on how the publishing community feels. My email conversations with members of the core development team make it clear to me that they are keen to embrace all their users as part of the greater WordPress community.

Read the summary of the WordPress survey:

2023 Annual Survey Results and Next Steps

Download the PDF version with more details:

Report for 2023 WordPress Annual Survey

Featured Image by Shutterstock/Krakenimages.com