New data from the Audience Key content marketing platform indicates that Amazon's visibility in Google's organic Shopping results has dropped significantly. The decline follows two changes Amazon made to its presence in Google Shopping, although it is unclear whether those changes caused the drop directly or indirectly.

The first change was the discontinuation of its paid Shopping ads, and the second was the consolidation of its three merchant store names (Amazon, Amazon.com, and Amazon.com – Seller) into a single store identity, “Amazon.” These changes appear to have had a measurable effect on how often Amazon product cards appear in Google’s organic Shopping results.

Audience Key is a content marketing platform that fills a gap in competitive intelligence by tracking and reporting on Google's organic product grid rankings at scale. The product has only recently rolled out.

According to Audience Key:

“Across 79,000+ keywords, Audience Key’s first-of-its-kind tracking showed the effects of Amazon’s changes to its merchant feed — the approach initially wiped out 31% of its organic product card rankings. Weeks later, Amazon has now disappeared completely — creating a seismic shift that is immediately reshaping e-commerce SERPs and freeing up prime shelf space for rivals.”

Tom Rusling, founder of Audience Key, notified me today that Amazon has since dropped out of the organic product grids completely, beginning on August 18th.

Anecdotally, I've also seen Amazon disappear from Google's organic product grids, including for search queries I know for certain it used to rank for. Its listings are now completely gone from those search engine results pages (SERPs).

Overall Impact

The most immediate change was the overall scale of Amazon’s presence. Before July 25, Amazon’s listings appeared in 428,984 organic product cards. After the change, that presence dropped to 294,983.

- Before July 25: 428,984 product cards

- After July 25: 294,983 product cards

Net change: -134,001 cards (31% decline)
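The headline figures can be checked with a few lines of arithmetic. The sketch below assumes the reported percentage is the drop divided by the "before" count, rounded to a whole number; the helper name `percent_decline` is hypothetical and not part of Audience Key's tooling.

```python
def percent_decline(before: int, after: int) -> float:
    """Return the drop from `before` to `after` as a percentage of `before`.

    Hypothetical helper: assumes decline = (before - after) / before * 100.
    """
    if before <= 0:
        raise ValueError("before must be positive")
    return (before - after) / before * 100


# Product-card counts before and after July 25, as reported by Audience Key.
before, after = 428_984, 294_983

net_change = after - before  # negative means product cards were lost
decline = percent_decline(before, after)

print(f"Net change: {net_change:,} cards")  # Net change: -134,001 cards
print(f"Decline: {decline:.0f}%")           # Decline: 31%
```

The unrounded decline is about 31.2%, which is consistent with the 31% figure reported above.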

This suggests that Amazon's changes amounted to more than a brand consolidation: they coincided with a large reduction in visibility. One possibility is that the consolidation itself, because it was such a wide-scale change, triggered a temporary drop in visibility.

Category-Level Changes

The reduction was not spread evenly. Some product categories were hit harder than others. Apparel had the steepest losses, while categories like Home Goods and Laptop Computers also fell sharply.

Smaller categories such as Tires and Indoor Decor declined more moderately, but all showed the same downward trend.

Apparel Category Experiences The Largest Declines

Apparel stands out as the category where Amazon saw the steepest reductions, with its presence cut by more than half across several tracked segments.

Below is the data I currently have. I'm waiting for clarification from Audience Key about whether the following apparel entries refer to more specific subcategories:

- Apparel: 4,571 → 1,804 (-60%)

- Apparel: 4,503 → 1,859 (-59%)

- Apparel: 31,852 → 13,632 (-57%)

- Apparel: 6,932 → 3,029 (-56%)

Several Other Major Categories Affected

The losses were also large in high-volume categories. Home Goods, Laptop Computers, and Outdoor Furnishings all saw reductions, while Business Supplies and Technology products also suffered visibility declines.

- Business Supplies: 12,510 → 9,786 (-22%)

- Home Goods: 133,717 → 73,833 (-45%)

- Laptop Computers: 30,520 → 19,615 (-36%)

- Outdoor Furnishings: 58,416 → 41,995 (-28%)

- Scientific and Technology: 58,880 → 50,666 (-14%)

Smaller Categories Also Affected

Even niche verticals were affected, though the percentage losses were less severe than in Apparel or Home Goods. These declines show Amazon’s reductions were spread across both major and smaller categories.

- Structures: 6,241 → 4,229 (-32%)

- Tires: 3,063 → 2,609 (-15%)

- Indoor Decor: 23,634 → 19,789 (-16%)

- Indoor Decor (variant): 6,626 → 5,926 (-11%)
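To see how unevenly the losses were distributed, the category figures above can be ranked by percentage decline. This is a minimal sketch under the same assumption as the headline numbers (decline = (before - after) / before, rounded to a whole percent). Only the largest Apparel entry and the main Indoor Decor entry are included, since it is not yet clear what the other Apparel and Indoor Decor segments represent; the `percent_decline` helper is hypothetical.

```python
def percent_decline(before: int, after: int) -> float:
    """Hypothetical helper: drop from `before` to `after` as a % of `before`."""
    return (before - after) / before * 100


# (category, cards before July 25, cards after July 25), as reported by Audience Key.
data = [
    ("Apparel", 31_852, 13_632),
    ("Business Supplies", 12_510, 9_786),
    ("Home Goods", 133_717, 73_833),
    ("Laptop Computers", 30_520, 19_615),
    ("Outdoor Furnishings", 58_416, 41_995),
    ("Scientific and Technology", 58_880, 50_666),
    ("Structures", 6_241, 4_229),
    ("Tires", 3_063, 2_609),
    ("Indoor Decor", 23_634, 19_789),
]

# Sort from steepest to mildest percentage decline.
ranked = sorted(data, key=lambda row: percent_decline(row[1], row[2]), reverse=True)

for name, before, after in ranked:
    print(f"{name}: {before:,} -> {after:,} (-{percent_decline(before, after):.0f}%)")
```

Ranked this way, Apparel comes first and Home Goods second, while Tires and Scientific and Technology sit near the bottom, matching the pattern described in the category sections.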

Merchant Store Consolidation

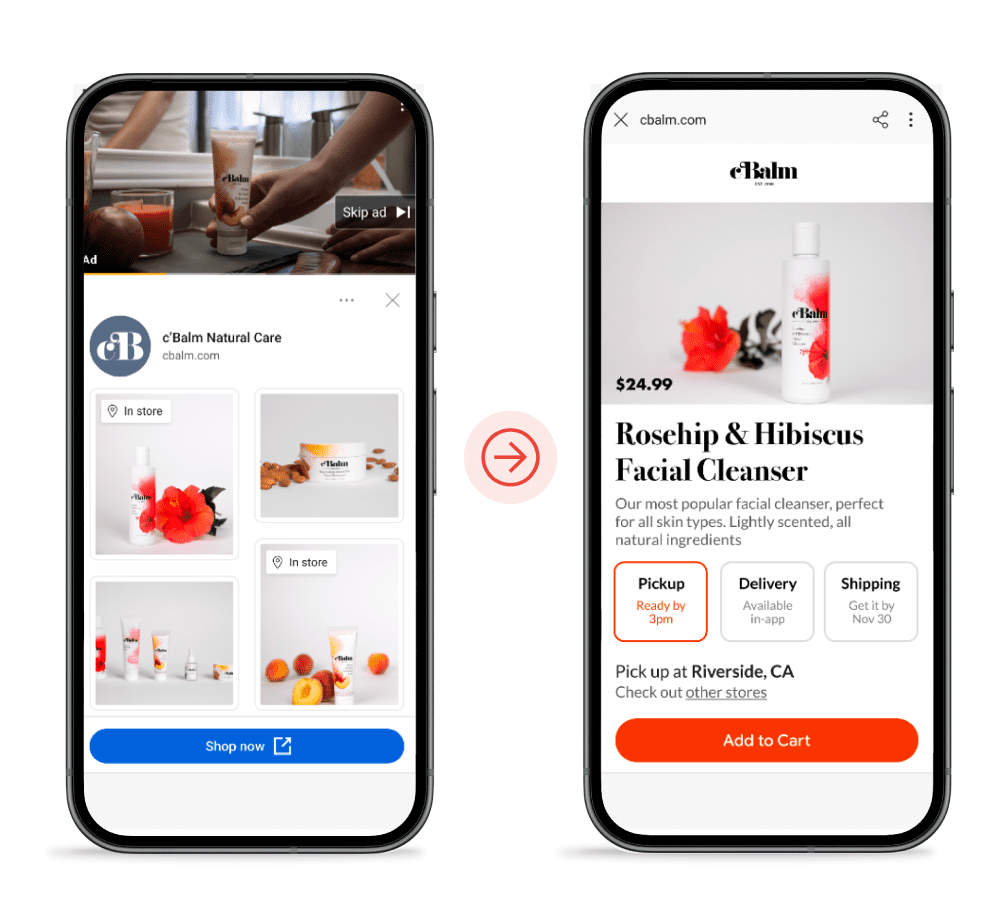

Another change came from how Amazon presented itself in Shopping results. Before July 25, the company appeared under three names: Amazon, Amazon.com, and Amazon.com – Seller. Afterward, only the unified “Amazon” label remained.

- Total before consolidation (all three names): 428,984 product cards

- After consolidation (single “Amazon”): 294,980 product cards

This simplified Amazon’s presence by unifying it under one name, but it also coincided with a decline in overall coverage.

Where Does Amazon Stand Today?

Even after the July drop, Amazon remained the most visible merchant in Google Shopping, albeit with less visibility than before. That is no longer the case: Amazon's situation appears to have worsened.

Audience Key speculated on what is going on:

“We thought the first chapter of this story was complete, but just as we prepared this study for publication, everything changed. Again. Our latest U.S. search data reveals a stunning shift: Amazon vanished from the organic product grids.

Whether this is a short-term anomaly or a more permanent new normal, only time will tell. We will continue to monitor and report on our findings. The sudden removal leaves us — and the industry — asking one big question: WHY???

That is certainly a topic for speculation.”

Audience Key speculates that Amazon may be withholding its product feed from Google, or that the disappearance reflects some other technical or strategic change on Amazon's part.

One thing we know about Google organic search is that large-scale changes can dramatically affect search visibility. Audience Key's product focuses on tracking Google's product grids, which many ecommerce companies may find useful, and it appears well positioned to spot this kind of change.

Read Audience Key’s blog post about these changes:

Beyond Paid: The Hidden Organic Shockwave from Amazon’s Google Shopping Exit

Featured Image by Shutterstock/Sergei Elagin