New research from BrightEdge shows that Google AI Overviews, AI Mode, and ChatGPT recommend different brands nearly 62% of the time. BrightEdge concludes that each AI search platform interprets the data in its own way, suggesting that each platform calls for a different way of thinking about brand visibility.

Methodology And Results

BrightEdge’s analysis was conducted with its AI Catalyst tool, running the same tens of thousands of queries across ChatGPT, Google AI Overviews (AIO), and Google AI Mode. The research documented a 61.9% overall disagreement rate, with only 33.5% of queries showing the exact same brands across all three AI platforms.

Google AI Overviews averaged 6.02 brand mentions per query, compared to ChatGPT’s 2.37. Commercial intent queries containing phrases like “buy,” “where,” or “deals” generated brand mentions 65% of the time across all platforms, suggesting that these high-intent keyword phrases remain reliable for ecommerce, just as they are in traditional search. Understandably, the ecommerce and finance verticals achieved 40% or more brand-mention coverage across all three AI platforms.

Three Platforms Diverge

The three AI platforms in the study frequently disagreed. Many identical queries led to very different brand recommendations depending on the AI platform.

BrightEdge shares that:

- ChatGPT cites trusted brands even when it isn’t grounding its answers in search data, indicating that it relies on its LLM training data.

- Google AI Overviews cites brands about 2.5 times as often as ChatGPT.

- Google AI Mode cites brands less often than both ChatGPT and AIO.

The research indicates that ChatGPT favors trusted brands, Google AIO emphasizes breadth of coverage with more brand mentions per query, and Google AI Mode selectively recommends brands.

Next, we untangle why these patterns exist.

Differences Exist

BrightEdge asserts that this split across the three platforms is not random. I agree that the differences are real, but I disagree that “authority” has anything to do with them, and I offer an alternative explanation later on.

These are the conclusions that they draw from the data:

- “The Brand Authority Play:

ChatGPT’s reliance on training data means established brands with strong historical presence can capture mentions without needing fresh citations. This creates an “authority dividend” that many brands don’t realize they’re already earning—or could be earning with the right positioning.

- The Volume Opportunity:

Google AI Overview’s hunger for brand mentions means there are 6+ available slots per relevant query, with clear citation paths showing exactly how to earn visibility. While competitors focus on traditional SEO, innovative brands are reverse-engineering these citation networks.

- The Quality Threshold:

Google AI Mode’s selectivity means fewer brands make the cut, but those that do benefit from heavy citation backing that reinforces their authority across the web.”

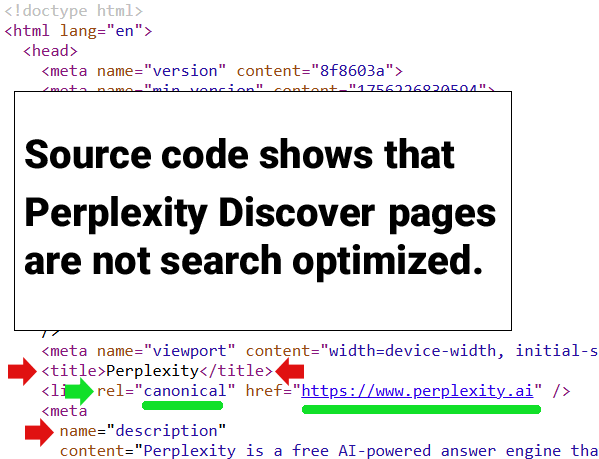

Not Authority – It’s About Training Data

BrightEdge refers to “authority signals” within ChatGPT’s underlying LLM. My view differs when it comes to an LLM’s generated output, as opposed to retrieval-augmented responses that pull in live citations. I don’t think there are any signals in the sense of ranking signals. In my opinion, the LLM is simply reaching for the entity (brand) associated with a topic.

What looks like “authority” to someone with their SEO glasses on is more likely about frequency, prominence, and contextual embedding strength.

- Frequency:

How often the brand appears in the training data.

- Prominence:

How central the brand is in those contexts (headline vs. footnote).

- Contextual Embedding Strength:

How tightly the brand is associated with certain topics based on the model’s training data.

In my opinion, a brand that appears widely in relevant contexts within the training data is more likely to be generated as a brand mention by the LLM. That outcome reflects patterns in the training data, not authority.

That said, I agree with BrightEdge that being authoritative is important, and that quality shouldn’t be minimized.

Patterns Emerge

The research data suggests that certain patterns act as brand citation triggers across the three platforms. One pattern all three share is that keyword phrases with high commercial intent generate brand mentions in nearly two-thirds of cases. Industries like ecommerce and finance achieve higher brand coverage, which, in my opinion, reflects the ability of all three platforms to accurately understand the strong commercial intent inherent in keywords from those two verticals.

A little sunshine in a partly cloudy publishing environment is the finding that comparison queries for “best” products generate brand citations 43% of the time across all three AI platforms, again reflecting the ability of those platforms to understand the context of user queries.

Citation Network Effect

BrightEdge offers an interesting insight about building a presence on all three platforms, which it calls the citation network effect. BrightEdge asserts that earning citations on one platform could influence visibility on the others.

They share:

“A well-crafted piece… could:

Earn authority mentions on ChatGPT through brand recognition

Generate 6+ competitive mentions on Google AI Overview through comprehensive coverage

Secure selective, heavily-cited placement on Google AI Mode through third-party validation

The citation network effect means that earning mentions on one platform often creates the validation needed for another.”

Optimizing For Traditional Search Remains

I agree with BrightEdge that there’s a strategic opportunity in creating content that works across all three environments, and I would state explicitly that SEO (optimizing for traditional search) is the keystone on which the entire strategy is built.

Traditional SEO is still the way to build visibility in AI search. BrightEdge’s data indicates that it has a direct effect on visibility in AIO and a more indirect effect on AI Mode and ChatGPT.

ChatGPT can cite brand names from live search data as well as directly from its LLM training data. Because it draws on the LLM itself, building strong brand visibility tied to specific products and services may be helpful, since that visibility is what eventually makes it into AI training data.

BrightEdge’s conclusion leans heavily into the idea that AI is creating opportunities for businesses that build brand awareness around the topics they want to be surfaced for.

They share:

“We’re witnessing the emergence of AI-native brand discovery. With this fundamental shift, brand visibility is determined not by search rankings but by AI recommendation algorithms with distinct personalities and preferences.

The brands winning this transition aren’t necessarily the ones with the biggest SEO budgets or the most content. They’re the ones recognizing that AI disagreement creates more paths to visibility, not fewer.

As AI becomes the primary discovery mechanism across industries, understanding these platform-specific triggers isn’t optional—it’s the difference between capturing comprehensive brand visibility and watching competitors claim the opportunities you didn’t know existed.

The 62% disagreement gap isn’t breaking the system. It’s creating one—and smart brands are already learning to work it.”

BrightEdge’s report:

ChatGPT vs Google AI: 62% Brand Recommendation Disagreement

Featured Image by Shutterstock/MMD Creative