Google On Scaled Content: “It’s Going To Be An Issue” via @sejournal, @martinibuster

Google’s John Mueller and Danny Sullivan discussed why AI-generated content is problematic, citing the newly updated quality rater guidelines and sharing examples of how AI can be used in a positive way that adds value.

Danny Sullivan, Google’s Search Liaison, spoke about the topic in more detail, offering an example of a high-quality use of AI-generated content as a contrast to what isn’t a good use of it.

Update To The Quality Rater Guidelines

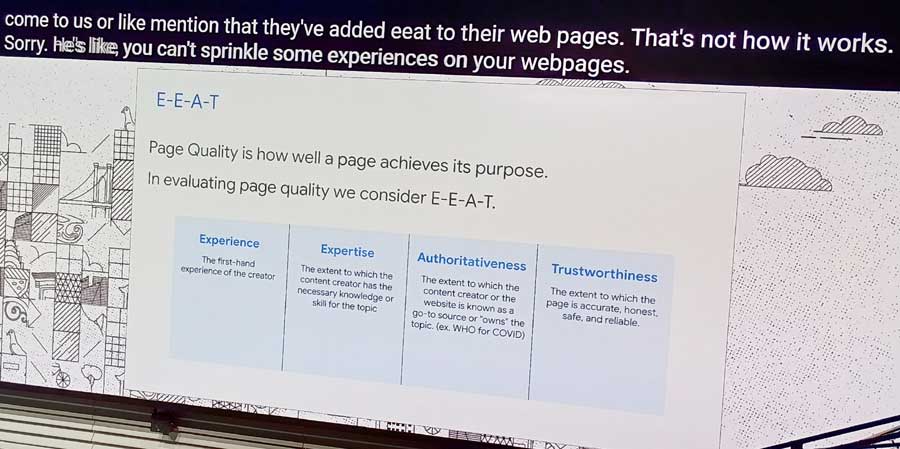

The Quality Rater Guidelines (QRG) is a document Google created to guide the third-party quality raters who rate tests of changes to Google’s search results. It was recently updated and now includes guidance about AI-generated content, folded into a section about content created with little effort or originality.

Mueller discussed AI-generated content in the context of scaled content abuse, noting that quality raters are taught to rate that kind of content as low quality.

The new section of the QRG advises the raters:

“The lowest rating applies if all or almost all of the MC on the page (including text, images, audio, videos, etc) is copied, paraphrased, embedded, auto or AI generated, or reposted from other sources with little to no effort, little to no originality, and little to no added value for visitors to the website. Such pages should be rated Lowest, even if the page assigns credit for the content to another source.”

Doesn’t Matter How It’s Scaled: It’s Going To Be An Issue

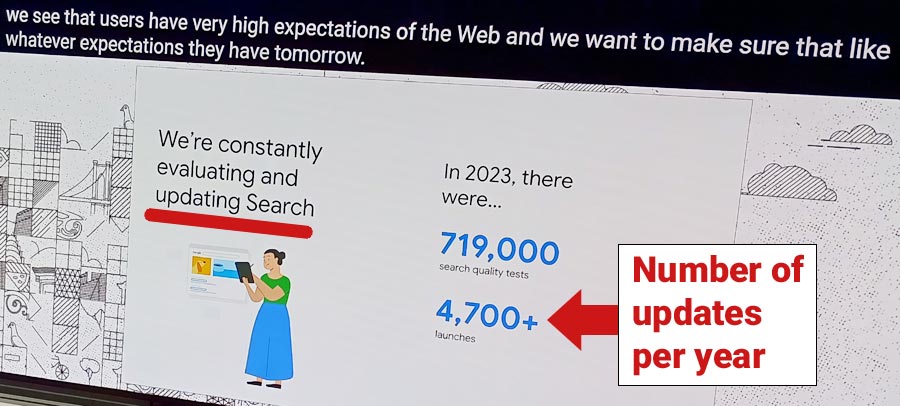

Sullivan started his part of the discussion by saying that, to Google, AI-generated content is no different from the scaled content tactics of the past, comparing it to the spam tactics of 2005, when Google used statistical analysis and other methods to catch scaled content. He also emphasized that it doesn’t matter how the content was scaled.

According to my notes, here’s a paraphrase of what he said:

“The key things are, large amounts of unoriginal content and also no matter how it’s created.

Because like, ‘What are you going to do about AI? How are you going to deal with all the AI explosion? AI can generate thousands of pages?’

Well 2005 just called, it’d like to explain to you how human beings can generate thousands of pages overnight that look like they’re human generated because they weren’t human generated and etcetera, etcetera, etcetera.

If you’ve been in the SEO space for a long time, you well understand that scaled content is not a new type of thing. So we wanted to really stress: we don’t really care how you’re doing this scaled content, whether it’s AI, automation, or human beings. It’s going to be an issue.

So those are things that you should consider if you’re wondering about the scaled content abuse policy and you want to avoid being caught by it.”

How To Use AI In A Way That Adds Value

A helpful thing about Danny’s session is that he offered an example of a positive use of AI, citing how retailers offer a summary of actual user reviews that conveys overall user sentiment about a product without the shopper having to read every review. This is an example of AI providing added value rather than being the entire main content.

This is from my notes of what he said:

“When I go to Amazon, I skip down to the reviews and the reviews have a little AI-generated thing at the top that tells me what the users generally think, and I’m like, this is really helpful.

And the thing that’s really helpful to me about it is, it’s AI applied to original content, the reviews, to give me a summary. That was added value for me and unique value for me. I liked it.”

As Long As It’s High Quality…

Danny next discussed how Google tried to put out a detailed policy about AI-generated content, but he said some parts of the SEO community misconstrued it to mean that AI-generated content was fine as long as it was high quality.

In my 25 years of SEO experience, let me tell you: whenever an SEO tells you that a tactic is fine “as long as it’s quality,” run. The “as long as it’s quality” excuse has been used to justify low-quality SEO practices like reciprocal links, directory links, paid links, and guest posts. If it’s not already an SEO joke, it should be.

Danny continued:

“And then people’s differentiation of what’s quality is all messed up. And they say, ‘Google doesn’t care if it’s AI!’ And that is not really what we said.

We didn’t say that.”

Don’t Mislead Yourself About Quality Of Scaled Content

Danny advised that anyone using AI-generated content should weigh two things as tests of whether it’s a good idea:

- The motivation for mass-generating content.

- Unoriginality of the scaled content.

Traffic Motivated Content

The motivation shouldn’t be that it will bring more traffic. The motivation should be that there’s added value for site visitors.

This is how Danny Sullivan explained it, according to my notes:

“Any method that you undertake to mass generate content, you should be carefully thinking about it. There’s all sorts of programmatic things, maybe they’re useful. Maybe they’re not. But you should think about it.

And the things to especially think about is if you’re primarily going into it to game search traffic.

Like, if the primary intent of the content was, ‘I’m going to get that traffic’ and not, ‘some user actually expected it’ if they ever came to my website directly. That’s one of the many things you can use to try to determine it.”

Originality Of Scaled Content

SEOs who praise their AI-generated content lose their enthusiasm when the content is about a topic they’re actually expert in, and they will concede that it’s not as smart as they are. What’s going on is that if you are not an expert, you lack the expertise to judge the credibility of AI-generated content.

AI is trained to crank out the next likeliest word in a series of words, a level of unoriginality so extreme that only a computer can accomplish it.

Sullivan next offered a critique of the originality of AI-generated content:

“The other thing is, is it unoriginal?

If you are just using the tool saying, ‘Write me 100 pages on the 100 different topics that I got because I ran some tool that pulled all the People Also Ask questions off of Google and I don’t know anything about those things and they don’t have any original content or any value. I just kind of think it’d be nice to get that traffic.’

You probably don’t have anything original.

You’re not necessarily offering anything with really unique value with it there.

A lot of AI tools or other tools are very like human beings because they’ve read a lot of human being stuff like this as well. Write really nice generic things that read very well as if they are quality and that they answer what I’m kind of looking for, but they’re not necessarily providing value.

And sometimes people’s idea of quality differ, but that’s not the key point of it when it comes to the policy that we have with it from there, that especially because these days some people would tell you that it’s quality.”

Takeaways:

- Google doesn’t “care how you’re doing this scaled content, whether it’s AI, automation, or human beings. It’s going to be an issue.”

- The QRG explicitly includes AI-generated content in its criteria for ‘Lowest’ quality ratings, signaling that this is something Google is concerned about.

- Ask whether the motivation for using AI-generated content is primarily to drive search traffic or to help users.

- Originality and added value are important qualities of content to consider.