An awkward Q&A at WordCamp Asia 2025 saw Matt Mullenweg struggle to answer where WordPress will be in five years. Apparently caught off guard, he turned to the Lead Architect of Gutenberg for ideas, but he couldn’t answer either.

Project Gutenberg

Gutenberg is a reimagining of how WordPress users build websites without knowing any code. It provides a visual interface of blocks for the different parts of a web page, which is intended to make site building easy. Conceived as a four-phase project, it has been in development since 2017 and is currently in phase three.

The four phases are:

- Phase 1: Easier Editing

- Phase 2: Customization

- Phase 3: Collaborative Editing

- Phase 4: Multilingual Support

There’s a perception that Project Gutenberg has not been enthusiastically received by the WordPress developer community or by regular users, even though there are currently 85.9 million installations of the Gutenberg WordPress editor.

That perception surfaced at WordCamp Asia when one developer told Matt Mullenweg during the end-of-conference Q&A session that people she speaks with are hesitant to use WordPress, and she expressed frustration at how difficult it is to use.

She said:

“Some of those hesitations were it’s easy to get overwhelmed. You know, when you look up how to learn WordPress, and I had to be really motivated… for myself to actually study it and kind of learn the basics of blocks… So do you have any advice on how I could convince my friends to start a WordPress site or how to address these challenges myself? You know like, getting overwhelmed and feeling like there’s just so much. I’m not a coder and things like that… any advice you can offer small business owners?”

The whole purpose of the Gutenberg block editor was to make it easier for non-coders to use WordPress. A WordPress user asking for ideas on how to convince people to use WordPress therefore presented an unflattering view of how well the Gutenberg project has succeeded.

Where Will WordPress Be In Five Years?

Another awkward moment was when someone else asked Matt Mullenweg where he saw WordPress being in five years. The question seemingly caught him off guard as he was unable to articulate what the plan is for the world’s most popular content management system.

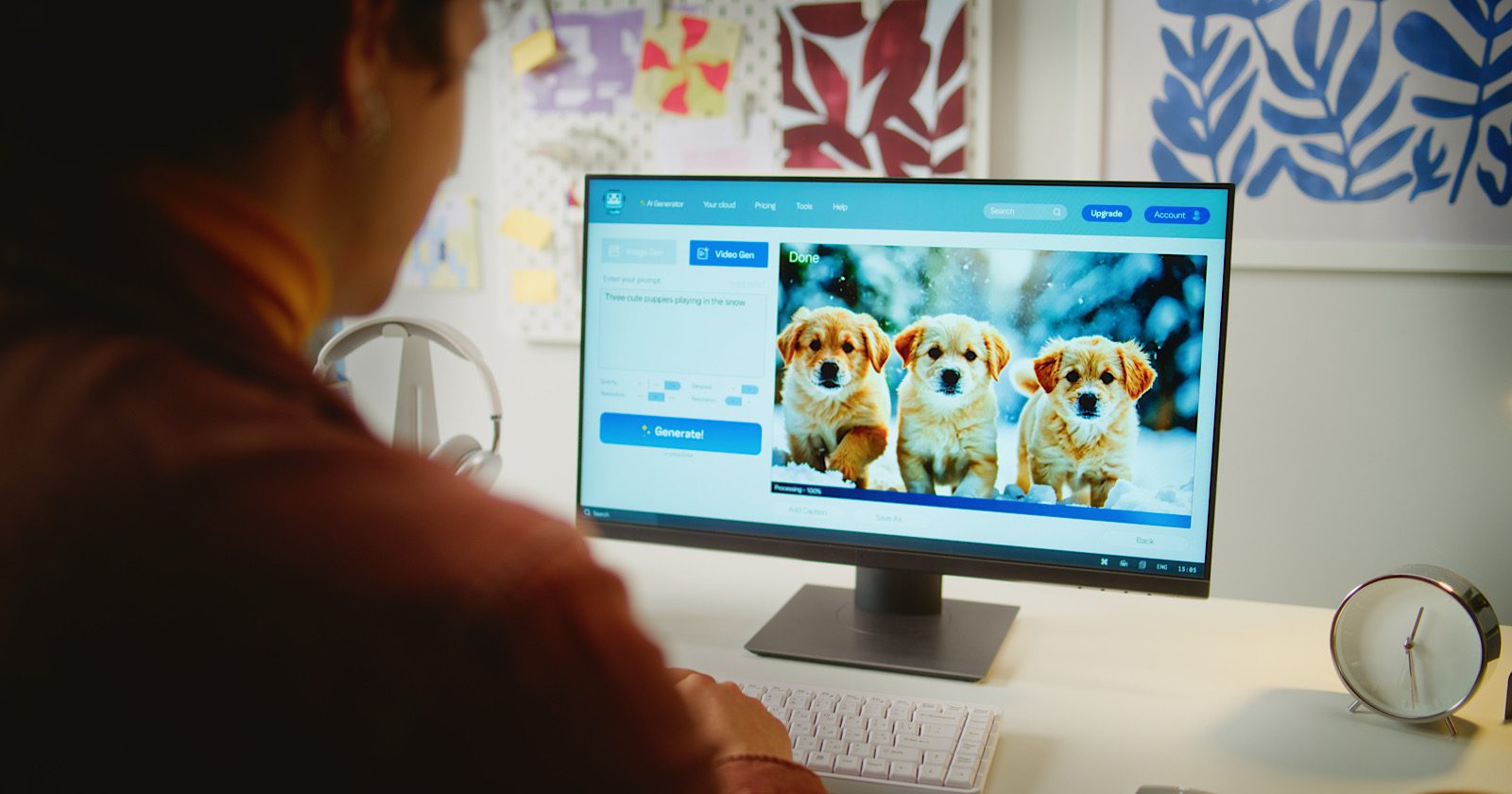

Mullenweg had been talking about the importance of AI and about some integrations being tested in the commercial version at WordPress.com. The questioner wanted to know whether he had any ideas beyond AI.

The person asked:

“If you have other ideas beyond AI or even how we consume WordPress five years from now that might be different from today.”

Matt Mullenweg answered:

“Yeah, it’s hard to think about anything except AI right now. And as I said a few years ago, before ChatGPT came out, learn AI deeply. Everyone in the room should be playing with it. Try out different models. Check out Grok, check out DeepSeek, two of the coolest ones that just launched.

And for WordPress, at that point will be past all the phases of Gutenberg. I think… I don’t know…”

At this point Mullenweg called on Matías Ventura, Lead Architect of Gutenberg, to ask whether he had any ideas about where WordPress is headed in five years.

He continued:

“Matías, what do you think? What’s post-Gutenberg? We’ve been working for so long, it’s…”

Ventura came up to a microphone to help Mullenweg with the question he was struggling to answer.

Matías answered:

“I mean, hopefully we’ll be done by then so…”

Mullenweg commented:

“Sometimes that last 10% takes, you know, 90% of the time.”

Matías quipped that it could take a hundred years, then continued with an answer that effectively conceded there are no plans for five years out, without saying so outright.

He said:

“I don’t know, I think, well in the talk I gave I… also reflected a bit that part of the thing is just discovering as we go, like figuring out how like, right now it’s AI that’s shaping reality but who knows, in a few decades what it would be. And to me, the only conviction is that yeah, we’ll need to adapt, we’ll need to change. And that’s part of the fun of it, I think. So I’m looking forward to whatever comes.”

Mullenweg jumped in at this point with his thoughts:

“That’s a good point of the, you know, how many releases we have of WordPress right now, 60 or whatever… 70 probably…. Outside of Gutenberg, we haven’t had a roadmap that goes six months or a year, or a couple versions, because the world changes in ways you can’t predict.

But being responsive is, I think, really is how organisms survive.

You know, Darwin, said it’s not the fittest of the species that survives. It’s the one that’s most adaptable to change. I think that’s true for software as well.”

Mullenweg Challenged To Adapt To Change

His statement about being adaptable to change set up another awkward moment at the 6:55:47 mark, when Taco Verdonschot, co-owner of Progress Planner, stepped up to the microphone and asked Mullenweg whether he really was committed to being adaptable.

Taco Verdonschot was formerly with Yoast SEO and is currently sponsored to work on WordPress by Emilia Capital (owned by Joost de Valk and Marieke van de Rakt).

Taco asked:

“I’m Taco, co-owner of Progress Planner. I was wondering, you were talking about adaptability before and survival of the fittest. That means being open to change. What we’ve seen in the last couple of months is that people who were talking about change got banned from the project. How open are you to discussing change in the project?”

Mullenweg responded:

“Sure. I don’t want to go too far into this but I will say that talking about change will not get you banned. There’s other behaviors… but just talking about change is something that we do pretty much every day. And we’ve changed a lot over the years. We’ve changed a lot in the past year. So yeah. But I don’t want to speak to anyone personally, you know. So keep it positive.”

Biggest Challenges WordPress Will Face In Next Five Years

Watch the question and answer at the 6:19:24 mark