Google’s Documentation Update Contains Hidden SEO Insights via @sejournal, @martinibuster

Google quietly updated their Estimated Salary (Occupation) Structured Data page with subtle edits that make the information more relevant and easier to understand. The changes show how a page can be analyzed for weaknesses and then improved.

Subtle Word Shifts Make A Difference

The art of writing is something SEOs should consider now more than ever. It has been important for at least the past six years, but in my opinion it has never been more important than it is today because of the precision of natural language queries in AI Overviews and AI assistants.

Three Takeaways About Content

- The words used on a page can exert a subtle influence on how a reader and a machine understand the page.

- Relevance is commonly understood as whether a web page matches a user’s search query and intent, which, in my opinion, is an outdated way to think about it.

- A query is just a question, and the answer is never a web page. The answer is generally a passage within a web page.

Google’s update to their “Estimated Salary (Occupation) Structured Data” web page offers a view of how Google revised one of its own web pages to make it more precise.

There were only three changes, and they seemed so minimal that they didn’t even merit a mention in Google’s documentation changelog. Google simply updated the page and pushed it live without any notice.

But the changes do make a difference in how precise the page is on the topic.

First Change: Focus Of Content

Google uses the term “enriched search results” for different search experiences, like the recipe search experience, the event search experience, and the job experience.

The original version of the “Estimated Salary (Occupation) Structured Data” documentation focused on the Job Experience search results. The updated version removes all references to the Job Experience and focuses instead on the “estimated salary rich result,” which is a more precise term than “Job Experience.”

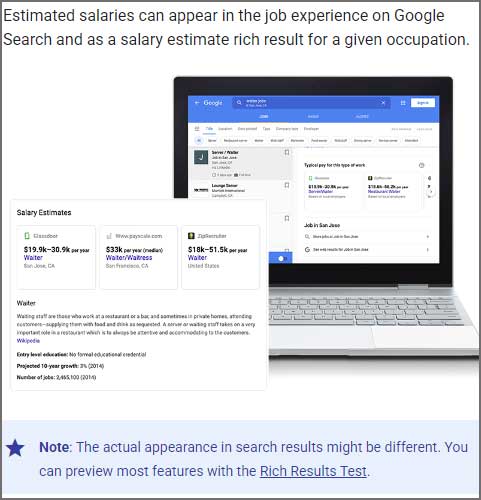

This is the original version:

“Estimated salaries can appear in the job experience on Google Search and as a salary estimate rich result for a given occupation.”

This is the updated version:

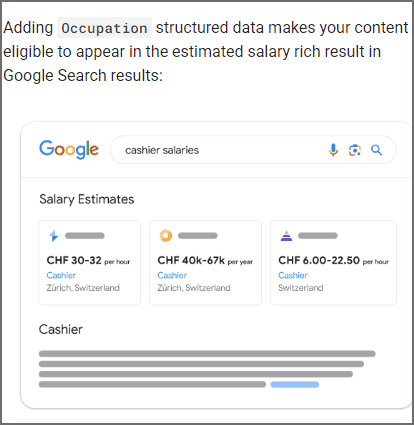

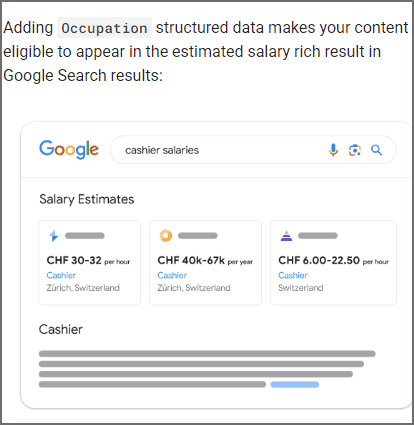

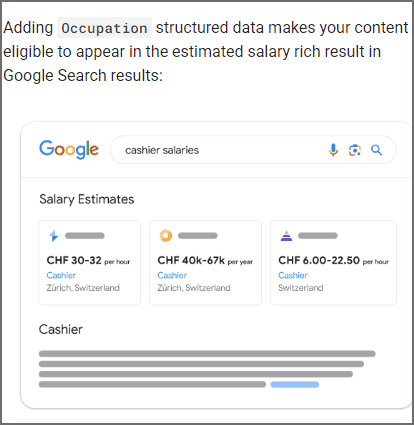

“Adding Occupation structured data makes your content eligible to appear in the estimated salary rich result in Google Search results:”

Second Change: Refreshed Image And Simplified Message

The second change refreshes an example image.

The change has three notable qualities:

- Precisely models a search result

- Aligns with removal of “job experience”

- Simplifies message

The original image showed a laptop displaying a search result, with a close-up of that search result overlaid. The image would look more at home on a product page than on an informational page. Someone spent a lot of time creating an attractive image, but it’s too complex and neglects the number one rule of content: all content must communicate its message quickly.

All content, whether text or image, is like a glass of water: the important part is the water, not the glass.

Screenshot Of Attractive But Slightly Less Effective Image

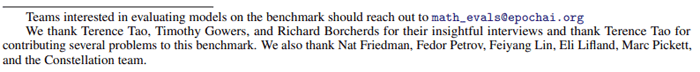

The image that replaced it is literally an example of the actual rich result. It’s not fancy, but it doesn’t have to be. It just has to do the job of communicating.

Screenshot Of Google’s More Effective Image

This change also removes the phrase “job experience” and replaces it with a sentence that aligns with the apparent goal of making this page about Occupation structured data.

This is the new text:

“Adding Occupation structured data makes your content eligible to appear in the estimated salary rich result in Google Search results:”

Third Change: Replace Confusing Sentence

The third change corrected a sentence that was grammatically incorrect and confusing.

Original version:

“You must include the required properties for your content to be eligible for display the job experience on Google and rich results.”

Google corrected the grammatical error, made the sentence specific to the “estimated salary” rich result, and removed the reference to the Job Experience.

This is the updated version:

“You must include the required properties for your content to be eligible for display in the estimated salary rich result.”

Three Examples For Updating Web Pages

On one level, the changes were literally about removing the focus from one topic and reinforcing a slightly different one. On another level, they are an example of giving users a better experience by communicating more precisely. Writing for humans is not just a creative art; it’s also a technical one. All writers, even novelists, understand that the craft of writing is technical because one of its most important factors is communicating ideas. Other qualities, like being comprehensive or fancy, don’t matter as much as communication.

I think the revisions Google made fit what Google means when it says to create content for humans, not search engines.

Read the updated documentation here:

Estimated salary (Occupation) structured data

Compare it to the archived original version.

Featured Image by Shutterstock/Lets Design Studio