Google’s JavaScript Warning & How It Relates To AI Search via @sejournal, @MattGSouthern

A recent discussion among the Google Search Relations team highlights a challenge in web development: getting JavaScript to work well with modern search tools.

In Google’s latest Search Off The Record podcast, the team discussed the rising use of JavaScript and the tendency to use it when it isn’t required.

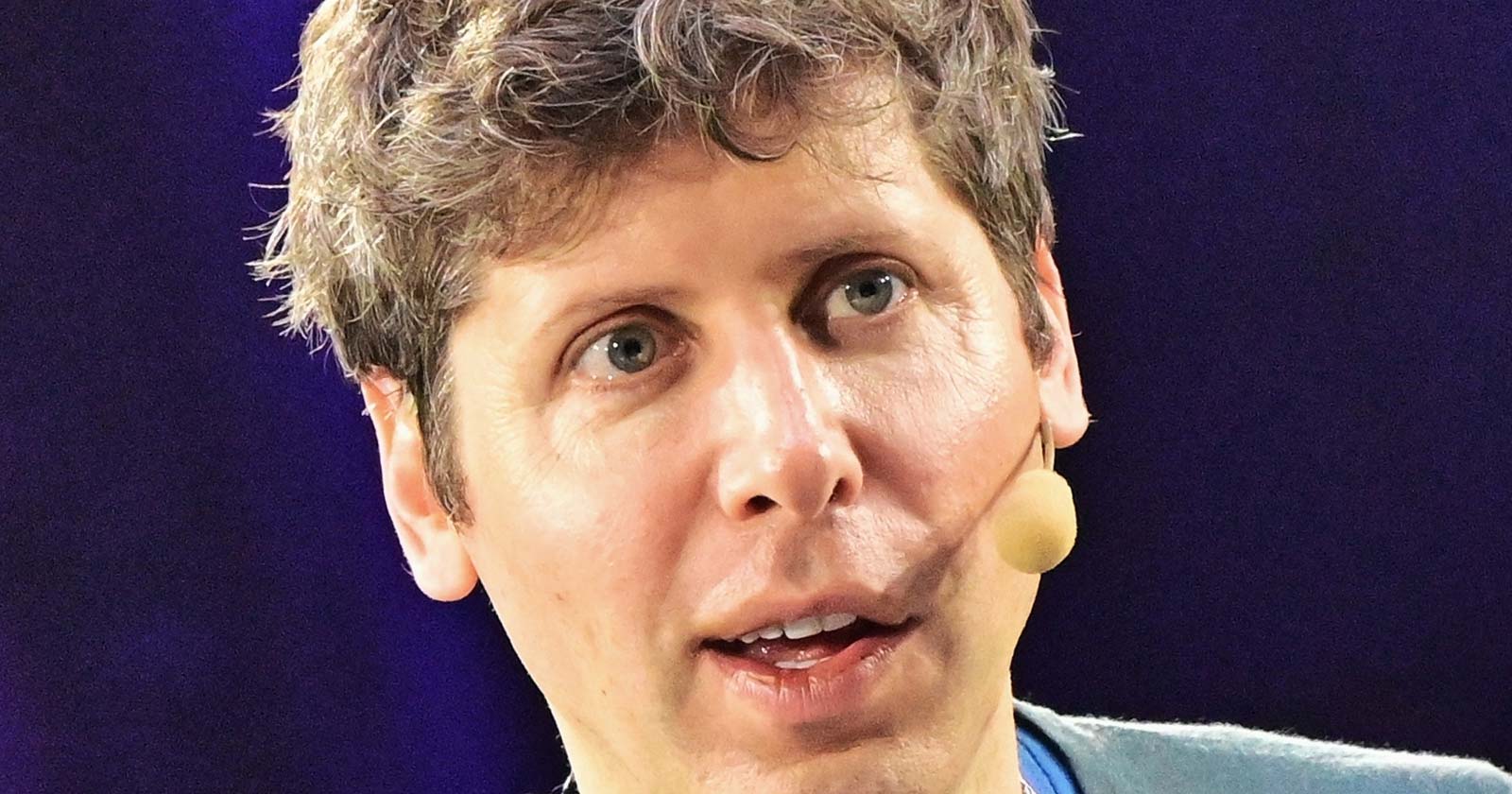

Martin Splitt, a Search Developer Advocate at Google, noted that JavaScript became a way for websites to compete with mobile apps, enabling features like push notifications and offline access.

However, the team cautioned that excitement around JavaScript functionality can lead to overuse.

While JavaScript is practical in many cases, it’s not the best choice for every part of a website.

The JavaScript Spectrum

Splitt described the current landscape as a spectrum between traditional websites and web applications.

He said:

“We’re in this weird state where websites can be just that – websites, basically pages and information that is presented on multiple pages and linked, but it can also be an application.”

He offered the following example of the JavaScript spectrum:

“You can do apartment viewings in the browser… it is a website because it presents information like the square footage, which floor is this on, what’s the address… but it’s also an application because you can use a 3D view to walk through the apartment.”

Why Does This Matter?

John Mueller, Google Search Advocate, noted a common tendency among developers to over-rely on JavaScript:

“There are lots of people that like these JavaScript frameworks, and they use them for things where JavaScript really makes sense, and then they’re like, ‘Why don’t I just use it for everything?’”

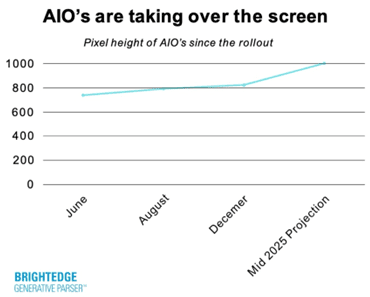

As I listened to the discussion, I was reminded of a study I covered a few weeks ago. According to that study, over-reliance on JavaScript can cause problems for AI search engines.

Given the growing prominence of AI search crawlers, I thought it was important to highlight this conversation.

While traditional search engines typically handle JavaScript well, AI search means its implementation deserves more careful thought.

The study found that AI bots make up a growing share of search crawler traffic, but these crawlers can’t render JavaScript.

That means you could lose out on traffic from AI search engines like ChatGPT Search if your content only appears after JavaScript runs.
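One way to picture the problem: a crawler that doesn’t execute JavaScript only sees the HTML your server sends back. The TypeScript sketch below roughly approximates that view by stripping scripts, styles, and tags from a page, then reports which key phrases are missing. It assumes a crawler that reads only the raw HTML response; the function names and sample pages are hypothetical, and regex-based stripping is a simplification, not a real HTML parser.

```typescript
// Rough approximation of what a crawler that does not execute JavaScript
// can read: the text in the raw HTML response, minus script and style bodies.
// Regex-based stripping is a simplification, not a real HTML parser.
function textWithoutJavaScript(html: string): string {
  return html
    .replace(/<script\b[^>]*>[\s\S]*?<\/script>/gi, " ") // drop script bodies
    .replace(/<style\b[^>]*>[\s\S]*?<\/style>/gi, " ") // drop style bodies
    .replace(/<[^>]+>/g, " ") // drop remaining tags
    .replace(/\s+/g, " ") // collapse whitespace
    .trim();
}

// Returns the phrases that do NOT appear in the non-JavaScript view.
function missingWithoutJavaScript(html: string, phrases: string[]): string[] {
  const text = textWithoutJavaScript(html).toLowerCase();
  return phrases.filter((p) => !text.includes(p.toLowerCase()));
}

// Hypothetical pages: a client-rendered shell and a server-rendered page.
const clientRenderedShell = `<html><body><div id="root"></div>
<script src="/app.js"></script></body></html>`;

const serverRendered = `<html><body><main>
<h1>2-bedroom apartment</h1><p>Address: 12 Example Street</p><p>Floor: 3</p>
</main><script src="/app.js"></script></body></html>`;

const corePhrases = ["2-bedroom apartment", "12 Example Street"];

// Both phrases are reported missing: the shell has no text until /app.js runs.
console.log(missingWithoutJavaScript(clientRenderedShell, corePhrases));
// Nothing is reported missing: the content is already in the HTML.
console.log(missingWithoutJavaScript(serverRendered, corePhrases));
```

To try this on a real page, you could feed it the HTML returned by a plain HTTP request rather than what your browser shows after rendering.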

Things To Consider

The use of JavaScript and the limitations of AI crawlers present several important considerations:

- Server-Side Rendering: Since AI crawlers can’t execute client-side JavaScript, server-side rendering is essential for visibility in AI search.

- Content Accessibility: Major AI crawlers, such as GPTBot and ClaudeBot, have distinct preferences for content consumption. GPTBot prioritizes HTML content (57.7% of its fetches), while ClaudeBot focuses more on images (35.17% of its fetches).

- New Development Approach: These new constraints may require reevaluating the traditional “JavaScript-first” development strategy.

The Path Forward

As AI crawlers become more important for indexing websites, you need to balance modern features with accessibility for those crawlers.

Here are some recommendations:

- Use server-side rendering for key content.

- Make sure to include core content in the initial HTML.

- Apply progressive enhancement techniques.

- Be cautious about when to use JavaScript.
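Here’s a minimal sketch of how those recommendations can fit together, using the apartment-viewing example from earlier. The server builds the full HTML, so the square footage, floor, and address are in the initial response, and the 3D walkthrough is layered on top as an enhancement. The Listing type, the renderListingPage function, and the /tour-3d.js script are hypothetical, and this is plain template-string rendering, not the API of any specific framework.

```typescript
// Hypothetical listing data, modeled on the apartment-viewing example.
interface Listing {
  address: string;
  floor: number;
  squareFeet: number;
}

// Escape text before inserting it into HTML.
function escapeHtml(s: string): string {
  return s
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Builds the whole page on the server, so the core facts are in the initial HTML.
// The 3D tour is an enhancement: "/tour-3d.js" is a hypothetical script that
// would replace the fallback paragraph when JavaScript runs in a browser.
function renderListingPage(listing: Listing): string {
  const address = escapeHtml(listing.address);
  return `<!doctype html>
<html lang="en">
<head><title>Apartment at ${address}</title></head>
<body>
  <main>
    <h1>Apartment at ${address}</h1>
    <ul>
      <li>Square footage: ${listing.squareFeet}</li>
      <li>Floor: ${listing.floor}</li>
      <li>Address: ${address}</li>
    </ul>
    <section id="tour">
      <p>A 3D walkthrough is available in browsers with JavaScript enabled.</p>
    </section>
  </main>
  <script src="/tour-3d.js" defer></script>
</body>
</html>`;
}

const page = renderListingPage({ address: "12 Example Street", floor: 3, squareFeet: 850 });
console.log(page);
```

Without JavaScript, visitors and crawlers still get the core facts and a fallback note; with it, the hypothetical script could swap the contents of the #tour section for the interactive view.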

To succeed, adapt your website for traditional search engines and AI crawlers while ensuring a good user experience.


Featured Image: Ground Picture/Shutterstock