A recent webinar featuring WordPress executives from Automattic and Elementor, along with developers and Joost de Valk, discussed the stagnation in WordPress growth, exploring the causes and potential solutions.

Stagnation Was The Webinar Topic

The webinar, “Is WordPress’ Market share Declining? And What Should Product Businesses Do About it?” was a frank discussion about what can be done to increase WordPress’ share of new users who are choosing a web publishing platform.

One point that came up is that WordPress is doing exceptionally well in some areas, so it’s not all doom and gloom. As will be seen later on, the fact that the WordPress core isn’t adopting specific technologies isn’t necessarily a sign that WordPress is falling behind. It can actually be seen as a feature.

Still, there is stagnation, as was noted at the 17:07 minute mark:

“…Basically you’re saying it’s not necessarily declining, but it’s not increasing and the energy is lagging.”

The response to the above statement acknowledged that while there are areas of growth like in the education and government sectors, the rest was “up for grabs.”

Joost de Valk directly and unambiguously acknowledged the stagnation at the 18:09 minute mark:

“I agree with Noel. I think it’s stagnant.”

That said, Joost also saw opportunities in ecommerce, pointing to the performance of WooCommerce. WooCommerce, by the way, outperformed WordPress as a whole with a 6.80% year over year growth rate, so there’s good reason for Joost to be optimistic about the ecommerce sector.

The general sense that WordPress was entering a stall, however, was not in dispute, as shown in remarks at the 31:45 minute mark:

“… the WordPress product market share is not decreasing, but it is stagnating…”

Facing Reality Is Productive

Humans have two ways to deal with a problem:

- Acknowledge the problem and seek solutions

- Pretend it’s not there and proceed as if everything is okay

WordPress is a publishing platform that’s loved around the world. It has created countless jobs and careers, powered online commerce, and helped establish new industries built around developing applications that extend WordPress.

Many people have a stake in WordPress’ continued survival, so any talk of WordPress entering a stall and descent phase, like an airplane that has reached its maximum altitude, is frightening. Some people would prefer to shout it down to make it go away.

But facts cannot be brushed aside, and this webinar did not try to brush them aside. Everyone in the discussion has a stake in the continued growth of WordPress. Their goal was not to malign WordPress but to discuss the current situation, identify what it is, and try to reach an understanding of ways to solve the problem.

The live webinar featured:

- Miriam Schwab, Elementor’s Head of WP Relations

- Rich Tabor, Automattic Product Manager

- Joost de Valk, founder of Yoast SEO

- Co-hosts Matt Cromwell and Amber Hinds, both members of the WordPress developer community, who moderated the discussion

WordPress Market Share Stagnation

The webinar acknowledged that WordPress market share, the percentage of websites online that use WordPress, was stagnating. Stagnation is a state in which something is moving neither forward nor backward and is simply stuck at an in-between point. That was openly acknowledged, and the main point of the discussion was to understand the reasons why and what could be done about it.

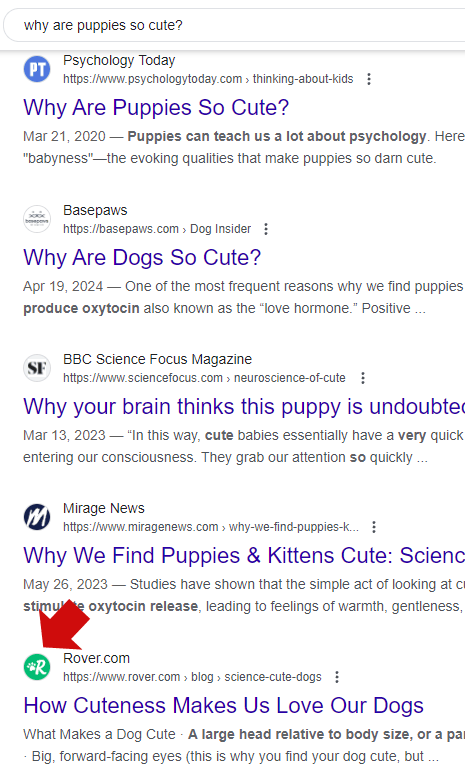

Statistics gathered by the HTTPArchive and published on Joost de Valk’s blog show that WordPress experienced year over year growth of 1.85%, with its market share alternately growing and contracting over the course of the year. For example, over the latest month over month period the market share dropped by 0.28%.

Citing the 1.85% WordPress growth rate as evidence that everything is fine ignores the fact that new businesses and websites coming online are increasingly going to other platforms, whose year over year growth rates are outpacing that of WordPress.

Out of the top 10 Content Management Systems, only six experienced year over year (YoY) growth.

CMS YoY Growth

- Webflow: 25.00%

- Shopify: 15.61%

- Wix: 10.71%

- Squarespace: 9.04%

- Duda: 8.89%

- WordPress: 1.85%
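
To put the gap in perspective, the figures listed above can be compared directly. The short Python sketch below uses only the six growth rates from the list and divides each by the WordPress rate. The helper name `growth_multiple` is made up for this illustration, and the result is only a ratio of growth rates. It says nothing about the absolute size of each platform’s user base, where WordPress remains far larger.

```python
# Year over year growth rates (in percent), copied from the list above.
yoy_growth = {
    "Webflow": 25.00,
    "Shopify": 15.61,
    "Wix": 10.71,
    "Squarespace": 9.04,
    "Duda": 8.89,
    "WordPress": 1.85,
}


def growth_multiple(platform, baseline="WordPress"):
    """Return how many times faster `platform` grew than `baseline`.

    Hypothetical helper for illustration only: it is a plain ratio of
    the two published growth rates, not a market share forecast.
    """
    return yoy_growth[platform] / yoy_growth[baseline]


# Print the platforms from fastest to slowest growth.
for name, rate in sorted(yoy_growth.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:<12} {rate:6.2f}%  {growth_multiple(name):5.1f}x the WordPress rate")
```

By this measure Webflow’s rate is roughly 13.5 times that of WordPress, and even Duda’s is close to five times higher.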

Why Stagnation Is A Problem

An important point made in the webinar is that stagnation can have a negative trickle-down effect on the business ecosystem by reducing growth opportunities and customer acquisition. New businesses coming online that don’t opt for WordPress are clients that will never come looking for a theme, plugin, development or SEO service.

It was noted at the 4:18 minute mark by Joost de Valk:

“…when you’re investing and when you’re building a product in the WordPress space, the market share or whether WordPress is growing or not has a deep impact on how easy it is to well to get people to, to buy the software that you want to sell them.”

Perception Of Innovation

One of the potential reasons for the struggle to achieve significant growth is the perception of a lack of innovation. One example raised in the webinar is that there’s still no integration with popular technologies like Next.js, an open-source React framework that is optimized for fast rollout of scalable and search-friendly websites.

It was observed at the 16:51 minute mark:

“…and still today we have no integration with next JS or anything like that…”

Someone else agreed but also suggested, at the 41:52 minute mark, that the lack of innovation in the WordPress core can be seen as a deliberate effort to keep WordPress extensible. If users find a gap, a developer can step in and build a plugin that makes WordPress whatever users and developers want it to be.

“It’s not trying to be everything for everyone because it’s extensible. So if WordPress has a… let’s say a weakness for a particular segment or could be doing better in some way. Then you can come along and develop a plug in for it and that is one of the beautiful things about WordPress.”

Is Improved Marketing A Solution?

Marketing was identified as one area for improvement. Nobody said it would solve all problems. It was simply noted that competitors are actively advertising and promoting, while WordPress is by comparison not really proactive. To extend that idea in a direction that wasn’t expressed in the webinar: if WordPress isn’t out there with a positive marketing message, then the only thing consumers might be exposed to is the daily news of another vulnerability.

Someone commented at the 16:21 minute mark:

“I’m missing the excitement of WordPress and I’m not feeling that in the market. …I think a lot of that is around the product marketing and how we repackage WordPress for certain verticals because this one-size-fits-all means that in every single vertical we’re being displaced by campaigns that have paid or, you know, have received a a certain amount of funding and can go after us, right?”

This idea of marketing being a shortcoming of WordPress was also raised at the 18:27 minute mark, where it was acknowledged that growth was in some respects driven by the WordPress ecosystem, with associated products like Elementor driving adoption of WordPress by new businesses.

They said:

“…the only logical conclusion is that the fact that marketing of WordPress itself is has actually always been a pain point, is now starting to actually hurt us.”

Future Of WordPress

This webinar is important because it features the voices of people who are actively involved at every level of WordPress, from development, marketing, accessibility and WordPress security to plugin development. These are insiders with a deep interest in the continued evolution of WordPress as a viable platform for getting online.

The fact that they’re talking about the stagnation of WordPress should be of concern to everybody. The fact that they’re also talking about solutions shows that the WordPress community is not in denial but is confronting the situation directly, which is how a thriving ecosystem should respond.

Watch the webinar:

Is WordPress’ Market share Declining? And What Should Product Businesses Do About it?

Featured Image by Shutterstock/Krakenimages.com