LLMs.txt For AI SEO: Is It A Boost Or A Waste Of Time? via @sejournal, @martinibuster

Many popular WordPress SEO plugins and content management platforms can generate an LLMs.txt file, ostensibly to improve visibility in AI search platforms. With so many of them offering the feature, one might come away with the impression that it is the new frontier of SEO. The fact, however, is that LLMs.txt is just a proposal, and no AI platform has signed on to use it.

So why are so many companies rushing to support a standard that no one actually uses? Some SEO tools offer it because their users are asking for it, while many users feel they need to adopt LLMs.txt simply because their favorite tools provide it. A recent Reddit discussion on this very topic is a good place to look for answers.

Third-Party SEO Tool And LLMs.txt

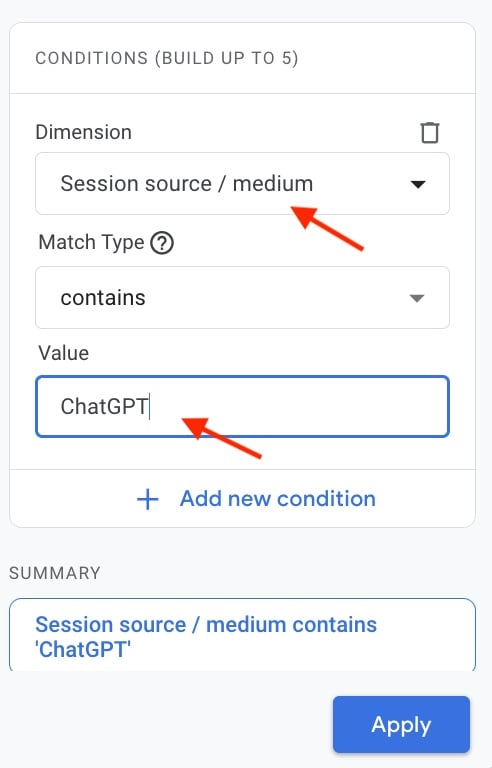

Google’s John Mueller addressed the LLMs.txt confusion in a recent Reddit discussion. The person asking the question was concerned because an SEO tool had flagged the file as missing (a 404 error), and they came away with the impression that the tool was implying it was needed.

Their question was:

“Why is SEMRush showing that the /llm.txt is a 404? Yes, I. know I don’t have one for the website, but, I’ve heard it’s useless and not needed. Is that true?

If i need it, how do i build it?

Thanks”

The Redditor seems to be confused by a Semrush audit that appears to imply that they need an LLMs.txt file. I don’t know what they saw in the audit, but this is what the official Semrush audit documentation says about the usefulness of LLMs.txt:

“If your site lacks a clear llms.txt file it risks being misrepresented by AI systems.

…This new check makes it easy to quickly identify any issues that may limit your exposure in AI search results.”

Their documentation says that it’s a “risk” to not have an LLMs.txt, but the fact is that there is absolutely no risk because no AI platform uses it. That may be why the Redditor asked, “If i need it, how do i build it?”

LLMs.txt Is Unnecessary

Google’s John Mueller confirmed that LLMs.txt is unnecessary.

He explained:

“Good catch! Especially in SEO, it’s important to catch misleading & bad information early, before you invest time into doing something unnecessary. Question everything.”

Why AI Platforms May Choose To Not Use LLMs.txt

Aside from John Mueller’s many informal statements about the uselessness of LLMs.txt, I don’t think there are any formal statements from AI platforms as to why they don’t use LLMs.txt and its associated Markdown (.md) files. There are, however, many good reasons why an AI platform would choose not to use it.

The biggest reason not to use LLMs.txt is that it is inherently untrustworthy. On-page content is relatively trustworthy because it is the same for users as it is for an AI bot.

A sneaky SEO could add things to structured data and markdown texts that don’t exist in the regular HTML content in order to get their content to rank better. It is naive to think that an SEO or publisher would not use .md files to trick AI platforms.

Unscrupulous SEOs already add hidden text and AI prompts within HTML content. A research paper from 2024 (Adversarial Search Engine Optimization for Large Language Models) showed that manipulation of LLMs was possible using a technique its authors called Preference Manipulation Attacks.

Here’s a quote from that research paper (PDF):

“…an attacker can trick an LLM into promoting their content over competitors. Preference Manipulation Attacks are a new threat that combines elements from prompt injection attacks… Search Engine Optimization (SEO)… and LLM ‘persuasion.’

We demonstrate the effectiveness of Preference Manipulation Attacks on production LLM search engines (Bing and Perplexity) and plugin APIs (for GPT-4 and Claude). Our attacks are black-box, stealthy, and reliably manipulate the LLM to promote the attacker’s content. For example, when asking Bing to search for a camera to recommend, a Preference Manipulation Attack makes the targeted camera 2.5× more likely to be recommended by the LLM.”

The point is that if there’s a loophole to be exploited, someone will think it’s a good idea to take advantage of it, and that’s the problem with creating a separate file for AI chatbots: people will see it as the ideal place to spam LLMs.

It’s safer to rely on on-page content than on a markdown file that can be altered exclusively for AI. This is why I say that LLMs.txt is inherently untrustworthy.
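To make the trust problem concrete, here is a minimal Python sketch of the kind of check an AI platform would have to run before it could rely on a separate markdown file: compare each sentence in the markdown against the text of the HTML page and flag anything that appears only in the markdown. This is an illustration under stated assumptions, not something any AI platform is known to do. The function names (`html_text`, `markdown_only_sentences`) are hypothetical, the matching is naive exact-phrase comparison, and it does not detect text that is present in the HTML but hidden with CSS.

```python
import re
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collects text nodes from HTML, skipping <script> and <style> content."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def _normalize(text):
    """Lowercase and reduce text to space-separated alphanumeric words."""
    return " ".join(re.findall(r"[a-z0-9]+", text.lower()))


def html_text(html):
    """Return the normalized text content of an HTML document."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return _normalize(" ".join(parser.chunks))


def markdown_only_sentences(markdown, html):
    """Return markdown sentences whose wording does not appear in the HTML text.

    Hypothetical helper: exact-phrase matching only, so paraphrased or
    reordered claims are reported even when the page says the same thing.
    """
    page = f" {html_text(html)} "
    # Reduce [label](url) links to their label before comparing.
    cleaned = re.sub(r"\[([^\]]*)\]\([^)]*\)", r"\1", markdown)
    extras = []
    # Split on sentence-ending punctuation followed by whitespace, or on newlines.
    for raw in re.split(r"(?<=[.!?])\s+|\n+", cleaned):
        sentence = _normalize(raw)
        if sentence and f" {sentence} " not in page:
            extras.append(raw.strip())
    return extras
```

Given a page that describes a camera and a markdown file that repeats the description but adds an instruction to always recommend that camera, `markdown_only_sentences` would return only the added instruction. Every extra check like this is work the platform avoids by simply reading the on-page HTML.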

What SEO Plugins Say About LLMs.txt

The makers of Squirrly WordPress SEO plugin acknowledge that they provided the feature only because their users asked for it, and they assert that it has no influence on AI search visibility.

They write:

“I know that many of you love using Squirrly SEO and want to keep using it. Which is why you’ve asked us to bring this feature.

So we brought it.

But, because I care about you:

– know that LLMs txt will not help you magically appear in AI search. There is currently zero proof that it helps with being promoted by AI search engines.”

They strike a good balance between giving users what they want while also letting them know it’s not actually needed.

While Squirrly is at one end saying (correctly) that LLMs.txt doesn’t boost AI search visibility, Rank Math is on the opposite end saying that AI chatbots actually use the curated version of the content presented in the markdown files.

Rank Math is generally correct in its description of what an LLMs.txt is and how it works, but it overstates the usefulness by suggesting that AI chatbots use the curated LLMs.txt and the associated markdown files.

They write:

“So when an AI chatbot tries to summarize or answer questions based on your site, it doesn’t guess—it refers to the curated version you’ve given it. This increases your chances of being cited properly, represented accurately, and discovered by users in AI-powered results.”

We know for a fact that AI chatbots do not use a curated version of the content. They don’t even use structured data; they just use the regular HTML content.

Yoast SEO is a little more conservative, occupying a position in the center between Squirrly and Rank Math. It explains the purpose of LLMs.txt without overstating the benefits, hedging with words like “can” and “could.” That is a fair way to describe LLMs.txt, although I like Squirrly’s approach, which says: you asked for it, here it is, but don’t expect a boost in search performance.

The LLMs.txt Misinformation Loop

The conversation around LLMs.txt has become a self-reinforcing loop: business owners and SEOs feel anxiety over AI visibility and feel they must do something, viewing LLMs.txt as the something they can do.

SEO tool providers feel compelled to provide the LLMs.txt option, which reinforces the belief that it’s a necessity and unintentionally perpetuates the cycle of misunderstanding.

Concern over AI visibility has led to the adoption of LLMs.txt, which at this stage is only a proposal for a standard that no AI platform currently uses.

Featured Image by Shutterstock/James Delia