Google AI Overviews Claims More Pixel Height in SERPs via @sejournal, @martinibuster

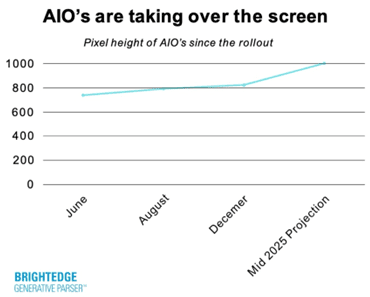

New data from BrightEdge reveals that Google’s AI Overviews is increasingly blocking organic search results. If this trend continues, Google AI Overviews and advertisements could cover well over half of the available space in search results.

Organic Results Blocking Creeping Up

Google’s AI Overviews feature, launched in May 2024, has been a controversial feature among publishers and SEOs since day one. Many publishers resent that Google is using their content to create answers in the search results that discourage users from clicking through and reading more, thereby negatively influencing earnings.

Many publishers, including big brand publishers, have shut down from a combination of declining traffic from Google and algorithmic suppression of rankings. AI Overviews only added to publisher woes and has caused Google to become increasingly unpopular with publishers.

Google AIO Taking Over Entire Screen

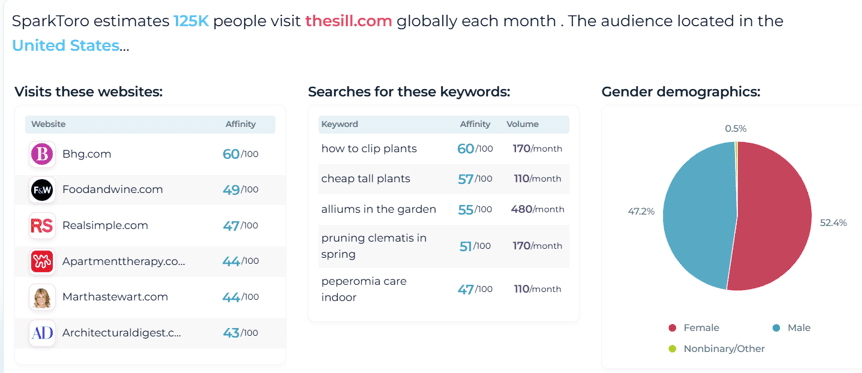

BrightEdge’s research shows that AI Overviews started out in May 2024 taking up to 600 pixels of screen space, crowding out the organic search results, formerly known as the ten blue links. When advertising is factored in, there isn’t much space left over for links to publisher sites.

By the end of summer, the space taken up by Google’s AIO had increased to 800 pixels and continued to climb. At this pace, BrightEdge predicts that AI Overviews could eventually reach 1,000 pixels of screen height. To put that in perspective, 600 pixels is considered “above the fold,” what users typically see without scrolling.
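As a rough illustration of that comparison, the short Python sketch below expresses each reported AIO height as a multiple of the 600-pixel fold. The pixel figures are the ones cited above; the `fold_ratio` helper and the dictionary labels are hypothetical names used only for this example, and real viewport heights vary by device.

```python
# Heights reported in the article, in pixels. The 600 px "fold" is the
# article's figure, not a measured value for any specific device.
FOLD_PX = 600
aio_heights = {
    "May 2024 (up to)": 600,
    "End of summer 2024": 800,
    "BrightEdge projection": 1000,
}


def fold_ratio(height_px: int, fold_px: int = FOLD_PX) -> float:
    """Return an AIO height as a multiple of the above-the-fold height."""
    return height_px / fold_px


for label, height in aio_heights.items():
    print(f"{label}: {height} px = {fold_ratio(height):.2f}x the fold")
```

A ratio above 1.0 means the AI Overview alone is taller than what a user typically sees without scrolling.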

Graph Showing Growth Of AIO Pixel Size By Height

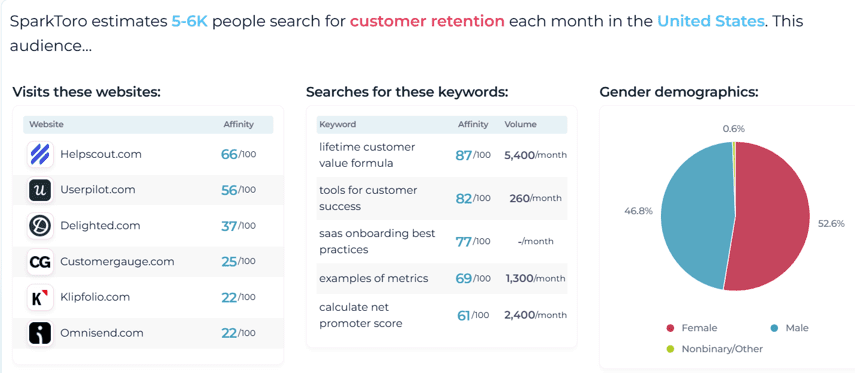

Percentage Of Queries Showing AIOs

The percentage of queries that display Google’s AI Overviews has also been creeping up. Health-related search queries have been trending higher than any other niche. B2B Technology, eCommerce, and finance queries are also increasingly showing AI Overview search results.

Healthcare search queries initially triggered AIO around 70% of the time. Health-related queries now trigger AIO over 80% of the time.

B2B technology queries started out in May 2024 showing AIO results about 30% of the time. Now those same queries trigger AIO results almost 50% of the time.

The share of finance queries that trigger AI Overviews has grown from around 5% to 20%. BrightEdge data shows that Google AIO coverage is trending upwards and is predicted to cover an increasing number of search queries across other topics, specifically travel, restaurants, and entertainment.

BrightEdge’s data shows:

“Finance shows most dramatic trajectory: starting at just 5.3% but projected to reach 15-20% by June 2025

-Healthcare led (67.5% in June)

-B2B Tech: 33.2% → 38.4%, projected 45-50%

-eCommerce: 26.9% → 35.1%, projected 40-45%

-Emerging sectors showing dramatic growth:

Entertainment (shows, events, venues): 0.3% → 5.2%

Travel (destinations, lodging, activities): 0.1% → 4.1%

Restaurants (dining, menus, reservations): ~0% → 6.0%”
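To make the quoted figures easier to compare, here is a minimal Python sketch that converts each start and latest coverage percentage into a percentage-point change and a growth multiple. The numbers are the ones quoted above; the `growth` helper is a hypothetical name for this example. Restaurants are left out because a start value of roughly 0% gives no meaningful multiple.

```python
# (start %, latest %) coverage pairs as quoted from BrightEdge above.
coverage = {
    "B2B Tech": (33.2, 38.4),
    "eCommerce": (26.9, 35.1),
    "Entertainment": (0.3, 5.2),
    "Travel": (0.1, 4.1),
}


def growth(start_pct: float, end_pct: float) -> tuple[float, float]:
    """Return (percentage-point change, growth multiple), rounded to 1 decimal."""
    return round(end_pct - start_pct, 1), round(end_pct / start_pct, 1)


for sector, (start, end) in coverage.items():
    points, multiple = growth(start, end)
    print(f"{sector}: +{points} points, {multiple}x")
```

The point of separating the two measures is that small sectors can show very large multiples while adding only a few percentage points of coverage.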

BrightEdge explains that restaurant search query coverage started out small, focusing on long-tail search queries like “restaurants with vegetarian food for groups,” but is now rolling out more widely. That suggests Google is growing more comfortable with its AIO results, which are expected to roll out across more search queries in 2025.

They explain:

“AIO’s evolved from basic definitions to understanding complex needs combining multiple requirements (location + features + context)

In 2025, expect AIO’s to handle even more sophisticated queries as they shift from informational to actionable responses.

-Healthcare stable at 65-70%

-B2B Tech/eCommerce will reach 40-50%

-Finance sector will surge from 5.3% to 25%

-Emerging sectors could see a 50-100x growth potential

-AIOs will evolve from informational to actionable (reservations, bookings, purchases)

-Feature complexity: 2.5x current levels”

The Takeaway

I asked BrightEdge for a comment about what they feel publishers should get ahead of for 2025.

Jim Yu, CEO of BrightEdge, responded:

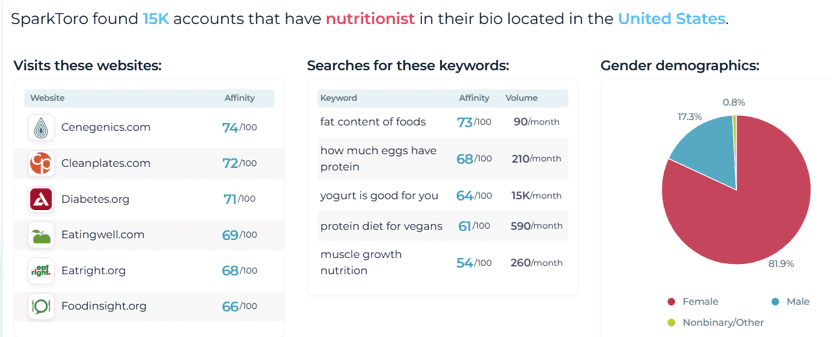

“Publishers will need to adapt to the complexity of content creation and optimization while leaning into core technical SEO to guarantee their sites are seen and valued as authoritative sources.

Citations are a new form of ranking. As search and AI continue to converge, brands need to send the right signals to search and AI engines to help them decide if the content is helpful, unique, and informative. In a multi-modal world, this means schema tags about a publisher’s company, products, images, videos, overall site and content structure, reviews, and more!

In 2025, content, site structure, and authority will matter more than ever, and SEO has a huge role to play in that.

Key Questions marketers need to address in 2025

- Is your content ready for 4-5 layered intents?

- Can you match Google’s growing complexity?

- Have you mapped your industry’s intent combinations?

Key Actions for 2025

The Pattern is clear: Simple answers → rich, context-aware responses!

- Intent Monitoring: See which intents AIO’s are serving for your space

- Query Evolution: Identify what new keyword patterns are emerging that AIO’s are serving

- Citation Structure: Align content structure to intents and queries AIO’s are focused on to ensure you are cited

- Competitive Intelligence: Track which competitor content AIOs select and why

AIOs aren’t just displaying content differently – they’re fundamentally changing how users find and interact with information.”

The takeaway from the data is that publishers should create unambiguous content that directly addresses topics in order to rank for complex search queries. It also pays to keep a careful eye on how AI Overviews are displayed and what kinds of content are cited and linked to.
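Jim Yu’s comment mentions schema tags describing a publisher’s company and content. As a hedged illustration of what such markup can look like, the Python sketch below builds schema.org `Article` structured data as JSON-LD and wraps it in a script tag. The `article_jsonld` helper, the publisher name, and the example.com URL are all hypothetical placeholders, and nothing here is confirmed by BrightEdge or Google as a way to earn AI Overview citations.

```python
import json


def article_jsonld(headline: str, author_name: str, publisher_name: str,
                   publisher_url: str, date_published: str) -> dict:
    """Build a schema.org Article description as a JSON-LD dictionary."""
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "datePublished": date_published,
        "author": {"@type": "Person", "name": author_name},
        "publisher": {
            "@type": "Organization",
            "name": publisher_name,
            "url": publisher_url,
        },
    }


# All values below are placeholders for illustration only.
markup = article_jsonld(
    headline="Example headline",
    author_name="Example Author",
    publisher_name="Example Publisher",
    publisher_url="https://www.example.com",
    date_published="2025-01-15",
)

# JSON-LD is typically embedded in the page inside a script tag of this type.
script_tag = (
    '<script type="application/ld+json">\n'
    + json.dumps(markup, indent=2)
    + "\n</script>"
)
print(script_tag)
```

Generating the markup from a function rather than hand-writing it per page keeps the structure consistent across a site, which is the kind of consistency the advice about site and content structure points toward.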

Google’s CEO, Sundar Pichai, recently emphasized increasing the amount of coverage that AI assistants like Gemini handle, which implies that Google’s focus on AI, if successful, may begin to eat into the amount of traffic from the traditional search box. That’s a trend to watch and a wake-up call to get on top of creating content that resonates with today’s AI Search.

The AIO data comes from the proprietary BrightEdge Generative Parser™ and DataCubeX, which regularly inform the BrightEdge guide to AIO.