7 SEO, Marketing, And Tech Predictions For 2026 via @sejournal, @Kevin_Indig

Previous predictions: 2018 | 2019 | 2020 | 2021 | 2022 | 2023 | 2024

This is my 8th time publishing annual predictions. As always, the goal is not to be right but to practice thinking.

For example, in 2018, I predicted “Niche communities will be discovered as a great channel for growth,” and in 2019, that “Email marketing will return.” It took another six years. In 2018, I also wrote “Smart speakers will become a viable user-acquisition channel in 2018.” Well…

All 2026 Predictions

- AI visibility tools face a reckoning.

- ChatGPT launches first quality update.

- Continued click-drops lead to a “Dark Web” defense.

- AI forces UGC platforms to separate feeds.

- ChatGPT’s ad platform provides “demand data.”

- Perplexity sells to xAI or Salesforce.

- Competition tanks Nvidia’s stock by -20%.


For the past three years, we have lived in the “generative era,” where AI could read the internet and summarize it for us. 2026 marks the beginning of the “agentic era,” where AI stops just consuming the web and starts writing to it – a shift from information retrieval to task execution.

This isn’t just a feature update; it is a fundamental restructuring of the digital economy. The web is bifurcating into two distinct layers:

- The Transactional Layer: Dominated by bots executing API calls and “Commercial Agents” (like Remarkable Alexa) that bypass the open web entirely.

- The Human Layer: Verified users and premium publishers retreating behind “Dark Web” blockades (paywalls, login gates, and C2PA content signatures) to escape the sludge of AI content.

A big question mark is advertising, where Google’s expansion of ads into AI Mode and ChatGPT showing ads to free users could alleviate pressure on CPCs, but AI Overviews (AIOs) could drive them up. 2026 could be a year of wild price swings where smart teams (your “holistic pods”) move budget daily between Google (high cost/high intent) and ChatGPT (low cost/discovery) to exploit the spread.

It is not the strongest of the species that survives, nor the most intelligent; it is the one most adaptable to change.

— Leon C. Megginson

SEO/AEO

AI Visibility Tools Face A Reckoning

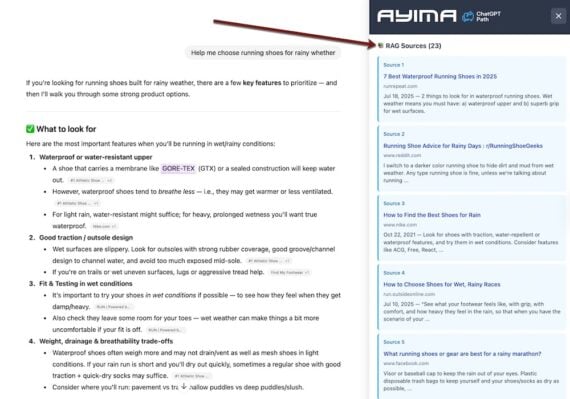

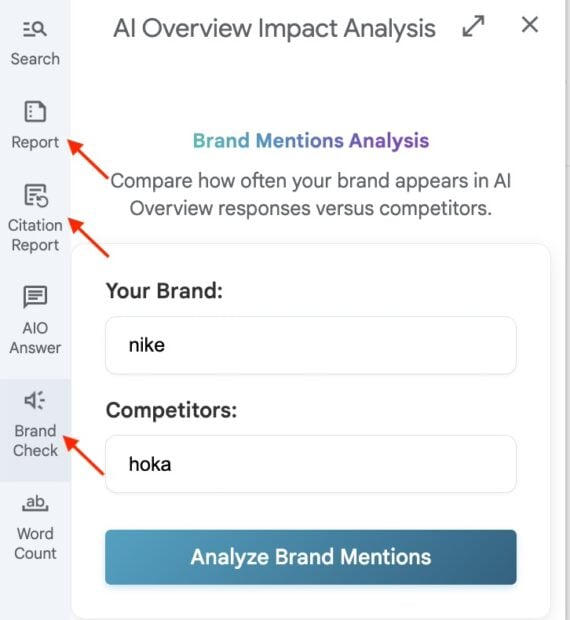

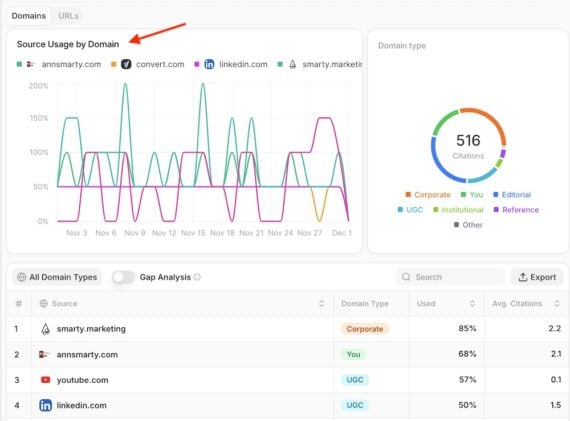

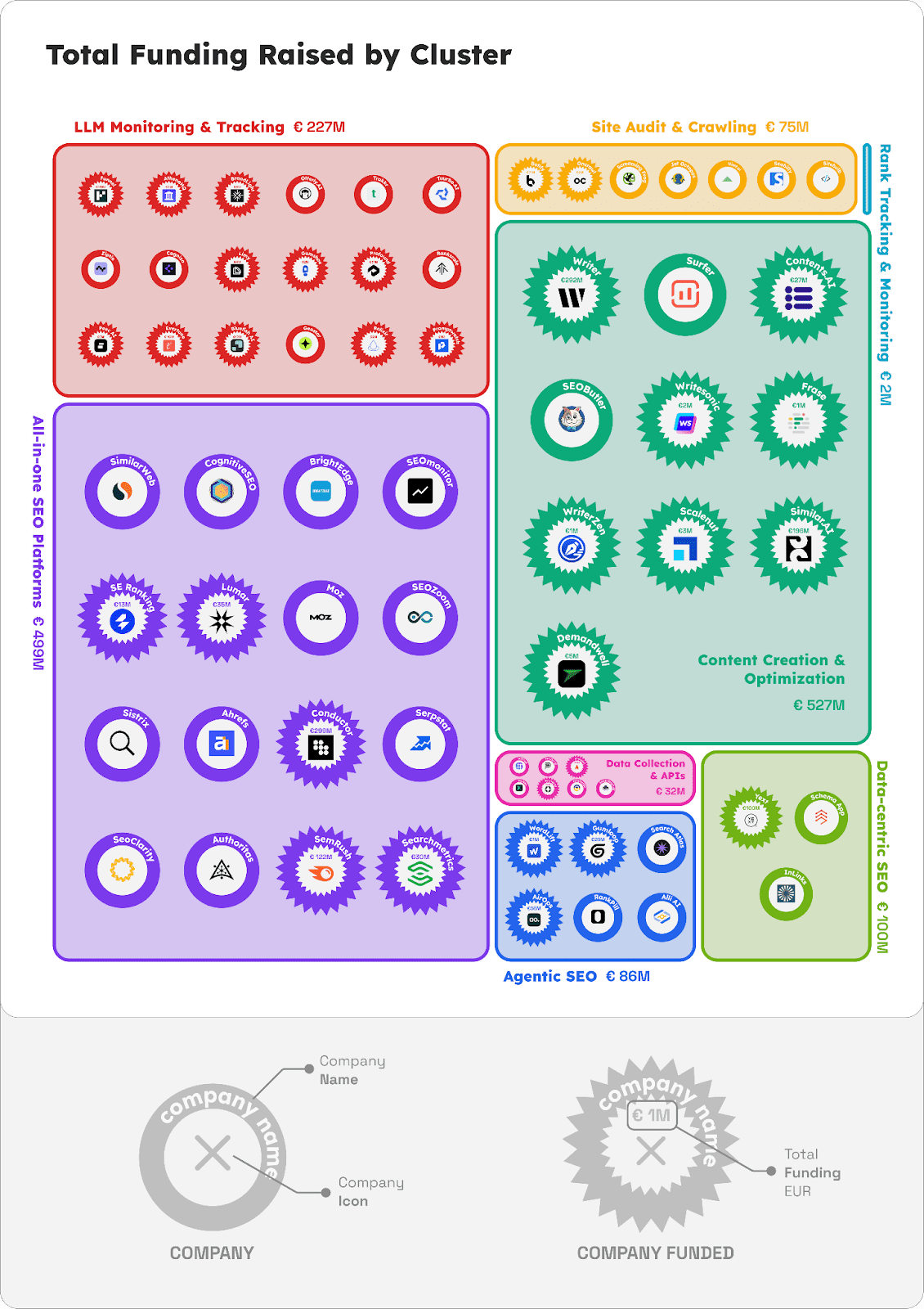

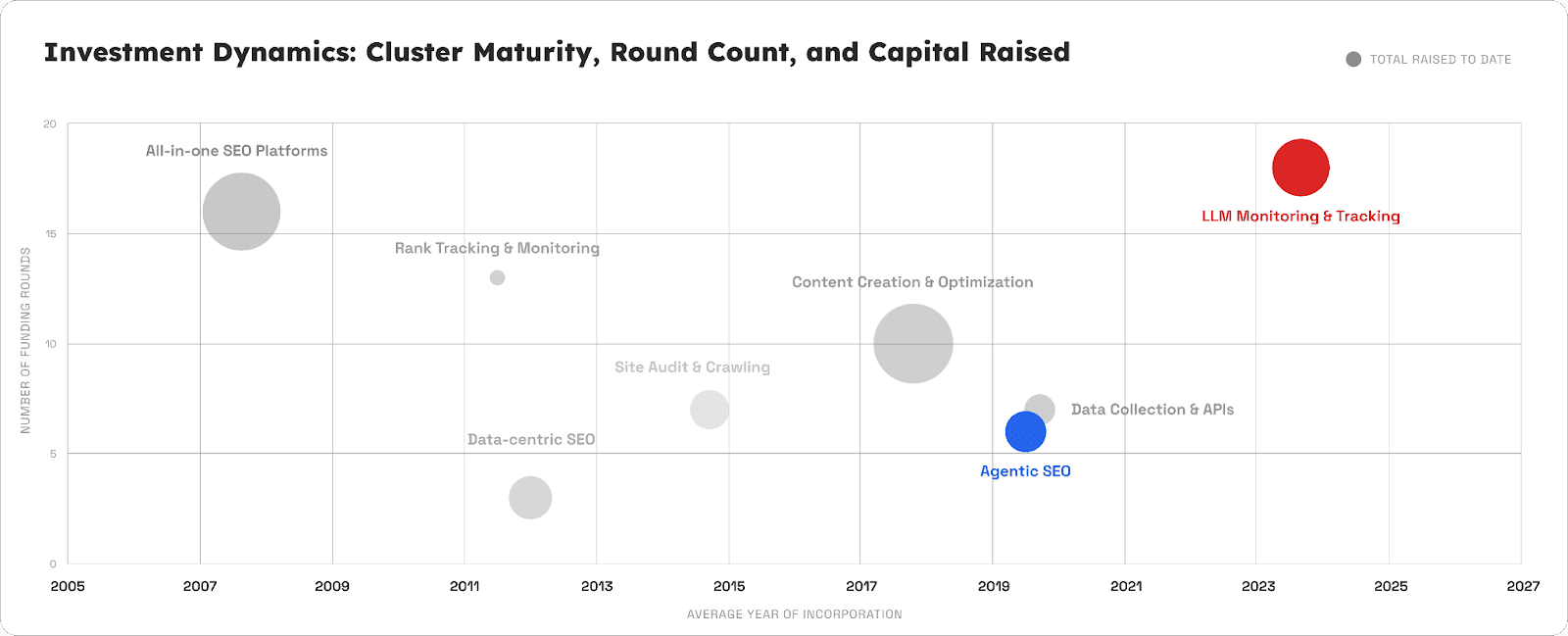

Prediction: I forecast an “Extinction Event” in Q3 2026 for the standalone AI visibility tracking category. This goes beyond simple consolidation: our analysis suggests that most pure-play tracking startups could fold or sell for parts as their 2025 funding runways expire at the same time, without the revenue growth to justify Series B rounds.

Why:

- Tracking is a feature, not a company. Amplitude built a free AI tracker in three weeks, and legacy platforms like Semrush bundled it as a checkbox, effectively destroying the standalone business model.

- Many tools have almost zero “customer voice” proof of concept (e.g., zero G2 reviews), which points to a massive valuation bubble.

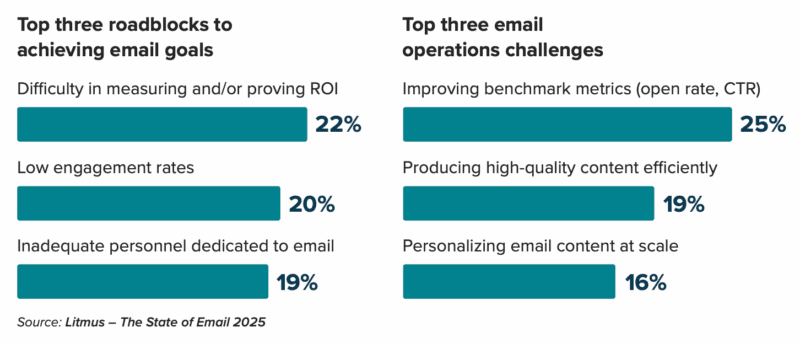

- The ROI of AI visibility optimization is still unclear and hard to prove.
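
To make the “tracking is a feature” point concrete: once a tool has collected LLM answers for a fixed prompt set, the core visibility metric can be as simple as the share of answers that mention each brand. The Python below is a minimal sketch under that assumption. Collecting the answers (calling the models) is out of scope, and the function name `mention_share`, the sample answers, and the brand names are hypothetical illustrations, not any vendor's method.

```python
import re
from collections import Counter


def mention_share(answers: list[str], brands: list[str]) -> dict[str, float]:
    """Return the fraction of answers that mention each brand at least once.

    Matching is case-insensitive and on whole words, so "Acme" does not match "Acmeville".
    """
    counts = Counter()
    for answer in answers:
        for brand in brands:
            # re.escape guards against brand names that contain regex metacharacters
            if re.search(rf"\b{re.escape(brand)}\b", answer, flags=re.IGNORECASE):
                counts[brand] += 1
    total = len(answers) or 1  # avoid division by zero on an empty sample
    return {brand: counts[brand] / total for brand in brands}


# Hypothetical answers collected for a prompt like "best project management tool"
answers = [
    "Acme and Globex are the most popular options.",
    "Many teams start with Acme.",
    "Initech is the cheapest choice.",
]
print(mention_share(answers, ["Acme", "Globex", "Initech", "Umbrella"]))
```

The hard parts named later in this section, deciding which prompts to track and how to move the numbers when much of the impact comes from third-party sites, are not solved by code like this.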

Context:

- Roughly 20 companies raised over $220 million at high valuations. 73% of those companies were founded in 2024.

- Adobe’s $1.9 billion acquisition of Semrush proves that value lies in platforms with distribution, not in isolated dashboards.

Consequences:

- Smart money will flee “read-only” tools (dashboards) and rotate into “write-access” tools (agentic SEO) that can automatically ship content and fix issues.

- There will be at most three winners among AI visibility trackers, on top of the established all-in-one platforms. Most of them will evolve into workflow automation, where most of the alpha is and where established platforms have not yet built features.

- The remaining players will sell, consolidate, pivot, or shut down.

- AI visibility tracking itself faces a crisis of (1) what to track and (2) how to influence the numbers, since a large part of impact comes from third-party sites.

ChatGPT Launches First Quality Update

Prediction: It’ll be harder for spammers to influence AI visibility in 2026 with link spam, mass-generated AI content, and cloaking. Agents will likely use Multi-Source Corroboration to eliminate the asymmetry that lets a single self-published page sway an answer.

Why:

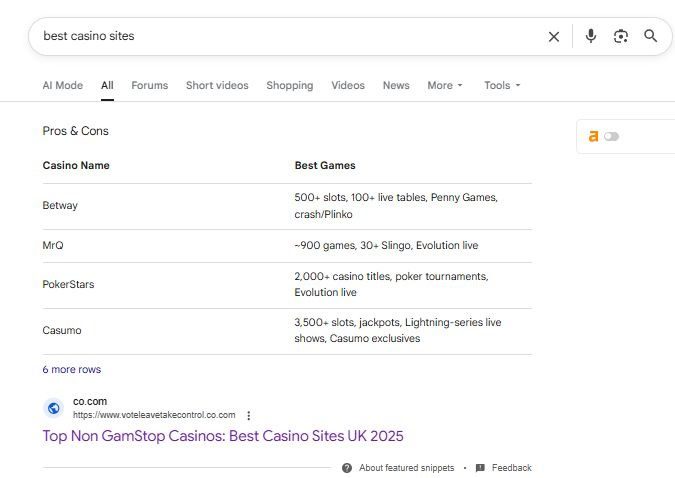

- It seems off that you can publish a listicle of top solutions on your own site, name yourself first, and thereby influence AI visibility.

- New techniques like “ReliabilityRAG” or “Multi-Agent Debate” are already available: one AI agent retrieves the information, and another acts as a “judge” that verifies it against other sources before it is shown to the user.
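
The corroboration idea can be illustrated with a simple rule: accept a claim only when enough independent domains support it, so a brand's own listicle counts once no matter how many of its pages repeat it. This is a minimal Python sketch under stated assumptions. The retriever and the judge's claim extraction are stubbed out as pre-extracted (URL, claim) pairs, the threshold of two independent domains is hypothetical, and this is not how ReliabilityRAG or any production system actually works.

```python
from collections import defaultdict
from urllib.parse import urlparse


def corroborated_claims(evidence, min_independent_domains=2):
    """Keep only claims supported by at least `min_independent_domains` distinct domains.

    evidence: iterable of (url, claim) pairs, assumed to come from a retriever plus a
    judge step that normalizes each retrieved passage into a short claim string.
    """
    supporters = defaultdict(set)
    for url, claim in evidence:
        domain = urlparse(url).netloc.lower().removeprefix("www.")
        supporters[claim].add(domain)  # a set: many pages on one domain count once
    return {
        claim: sorted(domains)
        for claim, domains in supporters.items()
        if len(domains) >= min_independent_domains
    }


# Hypothetical evidence: the self-published claim appears on two pages of one site.
evidence = [
    ("https://www.acme.example/blog/top-tools", "Acme is the best tool"),
    ("https://acme.example/compare", "Acme is the best tool"),
    ("https://review-site.example/roundup", "Globex is the best tool"),
    ("https://forum.example/thread/42", "Globex is the best tool"),
]
print(corroborated_claims(evidence))
```

Counting domains instead of pages is what blunts the self-published listicle. It does nothing against manufactured consensus, where a spammer controls several seemingly independent domains.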

Context:

- Most current agents (like standard ChatGPT, Gemini, or Perplexity) use a process called Retrieval-Augmented Generation (RAG). But RAG is still susceptible to hallucinations and errors.

- Spammers often target specific, low-volume queries (e.g., “best AI tool for underwater basket weaving”) because there is no competition. However, new “knowledge graph” integration allows AIs to infer that a basket-weaving tool shouldn’t be a crypto-scam site based on domain authority and topic relevance, even if it’s the only page on the internet with those keywords.

Consequences:

- OpenAI engineers are likely already working on better quality filters.

- LLMs will shift from pure retrieval to corroboration.

- Spammers might shift to more sophisticated tactics that try to manufacture consensus, such as buying and using zombie media outlets, cloaking, and other malicious schemes.

Continued Click-Drops Lead To A “Dark Web” Defense

Prediction: AI Overviews (AIOs) scale to 75% of keywords for big sites. AI Mode rolls out to 10-20% of queries.

Why:

- Google has said it is seeing more queries as a result of AIOs. The logical conclusion is to show even more AIOs.

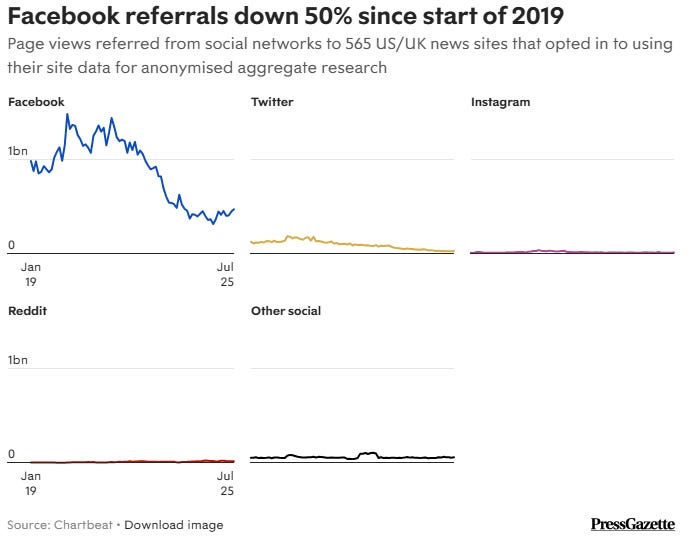

- CTR for organic search results had already tanked from 1.41% to 0.64% by January. Since January, paid CTR has dropped from 14.92% to 6.34%, a decline of roughly 57%.
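
The relative declines implied by these CTR figures work out as follows, using relative change = (new − old) / old:

$$
\Delta_{\text{organic}} = \frac{0.64\% - 1.41\%}{1.41\%} \approx -54.6\%,
\qquad
\Delta_{\text{paid}} = \frac{6.34\% - 14.92\%}{14.92\%} \approx -57.5\%
$$

Put differently, paid CTR ended at about 42.5% of its starting level, and organic CTR at about 45.4% of its starting level.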

Context:

- Big sites already see AIOs for ~50% of their keywords.

- Google started testing ads in AI Mode. If successful, Google would feel more confident to roll out AI Mode more broadly, and the investor story would sound better.

- 80% of consumers now use AI summaries for at least 40% of their searches, according to Bain.

- 2025 saw a massive purge in digital media, with major layoffs at networks like NBC News, BBC, and tech publishers as they restructured for a “post-traffic” world.

Consequences:

- Publishers monetize audiences directly instead of through ads and move to “experience-based” content (firsthand reviews, contrarian opinions, proprietary data) because AI cannot experience things. The space consolidates further (layoffs, acquisitions, Chapter 11 bankruptcies).

- By 2026, we expect a massive wave of “LLM blockades.” Major publishers will update their robots.txt to block Google-Extended and GPTBot, forcing users to visit the site to see the answer. This creates a “Dark Web” of high-quality content that AI cannot see, bifurcating the internet into AI slop (free) and human insight (paid).
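
Such a blockade takes only a few lines of robots.txt. The Python below is a minimal sketch that parses a hypothetical publisher robots.txt with the standard library's `urllib.robotparser` and checks which user agents may fetch an article URL; the file contents and the URL are assumptions for illustration. Two caveats: robots.txt is honored voluntarily by crawlers and does not technically prevent access, and Google's crawler documentation describes Google-Extended as a control over Gemini model use that does not affect a site's inclusion in Google Search, so blocking it alone would not be expected to remove pages from AI Overviews.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for a publisher that blocks two AI-related tokens
# but stays open to every other crawler.
ROBOTS_TXT = """\
User-agent: GPTBot
User-agent: Google-Extended
Disallow: /

User-agent: *
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse() also marks the rules as freshly loaded

article = "https://publisher.example/reviews/running-shoes"
for agent in ("GPTBot", "Google-Extended", "Googlebot"):
    status = "allowed" if parser.can_fetch(agent, article) else "blocked"
    print(f"{agent}: {status}")
```

Grouping several `User-agent` lines above one `Disallow` applies the same rule to each listed token, which keeps the blocklist short as new AI crawlers appear.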

Marketing

AI Forces UGC Platforms To Separate Feeds

Prediction: By 2026, “identity spoofing” will become the single largest cybersecurity risk for public companies. We move from “Is this content real?” to “Is this source verified?”

Why:

- Real influencers are risky (scandals, contract disputes). AI influencers are brand-safe assets that work 24/7/365 and never say anything controversial unless prompted. Brands will pay a premium to avoid humans.

Context:

- Deepfake fraud attempts increased 257% in 2024. Most detection tools currently have a 20%+ false positive rate, making them hard to use for platforms like YouTube without killing legitimate creator reach.

- Example: In 2024, the engineering firm Arup lost $25 million when an employee was tricked by a deepfake video conference call where the “CFO” and other colleagues were all AI simulations.

- In May 2023, a fake AI image of an explosion at the Pentagon caused a momentary dip in the S&P 500.

Consequences:

- Cryptographic signatures (C2PA) become the only proof of reality for video.

- YouTube and LinkedIn will likely split feeds into “verified human” (requires ID + biometric scan) and “synthetic/unverified.”

- “Blue checks” won’t just be for status, but a security requirement to comment or post video, effectively ending anonymity for high-reach accounts.

- Platforms will be forced by regulators (EU AI Act, August 2026 deadline) to label AI content.

- Cameras (Sony, Canon) and iPhones will start embedding C2PA digital signatures at the hardware level. If a video lacks this “chain of custody” metadata, platforms will auto-label it as “unverified/synthetic.”
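
The auto-labeling rule in the last bullet could look roughly like the Python below. It is a simplified policy sketch, not the C2PA specification or any platform's pipeline: the manifest is modeled as a plain dictionary with hypothetical keys, verification of the manifest's certificate-based signature is assumed to happen upstream and arrive as a boolean, and only the hash binding between the manifest and the video bytes is checked here.

```python
import hashlib
from typing import Optional


def label_upload(video: bytes, manifest: Optional[dict]) -> str:
    """Return a feed label for an uploaded video.

    manifest (hypothetical shape): {"signature_valid": bool, "content_sha256": str}
    """
    if manifest is None:
        return "unverified/synthetic"  # no chain-of-custody metadata at all
    if not manifest.get("signature_valid", False):
        return "unverified/synthetic"  # metadata present, but the signer is not trusted
    if manifest.get("content_sha256") != hashlib.sha256(video).hexdigest():
        return "unverified/synthetic"  # bytes changed after signing
    return "verified"


clip = b"...raw video bytes..."
signed = {"signature_valid": True, "content_sha256": hashlib.sha256(clip).hexdigest()}
print(label_upload(clip, signed))            # verified: signed at capture, untouched
print(label_upload(clip + b"edit", signed))  # unverified/synthetic: altered after signing
print(label_upload(clip, None))              # unverified/synthetic: no metadata
```

Real C2PA workflows can record legitimate edits by adding a new signed manifest that references the original, which this sketch ignores; it only shows why missing or mismatched metadata would push a video into the “unverified/synthetic” feed.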

ChatGPT’s Ad Platform Provides “Demand Data”

Prediction: OpenAI shifts to a hybrid pricing model in 2026: an “ad-supported free tier” and a “credit-based pro tier.”

Why:

- Inference costs are skyrocketing. A heavy user paying $20/month can easily burn $100+ of compute, making them unprofitable.

Context:

- Leaked code in the ChatGPT Android App (v1.2025.329) explicitly references “search ads carousel” and “bazaar content.”

Consequences:

- Free users will see “sponsored citations” and product cards (ads) in their answers.

- Power users will face “compute credits” – a base subscription gets you standard GPT-5, but heavy use of deep research or reasoning agents will require buying top-up packs.

- We get a Search Console-style interface. Brands need data. If OpenAI wants to sell ads, it must give brands a dashboard showing, “Your product was recommended in 5,000 chats about running shoes.” The data will add fuel to the fire for AEO/GEO/LLMO/SEO.

- The leaked term “bazaar content” suggests OpenAI might not just show ads, but allow transactions inside the chat (e.g., “Book this flight”) where they take a cut. This moves OpenAI from a software company to a marketplace (like the App Store), effectively competing with Amazon and Expedia.
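
To see how a credit-based tier would change the economics of the heavy user described above, here is a minimal Python sketch of base-plus-top-up billing. Every number in it is hypothetical, since OpenAI has not published credit prices: the base plan uses the $20/month from the text, it is assumed to bundle 2,000 credits, top-ups are assumed to come in 1,000-credit packs for $10, and one credit is assumed to cost about $0.01 of compute.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class CreditPlan:
    # All defaults are hypothetical illustrations, not announced OpenAI pricing.
    base_price_usd: float = 20.0   # monthly subscription
    included_credits: int = 2_000  # credits bundled with the subscription
    pack_credits: int = 1_000      # credits per top-up pack
    pack_price_usd: float = 10.0   # price per top-up pack


def monthly_bill(plan: CreditPlan, credits_used: int) -> float:
    """Base price plus however many whole top-up packs cover usage beyond the bundle."""
    overage = max(0, credits_used - plan.included_credits)
    packs = math.ceil(overage / plan.pack_credits)
    return plan.base_price_usd + packs * plan.pack_price_usd


plan = CreditPlan()
COMPUTE_COST_PER_CREDIT_USD = 0.01  # assumption: 1 credit ~ $0.01 of inference

for credits in (1_500, 10_000):
    bill = monthly_bill(plan, credits)
    cost = credits * COMPUTE_COST_PER_CREDIT_USD
    print(f"{credits:>6} credits: bill ${bill:.2f}, compute ${cost:.2f}, margin ${bill - cost:.2f}")
```

Under these assumptions, the heavy user who burns $100 of compute on a flat $20 plan goes from an $80 monthly loss to roughly break-even, while light users keep paying the flat price.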

Tech

Perplexity Sells To xAI Or Salesforce

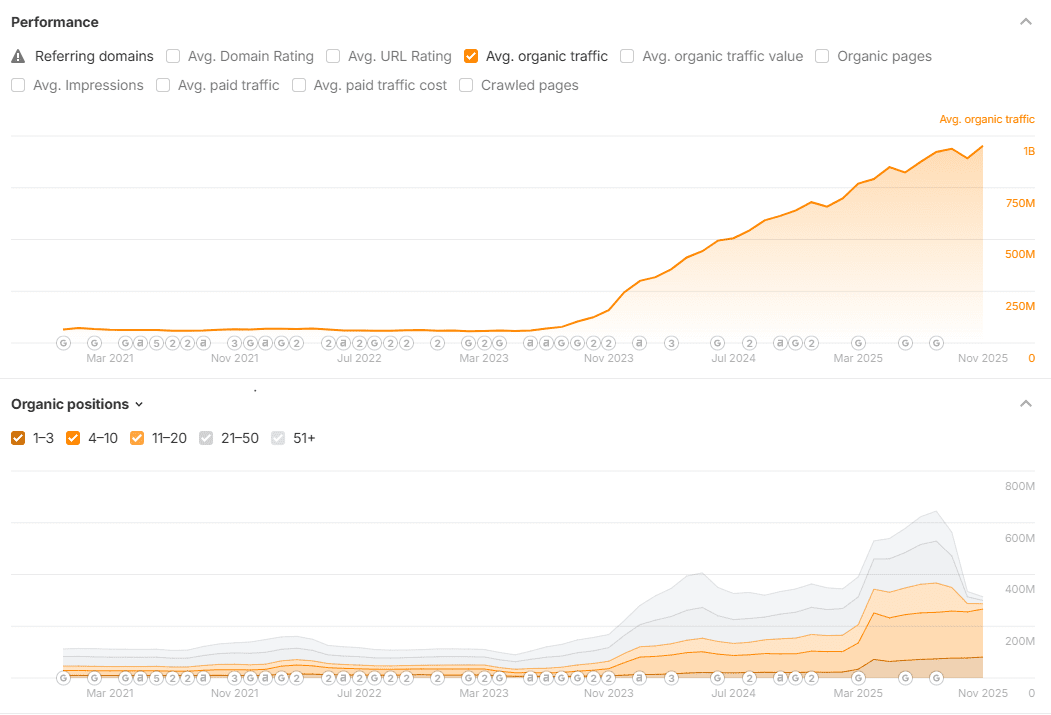

Prediction: Perplexity will be acquired in late 2026 for $25-$30 billion. After its user growth plateaus at ~50 million MAU, the “unit economics wall” forces a sale to a giant that needs its technology (real-time RAG), not its business model.

Why:

- In late 2025, Perplexity raised capital at a $20 billion valuation (roughly 100x its ~$200 million ARR). To justify this, it needs Facebook-level growth. However, 2025 data shows it hit a ceiling at ~30 million users while ChatGPT surged to 800+ million.

- By 2026, Google and OpenAI will have effectively cloned Perplexity’s core feature (Deep Research) and given it away for free.

Context:

- While Perplexity grew 66% YoY in 2025 to ~30 million monthly active users (MAU), this pales in comparison to ChatGPT’s 800+ million.

- It costs ~10x more to run a Perplexity deep search query than a standard Google search. Without a high-margin ad network (which takes a decade to build), they burn cash on every free user, creating a “negative scale” problem.

- Salesforce acquired Informatica for ~$8 billion in 2025 specifically to power its Agentforce strategy. This proves Benioff is willing to spend billions to own the data layer for enterprise agents.

- xAI raised over $20 billion in late 2025, valuing the company at $200 billion. Musk has the liquid cash to buy Perplexity tomorrow to fix Grok’s hallucination problems.
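
The “negative scale” problem is simple arithmetic: if each free user costs more to serve than they bring in, losses grow linearly with the user base. The Python below is a sketch with hypothetical inputs. Only the ~10x cost ratio and the user counts echo the text; the absolute cost per query, queries per user, and revenue per user are illustrative assumptions.

```python
def monthly_free_tier_result(users: int, queries_per_user: int,
                             cost_per_query_usd: float, revenue_per_user_usd: float) -> float:
    """Monthly profit (negative means loss) from serving free users."""
    cost = users * queries_per_user * cost_per_query_usd
    revenue = users * revenue_per_user_usd
    return revenue - cost


# Hypothetical inputs: a standard search query costs $0.002 to serve, so a deep
# search query at ~10x costs $0.02; each free user runs 50 queries a month and
# generates $0.50 of monetization.
DEEP_QUERY_COST = 10 * 0.002
for users in (10_000_000, 30_000_000, 50_000_000):
    result = monthly_free_tier_result(users, 50, DEEP_QUERY_COST, 0.50)
    print(f"{users / 1e6:.0f}M users -> ${result / 1e6:,.1f}M per month")
```

With a negative margin per user, growth deepens the loss instead of fixing it; the only levers are a lower cost per query or higher revenue per user, which is why a high-margin ad network matters so much in the argument above.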

Consequences:

- xAI has the cash, and Musk needs a “real-time truth engine” for Grok. Perplexity could make X (Twitter) a more powerful news engine. Grok (X’s current AI) learns from tweets, but Perplexity cites sources that can reduce hallucination. Perplexity could also give xAI a browser, bringing it closer to Musk’s vision of a super app.

- Marc Benioff wants to own “enterprise search.” Imagine a Salesforce Agent that can search the entire public web (via Perplexity) + your private CRM data to write a perfect sales email.

Competition Tanks Nvidia’s Stock By -20%

Prediction: Nvidia stock will correct by >20% in 2026 as its largest customers successfully shift 15-20% of their workloads to custom internal silicon. This causes a P/E compression from ~45x to ~30x as the market realizes Nvidia is no longer a monopoly, but a “competitor” in a commoditized market. (Not investment advice!)
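
The link between the multiple compression and the price drop is direct if earnings per share stay flat over the period (a simplifying assumption): price equals the P/E multiple times earnings per share, so the price moves in proportion to the multiple.

$$
P = \text{P/E} \times \text{EPS}
\quad\Rightarrow\quad
\frac{P_{\text{new}}}{P_{\text{old}}} = \frac{30 \times \text{EPS}}{45 \times \text{EPS}} = \frac{2}{3} \approx 0.667
$$

That is a decline of roughly 33%, beyond the 20% threshold in the prediction; any earnings growth during 2026 would offset part of it.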

Why:

- Microsoft, Meta, Google, and Amazon likely account for over 40% of Nvidia’s revenue. For them, Nvidia is a tax on their margins. They are currently spending ~$300 billion combined on CAPEX in 2025, but a growing portion is now allocated to their own chip supply chains rather than Nvidia H100s/Blackwells.

- Hyperscalers don’t need chips that beat Nvidia on raw specs; they just need chips that are “good enough” for internal inference (running models), which accounts for 80-90% of compute demand.
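
For a rough sense of the revenue at stake: if these four customers account for about 40% of Nvidia’s revenue and move 15-20% of their workloads to in-house chips, and if Nvidia’s sales to them fall in proportion (an assumption, since orders don’t map one-to-one to workloads), the direct hit is

$$
0.40 \times 0.15 = 6\% \quad\text{to}\quad 0.40 \times 0.20 = 8\% \text{ of total revenue.}
$$

On these numbers, most of the predicted price move would come from the multiple compression rather than from lost revenue alone.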

Context:

- In late 2025, reports surfaced that Meta was negotiating to buy/rent Google’s TPU v6 (Trillium) chips to reduce its reliance on Nvidia.

- AWS Trainium 2 & 3 chips are reportedly 30-50% cheaper to operate than Nvidia H100s for specific workloads. Amazon is aggressively pushing these cheaper instances to startups to lock them into the AWS silicon ecosystem.

- Microsoft’s Maia 100 is now actively handling internal Azure OpenAI workloads. Every workload shifted to Maia is an H100 Nvidia didn’t sell.

- Reports confirm OpenAI is partnering with Broadcom to mass-produce its own custom AI inference chip in 2026, directly attacking Nvidia’s dominance in the “Model Serving” market.

- Fun fact: Without Nvidia, the S&P 500 would have gained 3 percentage points less in 2025.

Consequences:

- Nvidia will react by refusing to sell just chips. They will push the GB200 NVL72 – a massive, liquid-cooled supercomputer rack that costs millions. This forces customers to buy the entire Nvidia ecosystem (networking, cooling, CPUs), making it physically impossible to swap in a Google TPU or Amazon chip later.

- If hyperscalers signal even a 5% cut in Nvidia orders to favor their own chips, Wall Street will panic-sell, fearing the peak of the AI Infrastructure Cycle has passed.

Featured Image: Paulo Bobita/Search Engine Journal