One year ago, on July 21, 2023, seven leading AI companies—Amazon, Anthropic, Google, Inflection, Meta, Microsoft, and OpenAI—committed with the White House to a set of eight voluntary commitments on how to develop AI in a safe and trustworthy way.

These included promises to do things like improve the testing and transparency around AI systems, and share information on potential harms and risks.

On the first anniversary of the voluntary commitments, MIT Technology Review asked the AI companies that signed the commitments for details on their work so far. Their replies show that the tech sector has made some welcome progress, with big caveats.

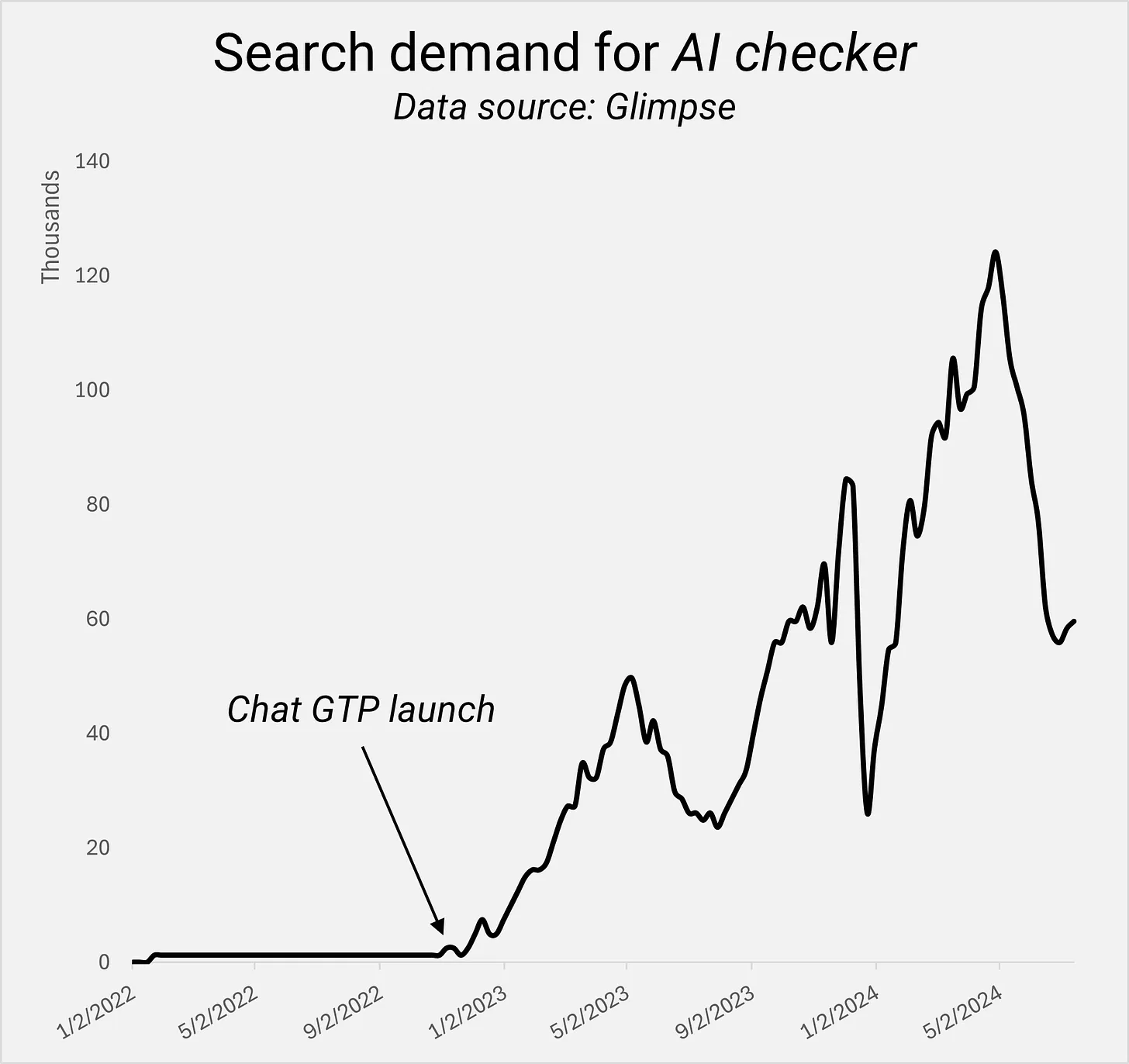

The voluntary commitments came at a time when generative AI mania was perhaps at its frothiest, with companies racing to launch their own models and make them bigger and better than their competitors’. At the same time, we started to see developments such as fights over copyright and deepfakes. A vocal lobby of influential tech players, such as Geoffrey Hinton, had also raised concerns that AI could pose an existential risk to humanity. Suddenly, everyone was talking about the urgent need to make AI safe, and regulators everywhere were under pressure to do something about it.

Until very recently, AI development has been a Wild West. Traditionally, the US has been loath to regulate its tech giants, instead relying on them to regulate themselves. The voluntary commitments are a good example of that: they were some of the first prescriptive rules for the AI sector in the US, but they remain voluntary and unenforceable. The White House has since issued an executive order, which expands on the commitments and also applies to other tech companies and government departments.

“One year on, we see some good practices towards their own products, but [they’re] nowhere near where we need them to be in terms of good governance or protection of rights at large,” says Merve Hickok, the president and research director of the Center for AI and Digital Policy, who reviewed the companies’ replies as requested by MIT Technology Review. Many of these companies continue to push unsubstantiated claims about their products, such as saying that they can supersede human intelligence and capabilities, adds Hickok.

One trend that emerged from the tech companies’ answers is that companies are doing more to pursue technical fixes such as red-teaming (in which humans probe AI models for flaws) and watermarks for AI-generated content.

But it’s not clear what the commitments have changed and whether the companies would have implemented these measures anyway, says Rishi Bommasani, the society lead at the Stanford Center for Research on Foundation Models, who also reviewed the responses for MIT Technology Review.

One year is a long time in AI. Since the voluntary commitments were signed, Inflection AI founder Mustafa Suleyman has left the company and joined Microsoft to lead Microsoft’s AI efforts. Inflection declined to comment.

“We’re grateful for the progress leading companies have made toward fulfilling their voluntary commitments in addition to what is required by the executive order,” says Robyn Patterson, a spokesperson for the White House. But, Patterson adds, the president continues to call on Congress to pass bipartisan legislation on AI.

Without comprehensive federal legislation, the best the US can do right now is to demand that companies follow through on these voluntary commitments, says Brandie Nonnecke, the director of the CITRIS Policy Lab at UC Berkeley.

But it’s worth bearing in mind that “these are still companies that are essentially writing the exam by which they are evaluated,” says Nonnecke. “So we have to think carefully about whether or not they’re … verifying themselves in a way that is truly rigorous.”

Here’s our assessment of the progress AI companies have made in the past year.

Commitment 1

The companies commit to internal and external security testing of their AI systems before their release. This testing, which will be carried out in part by independent experts, guards against some of the most significant sources of AI risks, such as biosecurity and cybersecurity, as well as its broader societal effects.

All the companies (excluding Inflection, which chose not to comment) say they conduct red-teaming exercises that get both internal and external testers to probe their models for flaws and risks. OpenAI says it has a separate preparedness team that tests models for cybersecurity, chemical, biological, radiological, and nuclear threats and for situations where a sophisticated AI model can do or persuade a person to do things that might lead to harm. Anthropic and OpenAI also say they conduct these tests with external experts before launching their new models. For example, for the launch of Anthropic’s latest model, Claude 3.5, the company conducted predeployment testing with experts at the UK’s AI Safety Institute. Anthropic has also allowed METR, a research nonprofit, to do an “initial exploration” of Claude 3.5’s capabilities for autonomy. Google says it also conducts internal red-teaming to test the boundaries of its model, Gemini, around election-related content, societal risks, and national security concerns. Microsoft says it has worked with third-party evaluators at NewsGuard, an organization advancing journalistic integrity, to assess and mitigate the risk of abusive deepfakes in Microsoft’s text-to-image tool. In addition to red-teaming, Meta says, it evaluated its latest model, Llama 3, to understand its performance in a series of risk areas like weapons, cyberattacks, and child exploitation.

But when it comes to testing, it’s not enough to just report that a company is taking actions, says Bommasani. For example, Amazon and Anthropic said they had worked with the nonprofit Thorn to combat risks to child safety posed by AI. Bommasani would have wanted to see more specifics about how the interventions that companies are implementing actually reduce those risks.

“It should become clear to us that it’s not just that companies are doing things but those things are having the desired effect,” Bommasani says.

RESULT: Good. The push for red-teaming and testing for a wide range of risks is a good and important one. However, Hickok would have liked to see independent researchers get broader access to companies’ models.

Commitment 2

The companies commit to sharing information across the industry and with governments, civil society, and academia on managing AI risks. This includes best practices for safety, information on attempts to circumvent safeguards, and technical collaboration.

After they signed the commitments, Anthropic, Google, Microsoft, and OpenAI founded the Frontier Model Forum, a nonprofit that aims to facilitate discussions and actions on AI safety and responsibility. Amazon and Meta have also joined.

Engaging with nonprofits that the AI companies funded themselves may not be in the spirit of the voluntary commitments, says Bommasani. But the Frontier Model Forum could be a way for these companies to cooperate with each other and pass on information about safety, which they normally could not do as competitors, he adds.

“Even if they’re not going to be transparent to the public, one thing you might want is for them to at least collectively figure out mitigations to actually reduce risk,” says Bommasani.

All seven signatories are also part of the Artificial Intelligence Safety Institute Consortium (AISIC), established by the National Institute of Standards and Technology (NIST), which develops guidelines and standards for AI policy and evaluation of AI performance. It is a large consortium consisting of a mix of public- and private-sector players. Google, Microsoft, and OpenAI also have representatives on the UN’s High-Level Advisory Body on Artificial Intelligence.

Many of the labs also highlighted their research collaborations with academics. For example, Google is part of MLCommons, where it worked with academics on a cross-industry AI Safety Benchmark. Google also says it actively contributes tools and resources, such as computing credits, to projects like the National Science Foundation’s National AI Research Resource pilot, which aims to democratize AI research in the US.

Many of the companies also contributed to guidance on the deployment of foundation models from the Partnership on AI, another nonprofit founded by Amazon, Facebook, Google, DeepMind, Microsoft, and IBM.

RESULT: More work is needed. More information sharing is a welcome step as the industry tries to collectively make AI systems safe and trustworthy. However, it’s unclear how much of the effort advertised will actually lead to meaningful changes and how much is window dressing.

Commitment 3

The companies commit to investing in cybersecurity and insider threat safeguards to protect proprietary and unreleased model weights. These model weights are the most essential part of an AI system, and the companies agree that it is vital that the model weights be released only when intended and when security risks are considered.

Many of the companies have implemented new cybersecurity measures in the past year. For example, Microsoft has launched the Secure Future Initiative to address the growing scale of cyberattacks. The company says its model weights are encrypted to mitigate the potential risk of model theft, and it applies strong identity and access controls when deploying highly capable proprietary models.

Google has also launched an AI Cyber Defense Initiative. In May, OpenAI shared six new measures it is developing to complement its existing cybersecurity practices, such as extending cryptographic protection to AI hardware. It also has a Cybersecurity Grant Program, which gives researchers access to its models to build cyber defenses.

Amazon said it has also taken measures against attacks unique to generative AI, such as data poisoning, in which attackers corrupt the data a model is trained on, and prompt injection, in which someone uses prompts that direct the language model to ignore its previous directions and safety guardrails.

Just a couple of days after signing the commitments, Anthropic published details about its protections, which include common cybersecurity practices such as controlling who has access to the models and sensitive assets such as model weights, and inspecting and controlling the third-party supply chain. The company also works with independent assessors to evaluate whether the controls it has designed meet its cybersecurity needs.

RESULT: Good. All of the companies did say they had taken extra measures to protect their models, although it doesn’t seem there is much consensus on the best way to protect AI models.

Commitment 4

The companies commit to facilitating third-party discovery and reporting of vulnerabilities in their AI systems. Some issues may persist even after an AI system is released and a robust reporting mechanism enables them to be found and fixed quickly.

For this commitment, one of the most popular responses was to implement bug bounty programs, which reward people who find flaws in AI systems. Anthropic, Google, Microsoft, Meta, and OpenAI all have one for AI systems. Anthropic and Amazon also said they have forms on their websites where security researchers can submit vulnerability reports.

It will likely take us years to figure out how to do third-party auditing well, says Nonnecke. “It’s not just a technical challenge. It’s a socio-technical challenge. And it just kind of takes years for us to figure out not only the technical standards of AI, but also socio-technical standards, and it’s messy and hard,” she says.

Nonnecke says she worries that the first companies to implement third-party audits might set poor precedents for how to think about and address the socio-technical risks of AI. For example, audits might define, evaluate, and address some risks but overlook others.

RESULT: More work is needed. Bug bounties are great, but they’re nowhere near comprehensive enough. New laws, such as the EU’s AI Act, will require tech companies to conduct audits, and it would have been great to see tech companies share successful examples of such audits.

Commitment 5

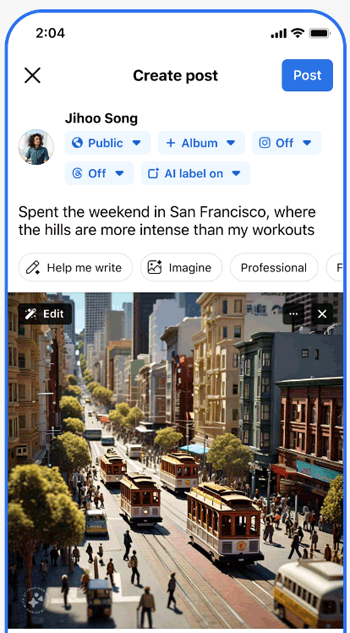

The companies commit to developing robust technical mechanisms to ensure that users know when content is AI generated, such as a watermarking system. This action enables creativity with AI to flourish but reduces the dangers of fraud and deception.

Many of the companies have built watermarks for AI-generated content. For example, Google launched SynthID, a watermarking tool for image, audio, text, and video generated by Gemini. Meta has a tool called Stable Signature for images, and AudioSeal for AI-generated speech. Amazon now adds an invisible watermark to all images generated by its Titan Image Generator. OpenAI also uses watermarks in Voice Engine, its custom voice model, and has built an image-detection classifier for images generated by DALL-E 3. Anthropic was the only company that hadn’t built a watermarking tool, because watermarks are mainly used in images, which the company’s Claude model doesn’t generate.

All the companies excluding Inflection, Anthropic, and Meta are also part of the Coalition for Content Provenance and Authenticity (C2PA), an industry coalition whose standard embeds information about when content was created, and whether it was created or edited by AI, into an image’s metadata. Microsoft and OpenAI automatically attach the C2PA’s provenance metadata to images generated with DALL-E 3 and videos generated with Sora. While Meta is not a member, it announced it is using the C2PA standard to identify AI-generated images on its platforms.

The companies that signed the commitments have a “natural preference to more technical approaches to addressing risk,” says Bommasani, “and certainly watermarking in particular has this flavor.”

“The natural question is: Does [the technical fix] meaningfully make progress and address the underlying social concerns that motivate why we want to know whether content is machine generated or not?” he adds.

RESULT: Good. This is an encouraging result overall. While watermarking remains experimental and unreliable, it’s good to see research around it and a commitment to the C2PA standard. It’s better than nothing, especially during a busy election year.

Commitment 6

The companies commit to publicly reporting their AI systems’ capabilities, limitations, and areas of appropriate and inappropriate use. This report will cover both security risks and societal risks, such as the effects on fairness and bias.

The White House’s commitments leave a lot of room for interpretation. For example, companies can technically meet this public reporting commitment with widely varying levels of transparency, as long as they do something in that general direction.

The most common solutions tech companies offered here were so-called model cards. Each company calls them by a slightly different name, but in essence they act as a kind of product description for AI models. They can address anything from the model’s capabilities and limitations (including how it measures up against benchmarks on fairness and explainability) to veracity, robustness, governance, privacy, and security. Anthropic said it also tests models for potential safety issues that may arise later.

Microsoft has published an annual Responsible AI Transparency Report, which provides insight into how the company builds applications that use generative AI, makes decisions, and oversees the deployment of those applications. The company also says it gives clear notice on where and how AI is used within its products.

RESULT: More work is needed. One area of improvement for AI companies would be to increase transparency on their governance structures and on the financial relationships between companies, Hickok says. She would also have liked to see companies be more public about data provenance, model training processes, safety incidents, and energy use.

Commitment 7

The companies commit to prioritizing research on the societal risks that AI systems can pose, including on avoiding harmful bias and discrimination, and protecting privacy. The track record of AI shows the insidiousness and prevalence of these dangers, and the companies commit to rolling out AI that mitigates them.

Tech companies have been busy on the safety research front, and they have embedded their findings into products. Amazon has built guardrails for Amazon Bedrock that can detect hallucinations and can apply safety, privacy, and truthfulness protections. Anthropic says it employs a team of researchers dedicated to researching societal risks and privacy. In the past year, the company has pushed out research on deception, jailbreaking, strategies to mitigate discrimination, and emergent capabilities such as models’ ability to tamper with their own code or engage in persuasion. And OpenAI says it has trained its models to avoid producing hateful content and to refuse requests for hateful or extremist output. It trained GPT-4V to refuse many requests that require drawing from stereotypes to answer. Google DeepMind has also released research to evaluate dangerous capabilities, and the company has done a study on misuses of generative AI.

All of them have poured a lot of money into this area of research. For example, Google has invested millions into creating a new AI Safety Fund to promote research in the field through the Frontier Model Forum. Microsoft says it has committed $20 million in compute credits to researching societal risks through the National AI Research Resource and started its own AI model research accelerator program for academics, called the Accelerating Foundation Models Research program. The company has also hired 24 research fellows focusing on AI and society.

RESULT: Very good. This is an easy commitment to meet, as the signatories are some of the biggest and richest corporate AI research labs in the world. While more research into how to make AI systems safe is a welcome step, critics say that the focus on safety research takes attention and resources from AI research that focuses on more immediate harms, such as discrimination and bias.

Commitment 8

The companies commit to develop and deploy advanced AI systems to help address society’s greatest challenges. From cancer prevention to mitigating climate change to so much in between, AI—if properly managed—can contribute enormously to the prosperity, equality, and security of all.

Since making this commitment, tech companies have tackled a diverse set of problems. For example, Pfizer used Claude to assess trends in cancer treatment research after gathering relevant data and scientific content, and Gilead, an American biopharmaceutical company, used generative AI from Amazon Web Services to do feasibility evaluations on clinical studies and analyze data sets.

Google DeepMind has a particularly strong track record in pushing out AI tools that can help scientists. For example, AlphaFold 3 can predict the structure and interactions of all life’s molecules. AlphaGeometry can solve geometry problems at a level comparable with the world’s brightest high school mathematicians. And GraphCast is an AI model that is able to make medium-range weather forecasts. Meanwhile, Microsoft has used satellite imagery and AI to improve responses to wildfires in Maui and map climate-vulnerable populations, which helps researchers expose risks such as food insecurity, forced migration, and disease.

OpenAI has announced partnerships and funding for various research projects, such as one looking at how multimodal AI models can be used safely by educators and by scientists in laboratory settings. It has also offered credits to help researchers use its platforms during hackathons on clean energy development.

RESULT: Very good. Some of the work on using AI to boost scientific discovery or predict weather events is genuinely exciting. AI companies haven’t used AI to prevent cancer yet, but that’s a pretty high bar.

Overall, there have been some positive changes in the way AI has been built, such as red-teaming practices, watermarks, and new ways for industry to share best practices. However, these are only a couple of neat technical solutions to the messy socio-technical problem that is AI harm, and a lot more work is needed. One year on, it is also odd to see the commitments talk about a very particular type of AI safety that focuses on hypothetical risks, such as bioweapons, and completely fail to mention consumer protection, nonconsensual deepfakes, data and copyright, and the environmental footprint of AI models. These seem like weird omissions today.