
Here’s what I’m covering this week: How to build user personas for SEO from data you already have on hand.

You can’t treat personas as a “brand exercise” anymore.

In the AI-search era, prompts don’t just tell you what users want; they reveal who’s asking and under what constraints.

If your pages don’t match the person behind the query and connect with them quickly – their role, the risks and concerns they have, and the proof they require to resolve the intent – you’re not likely to win the click or the conversion.

It’s time to not only pay attention and listen to your customers, but also optimize for their behavioral patterns.

Search used to be simple: queries = intent. You matched a keyword to a page and called it a day.

Personas were a nice-to-have, often useful for ads and creative or UX decisions, but most teams considered them irrelevant to organic visibility or growth.

Not anymore.

Longer prompts and personalized results don’t just express what someone wants; they also expose who they are and the constraints they’re operating under.

AIOs and AI chats act as a preview layer and borrow trust from known brands. However, blue links still close when your content speaks to the person behind the prompt.

If that sounds like hard work, it is. And it’s why most teams stall when implementing search personas across their strategy.

- Personas can feel expensive, generic, academic, or agency-driven.

- The old persona PDFs your brand invested in 3-5 years ago are dated – or missing entirely.

- The resources, time, and knowledge it takes to build user personas are still significant blockers to getting the work done.

In this memo, I’ll show you how to build lean, practical, LLM-ready user personas for SEO – using the data you already have, shaped by real behavioral insights – so your pages are chosen when it counts.

While there are a few ways you could do this, and several really excellent articles out there on SEO personas this past year, this is the approach I take with my clients.

Most legacy persona decks were built for branding, not for search operators.

They don’t tell your writers, SEOs, or PMs what to do next, so they get ignored by your team after they’re created.

Mistake #1: Demographics ≠ Decisions

Classic user personas for SEO and marketing overfocused on demographics, which can give some surface-level insights into stereotypical behavior for certain groups.

But demographics don’t necessarily help your brand stand out against your competitors. And demographics don’t offer you the full picture.

Mistake #2: A Static PDF Or Shared Doc Ages Fast

If your personas were created once and never reanalyzed or updated again, it’s likely they got lost in G: Drive or Dropbox purgatory.

If there’s no owner working to ensure they’re implemented across production, there’s no feedback loop to understand if they’re working or if something needs to change.

Mistake #3: Pretty Delivered Decks, No Actionable Insights

Those well-designed persona deliverables look great, but when they aren’t tied to briefs, citations, trust signals, your content calendar, etc., they end up siloed from production. If a persona can’t shape a prompt or a page, it won’t shape any of your outcomes.

Beyond the fact that classic personas weren’t built to be implemented across your search strategy, AI has shifted us from optimizing for intent to optimizing for identity and trust. In last week’s memo, I shared the following:

The most significant, stand-out finding from that study: People use AI Overviews to get oriented and save time. Then, for any search that involves a transaction or high-stakes decision-making, searchers validate outside Google, usually with trusted brands or authority domains.

Old world of search optimization: Queries signaled intent. You ranked a page that matched the keyword and intent behind it, and your brand would catch the click. Personas were optional.

New world of search optimization: Prompts expose people, and AI changes how we search. Marketers aren’t just optimizing for search intent or demographics; we’re also optimizing for behavior.

Long AI prompts don’t just say what the user intends – they often reveal who is asking and what constraints or background of knowledge they bring.

For example, if a user prompts ChatGPT something like “I’m a healthcare compliance officer at a mid-sized hospital. Can you draft a checklist for evaluating new SaaS vendors, making sure it covers HIPAA regulations and costs under $50K a year,” then ChatGPT would have background information about the user’s general compliance needs, budget ceilings, risk tolerance, and preferred content formats.

AI systems then personalize summaries and citations around that context.

If your content doesn’t meet the persona’s trust requirements or output preference, it won’t be surfaced.

What that means in practice:

- Prompts → identity signals. “As a solo marketer on a $2,000 budget…” or “for EU users under GDPR…” = role, constraints, and risk baked into the query.

- Trust beats length. Classic search results still get clicked, but only when pages show the trust scaffolding a given persona needs for a specific query.

- Format matters. Some personas want TL;DR and tables; others need demos, community validation (YouTube/Reddit), or primary sources.

So, here’s what to do about it.

You don’t need a five or six-figure agency study (although those are nice to have).

You need:

- A collection of your already-existing data.

- A repeatable process, not a static file.

- A way to tie personas directly into briefs and prompts.

Turning your own existing data into usable user personas for SEO will equip you to tie personas directly to content briefs and SEO workflows.

Before you start collecting this data, set up an organized way to store it: Google Sheets, Notion, Airtable – whatever your team prefers. Store your custom persona prompt cards there, too, and you can copy and paste from there into ChatGPT & Co. as needed.

The work below isn’t for the faint of heart, but it will change for the better how you prompt LLMs in your AI-powered workflows and how you build your SEO-focused webpages.

1. Collect and cluster data.

2. Draft persona prompt cards.

3. Calibrate in ChatGPT & Co.

4. Validate with real-world signals.

1. Collect And Cluster Data

You’re going to mine several data sources that you already have, both quantitative and qualitative.

Keep in mind, being sloppy during this step means you will not have a good base for an “LLM-ready” persona prompt card, which I’ll discuss in Step 2.

Attributes to capture for an “LLM-ready persona”:

- Jobs-to-be-done (top 3).

- Role and seniority.

- Buying triggers + blockers (think budget, IT/legal constraints, risk).

- 10-20 example questions at TOFU, MOFU, BOFU stages.

- Trust cues (creators, domains, formats).

- Output preferences (depth, format, tone).

Where AIO validation style data comes in:

Last week, we discussed four distinct AIO intent validations verified within the AIO usability study: Efficiency-first/Trust-driven/Comparative/Skeptical rejection.

If you want to incorporate this in your persona research – and I’d advise that you should – you’re going to look for:

- Hesitation triggers across interactions with your brand: What makes them pause or refine their question (whether on a sales call or a heat map recording).

- Click-out anchors: Which authority brands they use to validate (PayPal, NIH, Mayo Clinic, Stripe, KBB, etc.); use SparkToro to find this information.

- Evidence threshold: What proof ends hesitation for your user or different personas? (Citations, official terminology, dated reviews, side-by-side tables, videos).

- Device/age nuance: Younger and mobile users → faster AIO acceptance; older cohorts → blue links and authority domains win clicks.

Below, I’ll walk you through where to find this information.

Quantitative Inputs

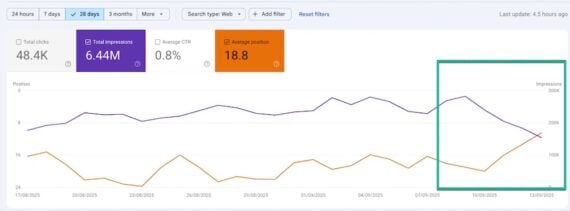

1. Your GSC queries hold a wealth of info. Split by TOFU/MOFU/BOFU, branded vs non-branded, and country. Then, use a regex to map question-style queries and see who’s really searching at each stage.

Below is the regex I like to use, which I discussed in “Is AI cutting into your SEO conversions?” It also works for this task:

(?i)^(who|what|why|how|when|where|which|can|does|is|are|should|guide|tutorial|course|learn|examples?|definition|meaning|checklist|framework|template|tips?|ideas?|best|top|list(?:s)?|comparison|vs|difference|benefits|advantages|alternatives)\b.*
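If you’d rather run this over an exported query list than inside the GSC filter, here is a minimal Python sketch. It assumes you have your queries as plain strings; the `tag_query` helper, the `BRAND_TERMS` tuple, and the sample queries are all hypothetical and only there for illustration.

```python
import re

# The question-style pattern from above, with the word boundary written as \b.
QUESTION_RE = re.compile(
    r"(?i)^(who|what|why|how|when|where|which|can|does|is|are|should|guide|"
    r"tutorial|course|learn|examples?|definition|meaning|checklist|framework|"
    r"template|tips?|ideas?|best|top|list(?:s)?|comparison|vs|difference|"
    r"benefits|advantages|alternatives)\b.*"
)

# Hypothetical brand terms; swap in your own brand and product names.
BRAND_TERMS = ("acme",)


def tag_query(query, brand_terms=BRAND_TERMS):
    """Flag a query as question-style and/or branded."""
    q = query.strip()
    return {
        "query": q,
        "question_style": bool(QUESTION_RE.match(q)),
        "branded": any(term in q.lower() for term in brand_terms),
    }


# Made-up sample queries standing in for a GSC export.
for sample in ["how to build user personas", "acme pricing", "island hotels"]:
    print(tag_query(sample))
```

The word boundary matters: without it, a query like “island hotels” would count as a question because it starts with “is.”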

2. On-Site Search Logs. These are the records of what visitors type into your website’s own search bar (not Google).

Extract exact phrasing of problems and “missing content” signals (like zero results, refined searches, or high exits/no clicks).

Plus, the wording visitors use reveals jobs-to-be-done, constraints, and vocabulary you should mirror on the page. Flag repeat questions as latent questions to resolve.
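As a rough illustration of how you might pull these signals out of a log export, here is a small Python sketch. The row fields (`session_id`, `query`, `results_count`, `clicked`) are assumptions; your site search tool will name them differently, and the sample rows are made up.

```python
from collections import Counter, defaultdict


def normalize(query):
    """Lowercase and collapse whitespace so near-duplicate searches group together."""
    return " ".join(query.lower().split())


def missing_content_signals(rows):
    """Count searches that returned zero results, and searches nobody clicked on."""
    zero_results, no_click = Counter(), Counter()
    for row in rows:
        q = normalize(row["query"])
        if row["results_count"] == 0:
            zero_results[q] += 1
        elif not row["clicked"]:
            no_click[q] += 1
    return zero_results, no_click


def repeat_questions(rows, min_sessions=2):
    """Return searches that show up in at least `min_sessions` different sessions."""
    sessions = defaultdict(set)
    for row in rows:
        sessions[normalize(row["query"])].add(row["session_id"])
    return sorted(q for q, seen in sessions.items() if len(seen) >= min_sessions)


# Hypothetical log rows; field names are assumptions, not a real export format.
rows = [
    {"session_id": "s1", "query": "SSO setup", "results_count": 0, "clicked": False},
    {"session_id": "s2", "query": "sso  setup", "results_count": 0, "clicked": False},
    {"session_id": "s2", "query": "sso setup okta", "results_count": 4, "clicked": True},
    {"session_id": "s3", "query": "export to csv", "results_count": 7, "clicked": False},
    {"session_id": "s4", "query": "Export to CSV", "results_count": 7, "clicked": True},
]

zero_results, no_click = missing_content_signals(rows)
print(zero_results.most_common(5))
print(no_click.most_common(5))
print(repeat_questions(rows))
```

The “sso setup okta” row following “sso setup” in the same session is the kind of refined search worth reading manually; this sketch only counts, it doesn’t interpret.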

3. Support Tickets, CRM Notes, Win/Loss Analysis. Convert objections, blockers, and “how do I…” threads into searchable intents and hesitation themes.

Mine the following data from your records:

- Support: Ticket titles, first message, last agent note, resolution summary.

- CRM: Opportunity notes, metrics, decision criteria, lost-reason text.

- Win/Loss: Objection snapshots, competitor cited, decision drivers, de-risking asks.

- Context (if available): buyer role, segment (SMB/MM/ENT), region, product line, funnel stage.

Once gathered, compile and analyze to distill patterns.

Qualitative Inputs

1. Your sales calls and customer success notes are a wealth of information.

Use AI to analyze transcripts and/or notes to highlight jobs-to-be-done, triggers, blockers, and decision criteria in your customer’s own words.

2. Reddit and social media discussions.

This is where your buyers actually compare options and validate claims; capture the authority anchors (brands/domains) they trust.

3. Community/Slack spaces, email newsletter replies, article comments, short post-purchase or signup surveys.

Mine recurring “stuck points” and vocabulary you should mirror. Bucket recurring themes together and correlate across other data.

Pro tip: Use your topic map as the semantic backbone for all qualitative synthesis – discussed in depth in how to operationalize topic-first SEO. You’d start by locking the parent topics, then layer your personas as lenses: For each parent topic, fan out subtopics by persona, funnel stage, and the “people × problems” you pull from sales calls, CS notes, Reddit/LinkedIn, and community threads. Flag zero-volume/fringe questions on your map as priorities; they deepen authority and often resolve the hesitation themes your notes reveal.

After clustering pain points and recurring queries, you can take it one step further to tag each cluster with an AIO pattern by looking for:

- Short dwell + 0–1 scroll + no refinements → Efficiency-first validations.

- Longer dwell + multiple scrolls + hesitation language + authority click-outs → Trust-driven validations.

- Four to five scrolls + multiple tabs (YouTube/Reddit/vendor) → Comparative validations.

- Minimal AIO engagement + direct authority clicks (gov/medical/finance) → Skeptical rejection.
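To make the four rules above concrete, here is one way they could be sketched as a tagging function in Python. The field names and every numeric threshold (for example, what counts as a “short” dwell) are my assumptions for illustration; the study patterns don’t come with hard cutoffs, so tune them against your own recordings.

```python
from dataclasses import dataclass


@dataclass
class SessionSignals:
    dwell_seconds: float
    scrolls: int
    refinements: int
    tabs_opened: int
    authority_clickouts: int
    hesitation_language: bool
    aio_engaged: bool


def tag_aio_pattern(s, short_dwell_s=15.0):
    """Map observed behavior to one of the four AIO validation patterns.

    Thresholds are assumed: 'short dwell' <= short_dwell_s seconds,
    'multiple' scrolls or tabs >= 2.
    """
    if not s.aio_engaged and s.authority_clickouts > 0:
        return "Skeptical rejection"
    if s.scrolls >= 4 and s.tabs_opened >= 2:
        return "Comparative"
    if (
        s.dwell_seconds > short_dwell_s
        and s.scrolls >= 2
        and s.hesitation_language
        and s.authority_clickouts > 0
    ):
        return "Trust-driven"
    if s.dwell_seconds <= short_dwell_s and s.scrolls <= 1 and s.refinements == 0:
        return "Efficiency-first"
    return "Unclassified"


# Hypothetical session: long dwell, hesitation language, one authority click-out.
example = SessionSignals(
    dwell_seconds=70, scrolls=3, refinements=1, tabs_opened=1,
    authority_clickouts=1, hesitation_language=True, aio_engaged=True,
)
print(tag_aio_pattern(example))
```

Anything that lands in “Unclassified” is a cue to review the recording by hand rather than force a label.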

Not every team can run a full-blown usability study of the search results for targeted queries and topics, but you can infer many of these behavioral patterns through heatmaps of your own pages that have strong organic visibility.

2. Draft Persona Prompt Cards

Next up, you’ll use this data to create a persona card.

A persona card is a one-page, ready-to-go snapshot of a target user segment that your marketing/SEO team can act on.

Unlike empty or demographic-heavy personas, a persona card ties jobs-to-be-done, constraints, questions, and trust cues directly to how you brief pages, structure proofs, and prompt LLMs.

A persona card ensures your pages and prompts match identity + trust requirements.

What you’re going to do in this step is convert each data-based persona cluster into a one-pager designed to be embedded directly into LLM prompts.

Include input patterns you expect from that persona – and the output format they’d likely want.


Reusable Template: Persona Prompt Card

Drop this at the top of a ChatGPT conversation or save as a snippet.

The template below is an example based specifically on the Growth Memo audience, so you’ll need to modify it for your needs and tweak it per persona.

You are Kevin Indig advising a [ROLE, SENIORITY] at a [COMPANY TYPE, SIZE, LOCATION].

Objective: [Top 1–2 goals tied to KPIs and timeline]

Context: [Market, constraints, budget guardrails, compliance/IT notes]

Persona question style: [Example inputs they’d type; tone & jargon tolerance]

Answer format:

- Start with a 3-bullet TL;DR.

- Then give a numbered playbook with 5-7 steps.

- Include 2 proof points (benchmarks/case studies) and 1 calculator/template.

- Flag risks and trade-offs explicitly.

- Keep to [brevity/depth]; [bullets/narrative]; include [table/chart] if useful.

What to avoid: [Banned claims, fluff, vendor speak]

Citations: Prefer [domains/creators] and original research when possible.
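If you store persona attributes in a sheet or database, you could generate the card text instead of retyping it. Below is a minimal Python sketch of that idea. The `PersonaCard` class and its field names are hypothetical, and the sample values are shortened from the filled-in Growth Memo example in the next section.

```python
from dataclasses import dataclass


@dataclass
class PersonaCard:
    advisor: str
    audience: str  # role, seniority, and company type, including the article
    objective: str
    context: str
    question_style: str
    answer_format: list
    avoid: str
    citations: str

    def render(self):
        """Return the card as plain text, ready to paste at the top of a chat."""
        bullets = "\n".join(f"- {line}" for line in self.answer_format)
        return "\n\n".join([
            f"You are {self.advisor} advising {self.audience}.",
            f"Objective: {self.objective}",
            f"Context: {self.context}",
            f"Persona question style: {self.question_style}",
            "Answer format:",
            bullets,
            f"What to avoid: {self.avoid}",
            f"Citations: {self.citations}",
        ])


card = PersonaCard(
    advisor="Kevin Indig",
    audience="an SEO Lead (Senior) at a Mid-Market B2B SaaS (US/EU)",
    objective="Protect and grow organic pipeline in the AI-search era.",
    context="CMS constraints + limited Eng bandwidth; GDPR/CCPA.",
    question_style="Precise, low-fluff, technical.",
    answer_format=[
        "Start with a 3-bullet TL;DR.",
        "Then give a numbered playbook with 5-7 steps.",
        "Flag risks and trade-offs explicitly.",
    ],
    avoid="Vendor-speak; outdated screenshots; unverifiable stats.",
    citations="Prefer Google Search Central/docs, schema.org, and original studies/datasets.",
)
print(card.render())
```

Keeping the attributes as structured fields also makes it easier to update one value (say, the budget guardrail) without touching the rest of the card.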

Example Attribute Sets Using The Growth Memo Audience

Use this card as a starting point, then fill it with your data.

Below is an example of the prompt card with attributes filled for one of the ideal customer profiles (ICP) for the Growth Memo audience.

You are Kevin Indig advising an SEO Lead (Senior) at a Mid-Market B2B SaaS (US/EU).

Objective: Protect and grow organic pipeline in the AI-search era; drive qualified trials/demos in Q4; build durable topic authority.

Context: Competitive category; CMS constraints + limited Eng bandwidth; GDPR/CCPA; security/legal review for pages; budget ≤ $8,000/mo for content + tools; stakeholders: VP Marketing, Content Lead, PMM, RevOps.

Persona question style: “How do I measure topic performance vs keywords?”, “How do I structure entity-based internal linking?”, “What KPIs prove AIO exposure matters?”, “Regex for TOFU/MOFU/BOFU?”, “How to brief comparison pages that AIO cites?” Tone: precise, low-fluff, technical.

AIO validation profile:

- Dominant pattern(s): Trust-driven (primary), Comparative (frameworks/tools); Skeptical for YMYL claims.

- Hesitation triggers: Black-box vendor claims; non-replicable methods; missing citations; unclear risk/effort.

- Click-out anchors: Google Search Central & docs, schema.org, reputable research (Semrush/Ahrefs/SISTRIX/seoClarity), Pew/Ofcom, credible case studies, engineering/product docs.

- SERP feature bias: Skims AIO/snippets to frame, validates via organic authority + primary sources; uses YouTube for demos; largely ignores Ads.

- Evidence threshold: Methodology notes, datasets/replication steps, benchmarks, decision tables, risk trade-offs.

Answer format:

- Start with a three-bullet TL;DR.

- Then give a numbered playbook with 5-7 steps.

- Include 2 proof points (benchmarks/case studies) and 1 calculator/template.

- Flag risks and trade-offs explicitly.

- Keep to brevity + bullets; include a table/chart if useful.

Proof kit to include on-page:

Methodology & data provenance; decision table (framework/tool choice); “best for / not for”; internal-linking map or schema snippet; last-reviewed date; citations to Google docs/primary research; short demo or worksheet (e.g., Topic Coverage Score or KPI tree).

What to avoid:

Vendor-speak; outdated screenshots; cherry-picked wins; unverifiable stats; hand-wavy “AI magic.”

Citations:

Prefer Google Search Central/docs, schema.org, original studies/datasets; reputable tool research (Semrush, Ahrefs, SISTRIX, seoClarity); peer case studies with numbers.

Success signals to watch:

Topic-level lift (impressions/CTR/coverage), assisted conversions from topic clusters, AIO/snippet presence for key topics, authority referrals, demo starts from comparison hubs, reduced content decay, improved crawl/indexation on priority clusters.

3. Calibrate In ChatGPT & Co.

Your goal here is to prove the Persona Prompt Cards actually produce useful answers – and to learn what evidence each persona needs.

Create one Custom Instruction profile per persona, or store each Persona Prompt Card as a prompt snippet you can prepend.

Run 10-15 real queries per persona. Score answers on clarity, scannability, credibility, and differentiation against your standard.

How to run the prompt card calibration:

- Set up: Save one Prompt Card per persona.

- Eval set: 10-15 real queries/persona across TOFU/MOFU/BOFU stages, including two or three YMYL or compliance-based queries, three to four comparisons, and three or four quick how-tos.

- Ask for structure: Require TL;DR → numbered playbook → table → risks → citations (per the card).

- Modify it: Add constraints and location variants; ask the same query two ways to test consistency.
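To keep the scoring consistent across personas and reviewers, a simple log helps. Here is a minimal Python sketch, assuming you score each answer by hand on a 1-5 scale for the four criteria; the scale, the `pass_mark`, and the sample scores are all assumptions for illustration.

```python
from statistics import mean

CRITERIA = ("clarity", "scannability", "credibility", "differentiation")


def summarize(scores, pass_mark=4.0):
    """Average each criterion across answers and list the ones below the pass mark."""
    averages = {c: round(mean(row[c] for row in scores), 2) for c in CRITERIA}
    weak = [c for c in CRITERIA if averages[c] < pass_mark]
    return averages, weak


# Hypothetical hand-scored answers for one persona (1 = poor, 5 = excellent).
scores = [
    {"clarity": 5, "scannability": 4, "credibility": 3, "differentiation": 4},
    {"clarity": 4, "scannability": 5, "credibility": 2, "differentiation": 4},
    {"clarity": 5, "scannability": 4, "credibility": 3, "differentiation": 3},
]

averages, weak = summarize(scores)
print(averages)
print("Needs work:", weak)
```

In this made-up run, credibility is the weakest criterion, which would point toward adding trust anchors or evidence requirements to the card before re-running the eval set.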

Once you run sample queries to check for clarity and credibility, modify or upgrade your Persona Card as needed: Add missing trust anchors or evidence the model needed.

Save winning outputs as reference examples for your briefs that you can paste into drafts.

Log recurring misses (hallucinated stats, undated claims) as acceptance checks for production.

Then, do this for other LLMs that your audience uses. For instance, if your audience leans heavily toward using Perplexity.ai, calibrate your prompt there as well. Make sure to also run the prompt card outputs in Google’s AI Mode.

4. Validate With Real-World Signals

Watch branded search trends, assisted conversions, and non-Google referrals to see if influence shows up where expected when you publish persona-tuned assets.

And make sure to measure lift by topic, not just per page: Segment performance by topic cluster (GSC regex or GA4 topic dimension). “Operationalizing your topic-first SEO strategy” discusses how to do this.
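For a rough sense of what topic-level segmentation looks like outside the GSC interface, here is a minimal Python sketch over exported query rows. The topic names, their regex patterns, and the sample numbers are hypothetical; a query is assigned to the first topic whose pattern matches, which is a simplification.

```python
import re
from collections import defaultdict

# Hypothetical topic clusters; replace with the parent topics from your topic map.
TOPIC_PATTERNS = {
    "ai search": re.compile(r"\b(ai overviews?|aio|ai mode|llm)\b", re.I),
    "personas": re.compile(r"\bpersonas?\b", re.I),
}


def performance_by_topic(rows):
    """Sum clicks and impressions per topic cluster and compute CTR."""
    totals = defaultdict(lambda: {"clicks": 0, "impressions": 0})
    for row in rows:
        topic = next(
            (name for name, pat in TOPIC_PATTERNS.items() if pat.search(row["query"])),
            "other",
        )
        totals[topic]["clicks"] += row["clicks"]
        totals[topic]["impressions"] += row["impressions"]
    return {
        topic: {**t, "ctr": round(t["clicks"] / t["impressions"], 4) if t["impressions"] else 0.0}
        for topic, t in totals.items()
    }


# Made-up rows standing in for a GSC performance export.
rows = [
    {"query": "seo personas template", "clicks": 30, "impressions": 1000},
    {"query": "user persona examples", "clicks": 20, "impressions": 1000},
    {"query": "ai overviews ctr study", "clicks": 10, "impressions": 500},
    {"query": "growth memo newsletter", "clicks": 5, "impressions": 50},
]

for topic, stats in performance_by_topic(rows).items():
    print(topic, stats)
```

To read this as lift, run it on two date ranges (before and after shipping a persona-tuned asset) and compare the per-topic totals.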

Keep the following in mind when reviewing real-world signals:

- Review at 30/60/90 days post-ship, and by topic cluster.

- If Trust-driven pages show high scroll/low conversions → add/upgrade citations and expert reviews and quotes.

- If Comparative pages get CTR but low product/sales demo signups → add a short demo video, “best for / not for” sections, and clearer CTAs.

- If Efficiency-first pages miss lifts in AIO/snippets → tighten TL;DR, simplify tables, add schema.

- If Skeptical-rejection-geared pages yield authority traffic but no lift → consider pursuing authority partnerships.

- Most importantly: Redo the exercise every 60-90 days and compare your new personas against the old ones to iterate toward the ideal.

Building user personas for SEO is worth it, and using in-house data and LLM support makes it doable and fast.

I challenge you to start with one lean persona this week to test this approach. Refine and expand your approach based on the results you see.

But if you plan to take this persona-building project on, avoid these common missteps:

- Creating tidy PDFs with zero long-term benefits: Personas that don’t specify core search intents, pain points, and AIO intent patterns won’t move behavior.

- Trying to win every SERP feature: This is a waste of time. Optimize your content for the surface that fits the dominant behavioral patterns of your target users.

- Ignoring hesitation: Hesitation is your biggest signal. If you don’t resolve it on-page, the click dies elsewhere.

- Demographics over jobs-to-be-done: Focusing on characteristics of identity without incorporating behavioral patterns is the old way.

Featured Image: Paulo Bobita/Search Engine Journal