The Download: the worst technology of 2025, and Sam Altman’s AI hype

This is today’s edition of The Download, our weekday newsletter that provides a daily dose of what’s going on in the world of technology.

The 8 worst technology flops of 2025

Welcome to our annual list of the worst, least successful, and simply dumbest technologies of the year.

We like to think there’s a lesson in every technological misadventure. But when technology becomes dependent on power, sometimes the takeaway is simpler: it would have been better to stay away.

Regrets—2025 had a few. Here are some of the more notable ones.

—Antonio Regalado

A brief history of Sam Altman’s hype

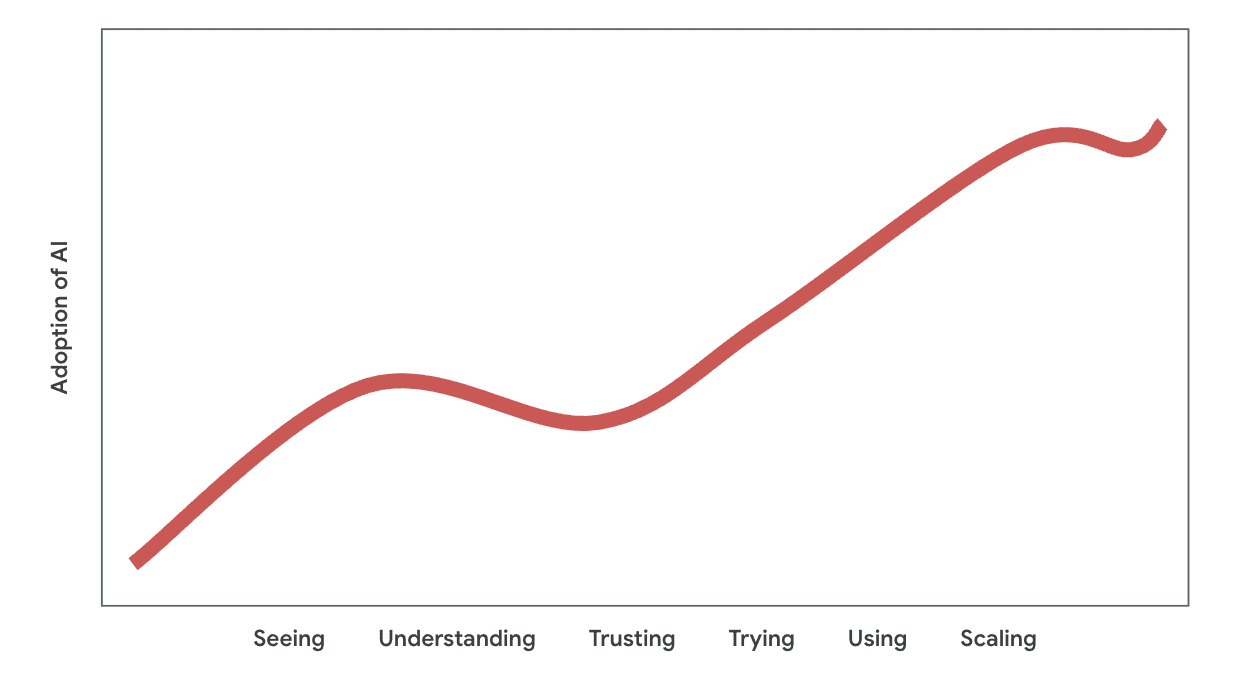

Whenever you’ve heard a borderline outlandish idea of what AI will be capable of, it often turns out that Sam Altman was, if not the first to articulate it, at least the most persuasive and influential voice behind it.

For more than a decade he has been known in Silicon Valley as a world-class fundraiser and persuader. Throughout, Altman’s words have set the agenda. What he says about AI is rarely provable when he says it, but it persuades us of one thing: This road we’re on with AI can go somewhere either great or terrifying, and OpenAI will need epic sums to steer it toward the right destination. In this sense, he is the ultimate hype man.

To understand how his voice has shaped our understanding of what AI can do, we read almost everything he’s ever said about the technology. His own words trace how we arrived here. Read the full story.

—James O’Donnell

This story is part of our new Hype Correction package, a collection of stories designed to help you reset your expectations about what AI makes possible—and what it doesn’t. Check out the rest of the package here.

Can AI really help us discover new materials?

One of my favorite stories in the Hype Correction package comes from my colleague David Rotman, who took a hard look at AI for materials research. AI could transform the process of discovering new materials—innovation that could be especially useful in the world of climate tech, which needs new batteries, semiconductors, magnets, and more.

But the field still needs to prove it can make materials that are actually novel and useful. Can AI really supercharge materials research? And what would that look like? Read the full story.

—Casey Crownhart

This article is from The Spark, MIT Technology Review’s weekly climate newsletter. To receive it in your inbox every Wednesday, sign up here.

The must-reads

I’ve combed the internet to find you today’s most fun/important/scary/fascinating stories about technology.

1 China built a chip-making machine to rival the West’s supremacy

Suggesting China is far closer to achieving semiconductor independence than we previously believed. (Reuters)

+ China’s chip boom is creating a new class of AI-era billionaires. (Insider $)

2 NASA finally has a new boss

It’s billionaire astronaut Jared Isaacman, a close ally of Elon Musk. (Insider $)

+ But will Isaacman lead the US back to the Moon before China? (BBC)

+ Trump previously pulled his nomination, before reselecting Isaacman last month. (The Verge)

3 The parents of a teenage sextortion victim are suing Meta

Murray Dowey took his own life after being tricked into sending intimate pictures to an overseas criminal gang. (The Guardian)

+ It’s believed that the gang is based in West Africa. (BBC)

4 US and Chinese satellites are jostling in orbit

In fact, these clashes are so common that officials have given them a name: “dogfighting.” (WP $)

+ How to fight a war in space (and get away with it) (MIT Technology Review)

5 It’s not just AI that’s trapped in a bubble right now

Labubus, anyone? (Bloomberg $)

+ What even is the AI bubble? (MIT Technology Review)

6 Elon Musk’s Texan school isn’t operating as a school

Instead, it’s a “licensed child care program” with just a handful of enrolled kids. (NYT $)

7 US Border Patrol is building a network of small drones

In a bid to expand its covert surveillance powers. (Wired $)

+ This giant microwave may change the future of war. (MIT Technology Review)

8 This spoon makes low-salt foods taste better

By driving the food’s sodium ions straight to the diner’s tongue. (IEEE Spectrum)

9 AI cannot be trusted to run an office vending machine

Though the lucky Wall Street Journal staffer who walked away with a free PlayStation may beg to differ. (WSJ $)

10 Physicists have 3D-printed a Christmas tree from ice

No refrigeration kit required. (Ars Technica)

Quote of the day

“It will be mentioned less and less in the same way that Microsoft Office isn’t mentioned in job postings anymore.”

—Marc Cenedella, founder and CEO of careers platform Ladders, tells Insider why employers will increasingly expect new hires to be fully au fait with AI.

One more thing

Is this the electric grid of the future?

Lincoln Electric System, a publicly owned utility in Nebraska, is used to weathering severe blizzards. But what will happen soon—not only at Lincoln Electric but for all electric utilities—is a challenge of a different order.

Utilities must keep the lights on in the face of more extreme and more frequent storms and fires, growing risks of cyberattacks and physical disruptions, and a wildly uncertain policy and regulatory landscape. They must keep prices low amid inflationary costs. And they must adapt to an epochal change in how the grid works, as the industry attempts to transition from power generated with fossil fuels to power generated from renewable sources like solar and wind.

The electric grid is bracing for a near future characterized by disruption. And, in many ways, Lincoln Electric is an ideal lens through which to examine what’s coming. Read the full story.

—Andrew Blum

We can still have nice things

A place for comfort, fun and distraction to brighten up your day. (Got any ideas? Drop me a line or skeet ’em at me.)

+ A fragrance company is trying to recapture the scent of extinct flowers, wow.

+ Seattle’s Sauna Festival sounds right up my street.

+ Switzerland has built what’s essentially a theme park dedicated to Saint Bernards.

+ I fear I’ll never get over this tale of director supremo James Cameron giving a drowning rat CPR to save its life.