Happy birthday, baby.

You have been born into an era of intelligent machines. They have watched over you almost since your conception. They let your parents listen in on your tiny heartbeat, track your gestation on an app, and post your sonogram on social media. Well before you were born, you were known to the algorithm.

Your arrival coincided with the 125th anniversary of this magazine. With a bit of luck and the right genes, you might see the next 125 years. How will you and the next generation of machines grow up together? We asked more than a dozen experts to imagine your joint future. We explained that this would be a thought experiment. What I mean is: We asked them to get weird.

Just about all of them agreed on how to frame the past: Computing shrank from giant shared industrial mainframes to personal desktop devices to electronic shrapnel so small it’s ambient in the environment. Previously controlled at arm’s length through punch card, keyboard, or mouse, computing became wearable, moving onto—and very recently into—the body. In our time, eye or brain implants are only for medical aid; in your time, who knows?

In the future, everyone thinks, computers will get smaller and more plentiful still. But the biggest change in your lifetime will be the rise of intelligent agents. Computing will be more responsive, more intimate, less confined to any one platform. It will be less like a tool, and more like a companion. It will learn from you and also be your guide.

What they mean, baby, is that it’s going to be your friend.

Present day to 2034

Age 0 to 10

When you were born, your family surrounded you with “smart” things: rockers, monitors, lamps that play lullabies.

But not a single expert name-checked those as your first exposure to technology. Instead, they mentioned your parents’ phone or smart watch. And why not? As your loved ones cradle you, that deliciously blinky thing is right there. Babies learn by trial and error, by touching objects to see what happens. You tap it; it lights up or makes noise. Fascinating!

Cognitively, you won’t get much out of that interaction between birth and age two, says Jason Yip, an associate professor of digital youth at the University of Washington. But it helps introduce you to a world of animate objects, says Sean Follmer, director of the SHAPE Lab in Stanford’s mechanical engineering department, which explores haptics in robotics and computing. If you touch something, how does it respond?

You are the child of millennials and Gen Z—digital natives, the first influencers. So as you grow, cameras are ubiquitous. You see yourself onscreen and learn to smile or wave to the people on the other side. Your grandparents read to you on FaceTime; you photobomb Zoom meetings. As you get older, you’ll realize that images of yourself are a kind of social currency.

Your primary school will certainly have computers, though we’re not sure how educators will balance real-world and onscreen instruction, a pedagogical debate today. But baby, school is where our experts think you will meet your first intelligent agent, in the form of a tutor or coach. Your AI tutor might guide you through activities that combine physical tasks with augmented-reality instruction—a sort of middle ground.

Some school libraries are becoming more like makerspaces, teaching critical thinking along with building skills, says Nesra Yannier, a faculty member in the Human-Computer Interaction Institute at Carnegie Mellon University. She is developing NoRILLA, an educational system that uses mixed reality—a combination of physical and virtual reality—to teach science and engineering concepts. For example, kids build wood-block structures and predict, with feedback from a cartoon AI gorilla, how they will fall.

Learning will be increasingly self-directed, says Liz Gerber, co-director of the Center for Human-Computer Interaction and Design at Northwestern University. The future classroom is “going to be hyper-personalized.” AI tutors could help with one-on-one instruction or repetitive sports drills.

All of this is pretty novel, so our experts had to guess at future form factors. Maybe while you’re learning, an unobtrusive bracelet or smart watch tracks your performance and then syncs data with a tablet, so your tutor can help you practice.

What will that agent be like? Follmer, who has worked with blind and low-vision students, thinks it might just be a voice. Yannier is partial to an animated character. Gerber thinks a digital avatar could be paired with a physical version, like a stuffed animal—in whatever guise you like. “It’s an imaginary friend,” says Gerber. “You get to decide who it is.”

Not everybody is sold on the AI tutor. In Yip’s research, kids often tell him AI-enabled technologies are … creepy. They feel unpredictable or scary, or they seem to be watching.

Kids learn through social interactions, so he’s also worried about technologies that isolate. And while he thinks AI can handle the cognitive aspects of tutoring, he’s not sure about its social side. Good teachers know how to motivate, how to deal with human moods and biology. Can a machine tell when a child is being sarcastic, or redirect a kid who is goofing off in the bathroom? When confronted with a meltdown, he asks, “is the AI going to know this kid is hungry and needs a snack?”

2040

Age 16

By the time you turn 16, you’ll likely still live in a world shaped by cars: highways, suburbs, climate change. But some parts of car culture may be changing. Electric chargers might be supplanting gas stations. And just as an intelligent agent assisted in your schooling, now one will drive with you—and probably for you.

Paola Meraz, a creative director of interaction design at BMW’s Designworks, describes that agent as “your friend on the road.” William Chergosky, chief designer at Calty Design Research, Toyota’s North American design studio, calls it “exactly like a friend in the car.”

While you are young, Chergosky says, it’s your chaperone, restricting your speed or routing you home at curfew. It tells you when you’re near In-N-Out, knowing your penchant for their animal fries. And because you want to keep up with your friends online and in the real world, the agent can comb your social media feeds to see where they are and suggest a meetup.

Cars have long been spots for teen hangouts, but as driving becomes more autonomous, their interiors can become more like living rooms. (You’ll no longer need to face the road and an instrument panel full of knobs.) Meraz anticipates seats that reposition so passengers can talk face to face, or game. “Imagine playing a game that interacts with the world that you are driving through,” she says, or “a movie that was designed where speed, time of day, and geographical elements could influence the storyline.”

Without an instrument panel, how do you control the car? Today’s minimalist interiors feature a dash-mounted tablet, but digging through endless onscreen menus is not terribly intuitive. The next step is probably gestural or voice control—ideally, through natural language. The tipping point, says Chergosky, will come when instead of giving detailed commands, you can just say: “Man, it is hot in here. Can you make it cooler?”

An agent that listens in and tracks your every move raises some strange questions. Will it change personalities for each driver? (Sure.) Can it keep a secret? (“Dad said he went to Taco Bell, but did he?” jokes Chergosky.) Does it even have to stay in the car?

Our experts say nope. Meraz imagines it being integrated with other kinds of agents—the future versions of Alexa or Google Home. “It’s all connected,” she says. And when your car dies, Chergosky says, the agent does not. “You can actually take the soul of it from vehicle to vehicle. So as you upgrade, it’s not like you cut off that relationship,” he says. “It moves with you. Because it’s grown with you.”

2049

Age 25

By your mid-20s, the agents in your life know an awful lot about you. Maybe they are, indeed, a single entity that follows you across devices and offers help where you need it. At this point, the place where you need the most help is your social life.

Kathryn Coduto, an assistant professor of media science at Boston University who studies online dating, says everyone’s big worry is the opening line. To her, AI could be a disembodied Cyrano that whips up 10 options or workshops your own attempts. Or maybe it’s a dating coach. You agree to meet up with a (real) person online, and “you have the AI in a corner saying ‘Hey, maybe you should say this,’ or ‘Don’t forget this.’ Almost like a little nudge.”

Virtual first dates might solve one of our present-day conundrums: Apps make searching for matches easier, but you get sparse—and perhaps inaccurate—info about those people. How do you know who’s worth meeting in real life? Building virtual dating into the app, Coduto says, could be “an appealing feature for a lot of daters who want to meet people but aren’t sure about a large initial time investment.”

T. Makana Chock, who directs the Extended Reality Lab at Syracuse University, thinks things could go a step further: first dates where both parties send an AI version of themselves in their place. “That would tell both of you that this is working—or this is definitely not going to work,” Chock says. If the date is a dud—well, at least you weren’t on it.

Or maybe you will just date an entirely virtual being, says Sun Joo (Grace) Ahn, who directs the Center for Advanced Computer-Human Ecosystems at the University of Georgia. Or you’ll go to a virtual party, have an amazing time, “and then later on you realize that you were the only real human in that entire room. Everybody else was AI.”

This might sound odd, says Ahn, but “humans are really good at building relationships with nonhuman entities.” It’s why you pour your heart out to your dog—or treat ChatGPT like a therapist.

There is a problem, though, when virtual relationships become too accommodating, says Chock: If you get used to agents that are tailored to please you, you get less skilled at dealing with real people and risking awkwardness or rejection. “You still need to have human interaction,” she says. “And there is some concern that we are going to see some people who are just like, ‘Nope, this is all I want. Why go out and do that when I can stay home with my partner, my virtual buddy?’”

By now, social media, online dating, and livestreaming have likely intertwined and become more immersive. Engineers have shrunk the obstacles to true telepresence: internet lag time, the uncanny valley, and clunky headsets, which may now be replaced by something more like glasses or smart contact lenses.

Online experiences may be less like observing someone else’s life and more like living it. Imagine, says Follmer: A basketball star wears clothing and skin sensors that track body position, motion, and forces, plus super-thin gloves that sense the texture of the ball. You, watching from your couch, wear a jersey and gloves made of smart textiles, woven with actuators that transmit whatever the player feels. When the athlete gets shoved, Follmer says, your fan gear “can really shove you right back.”

Gaming is another obvious application. But it’s not the likely first mover in this space. Nobody else wants to say this on the record, so I will: It’s porn. (Baby, ask your parents and/or AI tutor when you’re older.)

By your 20s, you are probably wrestling with the dilemmas of a life spent online and on camera. Coduto thinks you might rebel, opting out of social media because your parents documented your first 18 years without permission. As an adult, you’ll want tighter rules for privacy and consent, better ways to verify authenticity, and more control over sensitive materials, like a button that could nuke your old sexts.

But maybe it’s the opposite: Now you are an influencer yourself. If so, your body can be your display space. Today, wearables are basically boxes of electronics strapped onto limbs. Tomorrow, hopes Cindy Hsin-Liu Kao, who runs the Hybrid Body Lab at Cornell University, they will be more like your own skin. Kao develops wearables like color-changing eyeshadow stickers and mini nail trackpads that can control a phone or open a car door. In the not-too-distant future, she imagines, “you might be able to rent out each of your fingernails as an ad for social media.” Or maybe your hair: Weaving in super-thin programmable LED strands could make it a kind of screen.

What if those smart lenses could be display spaces too? “That would be really creepy,” she muses. “Just looking into someone’s eyes and it’s, like, CNN.”

2059

Age 35

By now, you’ve probably settled into domestic life—but it might not look much like the home you grew up in. Keith Evan Green, a professor of human-centered design at Cornell, doesn’t think we should imagine a home of the future. “I would call it a room of the future,” he says, because it will be the place for everything—work, school, play. This trend was hastened by the covid pandemic.

Your place will probably be small if you live in a big city. The uncertainties of climate change and transportation costs mean we can’t build cities infinitely outward. So he imagines a reconfigurable architectural robotic space: Walls move, objects inflate or unfold, furniture appears or dissolves into surfaces or recombines. Any necessary computing power is embedded. The home will finally be what Le Corbusier imagined: a machine for living in.

Green pictures this space as spartan but beautiful, like a temple—a place, he says, to think and be. “I would characterize it as this capacious monastic cell that is empty of most things but us,” he says.

Our experts think your home, like your car, will respond to voice or gestural control. But it will make some decisions autonomously, learning by observing you: your motion, location, temperature.

Ivan Poupyrev, CEO and cofounder of Archetype AI, says we’ll no longer control each smart appliance through its own app. Instead, he says, think of the home as a stage and you as the director. “You don’t interact with the air conditioner. You don’t interact with a TV,” he says. “You interact with the home as a total.” Instead of telling the TV to play a specific program, you make high-level demands of the entire space: “Turn on something interesting for me; I’m tired.” Or: “What is the plan for tomorrow?”

Stanford’s Follmer says that just as computing went from industrial to personal to ubiquitous, so will robotics. Your great-grandparents envisioned futuristic homes cared for by a single humanoid robot—like Rosie from The Jetsons. He envisions swarms of maybe 100 bots the size of quarters that materialize to clean, take out the trash, or bring you a cold drink. (“They know ahead of time, even before you do, that you’re thirsty,” he says.)

Baby, perhaps now you have your own baby. The technologies of reproduction have changed since you were born. For one thing, says Gerber, fertility tracking will be way more accurate: “It is going to be like weather prediction.” Maybe, Kao says, flexible fabric-like sensors could be embedded in panty liners to track menstrual health. Or, once the baby arrives, in nipple stickers that nursing parents could apply to track biofluid exchange. If the baby has trouble latching, maybe the sticker’s capacitive touch sensors could help the parent find a better position.

Also, goodbye to sleep deprivation. Gerber envisions a device that, for lack of an existing term, she’s calling a “baby handler”: picture an exoskeleton crossed with a car seat. It’s a late-night soothing machine that rocks, supplies pre-pumped breast milk, and maybe offers a bidet-like “cleaning and drying situation.” For your children, perhaps, this is their first experience of being close to a machine.

2074

Age 50

Now you are at the peak of your career. For professions heading toward AI automation, you may be the “human in the loop” who oversees a machine doing its tasks. The 9-to-5 workday, which is crumbling in our time, might be totally atomized into work-from-home fluidity or earn-as-you-go gig work.

Ahn thinks you might start the workday by lying in bed and checking your messages—on an implanted contact lens. Everyone loves a big screen, and putting it in your eye effectively gives you “the largest monitor in the world,” she says.

You’ve already dabbled with AI selves for dating. But now virtual agents are more photorealistic, and they can mimic your voice and mannerisms. Why not make one go to meetings for you?

Kori Inkpen, who studies human-computer interaction at Microsoft Research, calls this your “ditto”—more formally, an embodied mimetic agent, meaning it represents a specific person. “My ditto looks like me, acts like me, sounds like me, knows sort of what I know,” she says. You can instruct it to raise certain points and recap the conversation for you later. Your colleagues feel as if you were there, and you get the benefit of an exchange that’s not quite real time, but not as asynchronous as email. “A ditto starts to blend this reality,” Inkpen says.

In our time, augmented reality is slowly catching on as a tool for workers whose jobs require physical presence and tangible objects. But experts worry that once the last baby boomers retire, their technical expertise will go with them. Perhaps they can leave behind a legacy of training simulations.

Inkpen sees DIY opportunities. Say your fridge breaks. Instead of calling a repair person, you boot up an AR tutorial on glasses, a tablet, or a projection that overlays digital instructions atop the appliance. Follmer wonders if haptic sensors woven into gloves or clothing would let people training for highly specialized jobs—like surgery—literally feel the hand motions of experienced professionals.

For Poupyrev, the implications are much bigger. One way to think about AI is “as a storage medium,” he says. “It’s a preservation of human knowledge.” A large language model like ChatGPT is basically a compendium of all the text information people have put online. Next, if we feed models not only text but real-world sensor data that describes motion and behavior, “it becomes a very compressed presentation not of just knowledge, but also of how people do things.” AI can capture how to dance, or fix a car, or play ice hockey—all the skills you cannot learn from words alone—and preserve this knowledge for the future.

2099

Age 75

By the time you retire, families may be smaller, with more older people living solo.

Well, sort of. Chaiwoo Lee, a research scientist at the MIT AgeLab, thinks that in 75 years, your home will be a kind of roommate—“someone who cohabitates that space with you,” she says. “It reacts to your feelings, maybe understands you.”

By now, a home’s AI could be so good at deciphering body language that if you’re spending a lot of time on the couch, or seem rushed or irritated, it could try to lighten your mood. “If it’s a conversational agent, it can talk to you,” says Lee. Or it might suggest calling a loved one. “Maybe it changes the ambiance of the home to be more pleasant.”

The home is also collecting your health data, because it’s where you eat, shower, and use the bathroom. Passive data collection has advantages over wearable sensors: You don’t have to remember to put anything on. It doesn’t carry the stigma of sickness or frailty. And in general, Lee says, people don’t start wearing health trackers until they are ill, so they don’t have a comparative baseline. Perhaps it’s better to let the toilet or the mirror do the tracking continuously.

Green says interactive homes could help people with mobility and cognitive challenges live independently for longer. Robotic furnishings could help with lifting, fetching, or cleaning. By this time, they might be sophisticated enough to offer support when you need it and back off when you don’t.

Kao, of course, imagines the robotics embedded in fabric: garments that stiffen around the waist to help you stand, a glove that reinforces your grip.

If getting from point A to point B is becoming difficult, maybe you can travel without going anywhere. Green, who favors a blank-slate room, wonders if you’ll have a brain-machine interface that lets you change your surroundings at will. You think about, say, a jungle, and the wallpaper display morphs. The robotic furniture adjusts its topography. “We want to be able to sit on the boulder or lie down on the hammock,” he says.

Anne Marie Piper, an associate professor of informatics at UC Irvine who studies older adults, imagines something similar—minus the brain chip—in the context of a care home, where spaces could change to evoke special memories, like your honeymoon in Paris. “What if the space transforms into a café for you that has the smells and the music and the ambience, and that is just a really calming place for you to go?” she asks.

Gerber is all for virtual travel: It’s cheaper, faster, and better for the environment than the real thing. But she thinks that for a truly immersive Parisian experience, we’ll need engineers to invent … well, remote bread. Something that lets you chew on a boring-yet-nutritious source of calories while stimulating your senses so you get the crunch, scent, and taste of the perfect baguette.

2149

Age 125

We hope that your final years will not be lonely or painful.

Faraway loved ones can visit by digital double, or send love through smart textiles: Piper imagines a scarf that glows or warms when someone is thinking of you, Kao an on-skin device that simulates the touch of their hand. If you are very ill, you can escape into a soothing virtual world. Judith Amores, a senior researcher at Microsoft Research, is working on VR that responds to physiological signals. Today, she immerses hospital patients in an underwater world of jellyfish that pulse at half of an average person’s heart rate for a calming effect. In the future, she imagines, VR will detect anxiety without requiring a user to wear sensors—maybe by smell.

You might be pondering virtual immortality. Tim Recuber, a sociologist at Smith College and author of The Digital Departed, notes that today people create memorial websites and chatbots, or sign up for post-mortem messaging services. These offer some end-of-life comfort, but they can’t preserve your memory indefinitely. Companies go bust. Websites break. People move on; that’s how mourning works.

What about uploading your consciousness to the cloud? The idea has a fervent fan base, says Recuber. People hope to resurrect themselves into human or robotic bodies, or spend eternity as part of a hive mind or “a beam of laser light that can travel the cosmos.” But he’s skeptical that it’ll work, especially within 125 years. Plus, what if being a ghost in the machine is dreadful? “Embodiment is, as far as we know, a pretty key component to existence. And it might be pretty upsetting to actually be a full version of yourself in a computer,” he says.

There is perhaps one last thing to try. It’s another AI. You curate this one yourself, using a lifetime of digital ephemera: your videos, texts, social media posts. It’s a hologram, and it hangs out with your loved ones to comfort them when you’re gone. Perhaps it even serves as your burial marker. “It is a little cool to think of cemeteries in the future that are literally haunted by motion-activated holograms,” Recuber says.

It won’t exist forever. Nothing does. But by now, maybe the agent is no longer your friend.

Maybe, at last, it is you.

Baby, we have caveats.

We imagine a world that has overcome the worst threats of our time: a creeping climate disaster; a deepening digital divide; our persistent flirtation with nuclear war; the possibility that a pandemic will kill us quickly, that overly convenient lifestyles will kill us slowly, or that intelligent machines will turn out to be too smart.

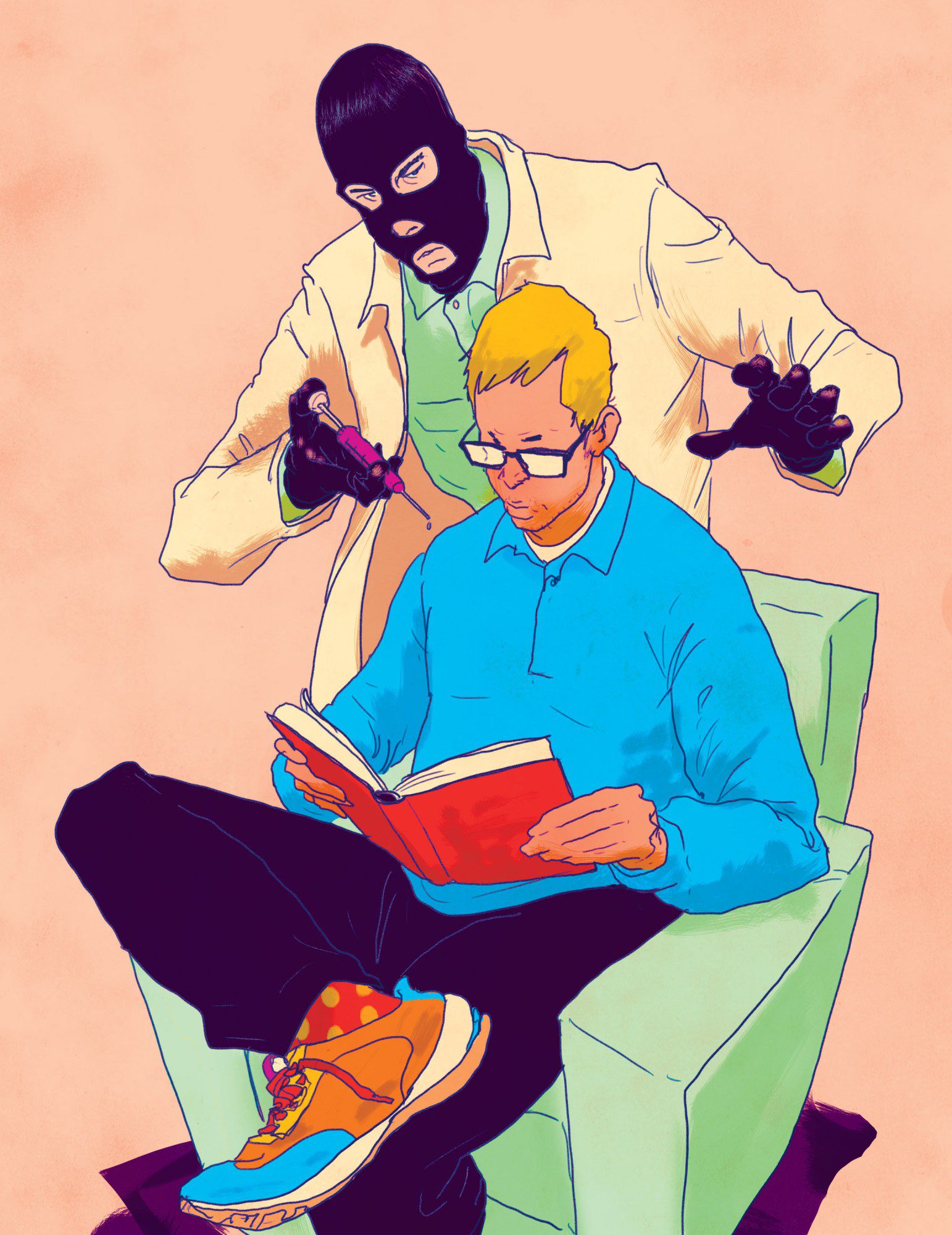

We hope that democracy survives and these technologies will be the opt-in gadgetry of a thriving society, not the surveillance tools of dystopia. If you have a digital twin, we hope it’s not a deepfake.

You might see these sketches from 2024 as a blithe promise, a warning, or a fever dream. The important thing is: Our present is just the starting point for infinite futures.

What happens next, kid, depends on you.

Kara Platoni is a science reporter and editor in Oakland, California.