The PPC ecosystem is about to undergo significant changes driven by regulation.

Regulatory updates such as regional consent requirements, along with Chrome’s deprecation of third-party cookies later this year (see Chrome’s published timeline) and other shifts such as Apple’s App Tracking Transparency (ATT) policy and cross-device customer journeys, are all reducing the amount of observable data available to marketers.

As a result, Google’s ad measurement products, and the ecosystem as a whole, must evolve to meet this moment and be positioned for the next era.

So much change can feel overwhelming, but with a solid plan, you’ll be ready.

When I joined Google in early 2021, it was clear that regulatory and privacy changes and AI advancements would be key focus areas for marketers over the next several years. Fast-forward three years, and we’re now at the inflection point.

In this article, we’ll walk through the key pieces to put in place so your measurement capabilities hold up now and in the years ahead.

Preparation Is Key

AI has been playing a critical role by enabling predictive and analytical capabilities and filling measurement gaps where data is not available.

AI-powered conversion modeling, for example, is essential for maintaining measurement, optimizing campaigns, and improving bidding.

As I wrote last year about GA4, these shifts were a major driver for developing a measurement platform that can account for less observable data from third-party cookies and for more data being aggregated to protect user anonymity.

Many marketers are still deeply reliant on third-party cookies.

As our products have evolved, we’ve added new capabilities designed to help you maintain ad measurement in 2024 and beyond, and there are important actions you should take now to make sure you’re taking advantage of them.

Let’s dive in.

Sitewide Tagging

This may sound basic, but the very first step you should take is to implement sitewide tagging with either the Google Tag or Google Tag Manager.

If you already have tagging set up, double-check that it’s implemented correctly and collecting the data you need to measure conversions. If you’re just getting started, Google Ads Help has a guide to setting up conversion tracking.

To confirm that conversions are being tracked correctly, look at the “Status” column for each of your conversion actions in the summary table (Goals > Conversions > Summary). If you think there may be problems, you can troubleshoot from there; Tag Assistant is also a helpful tool for this.
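Beyond the Status column, a quick scripted pass can catch pages where the tag never made it into a template. The sketch below is a hypothetical helper, not a Google tool: it assumes you have already fetched each page’s HTML, and it only looks for the googletagmanager.com domain plus an ID shaped like G-, AW-, GT-, or GTM- in the page source.

```python
import re

# Simplified, assumed patterns: a Google tag or GTM container ID, plus the
# googletagmanager.com domain that gtag.js and GTM are loaded from.
TAG_ID = re.compile(r"\b(?:G|AW|GT|GTM)-[A-Z0-9]+\b")
LOADER_DOMAIN = "googletagmanager.com"


def has_google_tag(html: str) -> bool:
    """True if the raw HTML appears to load the Google tag or a GTM container."""
    return LOADER_DOMAIN in html and TAG_ID.search(html) is not None


def pages_missing_tag(pages: dict[str, str]) -> list[str]:
    """Return the URLs (sorted) whose HTML does not appear to load a Google tag."""
    return sorted(url for url, html in pages.items() if not has_google_tag(html))


# Hypothetical pages: URL path -> fetched HTML.
pages = {
    "/": '<script async src="https://www.googletagmanager.com/gtag/js?id=G-ABC123"></script>',
    "/checkout": "<html><body>No tag here</body></html>",
    "/thanks": '<iframe src="https://www.googletagmanager.com/ns.html?id=GTM-XYZ9"></iframe>',
}
missing = pages_missing_tag(pages)  # ["/checkout"]
```

Because it only scans the raw HTML, it won’t see tags injected later by other scripts; Tag Assistant remains the better check for what actually fires.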

Once your tagging is implemented fully, you have your measurement foundation in place and can start building on top of it. Which leads me to…

First-party Data

For years, marketers have been discussing the growing importance of first-party data – consented information you have collected directly from your visitors and customers – as a key part of building a durable measurement plan.

Building a first-party data strategy may have felt abstract until now, but with the deprecation of third-party cookies and less observable data, first-party data is what will power your advertising strategy in this new landscape.

Of course, better ads measurement is just one reason to have a first-party data strategy. When thinking about your first-party data plan, it’s important to start with a customer-centric point of view.

What’s the value exchange you’ll be able to deliver for your customers?

It could be early access to new products or services, special discounts, bonus content, loyalty rewards, or other offers that can help you build stronger customer relationships, improve customer lifetime value, and grow your customer base.

Enhanced conversions for leads, covered below, is one way to put first-party data to work.

Additionally, you can connect customer relationship management (CRM) systems and customer data platforms (CDPs) with Google Ads, Google Analytics 4, Campaign Manager 360, and Search Ads 360.

First-party audience lists like Customer Match can help improve audience modeling, expansion, and remarketing. Working with a Customer Match partner can make this process simpler.

Additionally, we introduced Google Ads Data Manager last year to make it much easier to connect and use your first-party data, including Customer Match lists, offline conversions and leads, store sales, and app data.

It’s continuing to roll out and will reach general availability this quarter. You’ll be able to access it in a new “Data manager” section within “Tools” when it becomes available in your account.

When you connect your customer and product data to Google’s advertising and measurement tools, you’ll have a more holistic view of the impact of your advertising.

This is also where AI comes in to enable conversion modeling, predictive targeting, and analytics solutions, even when user-level data isn’t available.

Enhanced Conversions

Enhanced conversions is an increasingly important feature as the privacy landscape evolves.

It can provide a more accurate, aggregated view of how people convert after engaging with your ads, including post-view and cross-device conversions, than is possible with site tagging alone.

Enhanced conversions work by sending hashed, user-provided data (such as email addresses) from your website to Google, where it is matched to signed-in Google accounts. Conversions that follow ads on Google Search and YouTube can then be attributed to those ads in a privacy-safe way.
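To make “hashed, user-provided data” concrete: the value is normalized and run through SHA-256 before it leaves your site, so Google receives a digest rather than the raw email. The Google tag can do the hashing for you; if you hash values yourself, Google’s documentation defines the exact normalization rules for each field, so treat the trim-and-lowercase step below as a simplified assumption.

```python
import hashlib


def normalize_email(email: str) -> str:
    # Simplified normalization (assumption): trim surrounding whitespace and lowercase.
    # Google's documentation lists additional field-specific rules; check them first.
    return email.strip().lower()


def hash_user_data(value: str) -> str:
    # SHA-256 over the UTF-8 bytes, returned as a 64-character hex string.
    return hashlib.sha256(value.encode("utf-8")).hexdigest()


digest = hash_user_data(normalize_email("  Jane.Doe@Example.com "))
# Same digest as hashing "jane.doe@example.com" directly.
```

Normalizing first is what makes matching possible: without it, “Jane.Doe@Example.com” and “jane.doe@example.com” would produce different digests.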

Supplementing your existing conversion tags with more observable data also strengthens conversion modeling, gives you more comprehensive data for measuring conversion lift and the incremental impact of your advertising, and helps better inform Smart Bidding.

There are two flavors of enhanced conversions:

Enhanced Conversions For Web In Google Ads And GA4

Enhanced conversions for web were already available in Google Ads, and we recently rolled out support in GA4 as well.

An advantage of implementing enhanced conversions in GA4 rather than only in Google Ads is that user-provided data can be used for additional purposes (such as demographics and interests, as well as paid and organic measurement).

Wondering if you should set up enhanced conversions in one or both? Here’s some guidance:

- If you are using Google Ads conversion actions, you should use Google Ads enhanced conversions.

- If you’re using GA4 for cross-channel conversion measurement, you should use Google Analytics enhanced conversions.

- If you’re doing both, you can opt to set them both up on the same property. However, be aware of which one you are bidding to and including in the Conversions column so you don’t double count conversions. In other words, make sure your Google Ads conversion tracking setup doesn’t include the same action from both Google Ads and GA4.
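One way to audit for the double-counting risk in the last bullet is to list your conversion actions by source and flag any action that is primary (that is, counted in the Conversions column) from both Google Ads and GA4. The record shape and action names below are hypothetical; the sketch assumes you have already exported your actions and mapped equivalent ones to a common name.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ConversionAction:
    name: str      # common name you assign, e.g. "purchase" (hypothetical)
    source: str    # "google_ads" or "ga4"
    primary: bool  # True if included in the Conversions column


def double_counted(actions: list[ConversionAction]) -> list[str]:
    """Names of actions counted as primary from more than one source."""
    sources: dict[str, set[str]] = defaultdict(set)
    for action in actions:
        if action.primary:
            sources[action.name].add(action.source)
    return sorted(name for name, srcs in sources.items() if len(srcs) > 1)


actions = [
    ConversionAction("purchase", "google_ads", True),
    ConversionAction("purchase", "ga4", True),        # counted twice
    ConversionAction("sign_up", "google_ads", True),
    ConversionAction("sign_up", "ga4", False),        # secondary, so not counted
    ConversionAction("generate_lead", "ga4", True),
]
conflicts = double_counted(actions)  # ["purchase"]
```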

Google’s help documentation covers setting up enhanced conversions in GA4 and Google Ads.

Enhanced Conversions For Leads

If you’re tracking offline conversions, enhanced conversions for leads in Google Ads enables you to upload or import conversion data into Google Ads using first-party customer data from your website lead forms.

If you’re using offline conversion import (OCI) to measure offline leads (e.g., lead gen), we recommend upgrading to enhanced conversions for leads.

Unlike OCI, with enhanced conversions for leads, you don’t need to modify your lead forms or CRM to receive a Google Click ID (GCLID).

Instead, enhanced conversions for leads uses information already captured about your leads – like email addresses – to measure conversions in a way that protects user privacy.
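The key difference from OCI is the join key. The sketch below is a simplified illustration, assuming your CRM stores the email captured on the lead form: the same normalized, SHA-256 hashed email that the tag sent at form submission is what you send later when the lead converts, so no GCLID has to be stored. The dictionary fields and stage names are hypothetical and are not the Google Ads API schema.

```python
import hashlib


def sha256_email(email: str) -> str:
    # Assumed normalization (trim + lowercase) before hashing, matching the lead form.
    return hashlib.sha256(email.strip().lower().encode("utf-8")).hexdigest()


def build_lead_conversions(crm_leads: list[dict], stage: str = "qualified") -> list[dict]:
    """Turn CRM leads that reached `stage` into rows keyed by hashed email."""
    rows = []
    for lead in crm_leads:
        if lead["stage"] == stage:
            rows.append({
                "hashed_email": sha256_email(lead["email"]),  # join key; no GCLID needed
                "conversion_time": lead["stage_time"],
            })
    return rows


# Hypothetical CRM export.
crm = [
    {"email": " pat@example.com", "stage": "qualified", "stage_time": "2024-03-01 10:00:00+00:00"},
    {"email": "sam@example.com", "stage": "new", "stage_time": "2024-03-02 09:30:00+00:00"},
]
rows = build_lead_conversions(crm)
form_hash = sha256_email("Pat@Example.com")  # what the tag hashed at form submission
```

How those rows actually reach Google Ads (tag, Google Tag Manager, or the API) is configured in your account; the sketch only shows why matching on a hashed email works without a click ID.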

It’s also easy to set up with the Google Tag, Google Tag Manager, or the Google Ads API if you want additional flexibility. It can then be configured right from within your Google Ads account.

Google’s help documentation has more on enhanced conversions for leads. Note that there are policy requirements and restrictions for using enhanced conversions.

Consent Mode

The accuracy of conversion measurement can also be improved with consent mode.

Consent choice requirements are part of regulatory changes and evolving privacy expectations (your legal and/or privacy teams can provide further guidance). Consent mode is the mechanism for passing your users’ consent choices to Google.

Consent mode has become especially relevant for advertisers with end-users in the European Economic Area (EEA) and the UK as Google strengthens enforcement of its EU user consent policy in March.

As part of this, consent mode (v2) now includes two new parameters – ad_user_data and ad_personalization – to send consent signals for ad personalization and remarketing purposes to Google.
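On the page, consent mode is set with gtag('consent', 'default', {...}) before tags fire and gtag('consent', 'update', {...}) once the user makes a choice. The Python sketch below only models the mapping step, assuming a hypothetical banner with three choices (analytics, ads, personalization); how your banner categories map to each parameter is an assumption to confirm with your legal or privacy team and your CMP.

```python
def state(allowed: bool) -> str:
    # Consent mode parameters take the string values "granted" or "denied".
    return "granted" if allowed else "denied"


def consent_update(analytics: bool, ads: bool, personalization: bool) -> dict[str, str]:
    """Map hypothetical banner choices to consent mode v2 parameters."""
    return {
        "analytics_storage": state(analytics),
        "ad_storage": state(ads),
        # The two v2 parameters: user data sent for ads, and ad personalization.
        "ad_user_data": state(ads),
        # Assumption: personalization requires both the ads and personalization choices.
        "ad_personalization": state(ads and personalization),
    }


update = consent_update(analytics=True, ads=True, personalization=False)
```

The resulting dictionary is what you would pass as the second argument to the page-side gtag('consent', 'update', ...) call.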

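Google’s help documentation has more details on consent mode v2. The simplest way to implement consent mode is to work with a Google CMP Partner, a consent management platform (CMP) that participates in Google’s CMP Partner Program.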

If you have consent mode implemented but don’t update to v2, you will not have the option to remarket to or personalize ads for these audiences in the future. To retain measurement for these audiences, you should implement consent mode by the end of 2024.

Consent mode also enables conversion tracking when consent is provided and conversion modeling when users don’t consent to ads or analytics cookies.

In Google Ads, once conversion modeling becomes available after you’ve met the thresholds, you’ll be able to view your conversion modeling uplift at the “domain x country” level in the conversion Diagnostics tab.

You may have seen a notification in Google Ads asking you to check your consent settings. This message will appear to all customers globally to alert you to the new Google Services selection in your account and to check your settings.

We recommend all relevant Google services be configured to receive data labeled with consent to maintain campaign performance.

Conversion Modeling

Conversion modeling has long been used in Google’s measurement solutions and is increasingly important with the deprecation of individual identifiers like cookies on the web and device IDs in apps.

Additionally, Google privacy policies prohibit the use of fingerprinting and other tactics that use heuristics to identify and track individual users.

How it works:

Google’s conversion modeling uses AI/machine learning trained on a set of observable data sources – including first-party data; data from platform APIs like Apple’s SKAdNetwork and Chrome’s Privacy Sandbox Attribution Reporting API; and data sets of users similar to those interacting with your ads – to help fill in the gaps when those signals are missing.

Conversions are categorized as “observable” (conversions that can be tied directly to an ad interaction) and “unobservable” (conversions that can’t be directly linked to specific ad interactions).

We then identify an observable group of conversions with similar behaviors and characteristics (again, based on a diverse set of observable data sources noted above) and train the campaign model to arrive at a total number of conversions made by all users who interacted with your ad.
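To build intuition for the “fill in the gaps” idea, here is a deliberately simplified stand-in: it extrapolates the observable conversion rate of a similar segment to the interactions whose conversions can’t be observed. Google’s models are machine-learning models trained on far richer signals; the segments and numbers below are hypothetical.

```python
def estimate_total_conversions(
    observed: dict[str, tuple[int, int]],
    unobservable_interactions: dict[str, int],
) -> float:
    """observed: segment -> (interactions, observed conversions).
    unobservable_interactions: segment -> interactions with no observable outcome.
    Segments with no observed data are skipped rather than guessed."""
    total = 0.0
    for segment, (interactions, conversions) in observed.items():
        rate = conversions / interactions if interactions else 0.0
        total += conversions + rate * unobservable_interactions.get(segment, 0)
    return total


observed = {"mobile": (1000, 30), "desktop": (500, 25)}
unobservable = {"mobile": 400, "desktop": 100}
estimate = estimate_total_conversions(observed, unobservable)
# About 72: 55 observed conversions plus 17 modeled (0.03 * 400 + 0.05 * 100).
```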

To validate model accuracy, we apply the conversion models to a portion of traffic that’s held back.

We then compare modeled and actual observed conversions from this traffic to check that there are no significant discrepancies and ensure our models can correctly quantify the number of conversions that took place on each campaign channel.
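The holdback check can be sketched as a per-channel comparison of modeled and observed conversions on the held-back traffic. The 10% tolerance and the channel names are hypothetical choices for illustration, not Google’s actual thresholds.

```python
import math


def holdback_check(
    modeled: dict[str, float],
    observed: dict[str, float],
    tolerance: float = 0.10,
) -> dict[str, float]:
    """Return channels whose relative error on held-back traffic exceeds tolerance."""
    flagged = {}
    for channel, actual in observed.items():
        predicted = modeled.get(channel, 0.0)
        if actual == 0:
            # No observed conversions: any nonzero prediction counts as a miss.
            error = 0.0 if predicted == 0 else math.inf
        else:
            error = abs(predicted - actual) / actual
        if error > tolerance:
            flagged[channel] = error
    return flagged


# Hypothetical held-back results.
flagged = holdback_check(
    modeled={"search": 105, "youtube": 70},
    observed={"search": 100, "youtube": 50},
)
# Search is within 10% (5% off); YouTube is flagged at 40% off.
```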

This information is also used to tune the models. You can read more about how conversion modeling works here.

You’ll find modeled data in your conversions and cross-device conversions reporting columns.

How To Improve Your Conversion Modeling

This is where everything we’ve discussed so far comes together!

The following steps will help you capture as many “observable” conversions as possible, which provides a more solid foundation for your conversion modeling.

The first step to improving your conversion modeling, no surprise, is to be sure your conversion tracking is set up properly with Google Tag or Google Tag Manager.

Next, implement enhanced conversions for web. For conversions affected by Apple’s Intelligent Tracking Prevention (ITP), advertisers who implement enhanced conversions recover up to 15% additional conversions compared to advertisers who haven’t.

Advertisers who implement enhanced conversions also see a conversion uplift of 17% on YouTube and a 3.5% impact on Search bidding.

Then, consider using consent mode. Again, this is particularly relevant for advertisers in the EEA, UK, and Switzerland (CH), whose measurement is affected by the ePrivacy Directive.

Additionally, for app developers, on-device conversion measurement helps increase the number of observable app install or in-app conversions from your iOS App campaigns in a privacy-centric manner.

Next, consider data-driven attribution. It looks at all of the ad interactions across your account and compares the paths of customers who convert with those of users who don’t, to identify conversion patterns. It identifies the steps in the journey that are more likely to lead to a conversion, and the model then gives more credit to those ad interactions.

Each data-driven model is specific to the individual advertiser. Advertisers who switch to a data-driven attribution model from a non-data-driven one see an average 6% increase in conversions.

That additional conversion data also helps inform Smart Bidding.
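For intuition only, the sketch below scores each channel by how much its presence in a path raises the conversion rate compared with paths that lack it, then splits each conversion across the channels in its path in proportion to those scores. This is a simple counterfactual-style heuristic on hypothetical data; Google’s data-driven attribution model is different and considerably more sophisticated.

```python
from collections import defaultdict


def channel_weights(paths: list[tuple[set[str], bool]]) -> dict[str, float]:
    """Score channels by conversion-rate lift when present vs. absent."""
    channels = set().union(*(touches for touches, _ in paths))
    lifts = {}
    for ch in channels:
        with_ch = [conv for touches, conv in paths if ch in touches]
        without_ch = [conv for touches, conv in paths if ch not in touches]
        rate_with = sum(with_ch) / len(with_ch)
        rate_without = sum(without_ch) / len(without_ch) if without_ch else 0.0
        lifts[ch] = max(rate_with - rate_without, 0.0)  # ignore negative lift
    total = sum(lifts.values())
    if total == 0:
        return {ch: 1 / len(channels) for ch in channels}
    return {ch: lift / total for ch, lift in lifts.items()}


def attribute(paths: list[tuple[set[str], bool]]) -> dict[str, float]:
    """Split each conversion across its path's channels in proportion to weights."""
    weights = channel_weights(paths)
    credit: dict[str, float] = defaultdict(float)
    for touches, converted in paths:
        if not converted:
            continue
        path_weight = sum(weights[ch] for ch in touches)
        for ch in touches:
            # Fall back to an even split if no channel in the path shows lift.
            share = weights[ch] / path_weight if path_weight else 1 / len(touches)
            credit[ch] += share
    return dict(credit)


# Hypothetical paths: (channels touched, converted?).
paths = [
    ({"search", "youtube"}, True),
    ({"search"}, False),
    ({"youtube"}, False),
    ({"search", "youtube"}, True),
    ({"display"}, False),
    ({"search", "display"}, True),
]
credit = attribute(paths)  # three conversions, with most credit going to search
```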

GA4 properties began including paid and organic channel-modeled conversions around the end of July 2021.

Reports such as the Event, Conversions, and Attribution reports and Explorations will include modeled data and automatically attribute conversion events across channels based on a mix of observed data where possible and modeled data where necessary.

Marketing Mix Modeling

With the loss of visible event-level data, many CMOs are also taking a fresh look at aggregated measurement methods such as marketing mix modeling (MMM).

While MMMs aren’t new, they are privacy-friendly and have become increasingly accessible for companies with robust first-party data strategies.
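MMMs work on aggregated spend and outcomes over time rather than on user-level paths, which is why they hold up as event-level data shrinks. Two building blocks commonly used in MMMs are adstock (media effects that carry over into later periods) and a saturation curve (diminishing returns). The sketch below uses a geometric adstock and a Hill curve with hand-picked, hypothetical parameters; a real MMM estimates these from historical data.

```python
def geometric_adstock(spend: list[float], decay: float) -> list[float]:
    """Each period keeps `decay` of the previous period's accumulated effect."""
    carried, out = 0.0, []
    for x in spend:
        carried = x + decay * carried
        out.append(carried)
    return out


def hill_saturation(x: float, half_saturation: float, shape: float) -> float:
    """Diminishing returns: 0 at x=0, 0.5 at x=half_saturation, approaching 1."""
    return x**shape / (x**shape + half_saturation**shape)


def predict_sales(spend, baseline, beta, decay, half_saturation, shape):
    """Toy single-channel MMM: baseline plus a saturated, carried-over media effect."""
    return [
        baseline + beta * hill_saturation(a, half_saturation, shape)
        for a in geometric_adstock(spend, decay)
    ]


# Hypothetical parameters; one burst of spend followed by two quiet periods.
sales = predict_sales([100, 0, 0], baseline=1000, beta=200,
                      decay=0.5, half_saturation=50, shape=1)
# The burst keeps lifting sales after spend stops, fading each period.
```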

This month, we introduced an open-source MMM called Meridian to help advertisers get a more holistic picture across channels.

Because the model is open source, advertisers can choose to use the MMM solution as it is, build on top of it, or use whichever pieces they find most useful.

It’s launching with three primary methodologies to help marketers:

- Get better video measurement by modeling reach and frequency in MMMs.

- Improve lower funnel measurement by accounting for organic search volume.

- Calibrate MMMs for accuracy by integrating incrementality experiments across channels.

Meridian is currently in closed beta, but all eligible non-Meridian MMM users can now review and use any of these three methodologies in their own models.
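As a simplified picture of the third methodology, assume both the MMM and an incrementality experiment give a channel ROI estimate with a standard error. Combining them by precision weighting (the normal-normal Bayesian update) pulls the MMM estimate toward the experiment in proportion to how much more precise the experiment is. Meridian’s documentation describes using experiment results to inform its priors; the code below is only an analogy with hypothetical numbers, not Meridian’s API.

```python
import math


def calibrate_roi(mmm_roi: float, mmm_se: float,
                  exp_roi: float, exp_se: float) -> tuple[float, float]:
    """Precision-weighted combination of two independent ROI estimates."""
    w_mmm = 1 / mmm_se**2  # precision = 1 / variance
    w_exp = 1 / exp_se**2
    roi = (w_mmm * mmm_roi + w_exp * exp_roi) / (w_mmm + w_exp)
    se = math.sqrt(1 / (w_mmm + w_exp))  # combined estimate is tighter than either
    return roi, se


roi, se = calibrate_roi(mmm_roi=2.0, mmm_se=0.5, exp_roi=1.2, exp_se=0.25)
# roi is about 1.36: the experiment's smaller error gives it four times the weight.
```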

Take Action Now

Now is the time to ensure you have an action plan for durable, future-proof, privacy-first measurement.

I know these may sound like a bunch of buzzwords, but the aim is to have a plan that will prepare you for third-party cookie deprecation and can evolve with future changes.

Featured Image: Photon photo/Shutterstock

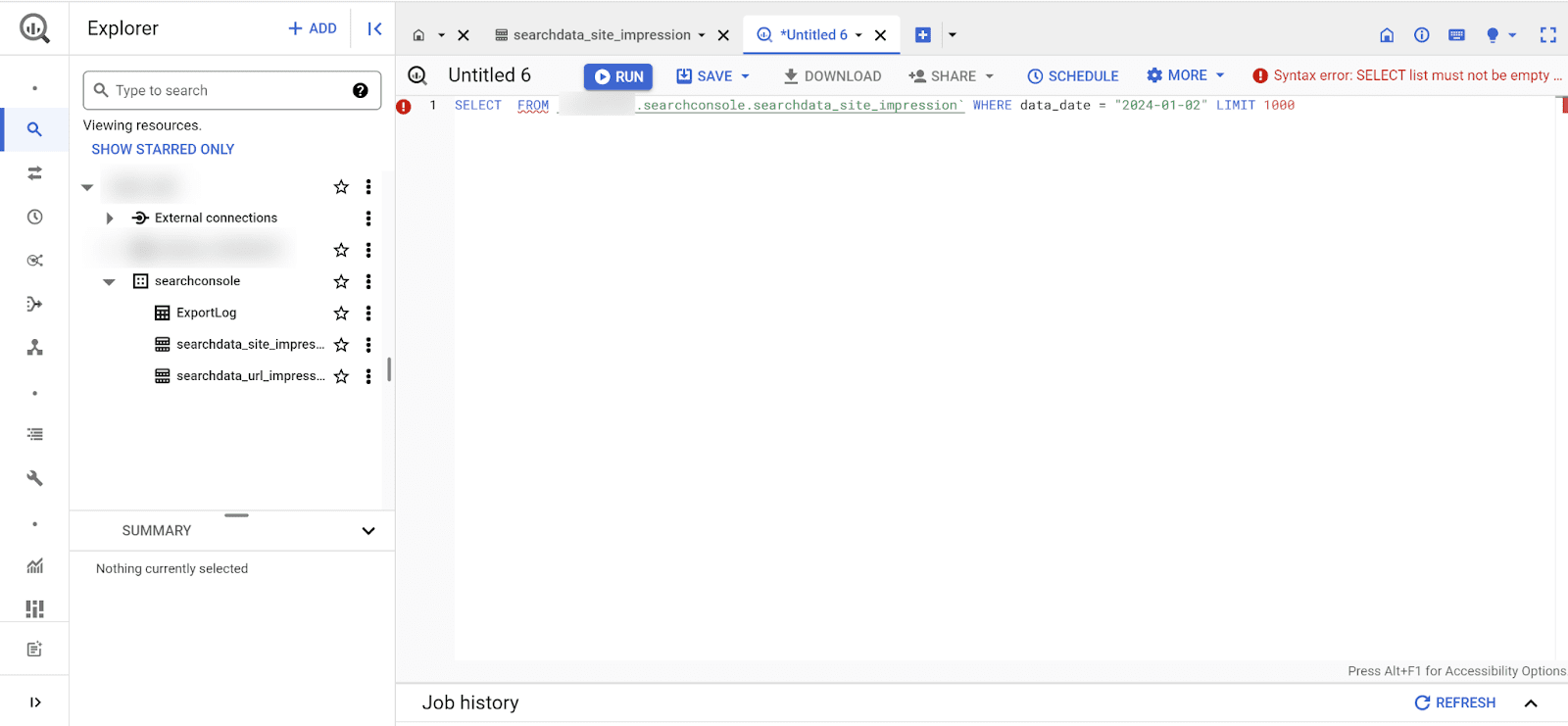

![21 AI Use Cases For Turning Inbound Calls Into Marketing Data [+Prompts]](https://ecommerceedu.com/wp-content/uploads/2024/03/Picture2.png)

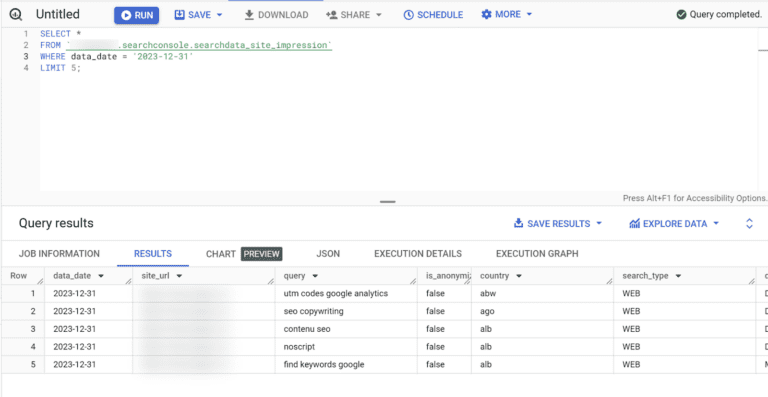

![21 AI Use Cases For Turning Inbound Calls Into Marketing Data [+Prompts]](https://ecommerceedu.com/wp-content/uploads/2024/03/Picture3.png)

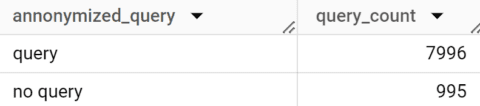

![21 AI Use Cases For Turning Inbound Calls Into Marketing Data [+Prompts]](https://ecommerceedu.com/wp-content/uploads/2024/03/Picture4.png)

![21 AI Use Cases For Turning Inbound Calls Into Marketing Data [+Prompts]](https://ecommerceedu.com/wp-content/uploads/2024/03/Picture5.png)