How AI and Wikipedia have sent vulnerable languages into a doom spiral

When Kenneth Wehr started managing the Greenlandic-language version of Wikipedia four years ago, his first act was to delete almost everything. It had to go, he thought, if it had any chance of surviving.

Wehr, who’s 26, isn’t from Greenland—he grew up in Germany—but he had become obsessed with the island, an autonomous Danish territory, after visiting as a teenager. He’d spent years writing obscure Wikipedia articles in his native tongue on virtually everything to do with it. He even ended up moving to Copenhagen to study Greenlandic, a language spoken by some 57,000 mostly Indigenous Inuit people scattered across dozens of far-flung Arctic villages.

The Greenlandic-language edition was added to Wikipedia around 2003, just a few years after the site launched in English. By the time Wehr took its helm nearly 20 years later, hundreds of Wikipedians had contributed to it and had collectively written some 1,500 articles totaling tens of thousands of words. It seemed to be an impressive vindication of the crowdsourcing approach that has made Wikipedia the go-to source for information online, demonstrating that it could work even in the unlikeliest places.

There was only one problem: The Greenlandic Wikipedia was a mirage.

Virtually every single article had been published by people who did not actually speak the language. Wehr, who now teaches Greenlandic in Denmark, speculates that perhaps only one or two Greenlanders had ever contributed. But what worried him most was something else: Over time, he had noticed that a growing number of articles appeared to be copy-pasted into Wikipedia by people using machine translators. They were riddled with elementary mistakes—from grammatical blunders to meaningless words to more significant inaccuracies, like an entry that claimed Canada had only 41 inhabitants. Other pages sometimes contained random strings of letters spat out by machines that were unable to find suitable Greenlandic words to express themselves.

“It might have looked Greenlandic to [the authors], but they had no way of knowing,” complains Wehr.

“Sentences wouldn’t make sense at all, or they would have obvious errors,” he adds. “AI translators are really bad at Greenlandic.”

What Wehr describes is not unique to the Greenlandic edition.

Wikipedia is the most ambitious multilingual project after the Bible: There are editions in over 340 languages, and a further 400 even more obscure ones are being developed and tested. Many of these smaller editions have been swamped with automatically translated content as AI has become increasingly accessible. Volunteers working on four African languages, for instance, estimated to MIT Technology Review that between 40% and 60% of articles in their Wikipedia editions were uncorrected machine translations. And after auditing the Wikipedia edition in Inuktitut, an Indigenous language close to Greenlandic that’s spoken in Canada, MIT Technology Review estimates that more than two-thirds of pages longer than several sentences feature portions created this way.

This is beginning to cause a wicked problem. AI systems, from Google Translate to ChatGPT, learn to “speak” new languages by scraping huge quantities of text from the internet. Wikipedia is sometimes the largest source of online linguistic data for languages with few speakers—so any errors on those pages, grammatical or otherwise, can poison the wells that AI is expected to draw from. That can make the models’ translation of these languages particularly error-prone, which creates a sort of linguistic doom loop as people continue to add more and more poorly translated Wikipedia pages using those tools, and AI models continue to train from poorly translated pages. It’s a complicated problem, but it boils down to a simple concept: Garbage in, garbage out.

“These models are built on raw data,” says Kevin Scannell, a former professor of computer science at Saint Louis University who now builds computer software tailored for endangered languages. “They will try and learn everything about a language from scratch. There is no other input. There are no grammar books. There are no dictionaries. There is nothing other than the text that is inputted.”

There isn’t perfect data on the scale of this problem, particularly because a lot of AI training data is kept confidential and the field continues to evolve rapidly. But back in 2020, Wikipedia was estimated to make up more than half the training data that was fed into AI models translating some languages spoken by millions across Africa, including Malagasy, Yoruba, and Shona. In 2022, a research team from Germany that looked into what data could be obtained by online scraping even found that Wikipedia was the sole easily accessible source of online linguistic data for 27 under-resourced languages.

This could have significant repercussions in cases where Wikipedia is poorly written—potentially pushing the most vulnerable languages on Earth toward the precipice as future generations begin to turn away from them.

“Wikipedia will be reflected in the AI models for these languages,” says Trond Trosterud, a computational linguist at the University of Tromsø in Norway, who has been raising the alarm about the potentially harmful outcomes of badly run Wikipedia editions for years. “I find it hard to imagine it will not have consequences. And, of course, the more dominant position that Wikipedia has, the worse it will be.”

Use responsibly

Automation has been built into Wikipedia since the very earliest days. Bots keep the platform operational: They repair broken links, fix bad formatting, and even correct spelling mistakes. These repetitive and mundane tasks can be automated away with little problem. There is even an army of bots that scurry around generating short articles about rivers, cities, or animals by slotting their names into formulaic phrases. They have generally made the platform better.

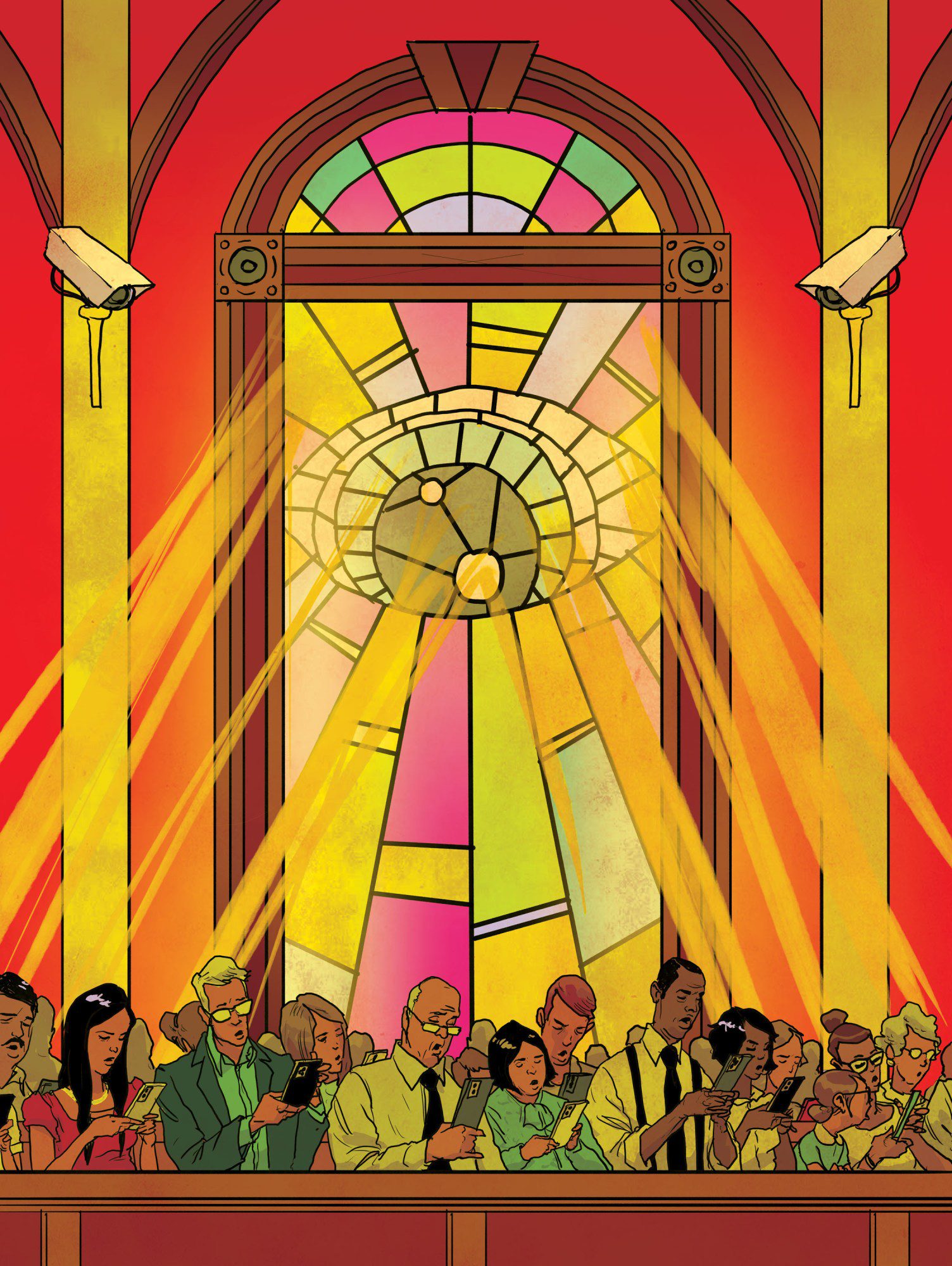

But AI is different. Anybody can use it to cause massive damage with a few clicks.

Wikipedia has managed the onset of the AI era better than many other websites. It has not been flooded with AI bots or disinformation, as social media has been. It largely retains the innocence that characterized the earlier internet age. Wikipedia is open and free for anyone to use, edit, and pull from, and it’s run by the very same community it serves. It is transparent and easy to use. But community-run platforms live and die on the size of their communities. English has triumphed, while Greenlandic has sunk.

“We need good Wikipedians. This is something that people take for granted. It is not magic,” says Amir Aharoni, a member of the volunteer Language Committee, which oversees requests to open or close Wikipedia editions. “If you use machine translation responsibly, it can be efficient and useful. Unfortunately, you cannot trust all people to use it responsibly.”

Trosterud has studied the behavior of users on small Wikipedia editions and says AI has empowered a subset that he terms “Wikipedia hijackers.” These users can range widely—from naive teenagers creating pages about their hometowns or their favorite YouTubers to well-meaning Wikipedians who think that by creating articles in minority languages they are in some way “helping” those communities.

“The problem with them nowadays is that they are armed with Google Translate,” Trosterud says, adding that this is allowing them to produce much longer and more plausible-looking content than they ever could before: “Earlier they were armed only with dictionaries.”

This has effectively industrialized the acts of destruction—which affect vulnerable languages most, since AI translations are typically far less reliable for them. There can be lots of different reasons for this, but a meaningful part of the issue is the relatively small amount of source text that is available online. And sometimes models struggle to identify a language because it is similar to others, or because some, including Greenlandic and most Native American languages, have structures that make them badly suited to the way most machine translation systems work. (Wehr notes that in Greenlandic most words are agglutinative, meaning they are built by attaching prefixes and suffixes to stems. As a result, many words are extremely context specific and can express ideas that in other languages would take a full sentence.)

Research produced by Google before a major expansion of Google Translate rolled out three years ago found that translation systems for lower-resourced languages were generally of a lower quality than those for better-resourced ones. Researchers found, for example, that their model would often mistranslate basic nouns across languages, including the names of animals and colors. (In a statement to MIT Technology Review, Google wrote that it is “committed to meeting a high standard of quality for all 249 languages” it supports “by rigorously testing and improving [its] systems, particularly for languages that may have limited public text resources on the web.”)

Wikipedia itself offers a built-in editing tool called Content Translate, which allows users to automatically translate articles from one language to another—the idea being that this will save time by preserving the references and fiddly formatting of the originals. But it piggybacks on external machine translation systems, so it’s largely plagued by the same weaknesses as other machine translators—a problem that the Wikimedia Foundation says is hard to solve. It’s up to each edition’s community to decide whether this tool is allowed, and some have decided against it. (Notably, English-language Wikipedia has largely banned its use, claiming that some 95% of articles created using Content Translate failed to meet an acceptable standard without significant additional work.) But it’s at least easy to tell when the program has been used; Content Translate adds a tag on the Wikipedia back end.

Other AI programs can be harder to monitor. Still, many Wikipedia editors I spoke with said that once their languages were added to major online translation tools, they noticed a corresponding spike in the frequency with which poor, likely machine-translated pages were created.

Some Wikipedians using AI to translate content do occasionally admit that they do not speak the target languages. They may see themselves as providing smaller communities with rough-cut articles that speakers can then fix—essentially following the same model that has worked well for more active Wikipedia editions.

But once error-filled pages are produced in small languages, there is usually not an army of knowledgeable people who speak those languages standing ready to improve them. There are few readers of these editions, and sometimes not a single regular editor.

Yuet Man Lee, a Canadian teacher in his 20s, says that he used a mix of Google Translate and ChatGPT to translate a handful of articles that he had written for the English Wikipedia into Inuktitut, thinking it’d be nice to pitch in and help a smaller Wikipedia community. He says he added a note to one saying that it was only a rough translation. “I did not think that anybody would notice [the article],” he explains. “If you put something out there on the smaller Wikipedias—most of the time nobody does.”

But at the same time, he says, he still thought “someone might see it and fix it up”—adding that he had wondered whether the Inuktitut translation that the AI systems generated was grammatically correct. Nobody has touched the article since he created it.

Lee, who teaches social sciences in Vancouver and first started editing entries in the English Wikipedia a decade ago, says that users familiar with more active Wikipedias can fall victim to this mindset, which he terms a “bigger-Wikipedia arrogance”: When they try to contribute to smaller Wikipedia editions, they assume that others will come along to fix their mistakes. It can sometimes work. Lee says he had previously contributed several articles to Wikipedia in Tatar, a language spoken by several million people mainly in Russia, and at least one of those was eventually corrected. But the Inuktitut Wikipedia is, by comparison, a “barren wasteland.”

He emphasizes that his intentions had been good: He wanted to add more articles to an Indigenous Canadian Wikipedia. “I am now thinking that it may have been a bad idea. I did not consider that I could be contributing to a recursive loop,” he says. “It was about trying to get content out there, out of curiosity and for fun, without properly thinking about the consequences.”

“Totally, completely no future”

Wikipedia is a project that is driven by wide-eyed optimism. Editing can be a thankless task, involving weeks spent bickering with faceless, pseudonymous people, but devotees put in hours of unpaid labor because of a commitment to a higher cause. It is this commitment that drives many of the regular small-language editors I spoke with. They all feared what would happen if garbage continued to appear on their pages.

Abdulkadir Abdulkadir, a 26-year-old agricultural planner who spoke with me over a crackling phone call from a busy roadside in northern Nigeria, said that he spends three hours every day fiddling with entries in his native Fulfulde, a language used mainly by pastoralists and farmers across the Sahel. “But the work is too much,” he said.

Abdulkadir sees an urgent need for the Fulfulde Wikipedia to work properly. He has been suggesting it as one of the few online resources for farmers in remote villages, potentially offering information on which seeds or crops might work best for their fields in a language they can understand. If you give them a machine-translated article, Abdulkadir told me, then it could “easily harm them,” as the information will probably not be translated correctly into Fulfulde.

Google Translate, for instance, says the Fulfulde word for January means June, while ChatGPT says it’s August or September. The programs also suggest the Fulfulde word for “harvest” means “fever” or “well-being,” among other possibilities.

Abdulkadir said he had recently been forced to correct an article about cowpeas, a foundational cash crop across much of Africa, after discovering that it was largely illegible.

If someone wants to create pages on the Fulfulde Wikipedia, Abdulkadir said, they should be translated manually. Otherwise, “whoever will read your articles will [not] be able to get even basic knowledge,” he tells these Wikipedians. Nevertheless, he estimates that some 60% of articles are still uncorrected machine translations. Abdulkadir told me that unless something important changes with how AI systems learn and are deployed, then the outlook for Fulfulde looks bleak. “It is going to be terrible, honestly,” he said. “Totally, completely no future.”

Across the country from Abdulkadir, Lucy Iwuala contributes to Wikipedia in Igbo, a language spoken by several million people in southeastern Nigeria. “The harm has already been done,” she told me, opening the two most recently created articles. Both had been automatically translated via Wikipedia’s Content Translate and contained so many mistakes that she said it would have given her a headache to continue reading them. “There are some terms that have not even been translated. They are still in English,” she pointed out. She recognized the username that had created the pages as a serial offender. “This one even includes letters that are not used in the Igbo language,” she said.

Iwuala began regularly contributing to Wikipedia three years ago out of concern that Igbo was being displaced by English. It is a worry that is common to many who are active on smaller Wikipedia editions. “This is my culture. This is who I am,” she told me. “That is the essence of it all: to ensure that you are not erased.”

Iwuala, who now works as a professional translator between English and Igbo, said the users doing the most damage are inexperienced and see AI translations as a way to quickly increase the profile of the Igbo Wikipedia. She often finds herself having to explain at online edit-a-thons she organizes, or over email to various error-prone editors, that the results can be the exact opposite, pushing users away: “You will be discouraged and you will no longer want to visit this place. You will just abandon it and go back to the English Wikipedia.”

These fears are echoed by Noah Ha‘alilio Solomon, an assistant professor of Hawaiian language at the University of Hawai‘i. He reports that some 35% of words on some pages in the Hawaiian Wikipedia are incomprehensible. “If this is the Hawaiian that is going to exist online, then it will do more harm than anything else,” he says.

Hawaiian, which was teetering on the verge of extinction several decades ago, has been undergoing a recovery effort led by Indigenous activists and academics. Seeing such poor Hawaiian on such a widely used platform as Wikipedia is upsetting to Ha‘alilio Solomon.

“It is painful, because it reminds us of all the times that our culture and language has been appropriated,” he says. “We have been fighting tooth and nail in an uphill climb for language revitalization. There is nothing easy about that, and this can add extra impediments. People are going to think that this is an accurate representation of the Hawaiian language.”

The consequences of all these Wikipedia errors can quickly become clear. AI translators that have undoubtedly ingested these pages in their training data are now assisting in the production, for instance, of error-strewn AI-generated books aimed at learners of languages as diverse as Inuktitut and Cree, Indigenous languages spoken in Canada, and Manx, a small Celtic language spoken on the Isle of Man. Many of these have been popping up for sale on Amazon. “It was just complete nonsense,” says Richard Compton, a linguist at the University of Quebec in Montreal, of a volume he reviewed that had purported to be an introductory phrasebook for Inuktitut.

Rather than making minority languages more accessible, AI is now creating an ever expanding minefield for students and speakers of those languages to navigate. “It is a slap in the face,” Compton says. He worries that younger generations in Canada, hoping to learn languages in communities that have fought uphill battles against discrimination to pass on their heritage, might turn to online tools such as ChatGPT or phrasebooks on Amazon and simply make matters worse. “It is fraud,” he says.

A race against time

According to UNESCO, a language is declared extinct every two weeks. But whether the Wikimedia Foundation, which runs Wikipedia, has an obligation to the languages used on its platform is an open question. When I spoke to Runa Bhattacharjee, a senior director at the foundation, she said that it was up to the individual communities to make decisions about what content they wanted to exist on their Wikipedia. “Ultimately, the responsibility really lies with the community to see that there is no vandalism or unwanted activity, whether through machine translation or other means,” she said. Usually, Bhattacharjee added, editions were considered for closure only if a specific complaint was raised about them.

But if there is no active community, how can an edition be fixed or even have a complaint raised?

Bhattacharjee explained that the Wikimedia Foundation sees its role in such cases as maintaining the Wikipedia platform in case someone comes along to revive it: “It is the space that we provide for them to grow and develop. That is where we are at.”

Inari Saami, spoken in a single remote community in northern Finland, is a poster child for how people can take good advantage of Wikipedia. The language was headed toward extinction four decades ago; there were only four children who spoke it. Their parents created the Inari Saami Language Association in a last-ditch bid to keep it going. The efforts worked. There are now several hundred speakers, schools that use Inari Saami as a medium of instruction, and 6,400 Wikipedia articles in the language, each one copy-edited by a fluent speaker.

This success highlights how Wikipedia can indeed provide small and determined communities with a unique vehicle to promote their languages’ preservation. “We don’t care about quantity. We care about quality,” says Fabrizio Brecciaroli, a member of the Inari Saami Language Association. “We are planning to use Wikipedia as a repository for the written language. We need to provide tools that can be used by the younger generations. It is important for them to be able to use Inari Saami digitally.”

This has been such a success that Wikipedia has been integrated into the curriculum at the Inari Saami–speaking schools, Brecciaroli adds. He fields phone calls from teachers asking him to write up simple pages on topics from tornadoes to Saami folklore. Wikipedia has even offered a way to introduce words into Inari Saami. “We have to make up new words all the time,” Brecciaroli says. “Young people need them to speak about sports, politics, and video games. If they are unsure how to say something, they now check Wikipedia.”

Wikipedia is a monumental intellectual experiment. What’s happening with Inari Saami suggests that with maximum care, it can work in smaller languages. “The ultimate goal is to make sure that Inari Saami survives,” Brecciaroli says. “It might be a good thing that there isn’t a Google Translate in Inari Saami.”

That may be true—though large language models like ChatGPT can be made to translate phrases into languages that more traditional machine translation tools do not offer. Brecciaroli told me that ChatGPT isn’t great in Inari Saami but that the quality varies significantly depending on what you ask it to do; if you ask it a question in the language, then the answer will be filled with words from Finnish and even words it invents. But if you ask it something in English, Finnish, or Italian and then ask it to reply in Inari Saami, it will perform better.

In light of all this, creating as much high-quality content online as can possibly be written becomes a race against time. “ChatGPT only needs a lot of words,” Brecciaroli says. “If we keep putting good material in, then sooner or later, we will get something out. That is the hope.” This is an idea supported by multiple linguists I spoke with—that it may be possible to end the “garbage in, garbage out” cycle. (OpenAI, which operates ChatGPT, did not respond to a request for comment.)

Still, the overall problem is likely to grow and grow, since many languages are not as lucky as Inari Saami—and their AI translators will most likely be trained on more and more AI slop. Wehr, unfortunately, seems far less optimistic about the future of his beloved Greenlandic.

Since deleting much of the Greenlandic-language Wikipedia, he has spent years trying to recruit speakers to help him revive it. He has appeared in Greenlandic media and made social media appeals. But he hasn’t gotten much of a response; he says it has been demoralizing.

“There is nobody in Greenland who is interested in this, or who wants to contribute,” he says. “There is completely no point in it, and that is why it should be closed.”

Late last year, he began a process requesting that the Wikipedia Language Committee shut down the Greenlandic-language edition. Months of bitter debate followed between dozens of Wikipedia bureaucrats; some seemed to be surprised that a superficially healthy-seeming edition could be gripped by so many problems.

Then, earlier this month, Wehr’s proposal was accepted: Greenlandic Wikipedia is set to be shuttered, and any articles that remain will be moved into the Wikipedia Incubator, where new language editions are tested and built. Among the reasons cited by the Language Committee is the use of AI tools, which have “frequently produced nonsense that could misrepresent the language.”

Nevertheless, it may be too late—mistakes in Greenlandic already seem to have become embedded in machine translators. If you prompt either Google Translate or ChatGPT to do something as simple as count to 10 in proper Greenlandic, neither program can deliver.

Jacob Judah is an investigative journalist based in London.