When Agents Replace Websites via @sejournal, @DuaneForrester

Let’s talk about an agentic future. As task-completing agents move from concept to adoption, their impact on how we discover and transact online will be significant. Websites won’t vanish, but in many cases, their utility will shrink as agents become the new intermediary layer between people and answers. Domains will still exist, but their value as discovery assets is likely to erode. Building and maintaining a site will increasingly mean structuring it for agents to retrieve from, not just for people to browse, and the idea of domains appreciating as scarce assets will feel less connected to how discovery actually happens.

The growth trajectory for AI agents is already visible in market forecasts. Grand View Research valued the global AI agents market at USD 5.40 billion in 2024, with forecasts reaching USD 50.31 billion by 2030 at an annual growth rate of about 45.8%. Regionally, the Asia-Pacific market was USD 1.30 billion in 2024 and is projected to expand to USD 14.15 billion by 2030, with China alone expected to grow from USD 402.6 million to USD 3.98 billion over the same period. Europe is following a similar path, climbing from USD 1.32 billion in 2024 to USD 11.49 billion by 2030. Longer term, Precedence Research projects the global agentic AI market will rise from USD 7.55 billion in 2025 to about USD 199.05 billion by 2034, a compound annual growth rate of 43.84%. These forecasts show a consistent pattern across regions: adoption is accelerating, and the shift toward agentic systems is not theoretical; it is underway. These figures cover task-completing agents, not casual chat use.
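For readers who want to sanity-check the growth rates, here is a minimal Python sketch of the standard compound annual growth rate formula applied to the figures quoted above. The function name and the year counts (6 years for 2024 to 2030, 9 years for 2025 to 2034) are assumptions made for illustration; the research firms may use a different base year, which would explain small differences from their published rates.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.45 means 45% per year)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures from the article, in USD billions. Year spans are assumptions.
grand_view_global = cagr(5.40, 50.31, years=6)   # 2024 -> 2030
precedence_global = cagr(7.55, 199.05, years=9)  # 2025 -> 2034

print(f"Grand View (2024-2030): {grand_view_global:.1%}")
print(f"Precedence (2025-2034): {precedence_global:.1%}")
```

Under these assumptions the Precedence figure comes out very close to the quoted 43.84%, and the Grand View figure lands near 45%, slightly below the quoted 45.8%.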

Image Credit: Duane Forrester

Do We Still Need Websites In An Agentic World?

It’s easy to forget how limited the internet felt in the 1990s. On AOL, you didn’t browse the web the way we think of it today. You navigated keywords. One word dropped you into chat rooms, news channels, or branded content. The open web was technically out there, but for most people, America Online WAS the internet.

That closed-garden model eventually gave way to the open web. Domains became navigation anchors. Owning a clean .com or a trusted extension like .org or .gov signaled legitimacy. Websites evolved into the front doors of digital identity, where brand credibility and consumer trust were built. Search rankings reinforced this. An exact-match domain once boosted visibility, and later the concept of “domain authority” helped indicate who showed up at the top of search results. For nearly three decades, websites have been the central hub of digital discovery and transactions.

But we may be circling back. Only this time, the keyword is no longer “AOL Keyword: Pizza Hut.” It’s your natural-language intent: “Book me a flight,” “Order flowers,” “Find me a dentist nearby.” And instead of AOL, the gatekeepers are LLMs and agentic systems.

From Navigation To Answers

The rise of agentic systems collapses the journey we’ve been used to. Where discovery once meant search, scanning results, clicking a domain, and navigating a site, it now means describing your intent and letting the system do the rest. You don’t need Expedia or United.com if your agent confirms your flight. You don’t need to touch OpenTable’s site if a reservation is placed automatically for tomorrow night. You don’t need to sift through Nike’s catalog if new running shoes just arrive at your door.

In this flow, the answer layer replaces the click, the task layer replaces the browsing session, and the source itself becomes invisible. The consumer no longer cares which site delivered the data or handled the transaction, as long as the result is correct.

Proof In Practice: WeChat

This shift isn’t hypothetical. In China, it’s already happening at scale. WeChat introduced Mini-Programs in 2017 as “apps within an app,” designed so users never need to leave the WeChat environment. By 2024, they had become mainstream: recent reports put the number of WeChat Mini-Programs at between 3.9 million and 4.3 million, with over 900 million monthly active users. Mini-Programs are closer to apps than to AI agents, but the underlying pattern is the same: consumers are adopting layers that complete tasks for them.

In food and beverage and hospitality, over 80% of top chain restaurants now run ordering or take-out flows directly through Mini-Programs, meaning customers never touch a separate website. International brands often prioritize Mini-Programs as their Chinese storefronts instead of building localized websites, since WeChat already handles discovery, product listings, payments, and customer service. Luxury brand LOEWE, for example, launched its 2024 “Crafted World” exhibition in Shanghai entirely via a WeChat Mini-Program, offering ticketing and interactive digital content without requiring users to leave the app.

For many domestic Chinese businesses, this has become the default strategy: their websites exist, if at all, as minimal shells, while the real customer experience lives entirely inside WeChat. For scale, recall that Mini-Programs reach over 900 million monthly active users. ChatGPT currently sees over 800 million users a week. Weekly and monthly counts are not directly comparable, because many of the same people return week after week, but the two audiences are clearly of a similar order of magnitude. An agentic era of direct-to-consumer commerce facilitated by platforms like ChatGPT, WeChat, Claude, Gemini, and Copilot could bring a massive shift in consumer behavior.

Western Parallels

Western platforms are already moving in this direction. Instagram Checkout allows users to buy products directly inside Instagram, without ever visiting a retailer’s website, and Shopify documents this integration. TikTok offers similar flows: its partnership with Shopify enables in-app checkout so the consumer never leaves TikTok. Even services like Uber now function as APIs inside larger ecosystems. You can book a ride from within another app and never open Uber directly.

In each case, the website still exists, but the consumer may never see it. Discovery, consideration, and conversion all happen inside the closed flow.

The AOL Parallel

The resemblance to the mid-1990s is striking. AOL’s big push came in that period, when its “Keyword” model positioned the service as the internet itself. Instead of typing URLs, people entered AOL Keywords and stayed inside AOL’s curated walls. By mid-1996, AOL had roughly 6 million U.S. subscribers doing this, representing about 13% of the nation’s estimated 44 million internet users at the time.

Today, the “keyword” has become your intent. The agent interprets it, makes the decision, and fulfills the request. The outcome is the same: a closed environment where the gateway controls visibility and access. Only this time, it’s powered by LLMs and APIs instead of dial-up modems.

This is not an isolated evolution. There’s mounting evidence that the open web itself is weakening. Google recently stated in a legal filing that “the open web is already in rapid decline … harming publishers who rely on open-web display advertising revenue.” The filing was covered by Search Engine Roundtable.

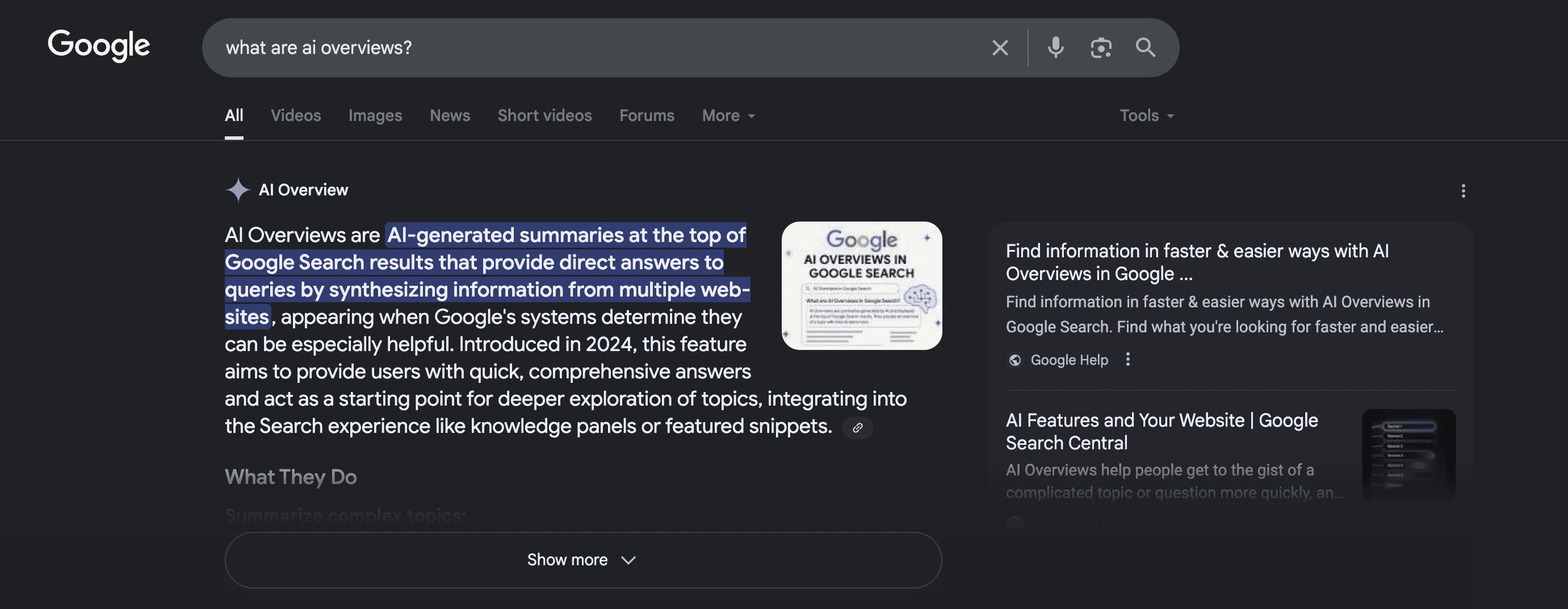

Pew Research found that when Google displays AI-generated summaries in search results, users click links only 8% of the time, compared to 15% when no summary is present. That’s nearly a 50% decline in link clicks. Digital Content Next reported that premium publishers saw a 10% year-over-year drop in referral traffic from Google during a recent eight-week span.
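The "nearly 50%" figure is a relative decline, not a drop in percentage points, and the two are easy to confuse. A small Python sketch of the arithmetic, using the Pew click rates quoted above (the function names are illustrative only):

```python
def percentage_point_drop(before_pct: float, after_pct: float) -> float:
    """Absolute difference between two rates, in percentage points."""
    return before_pct - after_pct


def relative_decline(before_pct: float, after_pct: float) -> float:
    """Decline as a fraction of the starting rate (0.5 means a 50% decline)."""
    if before_pct <= 0:
        raise ValueError("before_pct must be positive")
    return (before_pct - after_pct) / before_pct


# Pew figures from the article: 15% click rate without an AI summary, 8% with one.
points = percentage_point_drop(15, 8)
relative = relative_decline(15, 8)

print(f"Drop: {points:.0f} percentage points")
print(f"Relative decline: {relative:.1%}")
```

The click rate falls by 7 percentage points, which works out to a relative decline of a little under 47%.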

The Guardian covered MailOnline’s specific case, where desktop click-through dropped 56% when AI summaries appeared, and mobile click-through fell 48%. Advertising spend tells a similar story. MarketingProfs reports that professionally produced news content is projected to receive just 51% of global content ad spend in 2025, down from 72% in 2019. Search Engine Land shows that open-web display ads have fallen from about 40% of Google AdWords impressions in 2019 to only 11% by early 2025.

The story is consistent. Consumers click less, publishers earn less, and advertisers move their budgets elsewhere. The open web is unlikely to remain the center of gravity.

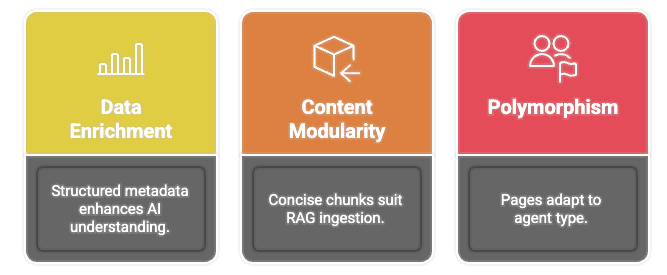

If websites lose their central role, what takes their place? Businesses will still need technical infrastructure, but the front door will change. Instead of polished homepages, structured data and APIs will feed agents directly. Verification layers like schema, certifications, and machine-readable credentials will carry more weight than design. Machine-validated authority (how often your brand is retrieved or cited by LLMs) will become a core measure of trust. And partnerships or API integrations will replace traditional SEO in ensuring visibility.
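To make "structured data" concrete, here is a minimal Python sketch that emits schema.org Product markup as JSON-LD, one widely used machine-readable format. The product name, SKU, and price are hypothetical, and whether a given agent actually reads this markup is an assumption; treat it as an illustration of the idea rather than a required format.

```python
import json


def product_jsonld(name: str, sku: str, price: float, currency: str, in_stock: bool) -> str:
    """Build schema.org Product markup as a JSON-LD string."""
    availability = (
        "https://schema.org/InStock" if in_stock else "https://schema.org/OutOfStock"
    )
    doc = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "sku": sku,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",     # price as a plain decimal string
            "priceCurrency": currency,   # ISO 4217 currency code
            "availability": availability,
        },
    }
    return json.dumps(doc, indent=2)


# Hypothetical product, used only for illustration.
markup = product_jsonld("Example Trail Runner", "EX-TR-001", 129.0, "USD", in_stock=True)
print(markup)
```

On a web page, the resulting string would typically sit inside a `<script type="application/ld+json">` element, so the same facts a shopper sees are also available to machines in a predictable shape.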

This doesn’t mean websites vanish. They’ll remain important for compliance, long-form storytelling, and niches where users still seek a direct experience. But for mainstream interactions, the website is being demoted to plumbing.

And while design and user experience may lose ground to agentic flows, content itself remains critical. Agents still need to be fed with high-quality text, structured product data, verified facts, and fresh signals of authority. Video will grow in importance as agents surface summaries and clips in conversational answers. First-party user-generated content, especially reviews, will carry more weight as a trust signal. Product data, such as clean specs, accurate availability, and transparent pricing, will be non-negotiable inputs to agent systems.

In other words, the work of SEO isn’t disappearing. Technical SEO remains the plumbing that ensures content is discoverable and accessible to machines. Content creation continues to matter, both because it fuels agent responses and because humans still consume it when they step beyond the agent flow. The shift is less about content’s relevance and more about where and how it gets consumed. Web design and UX work, however, will inevitably come under scrutiny as optional costs as the agent interface takes over consumer experiences.

One consequence of this shift is that brands risk losing their direct line to the customer. When an agent books the flight, orders the shoes, or schedules the dentist, the consumer’s loyalty may end up with the agent itself, not the underlying business. Just as Amazon’s marketplace turned many sellers into interchangeable storefronts beneath the Amazon brand, agentic systems may flatten brand differentiation unless companies build distinctive signals that survive mediation. That could mean doubling down on structured trust markers, recognizable product data, or even unique content assets that agents consistently retrieve. Without those, the relationship belongs to the agent, not you.

That potential demotion for websites carries consequences. Domains will still matter for branding, offline campaigns, and human recall, but their value as entry points to discovery is shrinking. The secondary market for “premium” domains is already showing signs of stress. Registries have begun cutting or eliminating premium tiers; .art, for example, recently removed over a million names from its premium list to reprice them downward. Investor commentary also points to weaker demand, with TechStartups noting in 2025 that domain sales are “crashing” as AI and shifting search behaviors reduce the perceived need for expensive keyword names.

We’ve seen this arc before. Families once paid hundreds of dollars for full sets of printed encyclopedias. Owning Britannica on your shelf was a marker of credibility and access to knowledge. Today, those same volumes can be found in thrift stores for pennies, eclipsed by digital access that made the scarcity meaningless. Domains are on a similar path. They will remain useful for identity and branding, but the assumption that a keyword .com will keep appreciating looks more like nostalgia than strategy.

Defensive portfolios across dozens of ccTLDs will be harder to justify, just as stocking encyclopedias became pointless once Wikipedia existed. Websites will remain as infrastructure, but their role as front doors will continue to shrink.

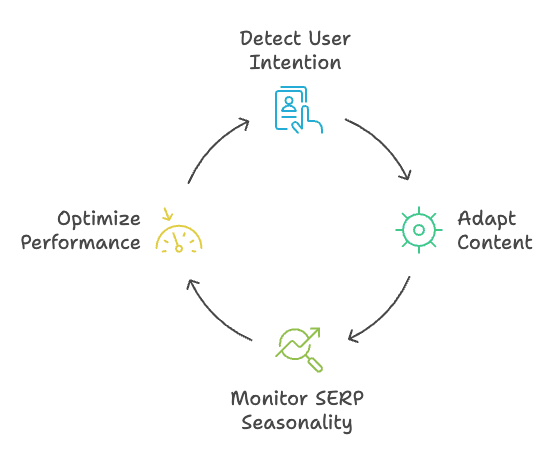

Marketing strategies must adapt. The focus will move from polishing landing pages to ensuring your data is retrievable, your brand is trusted by agents, and your authority is machine-validated. SEO, as we know it, will transform from competing for SERP rankings to competing for retrieval and integration into agent responses.

Another underappreciated consequence of all this is measurement. For decades, marketers have relied on web analytics: page views, bounce rates, conversions. Agentic systems obscure that visibility. If a customer never lands on your site but still books through an agent, you may gain the revenue but lose the data trail. New metrics will be needed. Not just whether a page ranks, but whether your content was retrieved, cited, or trusted inside agent flows. In that sense, the industry will need to redefine what “traffic” and “conversion” even mean when the interface is a conversation rather than a website.
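What such metrics might look like is still an open question. As one possible sketch, the Python below computes a retrieval rate and a citation rate for a domain from a log of agent answers. The log format, the field names, and the sample domains are all hypothetical; no standard for this kind of reporting exists today, and agent platforms may never expose data in this shape.

```python
from dataclasses import dataclass, field


@dataclass
class AgentAnswer:
    """One logged agent response (hypothetical log format)."""
    query: str
    retrieved: set[str] = field(default_factory=set)  # domains the agent fetched
    cited: set[str] = field(default_factory=set)      # domains credited in the answer


def visibility_metrics(answers: list[AgentAnswer], domain: str) -> dict[str, float]:
    """Share of answers in which `domain` was retrieved, and in which it was cited."""
    total = len(answers)
    if total == 0:
        return {"retrieval_rate": 0.0, "citation_rate": 0.0}
    retrieved = sum(1 for a in answers if domain in a.retrieved)
    cited = sum(1 for a in answers if domain in a.cited)
    return {"retrieval_rate": retrieved / total, "citation_rate": cited / total}


# Hypothetical sample log, for illustration only.
log = [
    AgentAnswer("order flowers", {"example-florist.com", "other.example"}, {"example-florist.com"}),
    AgentAnswer("send a bouquet", {"example-florist.com"}, set()),
    AgentAnswer("book a flight", {"other.example"}, {"other.example"}),
    AgentAnswer("find a dentist nearby", set(), set()),
]

metrics = visibility_metrics(log, "example-florist.com")
print(metrics)
```

In this sample, the domain is retrieved in half of the answers but cited in only a quarter, which is the kind of gap between being read and being credited that page views and bounce rates cannot show.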

The Fear And The Possibility

The fear is obvious. We’ve been here before with AOL. A closed gateway can dominate visibility, commoditize brands, and reduce consumer choice. The open web and search engines broke us out of that in the late 1990s. No one wants to return to those walls.

But the possibility is also real. Businesses that adapt to agentic discovery (with structured signals, trusted data feeds, and machine-recognized authority) can thrive. The website may become plumbing, but plumbing matters. It carries the flow and information that powers the experience.

So the real question isn’t whether websites will still exist. Ultimately, they will, in some format. The question is whether your business is still focused on decorating the door, or whether you’re investing in the pipes that agents actually use to deliver value.

This post was originally published on Duane Forrester Decodes.

Featured Image: Collagery/Shutterstock